Kernels and support vector machines

Contents

Kernels and support vector machines#

The problem only depends on \(x_i\cdot x_{i'}\)#

As with the maximal margin classifier, the problem can be reduced to finding \(\alpha_1,\dots,\alpha_n\):

As for maximal margin classifier, this only depends on the training sample inputs through the inner products \(x_i\cdot x_j\) for every pair \(i,j\).

Why care?

Key fact about the support vector classifier#

To find the hyperplane all we need to know is the dot product between any pair of input vectors:

K_f(x_i, x_j) = \langle f(x_i), f(x_j) \rangle, \qquad K_f(x, x_i) = \langle f(x), f(x_i) \rangle $$

How to create non-linear boundaries?#

The support vector classifier can only produce a linear boundary.

How to create non-linear boundaries?#

In logistic regression, we dealt with this problem by adding transformations of the predictors.

The original decision boundary is a hyperplane: $\(\log \left[ \frac{P(Y=1|X)}{P(Y=0|X)} \right] = \beta_0 + \beta_1 X_1 + \beta_2 X_2 = 0.\)$

With a quadratic predictor, we get a quadratic boundary: $\(\log \left[ \frac{P(Y=1|X)}{P(Y=0|X)} \right] = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_1^2= 0.\)$

With a support vector classifier we can apply a similar trick.

The original decision boundary is the hyperplane defined by: $\( \beta_0 + \beta_1 X_1 + \beta_2 X_2 = 0.\)$

If we expand the set of predictors to the 3D space \((X_1,X_2,X_1^2)\), the decision boundary becomes: $\( \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_1^2= 0.\)$

This is in fact a linear boundary in the 3D space. However, we can classify a point knowing just \((X_1,X_2)\). The boundary in this projection is quadratic in \(X_1\).

Use polynomials!#

Idea: Add polynomial terms up to degree \(d\): $\(Z = (X_1, X_1^2, \dots, X_1^d, X_2, X_2^2, \dots, X_2^d, \dots, X_p, X_p^2, \dots, X_p^d).\)$

Does this make the computation more expensive?

Recall that all we need to compute is the dot product: $\(x_i \cdot x_{k} = \langle x_i,x_k\rangle = \sum_{j=1}^p x_{ij}x_{kj}.\)$

With the expanded set of predictors, we need: $\(z_i \cdot z_{k} = \langle z_i,z_k\rangle = \sum_{j=1}^p\sum_{\ell=1}^d x^\ell_{ij}x^\ell_{kj}.\)$

Modulo computing these dot products, the order of computations is the same as with linear features. Not quite true for logistic regression as described above.

Kernels and the kernel trick#

The kernel matrix defined by \(K(x_i,x_k) = \langle z_i, z_k \rangle\) for a set of linearly independent vectors \(z_1,\dots,z_n\) is always symmetric and positive semi-definite, i.e. has no negative eigenvalues.

Theorem: If \(K\) is a positive definite \(n\times n\) matrix, there exist vectors \((z_1,\dots,z_n)\) in some space \(\mathbf{Z}\), such that \(K(x_i,x_k) = \langle z_i,z_k\rangle\).

We just need \(\mathbf{K}_{ij}=K(x_i,x_j)\) to classify all of our learning examples, but to classify we need to know \(x \mapsto K(x, x_i)\).

The kernel \(K:\mathbb{R}^p \times \mathbb{R}^p \rightarrow \mathbb{R}\) should be such that for any choice of \(\{x_1, \dots, x_n\}\) the matrix \(\mathbf{K}\) is positive semidefinite.

Such kernel functions are associated to reproducing kernel Hilbert spaces (RKHS).

Support vector classifiers using this kernel trick are support vector machines…

With a kernel function \(K\), the problem can be reduced to the optimization:

This is the dual problem of a different optimization problem than we started with.

Predictions can be computed similarly to original kernel \(K(x,y)=x \cdot y\). (Details omitted.)

Kernels: implicit basis expansion#

Using basis expansions:#

Find a mapping \(\Phi\) which expands the original set of predictors \(X_1,\dots,X_p\). For example, $\(\Phi(X) = (X_1,X_2,X_1^2)\)$

For each pair of samples, compute: $\(\mathbf{K}_{ik} = \langle\Phi(x_i),\Phi(x_k)\rangle.\)$

Predictions use \(\langle\Phi(x), \Phi(x_i) \rangle, 1 \leq i \leq n\).

Using a kernel#

Prove that a function \(R(\cdot,\cdot)\) is positive definite (there are a lot of known examples). For example: radial basis (Gaussian) kernel $\(R(x_i,x_k) = e^{-\frac{\gamma}{2}\| x_i-x_k\|^2_2}.\)$

For each pair of samples, compute: $\(\mathbf{K}_{ik} = R(x_i,x_k).\)$

Often much easier!

Predictions use \(R(x, x_i), 1 \leq i \leq n\).

A polynomial kernel with \(d=2\):#

This is equivalent to the expansion:

For a single \(x\), computing \(K(x,x_k)\) directly is \(O(p)\).

For a single \(x\) computing the kernel using the expansion is \(O(p^2)\).

Finding kernel functions#

Proving that a symmetric function \(R(\cdot,\cdot)\) is positive definite (PD) is not always easy.

However, we can easily define PD kernels by combining those we are familiar with:

Sums, scalings \((>0)\), and products of PD kernels are PD.

Intuitively, a kernel \(K(x_i,x_k)\) defines a similarity between the samples \(x_i\) and \(x_k\).

This intuition can guide our choice in different problems.

Left: polynomial degree=3, Right: radial basis

Common kernels#

The polynomial kernel:

The radial basis kernel:

Summary so far#

As noted above support vector machine is a support vector classifier applied on an expanded set of predictors, e.g. $\(\Phi: (X_1,X_2) \to (X_1,X_2,X_1X_2, X_1^2,X_2^2).\)$

We expand the vector of predictors for each sample \(x_i\) and then perform the algorithm.

We only need to know the dot products:

for every pair of samples \((x_i,x_k)\) as well as how to compute \(\langle \Phi(x), \Phi(x_i) \rangle\) for new \(x\)’s.

Often, the dot product:

is a simple function \(f(x_i,x_k)\) of the original vectors. Even if the mapping \(\Phi\) significantly expands the space of predictors.

Examples#

Example 1: Polynomial kernel

- \[K(x_i,x_k) = (1+ \langle x_i,x_k \rangle)^2.\]

With two predictors, this corresponds to the mapping:

\[\Phi: (X_1,X_2) \to (\sqrt{2}X_1,\sqrt{2}X_2,\sqrt{2}X_1X_2, X_1^2,X_2^2).\]Example 2: RBF kernel

\(K(x_i,x_k) = \exp(-\gamma d( x_i,x_k )^2)\) where \(d\) is the Euclidean distance between \(x_i\) and \(x_k\).

In this case, the mapping \(\Phi\) is an expansion into an infinite number of transformations!

We can apply the method even if we don’t know what these transformations explicitly are.

Kernels for non-standard data types#

We can define families of kernels (with tuning parameters), which capture similarity between non-standard kinds of data:

Text, strings

Images

Graphs

Histograms

Sometimes we know the mapping \(\Phi\), but there are algorithms that are fast for computing \(K(x_i,x_k)\) without doing the expansion explicitly.

Other times, the expansion \(\Phi\) is infinite-dimensional or simply not known.

Stringdot kernel#

Suppose we want to compare two strings in a finite alphabet:

Stringdot kernel: For each word \(u\) of length \(p\), we define a feature:

Naive algorithm would require looping over each sequence, for every subsequence \(u\) of length \(p\).

This would be \(O(n^2)\) steps, where \(n\) is the length of the sequences.

Gapweight kernel#

Suppose we want to compare two strings in a finite alphabet: $\(x_1 = ACCTATGCCATA\)\( \)\(x_2 = AGCTAAGCATAC\)$

Gap weight kernel: For each word \(u\) of length \(p\), we define a feature:

with \(0<\lambda\leq 1\).

The number of features can be huge! However, this can be computed in \(\mathcal O(Mp\log n )\) steps where \(M\) is the number of matches.

Applying SVMs with more than 2 classes#

SVMs don’t generalize nicely to the case of more than 2 classes.

Two main approaches:

One vs. one: Construct \(n \choose 2\) SVMs comparing every pair of classes. Apply all SVMs to a test observation and classify to the class that wins the most one-on-one challenges.

One vs. all: For each class \(k\), construct an SVM \(\beta^{(k)}\) coding class \(k\) as \(1\) and all other classes as \(-1\). Assign a test observation to the class \(k^*\), such that the distance from \(x_i\) to the hyperplane defined by \(\beta^{(k^*)}\) is largest (the distance is negative if the sample is misclassified).

Relationship of SVM to logistic regression#

Recall the Lagrange form of the problem.

Set \(D=1/\lambda\) and minimize over \(\epsilon_i\) explicitly.

If \(1 - y_i(\beta+0 + w \cdot x_i) \leq 0\) we can take \(\hat{\epsilon}_i=0\). Otherwise, we take $\(\hat{\epsilon}_i=1 - y_i(\beta_0 + w \cdot x_i).\)$

Or, $\(\hat{\epsilon}_i = \max(1 - y_i(\beta_0+w \cdot x_i), 0).\)$

Plugging this into the objective (and replacing \(w\) with \(\beta\)) yields

This loss is a function of \(y_i(\beta_0 + \sum_{j=1}^p \beta_j x_{ij})\) and a ridge penalty.

Loss for logistic regression is also a function of \(y_i(\beta_0 + \sum_{j=1}^p \beta_j x_{ij})\).

Large \(\lambda\) \(\iff\) small \(D\) \(\iff\) large \(C\).

Comparing the losses#

The kernel trick can be applied beyond SVMs#

Kernels and dot products:

Associated to a positive definite \(R\) is a dot product. For \(x\) in the feature space \(\mathbb{R}^p\), define \(R_x:\mathbb{R}^p \rightarrow \mathbb{R}\) by

The kernel defines a dot product on linear combinations of the \(R_x\)’s for different \(x\)’s:

and hence a length

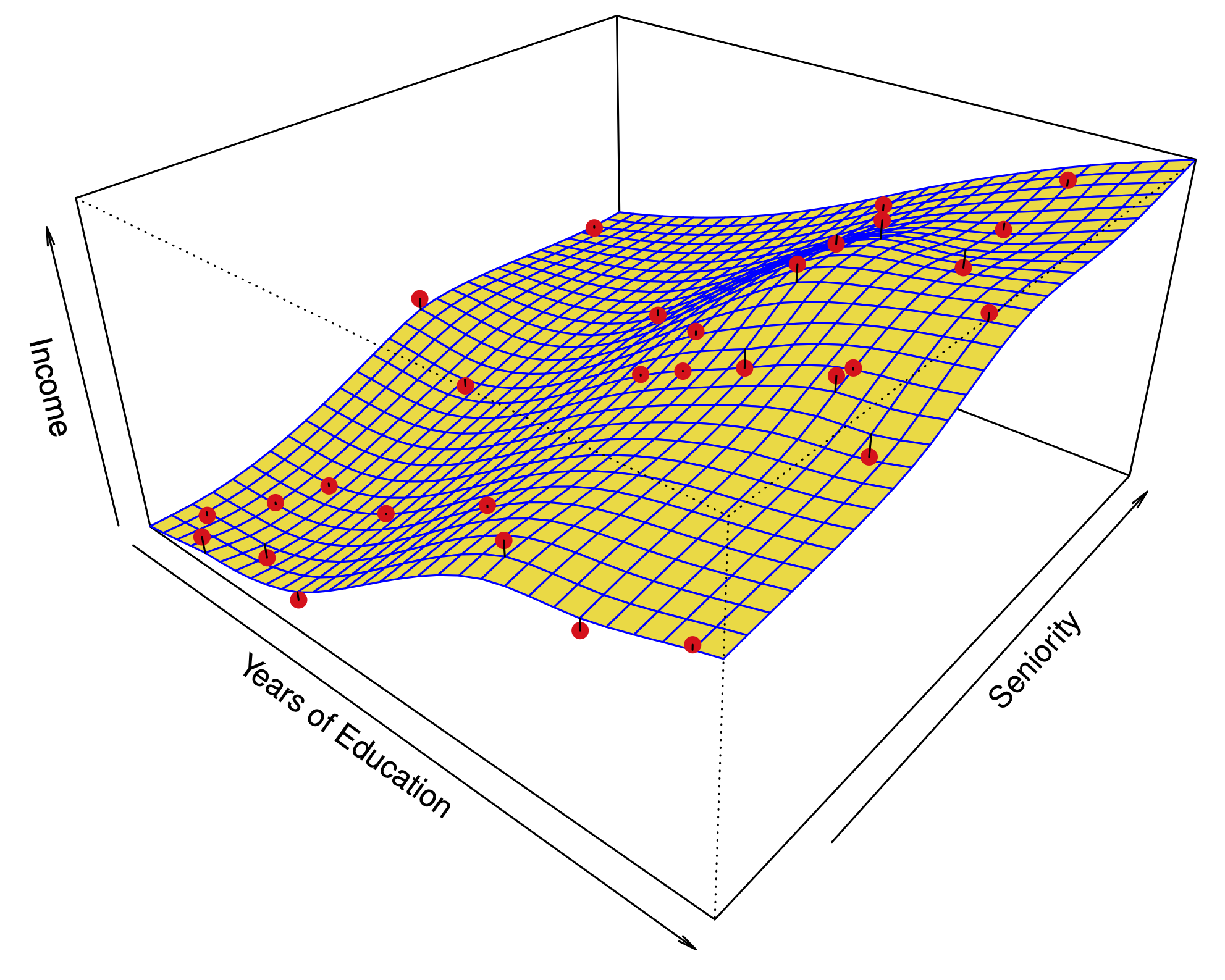

Kernel regression:

For tuning parameter \(\lambda\) define

Remarkably, it is known that

for some \(\hat{\alpha}((X_1,Y_1), \dots, (X_n,Y_n))\).

Problem reduces to finding

Finding \(\hat{\alpha}\) is just like ridge regression!

Similar phenomenon for logistic regression…

Just like smoothing splines, we solved a problem over an big space of functions!

Smoothing splines are a special case of the kernel trick…

Like support vector machines, optimization is over an \(n\)-dimensional vector, not a \(p\)-dimensional vector as in linear regression…

Kernel PCA:

Suppose we want to do PCA with an expanded set of predictors, defined by the mapping \(\Phi\).

First principal component is

Even if \(\Phi\) expands the predictors to a very high dimensional space, we can do PCA!

The cost only depends on the number of observations \(n\).

Long story short: Kernel trick allows transformation of linear methods into nonlinear ones by implicit featurization.