Best subset selection


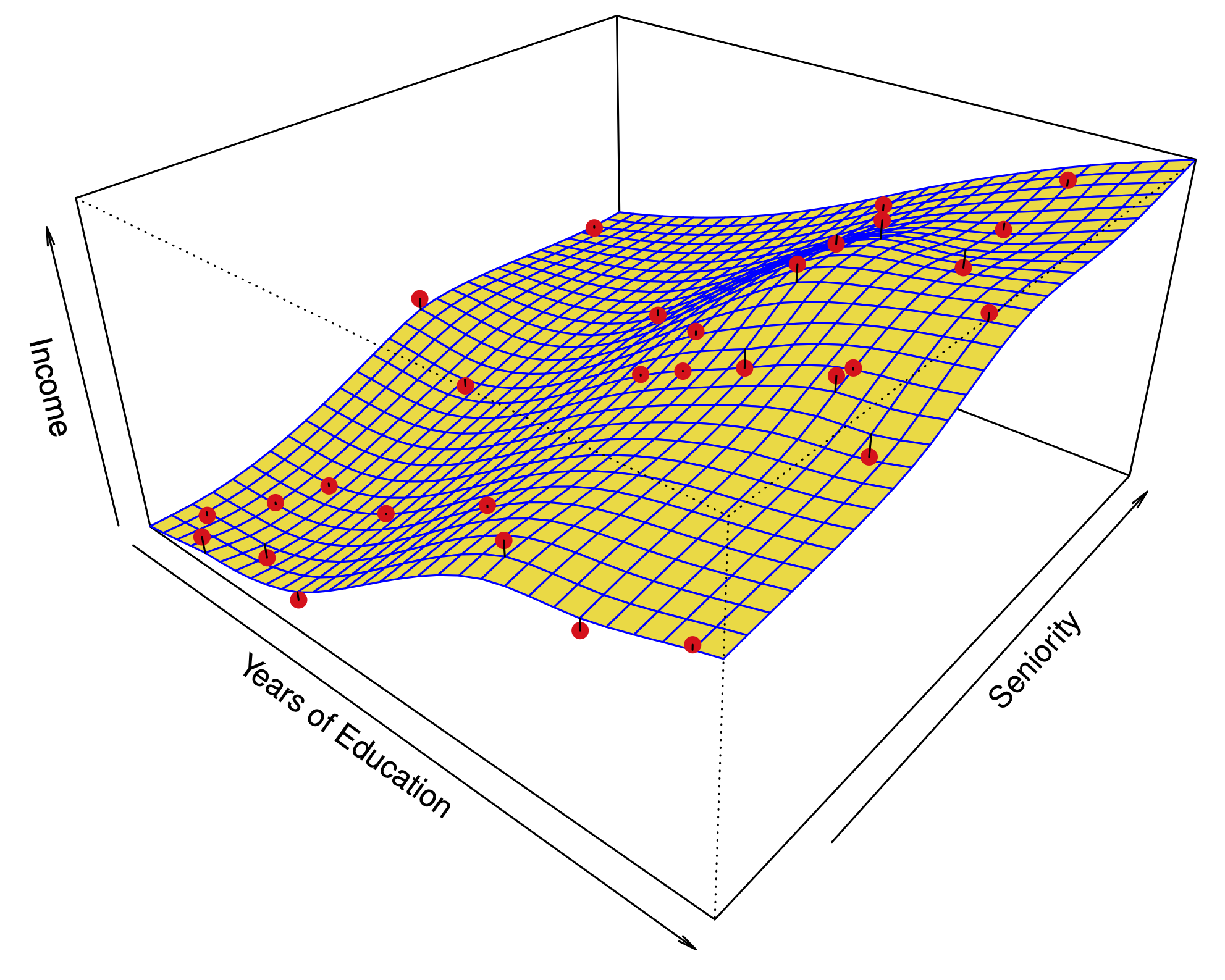

Simple idea: let’s compare all models with \(k\) predictors.

There are \({p \choose k} = p!/\left[k!(p-k)!\right]\) possible models for each \(k\), and \(2^p\) in total across all sizes \(k = 0, \dots, p\).

For every possible \(k\), choose the model with the smallest RSS.
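The procedure above can be sketched by brute force in base R. This is only an illustration under stated assumptions: it uses the built-in `mtcars` data rather than the dataset from these notes, the candidate set `predictors` is a hypothetical choice, and the helper `rss_of` is a name introduced here.

```r
# Brute-force best subset selection on the built-in mtcars data (illustrative only).
predictors <- c("cyl", "disp", "hp", "wt", "qsec")  # hypothetical candidate set, p = 5
p <- length(predictors)

# Residual sum of squares of the least-squares fit mpg ~ vars.
rss_of <- function(vars) {
  fit <- lm(reformulate(vars, response = "mpg"), data = mtcars)
  sum(resid(fit)^2)
}

# For each size k, fit all choose(p, k) models and keep the one with smallest RSS.
best_by_size <- lapply(seq_len(p), function(k) {
  subsets <- combn(predictors, k, simplify = FALSE)
  rss <- vapply(subsets, rss_of, numeric(1))
  list(k = k, n_models = length(subsets),
       vars = subsets[[which.min(rss)]], rss = min(rss))
})

for (b in best_by_size) {
  cat(sprintf("k = %d (%d models): %s, RSS = %.1f\n",
              b$k, b$n_models, paste(b$vars, collapse = " + "), b$rss))
}
```

The number of fits grows as \(2^p\), so this exhaustive loop is only practical for small \(p\). The best RSS for each size can only decrease as \(k\) grows, which is why RSS alone cannot be used to pick \(k\).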

Best subset with regsubsets

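```r
library(ISLR)   # where Credit is stored
library(leaps)  # where regsubsets is found

# Best subset selection for Balance using all other columns as candidates;
# by default regsubsets reports models of up to 8 variables (nvmax = 8).
summary(regsubsets(Balance ~ ., data = Credit))
```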

The best model with 4 variables includes Cards, Income, Student, and Limit.
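One way to read off a model of a given size without scanning the printed summary is sketched below. It assumes the `ISLR` and `leaps` packages are installed; dropping an `ID` column is an assumption about the `ISLR` version of `Credit` and is a no-op if the column is absent. The names `credit`, `fit` and `s` are introduced here for illustration.

```r
library(ISLR)   # Credit data
library(leaps)  # regsubsets

# Drop the row identifier, if present, so it is not treated as a predictor
# (assumption: the ISLR version of Credit carries an ID column).
credit <- Credit[, setdiff(names(Credit), "ID")]

fit <- regsubsets(Balance ~ ., data = credit)  # default nvmax = 8
s <- summary(fit)

# s$which is a logical matrix: row k marks the terms in the best k-variable model.
names(which(s$which[4, -1]))  # -1 drops the intercept column

# Fitted coefficients of the best 4-variable model.
coef(fit, 4)
```

Factor predictors appear under their dummy-variable names, so a factor such as `Student` is listed with its level appended.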

Choosing \(k\)

Naturally, the training \(\text{RSS}\) and \(R^2 = 1-\frac{\text{RSS}}{\text{TSS}}\) improve as we increase \(k\).

To optimize \(k\), we want to minimize the test error, not the training error.

We could use cross-validation, or alternative estimates of test error:

Akaike Information Criterion (AIC), closely related to Mallows' \(C_p\), given an estimate \(\hat{\sigma}^2\) of the irreducible error variance:

Bayesian Information Criterion (BIC):

Adjusted \(R^2\):
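For reference, these criteria are commonly written as follows for a least-squares model with \(k\) predictors fit to \(n\) observations. The forms below follow the conventions of the ISLR textbook, which is an assumption here; other references use differently scaled or log-likelihood-based versions.

\[
\begin{aligned}
C_p &= \frac{1}{n}\left(\text{RSS} + 2k\hat{\sigma}^2\right), \\
\text{AIC} &= \frac{1}{n\hat{\sigma}^2}\left(\text{RSS} + 2k\hat{\sigma}^2\right), \\
\text{BIC} &= \frac{1}{n}\left(\text{RSS} + \log(n)\,k\hat{\sigma}^2\right), \\
\text{Adjusted } R^2 &= 1 - \frac{\text{RSS}/(n-k-1)}{\text{TSS}/(n-1)}.
\end{aligned}
\]

Smaller values are better for \(C_p\), AIC and BIC; larger values are better for adjusted \(R^2\). With \(\hat{\sigma}^2\) held fixed, AIC in this form is proportional to \(C_p\), so the two select the same model. Since \(\log(n) > 2\) once \(n > 7\), BIC penalizes each added predictor more heavily than AIC and tends to choose smaller models.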

How do the criteria above compare to cross-validation?

They are much less expensive to compute.

They are motivated by asymptotic arguments and rely on model assumptions (e.g. normality of the errors).

Equivalent criteria exist for other models (e.g. logistic regression).
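As a small sketch of how such criteria carry over to logistic regression, base R's `AIC()` and `BIC()` work on `glm` fits through the log-likelihood. The example uses the built-in `mtcars` data and two arbitrary models chosen only for illustration; `manual_aic` and `manual_bic` are names introduced here.

```r
# Two nested logistic regressions on the built-in mtcars data (illustrative only).
fit1 <- glm(am ~ wt, family = binomial, data = mtcars)
fit2 <- glm(am ~ wt + hp, family = binomial, data = mtcars)

# Lower is better for both criteria.
print(data.frame(model = c("am ~ wt", "am ~ wt + hp"),
                 AIC = c(AIC(fit1), AIC(fit2)),
                 BIC = c(BIC(fit1), BIC(fit2))))

# AIC is -2 * log-likelihood + 2 * (number of parameters);
# BIC replaces the factor 2 with log(n).
ll <- logLik(fit1)
manual_aic <- -2 * as.numeric(ll) + 2 * attr(ll, "df")
manual_bic <- -2 * as.numeric(ll) + log(nobs(fit1)) * attr(ll, "df")
```

These likelihood-based values are on a different scale from the RSS-based forms used for linear regression, so they should only be compared across models fit to the same data with the same criterion.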

Example: best subset selection for the Credit dataset

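Recall: In \(K\)-fold cross-validation, we can estimate a standard error for our test error estimate, which measures its accuracy. We can then apply the one-standard-error (1SE) rule: choose the simplest model whose estimated test error is within one standard error of the minimum.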
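A minimal sketch of cross-validated best subset selection with the 1SE rule follows. It is an illustration under stated assumptions, not the analysis behind the figures in these notes: it uses the built-in `mtcars` data, a hypothetical candidate set `predictors`, \(K = 5\) folds, and a helper `best_subset` introduced here. The subset search is repeated inside each fold so that the held-out data play no part in selection.

```r
set.seed(1)
predictors <- c("cyl", "disp", "hp", "wt", "qsec")  # hypothetical candidate set
p <- length(predictors)
K <- 5
fold <- sample(rep(seq_len(K), length.out = nrow(mtcars)))  # random fold labels

# Best subset of size k on a training set, chosen by training RSS.
best_subset <- function(k, train) {
  subsets <- combn(predictors, k, simplify = FALSE)
  rss <- vapply(subsets, function(v) {
    sum(resid(lm(reformulate(v, response = "mpg"), data = train))^2)
  }, numeric(1))
  subsets[[which.min(rss)]]
}

# cv_mse[i, k]: test MSE on fold i of the size-k model selected on the other folds.
cv_mse <- matrix(NA_real_, nrow = K, ncol = p)
for (i in seq_len(K)) {
  train <- mtcars[fold != i, ]
  test <- mtcars[fold == i, ]
  for (k in seq_len(p)) {
    vars <- best_subset(k, train)
    fit <- lm(reformulate(vars, response = "mpg"), data = train)
    cv_mse[i, k] <- mean((test$mpg - predict(fit, newdata = test))^2)
  }
}

cv_mean <- colMeans(cv_mse)
cv_se <- apply(cv_mse, 2, sd) / sqrt(K)  # standard error across folds

k_min <- which.min(cv_mean)
# 1SE rule: smallest k whose CV error is within one SE of the minimum.
k_1se <- min(which(cv_mean <= cv_mean[k_min] + cv_se[k_min]))
print(c(k_min = k_min, k_1se = k_1se))
```

By construction `k_1se` is never larger than `k_min`, so the rule trades a statistically negligible increase in estimated test error for a simpler model.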