Leave-one-out cross-validation (LOOCV)


For every \(i=1,\dots,n\):

- train the model on every point except \(i\),
- compute the test error on the held-out point.

Then average the \(n\) test errors.
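The procedure above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the helper name `loocv` and its `fit`, `predict`, and `loss` arguments are hypothetical, and the toy "model" simply predicts the mean of the training responses.

```python
from statistics import mean

def loocv(xs, ys, fit, predict, loss):
    """Average held-out loss over n fits, each leaving out one sample.

    `fit`, `predict`, and `loss` are hypothetical callables supplied by the user.
    """
    n = len(xs)
    errors = []
    for i in range(n):
        # Train on every point except i.
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        model = fit(train_x, train_y)
        # Test error on the single held-out point.
        errors.append(loss(ys[i], predict(model, xs[i])))
    # Average the n test errors.
    return mean(errors)

# Toy usage: a "model" that always predicts the training mean of y.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 2.0, 4.0, 5.0]
cv = loocv(
    xs, ys,
    fit=lambda train_x, train_y: mean(train_y),
    predict=lambda model, x: model,
    loss=lambda y, y_hat: (y - y_hat) ** 2,
)
print(cv)
```

Passing the loss as an argument lets the same loop serve both settings discussed below: squared error for regression and a 0/1 indicator for classification.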

Regression

Overall error:

\[\text{CV}_{(n)} = \frac{1}{n}\sum_{i=1}^n (y_i - \color{Red}{\hat y_i^{(-i)}})^2\]

Notation \(\hat y_i^{(-i)}\): the prediction for the \(i\)th sample from a model trained without the \(i\)th sample.

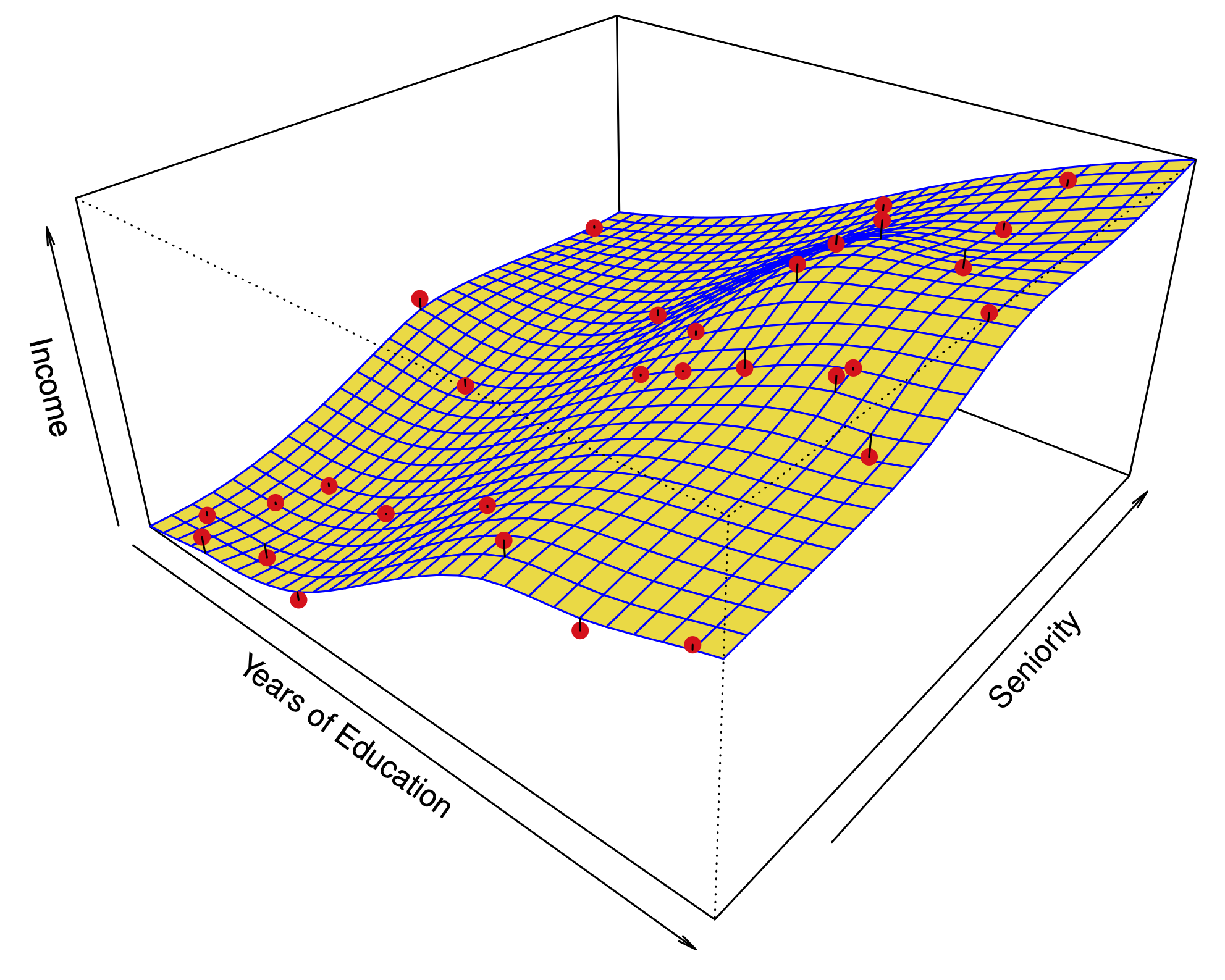

Fig. 31 Schematic of the leave-one-out cross-validation (LOOCV) approach.

Classification

Overall error:

\[\text{CV}_{(n)} = \frac{1}{n}\sum_{i=1}^n \mathbf{1}(y_i \neq \color{Red}{\hat y_i^{(-i)}})\]

Here, \(\hat y_i^{(-i)}\) is the predicted label for the \(i\)th sample from a model trained without the \(i\)th sample, so \(\text{CV}_{(n)}\) is the fraction of held-out samples that are misclassified.
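As an illustration of the misclassification version, the sketch below uses a one-dimensional 1-nearest-neighbour classifier. The classifier, the function names, and the toy data are all assumptions chosen for brevity; any classifier could take its place.

```python
def nearest_neighbor_label(train_x, train_y, x):
    """Label of the training point closest to x (1-nearest-neighbour)."""
    j = min(range(len(train_x)), key=lambda k: abs(train_x[k] - x))
    return train_y[j]

def loocv_misclassification(xs, ys):
    """Fraction of samples misclassified when each is held out in turn."""
    n = len(xs)
    mistakes = 0
    for i in range(n):
        # Training set without sample i.
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        y_hat = nearest_neighbor_label(train_x, train_y, xs[i])
        # Indicator 1(y_i != predicted label for the held-out sample).
        mistakes += int(y_hat != ys[i])
    return mistakes / n

# Toy data: the two classes meet between x = 2.0 and x = 2.4.
xs = [0.0, 1.0, 2.0, 2.4, 5.0, 6.0]
ys = ["a", "a", "a", "b", "b", "b"]
error_rate = loocv_misclassification(xs, ys)
print(error_rate)
```

In this toy data only the two points next to the class boundary are misclassified when held out, since each one's nearest remaining neighbour carries the other label.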

Shortcut for linear regression

Computing \(\text{CV}_{(n)}\) can be computationally expensive, since it involves fitting the model \(n\) times.

For linear regression, there is a shortcut:

\[\text{CV}_{(n)} = \frac{1}{n} \sum_{i=1}^n \left(\frac{y_i-\hat y_i}{1-h_{ii}}\right)^2\]

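Above, \(\hat y_i\) is the fitted value from a single least-squares fit on all \(n\) samples, and \(h_{ii}\) is the leverage statistic of the \(i\)th sample, i.e. the \(i\)th diagonal entry of the hat matrix \(H = X(X^TX)^{-1}X^T\). Only one model fit is needed instead of \(n\).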
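The shortcut can be checked numerically. The sketch below assumes simple linear regression (one predictor plus an intercept), for which the leverage has the closed form \(h_{ii} = \frac{1}{n} + \frac{(x_i-\bar x)^2}{\sum_j (x_j-\bar x)^2}\). The function names and the toy data are illustrative only.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope for y = b0 + b1 * x."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    return y_bar - b1 * x_bar, b1

def loocv_brute_force(xs, ys):
    """Refit the line n times, each time leaving out one sample."""
    n = len(xs)
    total = 0.0
    for i in range(n):
        b0, b1 = fit_line(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        total += (ys[i] - (b0 + b1 * xs[i])) ** 2
    return total / n

def loocv_shortcut(xs, ys):
    """Single fit on all samples, residuals rescaled by 1 - h_ii."""
    n = len(xs)
    b0, b1 = fit_line(xs, ys)
    x_bar = sum(xs) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    total = 0.0
    for x, y in zip(xs, ys):
        # Leverage of this sample (closed form for simple linear regression).
        h = 1 / n + (x - x_bar) ** 2 / sxx
        total += ((y - (b0 + b1 * x)) / (1 - h)) ** 2
    return total / n

# Toy data, roughly y = 2x with small perturbations.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0.1, 1.9, 4.2, 5.8, 8.1, 9.7]
cv_slow = loocv_brute_force(xs, ys)
cv_fast = loocv_shortcut(xs, ys)
print(cv_slow, cv_fast)
```

The two values agree up to floating-point rounding, because the identity is exact for least-squares fits rather than an approximation.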

Approximate versions of this shortcut are sometimes used for logistic regression…