High-dimensional regression


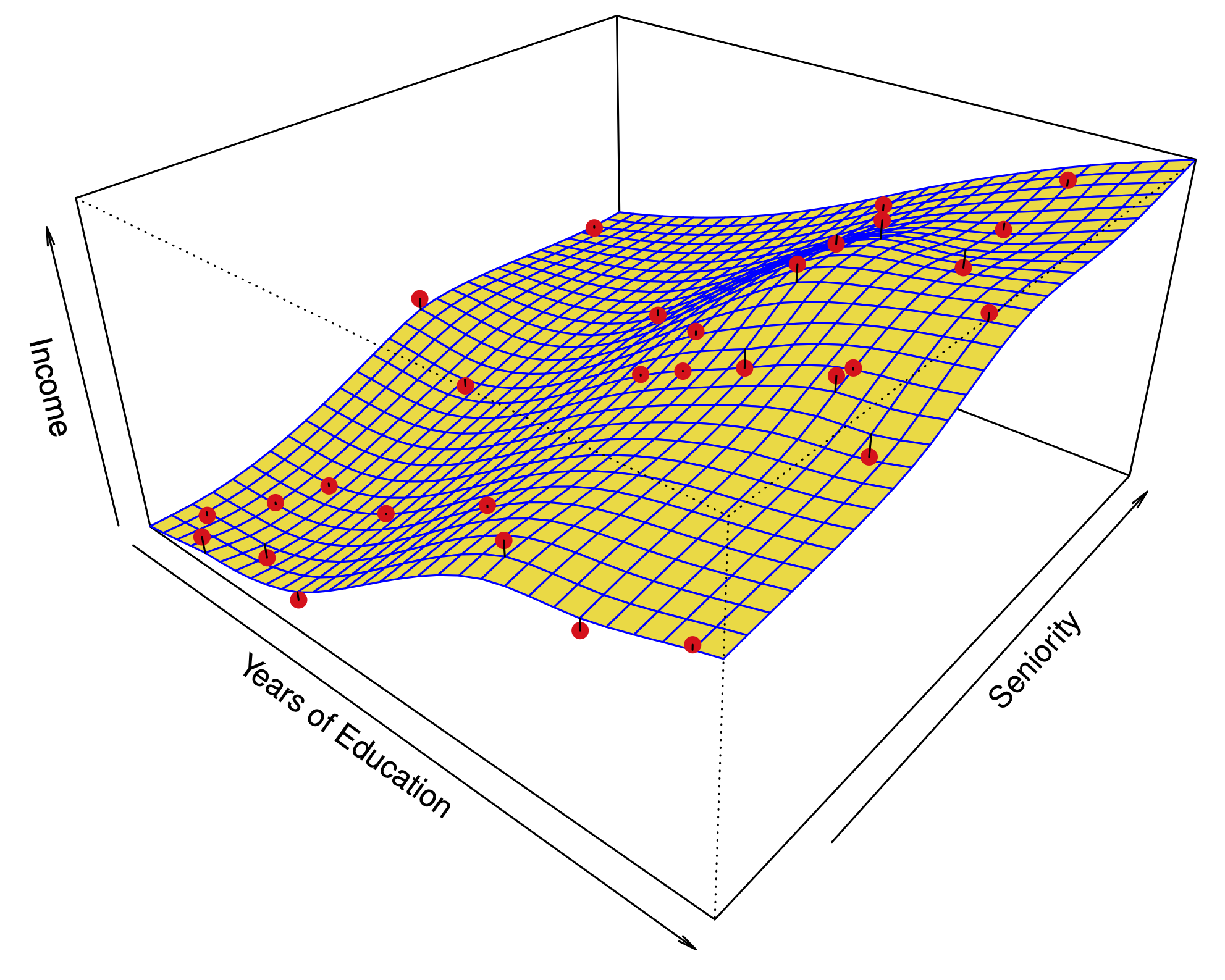

Most of the methods we’ve discussed work best when \(n\) is much larger than \(p\).

However, the case \(p\gg n\) is now common, due to experimental advances and cheaper computers:

Medicine: Instead of regressing heart disease onto just a few clinical observations (blood pressure, salt consumption, age), we also include 500,000 single nucleotide polymorphisms (SNPs) as predictors.

Marketing: Using search terms to understand online shopping patterns. A bag-of-words model defines one feature for every possible search term, counting the number of times that term appears in a person's searches. There can be as many features as there are words in the dictionary.

Some problems

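When \(n=p\), least squares can find a fit that goes through every training point, so every residual is zero, even if the predictors have nothing to do with the response.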
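Here is a minimal NumPy sketch of this effect. The sizes, the seed, and the pure-noise response are illustrative choices and are not part of the original example. With \(n=p=10\) and a response generated independently of \(X\), least squares still reproduces \(y\) exactly.

```python
import numpy as np

rng = np.random.default_rng(0)
n = p = 10
X = rng.standard_normal((n, p))   # n observations of p = n noise predictors
y = rng.standard_normal(n)        # response generated independently of X

# With n = p and X of full rank, least squares solves X @ beta = y exactly.
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
residuals = y - X @ beta
print(np.max(np.abs(residuals)))  # essentially zero (floating-point round-off only)
```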

To avoid this kind of overfitting, we can use methods that constrain the fit, such as variable selection, ridge regression, and the lasso.
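The next sketch shows why ridge regression stays well defined when \(p>n\). It assumes the standard closed form \(\hat\beta_\lambda = (X^TX + \lambda I)^{-1}X^Ty\) with no intercept. Because \(X^TX\) has rank at most \(n\), it is singular. Adding \(\lambda I\) with \(\lambda>0\) makes the matrix invertible. The penalty `lam = 1.0` and the sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 20, 100
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)

gram = X.T @ X                           # p x p, but rank(X^T X) = rank(X) <= n
print(np.linalg.matrix_rank(X), "<", p)  # so X^T X is singular: no unique least-squares fit

lam = 1.0                                # ridge penalty (arbitrary illustrative value)
# X^T X + lam * I is positive definite for lam > 0, so this system has a unique solution.
beta_ridge = np.linalg.solve(gram + lam * np.eye(p), X.T @ y)
print(beta_ridge[:5])
```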

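Measures of training error are really bad: the training error can be driven to zero (and \(R^2\) to 1) even when the predictors are pure noise.

Furthermore, it becomes hard to estimate the noise variance \(\sigma^2\). The usual estimate \(\hat\sigma^2 = \mathrm{RSS}/(n-p-1)\) breaks down once \(p \ge n-1\), because the denominator is no longer positive.

Measures of model fit that depend on \(\hat\sigma^2\), such as \(C_p\), AIC, and BIC, fail as well.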
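The following sketch uses sizes and a seed chosen only for illustration. It fits nested least-squares models on more and more pure-noise predictors and reports the training \(R^2\) along with the degrees of freedom \(n-p-1\) used by \(\hat\sigma^2\). Training \(R^2\) can only increase as nested predictors are added. At \(p=n-1\) it reaches 1, while the denominator of \(\hat\sigma^2\) drops to zero.

```python
import numpy as np

def train_r2(X, y):
    """Training R^2 of a least-squares fit with an intercept."""
    Z = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(Z, y, rcond=None)
    rss = np.sum((y - Z @ beta) ** 2)
    tss = np.sum((y - y.mean()) ** 2)
    return 1.0 - rss / tss

rng = np.random.default_rng(2)
n = 30
X_all = rng.standard_normal((n, n - 1))   # n - 1 pure-noise predictors
y = rng.standard_normal(n)                # response unrelated to every predictor

ps = [1, 10, 20, n - 1]
r2s = [train_r2(X_all[:, :p], y) for p in ps]   # nested models: first p columns
for p, r2 in zip(ps, r2s):
    # n - p - 1 is the denominator in sigma2_hat = RSS / (n - p - 1)
    print(f"p = {p:2d}: training R^2 = {r2:.3f}, n - p - 1 = {n - p - 1}")
```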

In each case, only 20 predictors are associated with the response.

The plots show the test error of the lasso.

Message: Adding predictors that are uncorrelated with the response hurts the performance of the regression!
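To experiment with this effect, here is a minimal lasso solver based on cyclic coordinate descent. It minimizes \(\frac{1}{2n}\lVert y - X\beta\rVert^2 + \lambda\lVert\beta\rVert_1\) with no intercept. A small simulation in the spirit of the plots follows: 20 signal predictors, padded with noise predictors. The helper names `soft_threshold` and `lasso_cd` are hypothetical, and the fixed penalty `lam=0.1` and all sizes are assumptions made for illustration. A real analysis would choose \(\lambda\) by cross-validation, and the exact errors depend on the seed.

```python
import numpy as np

def soft_threshold(z, t):
    """Soft-thresholding operator S(z, t) = sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * max(abs(z) - t, 0.0)

def lasso_cd(X, y, lam, n_iter=100):
    """Cyclic coordinate descent for (1/(2n)) * ||y - X b||^2 + lam * ||b||_1, no intercept."""
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n    # (1/n) * ||x_j||^2 for each column
    r = np.array(y, dtype=float)         # current residual y - X @ beta (beta starts at 0)
    for _ in range(n_iter):
        for j in range(p):
            r += X[:, j] * beta[j]       # partial residual: drop predictor j's contribution
            rho = X[:, j] @ r / n
            beta[j] = soft_threshold(rho, lam) / col_sq[j]
            r -= X[:, j] * beta[j]       # add back the updated contribution
    return beta

rng = np.random.default_rng(3)
n_train, n_test, n_signal = 100, 1000, 20
test_err = {}
for p in (20, 50, 500):
    beta_true = np.zeros(p)
    beta_true[:n_signal] = 1.0           # only the first 20 predictors affect y
    X_tr = rng.standard_normal((n_train, p))
    X_te = rng.standard_normal((n_test, p))
    y_tr = X_tr @ beta_true + rng.standard_normal(n_train)
    y_te = X_te @ beta_true + rng.standard_normal(n_test)
    beta_hat = lasso_cd(X_tr, y_tr, lam=0.1)
    test_err[p] = np.mean((y_te - X_te @ beta_hat) ** 2)

for p, err in test_err.items():
    print(f"p = {p:3d}: test MSE = {err:.2f}")
```

With \(\lambda\) held fixed, the test error should typically grow with \(p\), because more noise predictors receive a nonzero coefficient by chance.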

Interpreting coefficients when \(p>n\)

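When \(p>n\), the \(p\) columns of \(X\) live in an \(n\)-dimensional space, so they are linearly dependent. In general, each predictor can be written as a linear combination of the others, i.e. there is an extreme level of multicollinearity.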
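The following NumPy sketch makes this concrete; the sizes and seed are illustrative. Because \(X\) has rank at most \(n<p\), its null space is nontrivial. Projecting the first coordinate vector onto that null space gives a vector \(v\) with \(Xv=0\) and \(v_0>0\). This vector writes predictor 0 as a linear combination of the others, and it shows that \(\beta\) and \(\beta + cv\) give identical fitted values.

```python
import numpy as np

rng = np.random.default_rng(4)
n, p = 10, 15
X = rng.standard_normal((n, p))

# Full SVD: the last p - n rows of Vt span the null space of X (X has rank n here).
_, _, Vt = np.linalg.svd(X)
null_basis = Vt[n:]

# Project e_0 onto the null space; v[0] = ||null_basis[:, 0]||^2 > 0.
v = null_basis.T @ null_basis[:, 0]

# X @ v = 0 rearranges to: column 0 = sum_j coef_j * column j (j = 1..p-1).
coef = -v[1:] / v[0]
print(np.allclose(X[:, 0], X[:, 1:] @ coef))       # True

# Two different coefficient vectors, identical fitted values.
beta = rng.standard_normal(p)
print(np.allclose(X @ beta, X @ (beta + 5.0 * v)))  # True
```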

The lasso and ridge regression will each pick out one set of coefficients, but many other sets would give the same fitted values.

The selected predictors \(\{i : |\hat\beta_i| > \delta\}\) are not guaranteed to be the same as the truly relevant ones \(\{i : |\beta_i| > \delta\}\). There can be many sets of predictors (possibly non-overlapping) that yield apparently good models.
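The next sketch illustrates the last point using a toy setup of the same kind: a pure-noise response, with sizes and seed chosen for illustration. With \(n=10\) and \(p=30\), three disjoint blocks of 10 predictors each produce a least-squares fit with zero training residuals. Judged on the training data alone, all three blocks look equally "good".

```python
import numpy as np

rng = np.random.default_rng(5)
n, p = 10, 30
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)              # the response is pure noise

def fit_subset(cols):
    """Least squares on the chosen columns; returns the max absolute training residual."""
    beta, *_ = np.linalg.lstsq(X[:, cols], y, rcond=None)
    return np.max(np.abs(y - X[:, cols] @ beta))

subset_a = list(range(0, 10))           # three non-overlapping predictor sets
subset_b = list(range(10, 20))
subset_c = list(range(20, 30))
for cols in (subset_a, subset_b, subset_c):
    print(f"columns {cols[0]}-{cols[-1]}: max residual {fit_subset(cols):.1e}")
```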

Message: Don’t overstate the importance of the predictors selected.

Interpreting inference when \(p>n\)

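When \(p>n\), the lasso might select a sparse model.

Running `lm` on the selected variables using the same training data is bad. The usual standard errors, p-values, and confidence intervals ignore the fact that these data were already used to choose the variables, so they overstate significance.

Running `lm` on the selected variables using independent validation data is OK-ish, but is this `lm` a good model?

Message: Don't use inferential methods developed for least squares regression for things like the lasso, forward stepwise selection, etc.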
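The next simulation illustrates the first point. Its assumptions are stated up front. The response is pure noise. Instead of the lasso, it uses a simpler stand-in selection rule: keep the \(k=5\) predictors with the largest absolute inner product with the centered response. The helper `ols_tstats` is hypothetical; it computes the slope t-statistics that an ordinary least-squares fit such as `lm` reports. When the selected predictors are refit on the training data, the noise predictors look significant. When the same predictors are refit on independent data, they do not.

```python
import numpy as np

def ols_tstats(X, y):
    """Slope t-statistics from least squares with an intercept."""
    n, k = X.shape
    Z = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(Z, y, rcond=None)
    resid = y - Z @ beta
    sigma2 = resid @ resid / (n - k - 1)            # usual noise-variance estimate
    cov = sigma2 * np.linalg.inv(Z.T @ Z)
    return beta[1:] / np.sqrt(np.diag(cov)[1:])

rng = np.random.default_rng(6)
n, p, k = 50, 1000, 5
X_train = rng.standard_normal((n, p))
y_train = rng.standard_normal(n)          # no predictor is related to the response
X_valid = rng.standard_normal((n, p))     # independent validation data, same setup
y_valid = rng.standard_normal(n)

# Stand-in selection rule (not the lasso): the k largest |x_j^T (y - mean(y))|.
scores = np.abs(X_train.T @ (y_train - y_train.mean()))
selected = np.argsort(scores)[-k:]

t_train = ols_tstats(X_train[:, selected], y_train)   # selection and inference on the same data
t_valid = ols_tstats(X_valid[:, selected], y_valid)   # inference on independent data
print(np.round(np.abs(t_train), 1))   # typically well above 2: noise looks "significant"
print(np.round(np.abs(t_valid), 1))   # typically small, as it should be for noise
```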

Can we do better? Yes, but it’s complicated and a little above our level here.