Logistic regression


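We model the conditional probability of \(Y=1\) given \(X\) as:

\[P(Y=1 \mid X) = \frac{e^{\beta_0 + \beta_1 X_1 + \dots + \beta_p X_p}}{1 + e^{\beta_0 + \beta_1 X_1 + \dots + \beta_p X_p}}.\]

This is the same as using a linear model for the log odds:

\[\log\left[\frac{P(Y=1 \mid X)}{P(Y=0 \mid X)}\right] = \beta_0 + \beta_1 X_1 + \dots + \beta_p X_p.\]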
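As a quick numerical check of the logistic model, here is a minimal R sketch. It uses a single predictor and hypothetical coefficients \(\beta_0=-1.5\), \(\beta_1=0.8\). It computes \(P(Y=1\mid X=x)\) from the linear predictor and confirms that taking the log odds gives the linear predictor back.

```r
# Hypothetical coefficients for a one-predictor logistic model.
beta0 <- -1.5
beta1 <- 0.8
x <- c(-2, 0, 1, 3)

eta <- beta0 + beta1 * x          # linear predictor
p <- exp(eta) / (1 + exp(eta))    # P(Y = 1 | X = x)

# The log odds recover the linear predictor.
log_odds <- log(p / (1 - p))
print(round(cbind(x, p, log_odds), 4))

# Base R's plogis() and qlogis() implement the same two maps.
print(all.equal(p, plogis(eta)))
print(all.equal(qlogis(p), eta))
```

In practice `plogis()` is preferable to writing `exp(eta) / (1 + exp(eta))` by hand, because the hand-written version can overflow for large `eta`.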

Fitting logistic regression

The training data is a list of pairs \((y_1,x_1), (y_2,x_2), \dots, (y_n,x_n)\).

We don't observe the left-hand side of the model: the log odds are never observed, only the labels \(y_i \in \{0,1\}\).

\(\implies\) We cannot use a least squares fit.

Likelihood

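Solution: The likelihood is the probability of the training data, for a fixed set of coefficients \(\beta_0,\dots,\beta_p\):

\[L(\beta_0,\dots,\beta_p) = \prod_{i=1}^n P(Y=y_i \mid X=x_i).\]

We can rewrite this as

\[L(\beta_0,\dots,\beta_p) = \prod_{i=1}^n \left(\frac{e^{\beta_0 + \beta_1 x_{i1} + \dots + \beta_p x_{ip}}}{1 + e^{\beta_0 + \beta_1 x_{i1} + \dots + \beta_p x_{ip}}}\right)^{y_i} \left(\frac{1}{1 + e^{\beta_0 + \beta_1 x_{i1} + \dots + \beta_p x_{ip}}}\right)^{1-y_i}.\]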

Choose estimates \(\hat \beta_0, \dots,\hat \beta_p\) which maximize the likelihood.
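To make "maximize the likelihood" concrete, here is a minimal R sketch on simulated data. The true coefficients \(-0.5\) and \(1.2\) are hypothetical. It writes the log-likelihood and its gradient for a one-predictor model, maximizes it with the general-purpose optimizer `optim()`, and compares the result with `glm()`.

```r
set.seed(1)
n <- 500
x <- rnorm(n)
# Simulated 0/1 responses; the coefficients -0.5 and 1.2 are hypothetical.
y <- rbinom(n, size = 1, prob = plogis(-0.5 + 1.2 * x))

# log L(beta) = sum_i [ y_i log p(x_i) + (1 - y_i) log(1 - p(x_i)) ]
loglik <- function(beta) {
  p <- plogis(beta[1] + beta[2] * x)
  sum(y * log(p) + (1 - y) * log(1 - p))
}

# Gradient of log L: sum_i (y_i - p_i) * (1, x_i)
score <- function(beta) {
  r <- y - plogis(beta[1] + beta[2] * x)
  c(sum(r), sum(r * x))
}

# optim() minimizes, so pass the negative log-likelihood and its gradient.
opt <- optim(c(0, 0), fn = function(b) -loglik(b), gr = function(b) -score(b),
             method = "BFGS", control = list(reltol = 1e-12))

fit <- glm(y ~ x, family = binomial)
print(rbind(optim = opt$par, glm = unname(coef(fit))))
```

The two rows should agree to several decimal places. The maximized value `loglik(coef(fit))` also matches `logLik(fit)`.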

There is no closed-form solution, so the maximization is solved with numerical methods (e.g. Newton's method).
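Here is a minimal R sketch of Newton's method for logistic regression. The helper name `newton_logistic` and the simulated coefficients are hypothetical. Each step solves \((X^\top W X)\,\delta = X^\top (y - p)\) with \(W = \mathrm{diag}(p_i(1-p_i))\). For the logit link this is the same update as the iteratively reweighted least squares used by `glm()`, so the two fits should agree.

```r
set.seed(2)
n <- 400
X <- cbind(1, rnorm(n), rnorm(n))        # design matrix with an intercept column
beta_true <- c(0.3, -1, 0.7)             # hypothetical coefficients
y <- rbinom(n, 1, plogis(drop(X %*% beta_true)))

# Hypothetical helper: Newton's method for the logistic log-likelihood.
newton_logistic <- function(X, y, tol = 1e-10, max_iter = 50) {
  beta <- rep(0, ncol(X))
  for (iter in seq_len(max_iter)) {
    p <- plogis(drop(X %*% beta))
    grad <- crossprod(X, y - p)              # score: X^T (y - p)
    hess <- crossprod(X, X * (p * (1 - p)))  # information: X^T W X
    step <- drop(solve(hess, grad))          # Newton step
    beta <- beta + step
    if (max(abs(step)) < tol) break
  }
  list(coef = beta, iterations = iter)
}

res <- newton_logistic(X, y)
fit <- glm(y ~ X[, 2] + X[, 3], family = binomial)
print(res$iterations)
print(rbind(newton = res$coef, glm = unname(coef(fit))))
```

Newton's method typically converges in a handful of iterations here because the log-likelihood is concave.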

Logistic regression in R

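```r
library(ISLR)  # provides the Smarket data

# Logistic regression of market Direction on the five lags and Volume
glm.fit <- glm(Direction ~ Lag1 + Lag2 + Lag3 + Lag4 + Lag5 + Volume,
               family = binomial, data = Smarket)
summary(glm.fit)
```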

Inference for logistic regression

We can estimate the Standard Error of each coefficient.

The \(z\)-statistic is the equivalent of the \(t\)-statistic in linear regression:

\[z = \frac{\hat\beta_j}{\widehat{\mathrm{SE}}(\hat\beta_j)}.\]

The \(p\)-values are tests of the null hypothesis \(\beta_j=0\) (Wald's test).
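The Wald \(z\)-statistics and \(p\)-values reported by `summary()` can be reproduced by hand. This minimal R sketch uses simulated data in which `x2` has no effect (a hypothetical setup). It divides each estimate by its standard error from `vcov()` and uses the two-sided normal tail probability.

```r
set.seed(3)
n <- 300
x1 <- rnorm(n)
x2 <- rnorm(n)
y <- rbinom(n, 1, plogis(0.2 + 0.9 * x1))  # hypothetical: x2 has no effect
fit <- glm(y ~ x1 + x2, family = binomial)

est <- coef(fit)
se <- sqrt(diag(vcov(fit)))    # standard errors from the inverse information
z <- est / se                  # Wald z-statistics
p_value <- 2 * pnorm(-abs(z))  # two-sided p-values under N(0, 1)

print(cbind(est, se, z, p_value))
tab <- summary(fit)$coefficients  # columns: Estimate, Std. Error, z value, Pr(>|z|)
```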

Other possible hypothesis tests: the likelihood ratio test, whose statistic has an approximate chi-square distribution under the null hypothesis.
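Here is a minimal R sketch of the likelihood ratio test for \(H_0: \beta_2 = 0\) on simulated data with hypothetical coefficients. The statistic \(2(\log L_{\text{full}} - \log L_{\text{reduced}})\) equals the drop in deviance. It is compared with a \(\chi^2\) distribution whose degrees of freedom equal the number of constrained coefficients. `anova(..., test = "Chisq")` performs the same computation.

```r
set.seed(4)
n <- 300
x1 <- rnorm(n)
x2 <- rnorm(n)
y <- rbinom(n, 1, plogis(0.2 + 0.9 * x1))  # hypothetical coefficients
full <- glm(y ~ x1 + x2, family = binomial)
reduced <- glm(y ~ x1, family = binomial)  # model under H0: beta_2 = 0

# LR statistic = 2 * (logLik(full) - logLik(reduced)) = drop in deviance
lr_stat <- deviance(reduced) - deviance(full)
df <- df.residual(reduced) - df.residual(full)  # number of constraints (here 1)
p_value <- pchisq(lr_stat, df = df, lower.tail = FALSE)
print(c(LR = lr_stat, df = df, p = p_value))

# anova() reports the same statistic and p-value.
a <- anova(reduced, full, test = "Chisq")
print(a)
```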

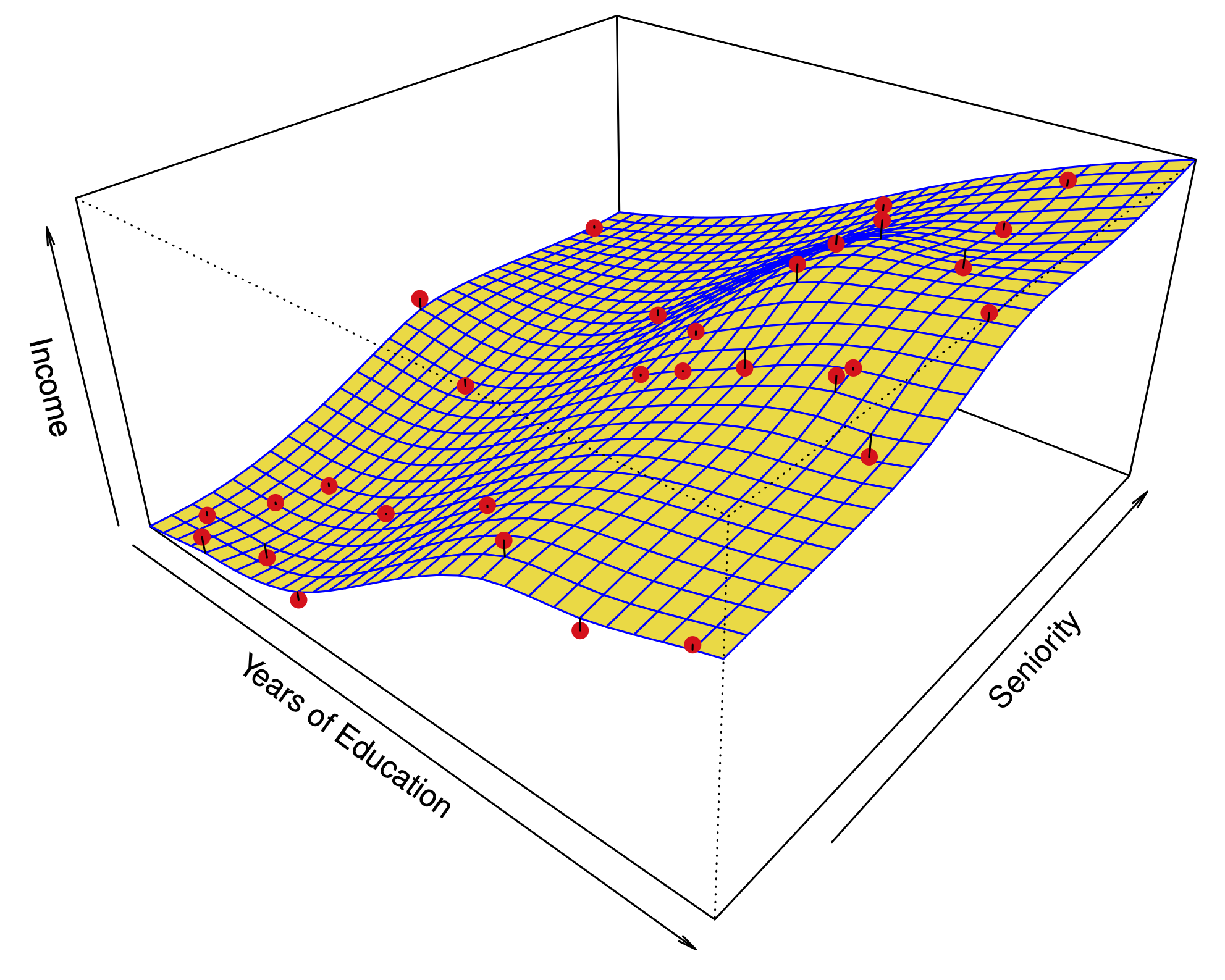

Example: Predicting credit card default

Predictors:

- student: 1 if student, 0 otherwise
- balance: credit card balance
- income: person's income

Confounding

In this dataset, there is confounding, but little collinearity.

- Students tend to have higher balances. So, balance is explained by student, but not very well.
- People with a high balance are more likely to default.
- Among people with a given balance, students are less likely to default.
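The confounding pattern can be reproduced in a small simulation, without the Default data. This is a minimal R sketch, and the data-generating mechanism is entirely hypothetical. Students are given higher balances, but at a fixed balance they get a lower default log odds (coefficient \(-0.7\)). As a result, the coefficient on `student` is positive when `student` is the only predictor and negative once `balance` is included.

```r
set.seed(5)
n <- 10000
student <- rbinom(n, 1, 0.3)
# Hypothetical mechanism: students carry higher balances ...
balance <- pmax(0, rnorm(n, mean = 800 + 400 * student, sd = 400))
# ... but at a fixed balance they are LESS likely to default.
default <- rbinom(n, 1, plogis(-10 + 0.0055 * balance - 0.7 * student))

marginal <- glm(default ~ student, family = binomial)
adjusted <- glm(default ~ balance + student, family = binomial)

print(coef(marginal)["student"])  # positive: students default more overall
print(coef(adjusted)["student"])  # negative: they default less at a given balance
```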

Results: predicting credit card default

Fig. 16 Confounding in Default data

Using only balance

```r
library(ISLR)  # where the Default data is stored

summary(glm(default ~ balance,
            family = binomial, data = Default))
```

Using only student

```r
summary(glm(default ~ student,
            family = binomial, data = Default))
```

Using both balance and student

```r
summary(glm(default ~ balance + student,
            family = binomial, data = Default))
```

Using all 3 predictors

```r
summary(glm(default ~ balance + income + student,
            family = binomial, data = Default))
```

Multinomial logistic regression

Extension of logistic regression to more than 2 categories

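Suppose \(Y\) takes values in \(\{1,2,\dots,K\}\). Then we can use a linear model for the log odds against a baseline category (e.g. 1): for \(j \neq 1\),

\[\log\left[\frac{P(Y=j \mid X)}{P(Y=1 \mid X)}\right] = \beta_{0j} + \beta_{1j} X_1 + \dots + \beta_{pj} X_p.\]

In this case the slopes form a matrix of coefficients \(\beta \in \mathbb{R}^{p \times (K-1)}\), with one column per non-baseline category, plus \(K-1\) intercepts \(\beta_{0j}\).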
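The \(K-1\) log-odds equations determine all \(K\) class probabilities. Write \(\eta_j = \beta_{0j} + \beta_{1j}x_1 + \dots + \beta_{pj}x_p\). Then \(P(Y=1\mid x) = 1/(1+\sum_{j\neq 1} e^{\eta_j})\) and \(P(Y=j\mid x) = e^{\eta_j}/(1+\sum_{l\neq 1} e^{\eta_l})\). The minimal R sketch below uses hypothetical values of \(K=3\), \(p=2\), and the coefficients. Fitting such a model is usually left to a package, for example `multinom()` in the nnet package.

```r
# Hypothetical K = 3 classes, p = 2 predictors, baseline class 1.
intercepts <- c(0.5, -1.0)          # beta_{0j} for j = 2, 3
# p x (K - 1) slope matrix, filled column by column:
# column 1 = (beta_{12}, beta_{22}), column 2 = (beta_{13}, beta_{23})
B <- matrix(c(1.0, -0.5, 0.3, 2.0), nrow = 2)

# Hypothetical helper: class probabilities from the baseline-category model.
multinom_probs <- function(x, intercepts, B) {
  eta <- intercepts + drop(crossprod(B, x))  # log odds vs class 1, length K - 1
  denom <- 1 + sum(exp(eta))
  c(1 / denom, exp(eta) / denom)             # P(Y = 1 | x), ..., P(Y = K | x)
}

x <- c(0.4, -1.2)
probs <- multinom_probs(x, intercepts, B)
print(round(probs, 4))
```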

Some potential problems

The coefficients become unstable when there is collinearity. Furthermore, this affects the convergence of the fitting algorithm.

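When the classes are well separated, the coefficients become unstable. This is always the case when \(p\geq n-1\): for points in general position, some hyperplane separates any labeling of \(n \le p+1\) points. In this case, prediction error is low, but \(\hat{\beta}\) is very variable. Under perfect separation the likelihood keeps increasing as \(\|\beta\| \to \infty\), so the maximum likelihood estimate does not exist.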
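The effect of perfect separation is easy to see in a toy R example. The data below are hypothetical: every \(x \le 5\) has \(y=0\) and every \(x \ge 6\) has \(y=1\). `glm()` warns that fitted probabilities numerically 0 or 1 occurred, and it may also report non-convergence. The warnings are suppressed here only to keep the output short. The fit returns a very large slope with a huge standard error, while the fitted probabilities are essentially 0 and 1.

```r
x <- 1:10
y <- as.numeric(x > 5)  # hypothetical, perfectly separated at x = 5.5

# glm() warns about fitted probabilities of 0 or 1; suppressed for brevity.
fit <- suppressWarnings(glm(y ~ x, family = binomial))

print(coef(fit))                                  # very large slope
print(summary(fit)$coefficients[, "Std. Error"])  # huge standard errors
print(range(fitted(fit)))                         # essentially 0 and 1
```

The reported slope is wherever the iterations happened to stop rather than a meaningful estimate. This is why \(\hat\beta\) is so variable even though the classifier separates the training data perfectly.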