The Story

The Google driverless car is a project to develop technology that lets a car drive without input from a human. The project is currently being led by Stanford researcher Sebastian Thrun, a professor in the Stanford Artificial Intelligence Laboratory and co-inventor of Google Street View. Thrun's team at Stanford created the robotic vehicle Stanley, which won the 2005 DARPA Grand Challenge and its US$2 million prize from the United States Department of Defense.

In August 2012, the team announced that they had completed over 300,000 accident-free autonomous-driving miles (about 500,000 km), typically had about a dozen cars on the road at any given time, and were starting to test them with single drivers instead of in pairs. As of September 2012, four U.S. states had passed laws permitting driverless cars: Nevada, Florida, Texas and California [source: Wikipedia].

Stanley, the driverless car.

AI Techniques

Localization

In order to drive, the car must first know its location within its environment. However, no instrument can measure exactly where a driverless car is. Even the best GPS sensors have a margin of error of around 5 meters (think about what that means for a car, given that a standard lane is only around 3.5 meters wide). Finding where a robot is when it can't measure its location directly is called localization. Several AI techniques allow a driverless car to combine noisy sensor readings over time into a probabilistically sound estimate of where it is.
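To put numbers on the lane problem, here is a small worked calculation. It assumes (our assumption, not the source's) that GPS error is zero-mean Gaussian and that the ~5 m margin is one standard deviation, and it asks how often a single fix lands within half a lane width of a car centered in its lane. The helper name `prob_within` is hypothetical.

```python
import math


def prob_within(half_width_m, sigma_m):
    """P(|error| < half_width_m) for zero-mean Gaussian error with std sigma_m."""
    return math.erf(half_width_m / (sigma_m * math.sqrt(2.0)))


LANE_WIDTH_M = 3.5  # standard lane width, from the text
GPS_SIGMA_M = 5.0   # ASSUMPTION: treat the ~5 m margin of error as one standard deviation

# A fix is "in the right lane" if its error is under half a lane width either way
# (assuming the car drives in the center of its lane).
p = prob_within(LANE_WIDTH_M / 2, GPS_SIGMA_M)
print(f"Chance a single GPS fix falls inside the car's own lane: {p:.0%}")
```

Under these assumptions it prints about 27%, so a single raw fix would place the car outside its own lane most of the time; this is why the readings have to be combined over time rather than trusted one by one.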

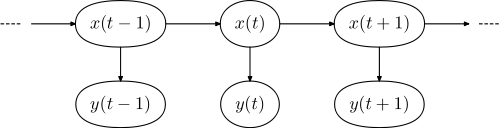

For localization, the underlying model is generally some form of Bayesian model, similar to a hidden Markov model, in which the state space of the unknown "location" variables is continuous. At each time point t there are two variables: the unknown location of the car, x(t), and the observations of the car's location from the sensor inputs at that time, y(t). The model assumes that x(t) is generated from x(t - 1) according to a probability distribution (the motion model) and that y(t) is generated from x(t) according to another probability distribution (the sensor model).

Figure 1: The Bayesian model for localization.
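The assumptions about x(t) and y(t) in the model above can be written out explicitly. The first line is the standard factorization of such a model over times 0 to T; the second is the recursive Bayes filter update for the belief about the car's location given all observations so far. The shorthand x(0:T) and the name bel are our notation, not the source's. A particle filter approximates the integral with samples, while a Kalman filter computes it exactly when everything is linear and Gaussian.

```latex
p\big(x(0{:}T),\, y(1{:}T)\big) = p\big(x(0)\big) \prod_{t=1}^{T} p\big(x(t) \mid x(t-1)\big)\, p\big(y(t) \mid x(t)\big)

\mathrm{bel}\big(x(t)\big) \;\propto\; p\big(y(t) \mid x(t)\big) \int p\big(x(t) \mid x(t-1)\big)\, \mathrm{bel}\big(x(t-1)\big)\, dx(t-1),
\qquad \mathrm{bel}\big(x(t)\big) = p\big(x(t) \mid y(1), \dots, y(t)\big)
```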

Stanley used a particle filter, a randomized algorithm that repeatedly samples possible scenarios, to come up with a best estimate of where it is (the variables x(t) in the diagram above).
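A minimal sketch of a basic particle filter, for a deliberately simplified one-dimensional version of the problem: a hypothetical car moves along a line at a known speed of 1 m per step with Gaussian motion noise, and a GPS-like sensor reports its position with Gaussian noise of 5 m (echoing the figure in the text). The function names and all numeric values are assumptions for illustration; a real car's filter tracks far more state than a single coordinate.

```python
import math
import random


def gaussian_pdf(x, mean, std):
    """Density of a 1D Gaussian; used here as the sensor model p(y(t) | x(t))."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def particle_filter_step(particles, control, observation, motion_std, sensor_std, rng):
    """One predict-weight-resample cycle of a basic particle filter."""
    # Predict: push every particle through the motion model p(x(t) | x(t-1)).
    moved = [p + control + rng.gauss(0.0, motion_std) for p in particles]
    # Weight: score each particle by how well it explains the observation.
    weights = [gaussian_pdf(observation, p, sensor_std) for p in moved]
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)  # every particle implausible: fall back to uniform
    # Resample: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def simulate(steps=50, n_particles=1000, seed=0):
    """Simulate a hypothetical car on a line; return (true position, estimate)."""
    rng = random.Random(seed)
    speed, motion_std, sensor_std = 1.0, 0.5, 5.0  # assumed values, in meters
    true_x = 0.0
    # The filter only knows the car starts somewhere in [-20, 20] m.
    particles = [rng.uniform(-20.0, 20.0) for _ in range(n_particles)]
    for _ in range(steps):
        true_x += speed + rng.gauss(0.0, motion_std)
        y = true_x + rng.gauss(0.0, sensor_std)  # noisy GPS-like reading
        particles = particle_filter_step(particles, speed, y, motion_std, sensor_std, rng)
    estimate = sum(particles) / len(particles)  # posterior mean as the best estimate
    return true_x, estimate


true_x, estimate = simulate()
print(f"true position {true_x:.2f} m, particle-filter estimate {estimate:.2f} m")
```

Resampling at every step keeps the sketch short; the Gaussian motion noise added in the predict step is what keeps the particle set from collapsing onto a few identical copies.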

Perception

Once a car knows where it is, its next job is to perceive and track objects it might collide with (for example, other cars or pedestrians). This problem has many similarities to localization: sensor readings of other objects are noisy, and we want to estimate where those objects are. Again, the underlying model is a hidden Markov model modified to allow continuous variables. The picture below shows how the variables are represented. At each time point there are two variables: the unknown location of the other object and the sensor observations of that object.

However, although a robot uses very similar underlying models for localizing itself and for perceiving others, the two problems are solved with different algorithms. Instead of a particle filter, perceiving other objects is handled with Kalman filters. Kalman filters make an additional assumption about the variables they track: the location variables are Gaussian (and evolve linearly, with Gaussian noise). This assumption simplifies the problem so that where the other cars are can be computed exactly, in closed form, which is much faster than sampling.
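A minimal sketch of a scalar Kalman filter, imagined here as tracking another car's position along the road. It assumes linear motion at a known speed with Gaussian noise and a Gaussian sensor, so the belief is always a Gaussian described by just a mean and a variance, and each step is a few lines of closed-form arithmetic instead of thousands of samples. The function names and numbers are hypothetical.

```python
import random


def kalman_step(mean, var, control, observation, motion_var, sensor_var):
    """One predict-update cycle of a scalar Kalman filter."""
    # Predict: shift the Gaussian belief by the known motion and widen it by the motion noise.
    pred_mean = mean + control
    pred_var = var + motion_var
    # Update: the Kalman gain weighs the prediction against the new observation.
    gain = pred_var / (pred_var + sensor_var)
    new_mean = pred_mean + gain * (observation - pred_mean)
    new_var = (1.0 - gain) * pred_var
    return new_mean, new_var


def track(observations, speed, motion_var, sensor_var, mean0=0.0, var0=100.0):
    """Run the filter over a sequence of readings; return the final (mean, variance)."""
    mean, var = mean0, var0
    for y in observations:
        mean, var = kalman_step(mean, var, speed, y, motion_var, sensor_var)
    return mean, var


# Usage: simulate noisy readings of another car moving about 1 m per step.
rng = random.Random(1)
other_x, readings = 0.0, []
for _ in range(50):
    other_x += 1.0 + rng.gauss(0.0, 0.5)
    readings.append(other_x + rng.gauss(0.0, 5.0))
mean, var = track(readings, speed=1.0, motion_var=0.25, sensor_var=25.0)
print(f"true {other_x:.2f} m, estimate {mean:.2f} m (std {var ** 0.5:.2f} m)")
```

The variance update never looks at the observation, so with these noise settings the filter's uncertainty settles to a fixed value of about 2.38 m² (a standard deviation of roughly 1.5 m) no matter what the sensor reports.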

Planning

Get Involved

If you want to work on similar problems, you should concentrate on...