Research Discussions

The following log contains entries starting several months prior to the first day of class, involving colleagues at Brown, Google and Stanford, invited speakers, collaborators, and technical consultants. Each entry contains a mix of technical notes, references and short tutorials on background topics that students may find useful during the course. Entries after the start of class include notes on class discussions, technical supplements and additional references. The entries are listed in reverse chronological order with a bibliography and footnotes at the end.

Class Discussions

Welcome to the 2021 class discussion list. Preparatory notes posted prior to the first day of classes are available here. Introductory lecture material for the first day of classes is available here, a sample of final project suggestions here and the calendars of invited talks and discussion lists from previous years are available in the CS379C archives. Since the class content for this year builds on that of last year, you may find it useful to search the material from the 2020 class discussions available here.

Following the 2020 class, the course instructor and teaching assistant met with several interested students on a regular basis to discuss more advanced topics and projects than those covered in 2020, with the aim of integrating these into the 2021 version of the course. The field is progressing at an incredible rate and there have been several advances in the intervening months that warrant more than a passing mention. We chronicled our weekly discussions and related project ideas in an appendix to the 2020 class discussion list that you are welcome to take a look at here if you are interested.

July 15, 2021

%%% Thu Jul 15 05:45:29 PDT 2021

Channeling Your Inner Turing Machine

Following up on ideas introduced in the July 11 entry, I returned to traditional concepts from computer science in an effort to explain how human beings become so adept at handling hierarchies and recursion. The main insight is that humans and conventional computers simply flatten hierarchies and serialize recursive structures.
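As a minimal sketch of what "flattening a hierarchy" means computationally, assume a hypothetical plan representation in which a plan is either a primitive action (a string) or a named list of subplans. The function below serializes the recursive plan tree into a flat action sequence using an explicit stack, which is how a conventional machine executes recursive structure without any recursion in the hardware. The name `serialize` and the sandwich plan are illustrative only.

```python
from typing import List, Tuple, Union

# Hypothetical representation: a plan is a primitive action (str) or a
# (name, [subplans]) pair. Nothing here is taken from the log entry itself.
Plan = Union[str, Tuple[str, list]]


def serialize(plan: Plan) -> List[str]:
    """Flatten a nested plan into a serial sequence of primitive actions,
    replacing recursion with an explicit stack."""
    actions: List[str] = []
    stack: List[Plan] = [plan]
    while stack:
        node = stack.pop()
        if isinstance(node, str):
            actions.append(node)          # primitive action: emit it
        else:
            _, subplans = node
            # push children in reverse so the first subplan runs first
            stack.extend(reversed(subplans))
    return actions


sandwich = ("make_sandwich", [
    ("get_ingredients", ["open_pantry", "take_bread", "take_peanut_butter"]),
    ("assemble", ["spread", "close_sandwich"]),
    "clean_up",
])
print(serialize(sandwich))
```

The stack holds exactly the "pending continuations" that a recursive call would otherwise keep on the call stack, which is the sense in which the recursion has been serialized.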

The primate brain is Turing complete, but that capacity is difficult to exploit for much of anything besides writing and running motor programs. During development, we create a virtual machine on top of our innate computational substrate that enables us to read, write and run programs in the form of stories communicated by natural language.

In acquiring a natural language, you learn how to serialize your thoughts and express complicated narratives with many twists and turns. You become adept at keeping track of multiple threads in a story involving different characters, remembering who said what, identifying the referent of pronouns, and handling recurrent structure.

Children learn to attribute goals to the agents in stories. They learn that agents construct plans to achieve goals, often have multiple, possibly conflicting goals and multiple plans for achieving them, and that goals have a natural hierarchy and dependency structure in which one goal's postconditions satisfy another goal's preconditions.
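The dependency structure described above, in which one goal's postconditions satisfy another goal's preconditions, can be sketched as a directed graph whose topological order yields an executable sequence of goals. This is a minimal illustration assuming hypothetical goal names and using Python's standard-library `graphlib` module (Python 3.9+); it is not a claim about how children actually acquire this structure.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical goals: each goal maps to the goals whose postconditions
# establish its preconditions (its predecessors in the graph).
goal_deps: Dict[str, Set[str]] = {
    "eat_lunch": {"make_sandwich"},
    "make_sandwich": {"get_bread", "get_peanut_butter"},
    "get_bread": {"reach_kitchen"},
    "get_peanut_butter": {"reach_kitchen"},
    "reach_kitchen": set(),
}


def plan_order(deps: Dict[str, Set[str]]) -> List[str]:
    """Serialize a goal dependency graph so that every goal comes after the
    goals that satisfy its preconditions."""
    return list(TopologicalSorter(deps).static_order())


order = plan_order(goal_deps)
print(order)
```

Goals that are independent of one another (here `get_bread` and `get_peanut_butter`) may appear in either order. Conflicting goals that each require the other's postconditions form a cycle, for which `static_order()` raises `graphlib.CycleError`.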

By the age of five, most children have the prerequisites for learning a programming language. Their first decade is dedicated to developing their latent von Neumann machine and learning a series of domain-specific languages (DSLs) culminating in a language facility capable of interpreting any program expressed in natural language.

The maturation of your biological computing machinery is mirrored by the requirements of the DSLs that you learn as a part of development, starting with simple motor plans, graduating to more complicated recipes for everything from climbing the stairs in your home to preparing a peanut butter sandwich for your lunch.

The earliest DSLs correspond to the machine language for your body, and learning to write programs in that language amounts to experimenting with sequences of primitive actions guided by an exploratory instinct and the reward of novel experience. Subsequent learning benefits from human tutors following simple training protocols.

The neural substrate is highly plastic allowing training to proceed in sync with the complexity of the concepts to be learned. The first priority is to learn what essentially constitutes the firmware of your brain. Once established, you never install a full update of that firmware, only patches intended to fix bugs introduced during development.

During early development, a good tutor points out problems and corrects mistakes. This supervision is relaxed over time, with the burden of identifying and correcting problems shifting from tutor to student. It is because of this early advantage that learning occurs so quickly, but the child must learn the logical-thinking skills required for identifying and repairing bugs largely on its own.

Figure 1: Two figures from Baars et al [14] are reproduced here for the reader's convenience in understanding the discussion in the main text. The paper is published in Frontiers in Psychology, an open-access journal, and is available here for download.

Cell Assemblies & Prediction Machines

You can think of the time between infancy and early adulthood as primarily dedicated to acquiring a general-purpose vocabulary grounded in the physical environment that we directly experience throughout our extended development, along with a set of skills for using that vocabulary to express useful knowledge about how best to exploit the affordances that the environment offers. There is no mystery in how we learn novel skills, including skills that involve abstract reasoning. The mammalian brain, and the human brain in particular, is a prediction machine; our brains approach every problem as a problem of prediction [102, 45, 207].

As we learn and explore the environment, we constantly encounter new situations that are similar enough to previously encountered ones that we can describe and take advantage of them by adapting existing skills. Rather than allocating scarce neural resources, we simply reuse our existing knowledge and skills where possible and supply patches to handle the differences. For the most part, all of the learning from then on is essentially one-shot or zero-shot imitation learning. To facilitate such learning, new information is layered on existing cortical tissue by learning to create, combine, and alter (reprogram) cell assemblies that are associated with abstract concepts and defined by the enabling of inhibitory and excitatory neurons controlled by circuits in the hippocampus and prefrontal cortex.

This implies that no new axons, dendrites, or synapses are formed and that the activation of neurons in semantic memory in the inferior parietal and temporal lobes is determined by altering the programming of inhibitory neurons in layer five and excitatory neurons in layers two through six using some variant of Hebbian learning. It is hypothesized that the hippocampus and prefrontal cortex play a central role in the processes by which cell assemblies are created, activated, altered, and maintained. Elsewhere in this log, we've discussed the role of the hippocampus and the notion of a global neuronal workspace spanning the neocortex that facilitates both conscious and unconscious attention.

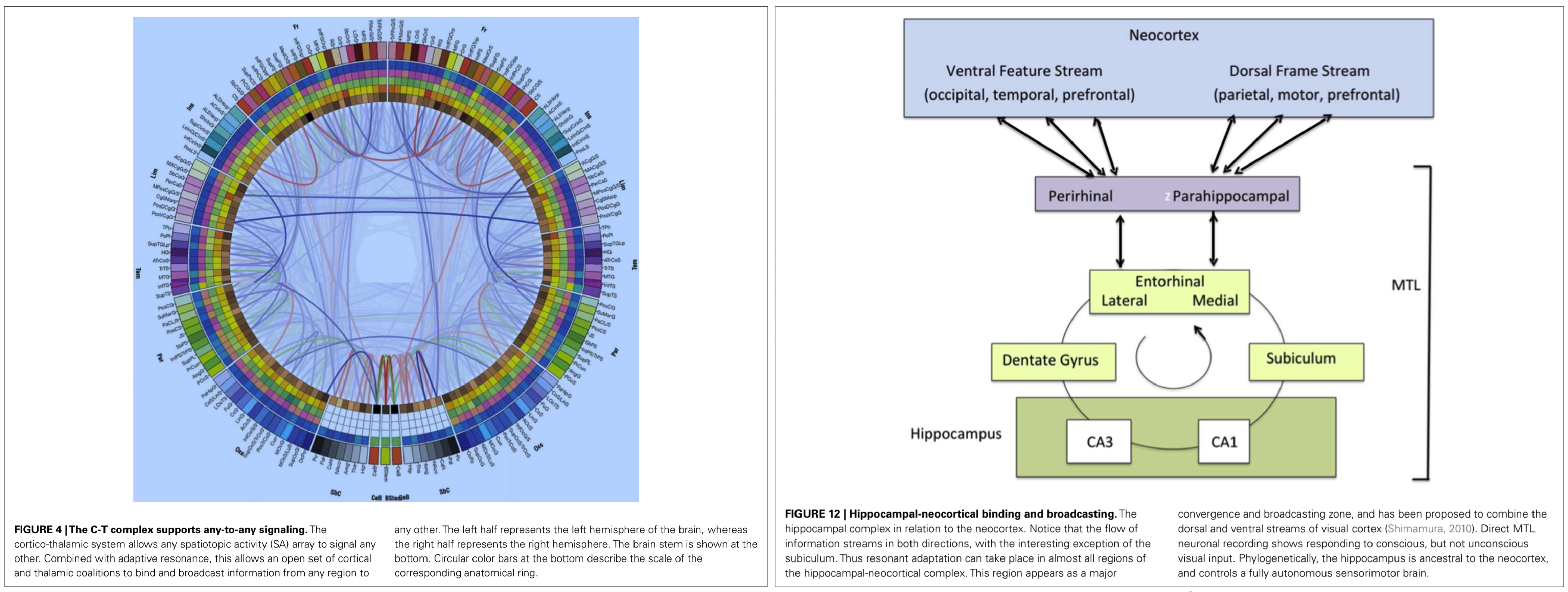

Figure 1 shows two figures from Baars et al [14] illustrating, on the left, the pathways between neocortical regions comprising the global workspace and, on the right, the role of the hippocampus and prefrontal cortex in supporting the transfer of information between regions to facilitate neural computations. See Modha and Singh [183] on the graph structure of the network shown on the left, and Mashour et al [175] for details regarding how cell assemblies are activated and give rise to cascades of activation.

For additional background on the organization of the underlying neural structure shown in the graphic on the left in Figure 1, Binder and Desai [39] summarize progress in understanding the structural-functional relationships at the level of cortical areas, building on the work of Korbinian Brodmann [44], and Amunts and Zilles [11] review what is known about the structural-functional relationships underlying the cytoarchitectural divisions of the brain. See relevant class notes here and here.

Computational Modeling Technologies

Papadimitriou et al [193] describe a mathematical model of neural computation based on creating, activating, and performing computations on cell assemblies. They define a simple abstraction for representing assemblies and provide a repertoire of operations that can be performed on them. Using a formal model of the brain intended to capture cognitive phenomena in the association cortex, the authors describe a set of experiments demonstrating the operations performing computations and prove that, under appropriate assumptions, their model can perform arbitrary computations.
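A rough sketch of the projection operation, written in the spirit of the assembly model of Papadimitriou et al [193] rather than as their implementation: a fixed set of k stimulus neurons fires repeatedly into an area of n neurons wired as a random G(n, p) graph; on each round only the k most strongly driven neurons fire (the k-cap), and synapses from neurons that fired onto neurons that fire next are multiplied by (1 + beta), a Hebbian rule. The parameter values are arbitrary assumptions, weights are never normalized, and the function name `project` is hypothetical.

```python
import random
from typing import Dict, FrozenSet, List


def project(n: int = 1000, k: int = 30, p: float = 0.1, beta: float = 0.5,
            rounds: int = 50, seed: int = 0) -> List[FrozenSet[int]]:
    """Toy projection of a fixed stimulus into one brain area.
    Returns the set of firing area neurons on every round."""
    rng = random.Random(seed)
    # Random G(n, p) wiring stored sparsely as {target neuron: weight}.
    stim_w: List[Dict[int, float]] = [
        {j: 1.0 for j in range(n) if rng.random() < p} for _ in range(k)]
    rec_w: List[Dict[int, float]] = [
        {j: 1.0 for j in range(n) if j != i and rng.random() < p}
        for i in range(n)]
    winners: List[int] = []          # area neurons that fired last round
    history: List[FrozenSet[int]] = []
    for _ in range(rounds):
        drive = [0.0] * n
        for syn in stim_w:           # the k stimulus neurons fire every round
            for j, w in syn.items():
                drive[j] += w
        for i in winners:            # recurrent input from last round's winners
            for j, w in rec_w[i].items():
                drive[j] += w
        # k-cap: only the k most strongly driven neurons fire
        new = sorted(range(n), key=lambda j: (-drive[j], j))[:k]
        new_set = set(new)
        # Hebbian plasticity: strengthen synapses from neurons that just fired
        # onto the neurons that fire now
        for syn in stim_w:
            for j in syn.keys() & new_set:
                syn[j] *= 1.0 + beta
        for i in winners:
            for j in rec_w[i].keys() & new_set:
                rec_w[i][j] *= 1.0 + beta
        winners = new
        history.append(frozenset(new))
    return history


history = project()
print(len(history[-1]), history[-1] == history[-2])
```

Once the same k neurons win two rounds in a row, their drive keeps growing while everyone else's stays put, so the winning set becomes a stable assembly representing the stimulus.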

Rolls et al [215, 153] present a quantitative computational theory of the operation of the hippocampus as an episodic memory system. "The CA3 system operates as a single attractor or auto-association network (1) to enable rapid one-trial associations between any spatial location (place in rodents or spatial view in primates) and an object or reward and (2) to provide for completion of the whole memory during recall from any part. The theory is extended to associations between time and object or reward to implement temporal order memory, which is also important in episodic memory."
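As a toy illustration of the two CA3 properties in the quoted passage, one-trial storage and completion of a whole memory from a part, the sketch below uses a classical binary Hopfield network with Hebbian outer-product weights. This is our simplification, not the Rolls et al model: patterns are random ±1 vectors, missing units in the cue are set to 0, and the sizes are arbitrary choices that keep the load well below the network's capacity.

```python
import random
from typing import List


def store(patterns: List[List[int]]) -> List[List[float]]:
    """One-trial Hebbian storage: W = (1/N) * sum of outer products, zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for xi in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += xi[i] * xi[j] / n
    return w


def recall(w: List[List[float]], cue: List[int], sweeps: int = 5) -> List[int]:
    """Pattern completion by asynchronous sign updates; 0 marks a missing unit."""
    s = list(cue)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s


rng = random.Random(1)
N, P = 100, 5                          # load P/N = 0.05
patterns = [[rng.choice((-1, 1)) for _ in range(N)] for _ in range(P)]
W = store(patterns)
cue = patterns[0][:50] + [0] * 50      # present only the first half of memory 0
restored = recall(W, cue)
print(sum(a == b for a, b in zip(restored, patterns[0])), "of", N, "units match")
```

Each pattern is stored in a single pass over the data, and recall is driven entirely by the recurrent weights, which is the sense in which such a network can complete a memory "from any part".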

Ramsauer et al [206] show that the attention mechanism in the transformer model [235] is the update rule of a modern Hopfield network [159] with continuous states. This new Hopfield network can store exponentially (with the dimension) many patterns, converges with one update and has exponentially small retrieval errors [88]. Seidl et al [222] demonstrate that modern Hopfield networks are capable of few- and zero-shot prediction on a challenging prediction problem related to drug discovery that has interesting analogs in other application areas.
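The correspondence can be sketched in a few lines: one update of a continuous modern Hopfield network computes new_state = X softmax(beta * X^T query), where the stored patterns are the columns of X, and this is single-query attention with keys and values both equal to X. The sketch below is a minimal pure-Python illustration under assumed sizes (64-dimensional ±1 patterns, 10 of them, 10 corrupted components); beta plays the role of an inverse temperature (transformers use 1/sqrt(d_k)), and larger values sharpen retrieval.

```python
import math
import random
from typing import List


def softmax(z: List[float]) -> List[float]:
    m = max(z)                                  # subtract max for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def hopfield_update(patterns: List[List[float]], query: List[float],
                    beta: float) -> List[float]:
    """One update of a continuous modern Hopfield network:
    new_state = X softmax(beta * X^T query), stored patterns = columns of X."""
    scores = [beta * sum(x_i * q_i for x_i, q_i in zip(x, query)) for x in patterns]
    weights = softmax(scores)
    return [sum(w * x[i] for w, x in zip(weights, patterns)) for i in range(len(query))]


rng = random.Random(0)
d, n_patterns = 64, 10
patterns = [[rng.choice((-1.0, 1.0)) for _ in range(d)] for _ in range(n_patterns)]
query = list(patterns[3])
for i in rng.sample(range(d), 10):              # corrupt 10 of the 64 components
    query[i] = -query[i]
retrieved = hopfield_update(patterns, query, beta=1.0)
print(max(abs(r - x) for r, x in zip(retrieved, patterns[3])))
```

Because the corrupted query is far more similar to pattern 3 than to any other stored pattern, the softmax puts almost all of its weight on pattern 3 and a single update recovers it. With beta = 0, by contrast, the update returns the plain average of all stored patterns.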

Miscellaneous Loose Ends: The three modeling technologies in the previous subsection represent a sample of potential algorithmic solutions to the computational problems involved in implementing a complementary learning system that roughly models the hippocampus and supports a scalable approach to rapid learning based on Hebbian cell assemblies. We plan to resurrect this theme in designing the syllabus for CS379C in the Spring of 2022. The rest of the discussion list for this year includes many entries exploring alternative solutions, some of which we'll return to next year. You can find BibTeX references, including abstracts, for several of the papers that were useful in compiling the previous subsection here¹.

July 11, 2021

%%% Sun Jul 11 04:36:58 PDT 2021

Complementary Learning Models

This poster summarizes and illustrates the conceptual and architectural components of the hierarchical complementary learning system (CLS) [160] model I've been working on over the last three months. By way of introduction, the following list briefly summarizes the six subcomponents / talking points labeled A through F in the poster:

A provides two views of the hippocampal formation (HPC): a simplified anatomical rendering on the left and a schematic block diagram on the right illustrating the primary information processing networks in the hippocampus along with their interconnecting pathways – see Figure 7 in [77] for more detail. C is the analogous description for the basal ganglia (BG) – see Figure 6 in [77] for more detail.

B is a rendering of the PBWM model – prefrontal-cortex (PFC) + basal-ganglia (BG) + working memory (WM) – extended to include the hippocampus (HPC) as the basis for complementary learning. The diagram illustrates the role of the HPC in influencing action selection by supplementing the information the BG obtains from posterior cortex and assisting the PFC by suggesting relevant episodic memories – see O'Reilly and Jilk [189] and Huang et al [142].

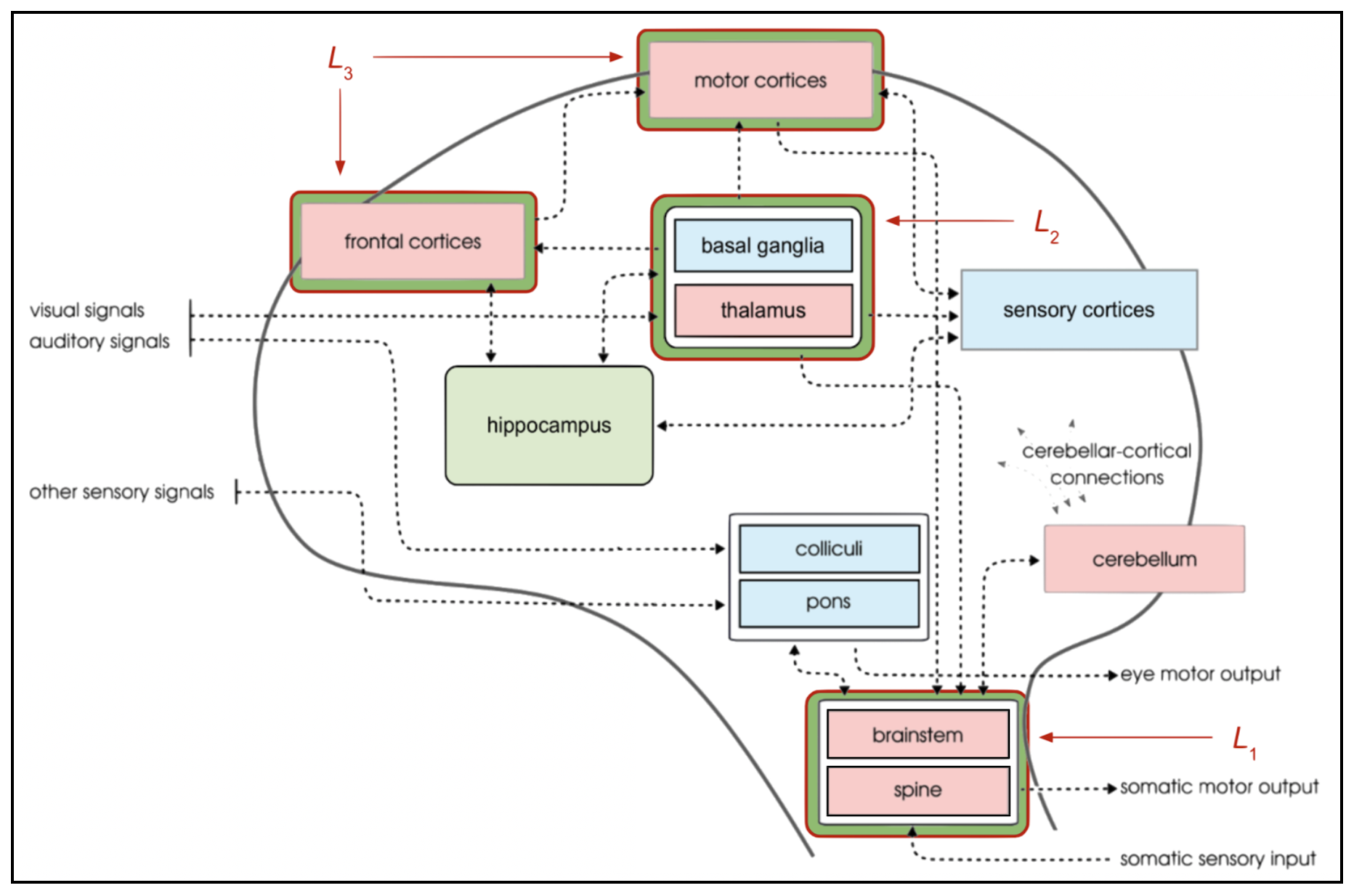

D is a modification of Box 2 in Merel et al [177] highlighting in forest green the three structures that roughly align with levels L1, L2, and L3 in the current hierarchical model that Yash has been working on and Juliette is using as the basis for designing the curriculum training protocol – see Figure 2 below. I've also made changes to incorporate the hippocampal formation in accord with the PBWM + HPC model in B.

E shows a recent model of the hippocampus that includes pathways by which the HPC can alter the activity of circuits in frontal and posterior cortex – see Kleinfeld et al [154] for details. This model is one of several attempting to understand how the four subregions – CA1, CA2, CA3, and CA4 – combine to support capabilities beyond the traditional spatial reasoning tasks that are the subject of most mouse studies.

Figure 2: A modification of Box 2 in Merel et al [177] highlighting in forest green the three cortical and subcortical structures that roughly align with levels L1, L2, and L3 in the hierarchical model described in Figure 11, and adding the hippocampal formation as providing a basis for complementary learning.

I revised the original cognitive version of Box 2 in Merel et al [177] to include the hippocampal formation and the reciprocal connections predicted in the O'Reilly et al CLS extension of PBWM [142, 189] (B) and Figure 1 in Kleinfeld et al [154] (E). We might want to feature the revised version (D) as an inset in the paper highlighting the differences between our model and that of Merel et al. Much of the inspiration for the design of this model derives from the papers reviewed in the June 22 entry in this discussion log.

Hippocampal Representations

Jessica Robin and Morris Moscovitch [214] argue that the hippocampus is responsible for transforming detail-rich representations into gist-like and schematic representations based on functional differentiation observed in the circuits along the longitudinal-axis of the hippocampus, and its functional connectivity to related posterior and anterior neocortical structures, especially the ventromedial prefrontal cortex (vmPFC) – see Ghosh and Gilboa [111] for detail regarding the use of the term "schema" in cognitive neuroscience and the June 22 entry in the class discussions for more detail regarding "gist-like" representations.

Vanessa Ghosh and Asaf Gilboa [111] argue that the necessary features of schemas are (1) an associative network structure, (2) a basis in multiple episodes, (3) a lack of unit detail, and (4) adaptability, and that optional features include (5) chronological relationships, (6) hierarchical organization, (7) cross-connectivity, and (8) embedded response options. In Table 2 (Page 111) they attempt to identify the neural correlates of different schema-related functions. Unfortunately, the material is highly speculative and provides little insight one might use to design artificial neural correlates.

CA1 and CA3 are at the poles of this longitudinal axis, with the latter often characterized as an associative network and Hopfield networks suggested as a potential artificial network architecture. The traditional functional account [153] casts the dentate gyrus as performing pattern separation followed by CA3 performing pattern completion – operations that could ostensibly be of use in creating and adapting schematic representations of the sort envisioned above. This narrow perspective completely ignores the roles of CA2 and CA4, which are of increasing interest due to improved recording technology [93, 62].
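The pattern-separation half of the traditional account can be illustrated with a toy expansion-and-sparsification step: a random projection into a much larger layer followed by k-winners-take-all, which maps two similar input patterns to output codes that overlap less than the inputs do. The Gaussian weights, layer sizes, and sparsity levels are assumptions chosen for illustration, not estimates of dentate gyrus anatomy, and `dg_code` is a hypothetical name.

```python
import random
from typing import List, Set


def dg_code(x: List[int], weights: List[List[float]], k: int) -> Set[int]:
    """Expansion recoding followed by k-winners-take-all (a stand-in for
    sparse granule-cell firing under strong feedback inhibition)."""
    drive = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return set(sorted(range(len(drive)), key=lambda j: -drive[j])[:k])


def overlap(a: Set[int], b: Set[int], k: int) -> float:
    """Fraction of the k active units shared by two codes."""
    return len(a & b) / k


rng = random.Random(0)
n_in, n_out, k_in, k_out = 100, 2000, 20, 100
weights = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]

a = [1 if i < 20 else 0 for i in range(n_in)]                  # units 0..19 active
b = [1 if i < 16 or 20 <= i < 24 else 0 for i in range(n_in)]  # shares 16 of 20
in_overlap = sum(x & y for x, y in zip(a, b)) / k_in
out_overlap = overlap(dg_code(a, weights, k_out), dg_code(b, weights, k_out), k_out)
print(in_overlap, out_overlap)
```

The input overlap is 0.8 by construction; the output overlap depends on the random weights but is expected to be noticeably lower, which is what makes the downstream CA3 memories easier to keep apart.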

Architectural Considerations

The challenge here is to explain how the apprentice might exploit its episodic memory to efficiently "program" the prefrontal cortex and the basal ganglia to learn new tasks from a single demonstration, generalizing on the single exposure while not inflicting collateral damage – catastrophic interference – on previously learned tasks.

The prefrontal cortex and the basal ganglia have access to the same structured sensory, somatosensory, and motor state information gathered from throughout the cortex and made available in the thalamus. The prefrontal cortex and the basal ganglia share responsibility in selecting and orchestrating behavior – both cognitive and physical activity – by shaping the context for performing such activity.

In addition, the prefrontal cortex retains selected information in working memory, thereby gaining an advantage in performing its executive functions. In principle, both the prefrontal cortex and the hippocampus could control the behavior of the basal ganglia by altering the patterns of activity in the thalamus that the basal ganglia rely upon for action selection.

If we assume that the current summary of activity throughout the neocortex is sufficient to reconstruct the cognitive and physical action taken in response to that summary, then all we have to store is a representation that provides a suitably compressed account of that activity, and we know that the input to the hippocampus provides the information necessary to construct such a representation.

It seems reasonable to suggest that the representations stored in the activity patterns of CA1 neurons are sufficient to recover those aspects of the original patterns needed to recreate the originally elicited cognitive and physical behavior, even if the current state at the time of their recreation differs in nonessential ways from the original state at the time of their encoding in the hippocampus.

This also assumes that the hippocampus is able to infer the essential features of the state that enable the application of a specified activity as well as nonessential features that when present are also relevant to the elicitation and execution of the specified activity. There is certainly a good deal of well-situated hippocampal circuitry, e.g., in CA2 and CA4 that could be utilized to identify such features.

The above observations suggest that the ability to identify the essential and optional features prerequisite for exploiting a given state supports the encoding of gist-like representations that might be implemented as relational models [246, 216, 27], and that these representations, together with the auto-associative properties of CA3 neurons [153], are capable of encoding schema-based representations suitable to meet the challenge mentioned earlier.

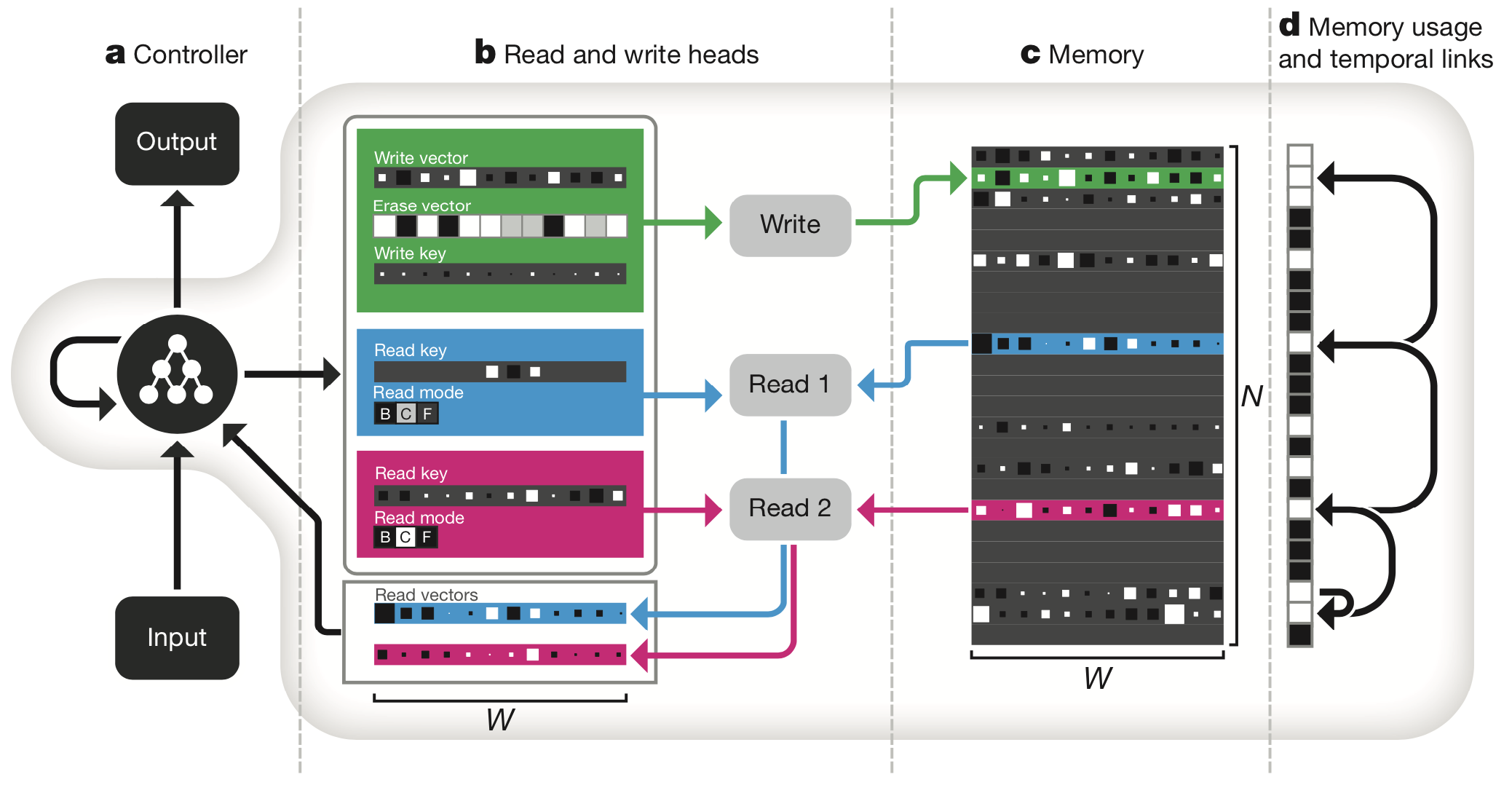

The earlier model from Dean et al [77] – labeled F in the poster – captures some, but not all, of the intuitions discussed above. It would be more plausible to model the LTM DNC as a single network composed of dynamically separated cell assemblies [193] rather than as a collection of key / value pairs – keys = contexts and values = programs – as in Reed and de Freitas [212]. We pursue the idea somewhat further in the July 15 entry in this log.

Miscellaneous Loose Ends: Kesner and Rolls [153, 215] provide a summary of current computational models of hippocampal function – including detail concerning CA1 and CA3, along with a review of the evidence supporting their adoption. The three inset text boxes in [77] – written by Chaofei Fan and Meg Sano – are relevant to the discussion in this log entry: Box A: Pattern Separation, Completion, Integration, Box B: Replaying Experience, Consolidating Memory, and Box C: Hierarchy, Abstraction and Executive Control.

June 22, 2021

%%% Tue Jun 22 14:04:51 PDT 2021

This entry follows up on the abbreviated discussion concerning the function of the hippocampal formation in the previous entry. Additional research revealed a gap in my understanding of hippocampal function. In particular, I overlooked research on human hippocampal function based on evidence gleaned from behavioral, lesion and fMRI studies of human subjects as well as non-human animal studies specifically relating to observed functional variation expressed along the longitudinal axis of the adult human hippocampus. I've compiled a collection of relevant papers available here², and a sample of representative excerpts is included below:

Robin and Moscovitch [214] – argue that memory transformation from detail-rich representations to gist-like and schematic representation is accompanied by corresponding changes in their neural representations. These changes can be captured by a model based on functional differentiation along the long-axis of the hippocampus, and its functional connectivity to related posterior and anterior neocortical structures, especially the ventromedial prefrontal cortex (vmPFC). In particular, we propose that perceptually detailed, highly specific representations are mediated by the posterior hippocampus and neocortex, gist-like representations by the anterior hippocampus, and schematic representations by vmPFC. These representations can co-exist and the degree to which each is utilized is determined by its availability and by task demands.

Sekeres et al [223] – The posterior hippocampus, connected to perceptual and spatial representational systems in posterior neocortex, supports fine, perceptually rich, local details of memories; the anterior hippocampus, connected to conceptual systems in anterior neocortex, supports coarse, global representations that constitute the gist of a memory. Notable among the anterior neocortical structures is the medial prefrontal cortex (mPFC) which supports representation of schemas that code for common aspects of memories across different episodes. Linking the aHPC with mPFC is the entorhinal cortex (EC) which conveys information needed for the interaction/translation between gist and schemas. Thus, the long axis of the hippocampus, mPFC and EC provide the representational gradient, from fine to coarse and from perceptual to conceptual, that can implement processes implicated in memory transformation.

Schacter et al [220] – Recent work has revealed striking similarities between remembering the past and imagining or simulating the future, including the finding that a common brain network underlies both memory and imagination. Here, we discuss a number of key points that have emerged during recent years, focusing in particular on the importance of distinguishing between temporal and nontemporal factors in analyses of memory and imagination, the nature of differences between remembering the past and imagining the future, the identification of component processes that comprise the default network supporting memory-based simulations, and the finding that this network can couple flexibly with other networks to support complex goal-directed simulations.

Grady [114] – These three approaches provided converging evidence that not only are cognitive processes differently distributed along the hippocampal axis, but there also are distinct areas coactivated and functionally connected with the anterior and posterior segments. This anterior/posterior distinction involving multiple cognitive domains is consistent with the animal literature and provides strong support from fMRI for the idea of functional dissociations across the long axis of the hippocampus.

Vogel et al [236] – We find frontal and anterior temporal regions involved in social and motivational behaviors, and more functionally connected to the anterior hippocampus, to be clearly differentiated from posterior parieto-occipital regions involved in visuospatial cognition and more functionally connected to the posterior hippocampus. These findings place the human hippocampus at the interface of two major brain systems defined by a single molecular gradient.

Hassabis and Maguire [125] – Demis Hassabis and Eleanor Maguire suggest two possible roles for hippocampal memory: First, the hippocampus may be the initial location for the memory index [173], which reinstantiates the active set of contextual details and might later be consolidated out of the hippocampus. Second, the hippocampus may have another role as an online integrator supporting the binding of these reactivated components into a coherent whole to facilitate the rich recollection of a past episodic memory, regardless of its age. From the latter, they hypothesize that such a function would also be of great use for predicting the future, imagination and navigation.

Cer and O'Reilly [60] – [p]ostulate that different regions of the brain are specialized to provide solutions to particular computational problems. The posterior cortex employs coarse-coded distributed representations of low-order conjunctions to resolve binding ambiguities, while also supporting systematic generalization to novel stimuli and situations. [T]his cognitive architecture represents a more plausible framework for understanding binding than temporal synchrony approaches.

Eichenbaum [95] – The prefrontal cortex (PFC) and hippocampus support complementary functions in episodic memory. Considerable evidence indicates that the PFC and hippocampus become coupled via oscillatory synchrony that reflects bidirectional flow of information. Furthermore, newer studies have revealed specific mechanisms whereby neural representations in the PFC and hippocampus are mediated through direct connections or through intermediary regions. – a claim that if true need not undermine the explanatory value of Cer and O'Reilly's computational model.

Miscellaneous Loose Ends: I added several papers to the related work mentioned earlier. The newly added papers were authored by – different subsets of – Demis Hassabis, Dharshan Kumaran, Eleanor Maguire, and Daniel Schacter for their constructive model [124, 125, 220] of hippocampal episodic memory that inspired the papers from DeepMind on imagination-based planning and optimization [122, 194]. There is a lot of overlap between these papers and the work of Morris Moscovitch.

In terms of implementing a flexible memory system to support one- and zero-shot learning of the sort we envision for the programmer's apprentice, I suggest you start by reading the 2017 paper by Jessica Robin and Morris Moscovitch [214]. It is worth reviewing the Nature paper by Graves et al [115] on the functions supported by Differentiable Neural Computers to contrast them with our requirements for the programmer's apprentice – a synopsis is provided here³.

Given an observed state-action summary, ⟨s_t, a_t, r_t, s_{t+1}⟩, the apprentice has to both generalize the context for acting by adjusting the observed (posterior/perceptual) state s_t, as sketched in an earlier entry in this log, and modify the program to be executed in that context by altering the control (anterior/prefrontal) circuits a_t, as discussed in the previous entry. Interaction and translation between gist and schema representations along the gradient described in Sekeres et al [223] may provide implementation insight.

June 17, 2021

%%% Thu Jun 17 10:43:50 PDT 2021

I spent the last two weeks looking into the literature in an attempt to better understand the design choices made in the implementation described in Huang et al [142]. It appears to provide a practical foundation for building agents like the programmer's apprentice, and, where it falls short, it can be adapted without deviating too far from the basic skeleton outlined in Huang et al.

As a check on my thinking, I asked Randy O'Reilly for feedback concerning the following design principles that I believe roughly account for our current understanding of the relevant biology as laid out in O'Reilly et al [191] and in our discussions with Randy over the last two years concerning his Prefrontal cortex Basal ganglia Working Memory (PBWM) model of executive control.

If you're not familiar with PBWM, check out the transcript of the discussion we had with Randy in 2020 during which we talked about adapting the model for the programmer's apprentice, and the follow-up with Randy and Michael Frank in which we discussed the sharing of responsibility for action selection and executive control between basal ganglia and prefrontal cortex.

Human brains are functionally organized along the anterior-posterior axis with the back of the brain largely responsible for sensory processing and the front responsible for action selection and execution including actions that perform abstract reasoning tasks.

There appear to be rostrocaudal distinctions in frontal cortex activity that reflect a hierarchical organization, whereby anterior frontal regions influence processing by posterior frontal regions during the realization of abstract action goals as motor acts.

The basal ganglia take as input a compressed summary of activity collected from throughout both the motor and sensory cortex and produce an annotated version that serves as a context for action selection – both concrete and abstract – in the frontal cortex.

This basal-ganglia-generated context is modified by feedback from the executive regions of the frontal cortex in which the rostrocaudal axis of the PFC supports a control hierarchy so posterior-to-anterior regions of PFC exert progressively more abstract, higher-order control.

The hippocampal formation and the circuits in the prefrontal cortex work together in order to exploit prior experience in current circumstances as well as assimilate new memories into pre-existing networks of knowledge in anticipation of their use in future circumstances.

Relevant design choices that follow from the above include the assumption that the frontal cortex is responsible for performing both concrete motor and abstract cognitive processing and control. Performing sequences of actions is facilitated by PFC access to both the input and output of such actions whether through the perception of the consequences of acting in the external environment or by way of the extensive reciprocal white matter tracts connecting circuits in the frontal cortex with circuits scattered throughout the rest of the cortex.

The idea that the posterior cortex is representational / perception-oriented and the anterior cortex is computational / action-oriented is obviously misleading given that the hallmark of biological computing is that representation and computation are collocated. Perhaps it might be more useful to say that the posterior cortex is primarily responsible for precipitating computations that are dictated by circumstances/signals that are external to the posterior cortex whereas the anterior cortex is primarily responsible for precipitating computations that are self-initiated including computations precipitated by previous self-initiated computations, i.e., recursively.
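To make the PBWM division of labor discussed in this entry concrete, here is a minimal sketch of gated working memory on the 1-2-AX task, a hierarchical task commonly used to illustrate PBWM. The outer-loop cue (1 or 2) determines which inner-loop sequence (A then X, or B then Y) counts as a target. The Go/NoGo policy `bg_gate` is hand-coded and hypothetical; in PBWM the basal ganglia learn these gating signals by reinforcement, and nothing below models the underlying circuitry.

```python
from typing import List, Optional


class GatedWorkingMemory:
    """PFC 'stripes' whose contents are updated only when a basal-ganglia
    Go signal opens the gate; on NoGo they keep maintaining their contents."""

    def __init__(self, n_stripes: int = 2):
        self.stripes: List[Optional[str]] = [None] * n_stripes

    def step(self, stimulus: str, go: List[bool]) -> None:
        for i, gate_open in enumerate(go):
            if gate_open:                  # Go: overwrite stripe i
                self.stripes[i] = stimulus


def bg_gate(stimulus: str) -> List[bool]:
    # Hypothetical hand-coded policy: stripe 0 stores the outer-loop cue
    # (1 or 2), stripe 1 stores the inner-loop cue (A or B).
    return [stimulus in ("1", "2"), stimulus in ("A", "B")]


def respond(wm: GatedWorkingMemory, stimulus: str) -> str:
    outer, inner = wm.stripes
    target = ((outer, inner, stimulus) == ("1", "A", "X")
              or (outer, inner, stimulus) == ("2", "B", "Y"))
    return "R" if target else "L"          # R = target, L = non-target


def run(sequence: str) -> str:
    wm = GatedWorkingMemory()
    responses = []
    for stimulus in sequence:
        wm.step(stimulus, bg_gate(stimulus))
        responses.append(respond(wm, stimulus))
    return "".join(responses)


print(run("1AXBY2BYAX"))  # prints LLRLLLLRLL
```

The point of the design is that the response depends on information held in two stripes that are updated on different timescales, and that distractors such as X and Y never overwrite them because the gate stays closed.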

June 16, 2021

%%% Wed Jun 16 05:29:34 PDT 2021

This entry extends the discussion on the scope of cognitive functions in the human neocortex begun in the previous entry. Brodmann area 10 (BA10), also referred to as the frontopolar prefrontal cortex or rostrolateral prefrontal cortex, is often described as "one of the least well understood regions of the human brain". Katerina Semendeferi et al [225] argue, from examination of the skulls of early hominids, that during human evolution the functions supported by this area drove its expansion relative to the rest of the brain. They suggest that "the neural substrates supporting cognitive functions associated with this part of the cortex enlarged and became specialized during hominid evolution."

Koechlin and Hyafil [157] claim that frontopolar prefrontal cortex (FPC) function "enables contingent interposition of two concurrent behavioral plans or mental tasks according to respective reward expectations, overcoming the serial constraint that bears upon the control of task execution in the prefrontal cortex. This function is explained by interactions between FPC and neighboring prefrontal regions. However, its capacity appears highly limited, suggesting that the FPC is efficient for protecting the execution of long-term mental plans from immediate environmental demands and for generating new, possibly more rewarding, behavioral or cognitive sequences, rather than for complex decision-making and reasoning."

Dehaene et al [87] offer a model of a global workspace engaged in effortful cognitive tasks for which perceptual, motor and related specialized processors do not suffice. "In the course of task performance, workspace neurons become spontaneously coactivated, forming discrete though variable spatio-temporal patterns subject to modulation by reward signals. A computer simulation of the Stroop task shows workspace activation to increase during acquisition of a novel task, effortful execution, and after errors. We outline predictions for spatio-temporal activation patterns during brain imaging, particularly about the contribution of dorsolateral prefrontal cortex and anterior cingulate to the workspace."

June 14, 2021

%%% Mon Jun 14 14:19:27 PDT 2021

I've been studying papers on the structure and supported cognitive functions of the human neocortex, primarily authored by Etienne Koechlin, David Badre and their colleagues, relating to cognitive control, and in particular to hierarchical planning, internal goals, task management, and action selection in the dorsolateral prefrontal cortex. Rather than summarizing them here, I suggest that you read Badre's 2018 Trends in Cognitive Sciences paper [21], which provides the best recent review of, and attempt at reconciling, the differences between the competing theories featured in Figure 2 of the 2008 paper [20] in the same journal4.

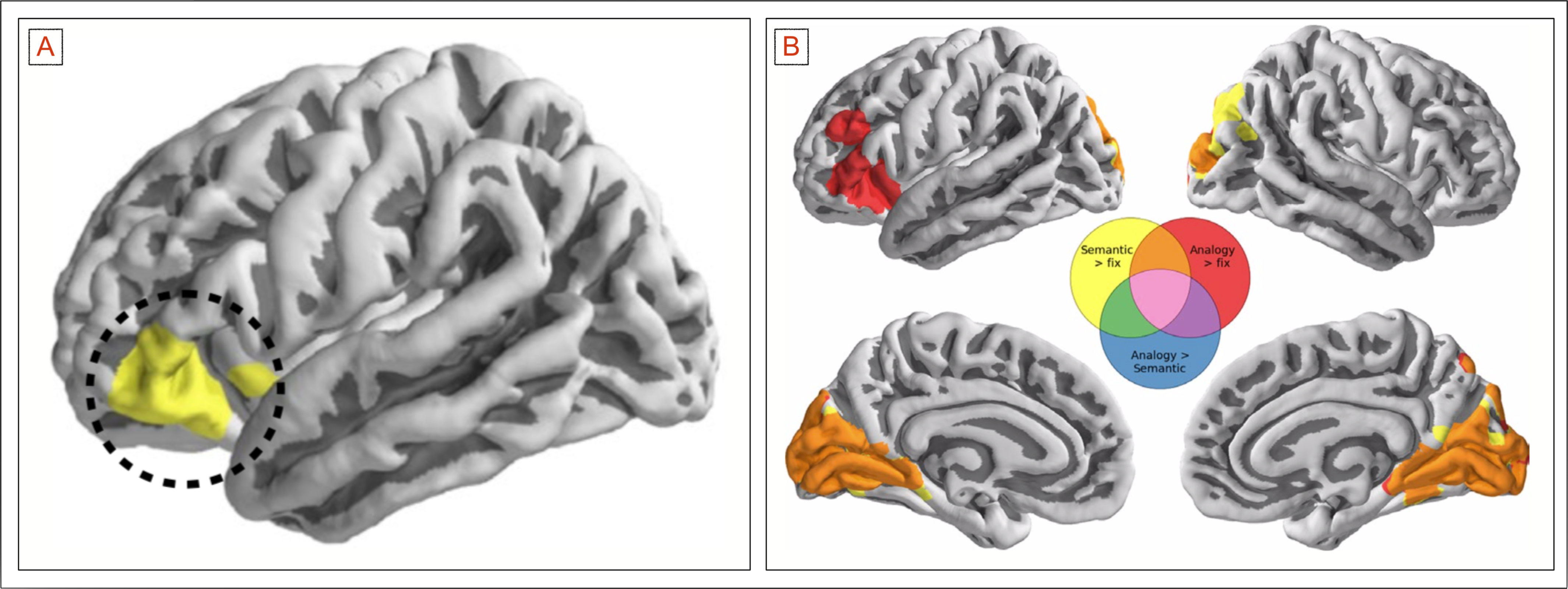

Two widely cited journal articles and a 2012 special issue of the Journal of Experimental Psychology dedicated to research on identifying the neural substrates of analogical reasoning describe an emerging consensus that the rostrolateral prefrontal cortex, known for its increased connectivity with higher-order association areas in humans, plays an important role in supporting analogical reasoning as well as other executive functions including cognitive flexibility, planning, and abstract reasoning. This, together with the support from Koechlin et al [158] and others for the cascade model, argues for the sort of neural network architecture that Yash and I discussed on Sunday5.

June 12, 2021

%%% Sat Jun 12 05:32:08 PDT 2021

I spent much of the last week attempting to justify the hypothesis that the prefrontal cortex (PFC) and hippocampus (HPC) work together to construct and execute high-level programs involving the serial activation of cell assemblies in the neocortex, in much the same way as the basal ganglia (BG) and the cerebellum (CB) work together to construct and execute low-level motor programs involving the serial activation of motor control circuits in the motor cortex. In the following, I explain why I abandoned this hypothesis and provide an alternative.

Despite sharing cell types not found elsewhere in the brain and some superficial local cytoarchitectural characteristics, the CB and HPC differ substantially in terms of both their functional network architecture and their number of neurons: the CB, with ≈ 70B neurons, has more than 1000× as many neurons as the HPC, with ≈ 40M neurons. Moreover, there is a good deal of evidence that the HPC, with its substantially increased white matter tracts reciprocally connecting the PFC and HPC in humans, plays a key role in supporting cognitive behaviors in a manner analogous to the way in which it supports motor control actions.

The focus on cell assemblies in the original proposal was predicated on three assumptions: (a) it is necessary to activate arbitrary ensembles of neurons to perform computations, (b) it is necessary to route arbitrary patterns of activity to serve as input to those computations, and (c) changes in the strength of connections between neurons must be restricted to the neurons in the activated ensembles in order to avoid catastrophic interference. Of the three, only (c) remains a concern, and the potentially negative consequences of such interference apply to any proposal for lifelong learning and can probably be mitigated by some variant of Hebbian learning or finessed using memory-augmented neural networks.
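To make assumption (c) concrete, here is a minimal sketch, assuming rate-coded activity and a plain Hebbian outer-product rule. The function name and learning rate are illustrative choices of mine, not taken from any of the cited models.

```python
def masked_hebbian_update(weights, activity, ensemble, lr=0.1):
    """Hebbian update w_ij += lr * x_i * x_j applied only to pairs inside the active ensemble.

    weights: n x n list of lists (a modified copy is returned),
    activity: list of n firing rates,
    ensemble: set of indices of neurons participating in the current computation.
    Synapses with either endpoint outside the ensemble are left untouched, which is the
    (hypothetical) way interference with other stored assemblies is limited.
    """
    new = [row[:] for row in weights]
    for i in ensemble:
        for j in ensemble:
            if i != j:  # no self-connections
                new[i][j] += lr * activity[i] * activity[j]
    return new

w = [[0.0] * 4 for _ in range(4)]
new = masked_hebbian_update(w, [1.0, 1.0, 1.0, 1.0], {0, 1})
print(new[0][1], new[2][3])  # 0.1 0.0
```

Note that this does nothing about interference between assemblies that share neurons; it only shows what "restricting plasticity to the active ensemble" means operationally.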

The presumed necessity to deal with arbitrary ensembles was partly based on believing that the information provided by the thalamocortical radiations and the manner in which it is represented in the striatum and subsequently projected onto working memory in the frontal cortex are limited in such a way as to preclude more complicated cognitive and abstract reasoning. I no longer believe this to be the case, and am now predisposed to assume that most motor-related functions that involve the PFC, HPC, BG and CB are likely to have cognitive counterparts that depend on the same neural components and related pathways.

In particular, I had to stumble across the early work by Redgrave, Prescott, and Gurney [211] on the generality of action selection in the basal ganglia and its extension to cognitive activities before finally circling back to Chapter 7 of O'Reilly et al's Computational Cognitive Neuroscience, on the basal ganglia, action selection and reinforcement learning, in which Randy and his co-authors explore these issues in detail [191]. The paper by Huang et al [142] (PDF) describes a Leabra model that implements an instructive, simplified instantiation of their theory6.

Supporting Research Papers

Redgrave et al [211] – A selection problem arises whenever two or more competing systems seek simultaneous access to a restricted resource. Consideration of several selection architectures suggests there are significant advantages for systems which incorporate a central switching mechanism. We propose that the vertebrate basal ganglia have evolved as a centralized selection device, specialized to resolve conflicts over access to limited motor and cognitive resources. Analysis of basal ganglia functional architecture and its position within a wider anatomical framework suggests it can satisfy many of the requirements expected of an efficient selection mechanism.
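The central switching idea in Redgrave et al can be caricatured as a winner-take-all gate whose default state is inhibition, where selection means releasing exactly one channel. The sketch below assumes scalar saliences and a fixed threshold; both are my simplifications, not part of their model.

```python
def select_channel(saliences, threshold=0.0):
    """Centralized selection (sketch): disinhibit only the most salient channel.

    Each competing system submits a salience. The selector returns a gating vector in
    which the winner is released (1) and every other channel stays tonically inhibited (0).
    If no salience exceeds the threshold, all channels remain inhibited.
    """
    best = max(range(len(saliences)), key=lambda i: saliences[i])
    if saliences[best] <= threshold:
        return [0] * len(saliences)
    return [1 if i == best else 0 for i in range(len(saliences))]

print(select_channel([0.2, 0.9, 0.5]))  # [0, 1, 0]
```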

Buzsáki [47] – Review of the normally occurring neuronal patterns of the hippocampus suggests that the two principal cell types of the hippocampus, the pyramidal neurons and granule cells, are maximally active during different behaviors. Granule cells reach their highest discharge rates during theta-concurrent exploratory activities, while population synchrony of pyramidal cells is maximum during immobility, consummatory behaviors, and slow wave sleep associated with field sharp waves. [...] Sharp waves reflect the summed post-synaptic depolarization of large numbers of pyramidal cells in the CA1 and subiculum as a consequence of synchronous discharge of bursting CA3 pyramidal neurons. The trigger for the population burst in the CA3 region is the temporary release from subcortical tonic inhibition.

Buzsáki [48] – Theta oscillations represent the "on-line" state of the hippocampus. The extracellular currents underlying theta waves are generated mainly by the entorhinal input, CA3 (Schaffer) collaterals, and voltage-dependent CA2 currents in pyramidal cell dendrites. The rhythm is believed to be critical for temporal coding/decoding of active neuronal ensembles and the modification of synaptic weights. [...] Key issues therefore are to understand how theta oscillation can group and segregate neuronal assemblies and to assign various computational tasks to them. An equally important task is to reveal the relationship between synaptic activity (as reflected globally by field theta) and the output of the active single cells (as reflected by action potentials).

One of the most interesting aspects of sharp waves is that they appear to be associated with memory. Wilson and McNaughton 1994, and numerous later studies, reported that when hippocampal place cells have overlapping spatial firing fields (and therefore often fire in near-simultaneity), they tend to show correlated activity during sleep following the behavioral session. This enhancement of correlation, commonly known as reactivation, has been found to occur mainly during sharp waves. It has been proposed that sharp waves are, in fact, reactivations of neural activity patterns that were memorized during behavior, driven by the strengthening of synaptic connections within the hippocampus.

This idea forms a key component of the "two-stage memory" theory, advocated by Buzsáki and others, which proposes that memories are stored within the hippocampus during behavior and then later transferred to the neocortex during sleep. In Hebbian terms, sharp waves are seen as persistently repeated stimulation of postsynaptic cells by presynaptic cells, which is suggested to drive synaptic changes in the cortical targets of hippocampal output pathways. (SOURCE)

Proskovec et al [202] – Employed magnetoencephalography (MEG) to study how alpha, beta, gamma and theta oscillations, with a focus on beta signaling, in the prefrontal and superior temporal cortices predict spatial working memory (SWM) performance. [...] Given the established functional specializations of distinct prefrontal regions and the prior reports of increased frontal theta during WM performance, we predicted increased theta activity in prefrontal regions that would be differentially recruited during SWM encoding and maintenance processes. [...] In contrast, we hypothesized persistent decreases in alpha and beta activity in posterior parietal and occipital regions throughout SWM encoding and maintenance, as decreased alpha and/or beta activity has been linked to active engagement in ongoing cognitive processing, and given the involvement of posterior parietal and occipital regions in spatial attention and mapping the spatial environment.

Choi et al [64] – The canonical striatal map, based predominantly on frontal corticostriatal projections, divides the striatum into ventromedial-limbic, central-association, and dorsolateral-motor territories. While this has been a useful heuristic, recent studies indicate that the striatum has a more complex topography when considering converging frontal and nonfrontal inputs from distributed cortical networks. The ventral striatum (VS) in particular is often ascribed a "limbic" role, but it receives diverse information, including motivation and emotion from deep brain structures, cognition from frontal cortex, and polysensory and mnemonic signals from temporal cortex.

Haber [120], McFarland and Haber [176] – Corticostriatal connections play a central role in developing appropriate goal-directed behaviors, including the motivation and cognition to develop appropriate actions to obtain a specific outcome. The cortex projects to the striatum topographically. Thus, different regions of the striatum have been associated with these different functions: the ventral striatum with reward; the caudate nucleus with cognition; and the putamen with motor control. However, corticostriatal connections are more complex, and interactions between functional territories are extensive. These interactions occur in specific regions in which convergence of terminal fields from different functional cortical regions are found.

Simić et al [226], West and Gundersen [247] – The total numbers of neurons in five subdivisions of human hippocampi were estimated using unbiased stereological principles and systematic sampling techniques. For each subdivision, the total number of neurons was calculated as the product of the estimate of the volume of the neuron-containing layers and the estimate of the numerical density of neurons in the layers. The volumes of the layers containing neurons in five major subdivisions of the hippocampus (granule cell layer, hilus, CA3-2, CA1, and subiculum) were estimated with point-counting techniques after delineation of the layers on each section. The estimated numbers of neurons in the different subdivisions were as follows: granule cells 15.0 × 10^6 (15,000,000), hilus 2.00 × 10^6 (2,000,000), CA3-2 2.70 × 10^6 (2,700,000), CA1 16.0 × 10^6 (16,000,000), subiculum 4.50 × 10^6 (4,500,000).

Herculano-Houzel [131], Azevedo et al [13] – The following table shows expected values for generic rodent and primate brains of 1.5 kg, and values observed for the human brain. Notice that although the expected masses of the cerebral cortex and cerebellum are similar for these hypothetical brains, the numbers of neurons that they contain are remarkably different. The human brain thus exhibits seven times more neurons than expected for a rodent brain of its size, but 92% of what would be expected of a hypothetical primate brain of the same size7. Using an estimate of the number of neurons in the human hippocampus calculated from the subdivision estimates provided in Simić et al, notice the differences between the hippocampus and two other brain regions: hippocampus ≈ 40,000,000, neocortex ≈ 16,000,000,000, cerebellum ≈ 69,000,000,000.

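The hippocampal total and the ratios to the other two regions can be checked directly from the subdivision counts quoted above; a few lines of Python suffice.

```python
# Subdivision counts as quoted from Simić et al / West and Gundersen above.
hippocampus = {
    "granule cells": 15_000_000,
    "hilus": 2_000_000,
    "CA3-2": 2_700_000,
    "CA1": 16_000_000,
    "subiculum": 4_500_000,
}
hpc_total = sum(hippocampus.values())
neocortex = 16_000_000_000   # approximate values quoted above
cerebellum = 69_000_000_000

print(f"hippocampus total: {hpc_total:,}")                    # 40,200,000
print(f"neocortex / hippocampus: {neocortex / hpc_total:.0f}x")  # 398x
print(f"cerebellum / hippocampus: {cerebellum / hpc_total:.0f}x")  # 1716x
```

The cerebellum-to-hippocampus ratio comes out at roughly 1,700×, consistent with the order-of-magnitude comparison between the CB and HPC made earlier in this entry.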

June 9, 2021

%%% Wed Jun 9 05:28:24 PDT 2021

Complementary Learning Systems

Complementary Learning Systems theory posits two separate but complementary learning systems. One depends primarily on cortical circuits that are highly recurrent, self-isolating and self-perpetuating, and governed by attractor dynamics. Here we suggest that these circuits facilitate the formation of cell assemblies that can be excited to perform recursive computations, with inputs and outputs relying on the dense network of thalamocortical radiations in the primate neocortex. The other learning system involves the hippocampus and adjacent areas in the entorhinal cortex, with reciprocal access to neocortical circuits through the thalamus in its function as one of many relays. We aim to model the hippocampal formation as a key/value memory system whose keys correspond to compressed perceptual sensorimotor patterns in the thalamus and whose values correspond to the activation patterns of Hebbian cell assemblies.
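Here is a minimal sketch of the hippocampus-as-key/value-memory idea, assuming dot-product similarity between keys and a softmax-weighted read-out of values. The attention-style read-out and the sharpness parameter `beta` are my choices for illustration; the CLS literature does not prescribe them.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

class KeyValueMemory:
    """Keys: compressed perceptual summaries. Values: cell-assembly activation patterns."""

    def __init__(self, beta=8.0):
        self.keys, self.values, self.beta = [], [], beta

    def write(self, key, value):
        self.keys.append(list(key))
        self.values.append(list(value))

    def read(self, query):
        """Return a blend of stored values weighted by softmax(beta * query . key)."""
        scores = [self.beta * sum(q * k for q, k in zip(query, key)) for key in self.keys]
        weights = softmax(scores)
        dim = len(self.values[0])
        return [sum(w * v[d] for w, v in zip(weights, self.values)) for d in range(dim)]

mem = KeyValueMemory(beta=20.0)
mem.write([1.0, 0.0], [1.0, 0.0, 0.0])  # hypothetical key -> assembly pattern A
mem.write([0.0, 1.0], [0.0, 0.0, 1.0])  # hypothetical key -> assembly pattern B
print(mem.read([0.9, 0.1]))  # very close to pattern A
```

Larger `beta` makes retrieval behave more like a discrete lookup; smaller values blend assemblies.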

Self Organizing Cell Assemblies

Tetzlaff et al [231] provide a computational model and robotics application describing how the brain self-organizes large groups of neurons into coherent dynamic activity patterns. Buzsáki [50] reviews three interconnected topics that he claims could facilitate progress in defining cell assemblies, identifying their neuronal organization, and revealing causal relationships between assembly organization and behavior. Christos Papadimitriou, Michael Collins, and Wolfgang Maass [193] describe an interesting model they call the assembly calculus, which occupies a level of detail intermediate between that of spiking neurons and synapses and that of the whole brain, and which is capable of carrying out arbitrary computations. They hypothesize that something like their model may underlie higher human cognitive functions such as reasoning, planning, and language8. For excerpts from O'Reilly et al [190] concerning the relevant neural architecture, see footnote 9.
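A central operation of the assembly calculus is projection: a stimulus fires repeatedly into a downstream area in which only the k most strongly driven neurons fire (the k-cap), and synapses onto those winners are potentiated. Below is a small sketch under my own simplifying assumptions (binary random synapses with probability `p`, multiplicative Hebbian plasticity with rate `beta`, and the first k stimulus neurons as the stimulus); the parameter values are illustrative, not those used by Papadimitriou et al.

```python
import random

def k_cap(inputs, k):
    """Indices of the k neurons receiving the largest total input (ties broken by index)."""
    order = sorted(range(len(inputs)), key=lambda i: (-inputs[i], i))
    return set(order[:k])

def project(n_stim=100, n=200, k=20, p=0.1, beta=0.5, rounds=10, seed=0):
    """Project a fixed stimulus into area B; return the winner set after each round."""
    rng = random.Random(seed)
    # Random connectivity: stimulus->B and recurrent B->B synapses of weight 1 (or absent).
    w_in = [[1.0 if rng.random() < p else 0.0 for _ in range(n)] for _ in range(n_stim)]
    w_rec = [[1.0 if rng.random() < p else 0.0 for _ in range(n)] for _ in range(n)]
    stim = range(k)  # assumption: the first k stimulus neurons fire on every round
    active = set()   # area B is silent before the first round
    history = []
    for _ in range(rounds):
        inputs = [sum(w_in[s][j] for s in stim) + sum(w_rec[a][j] for a in active)
                  for j in range(n)]
        winners = k_cap(inputs, k)
        # Hebbian plasticity: potentiate existing synapses from neurons that fired onto winners.
        for j in winners:
            for s in stim:
                w_in[s][j] *= 1.0 + beta
            for a in active:
                w_rec[a][j] *= 1.0 + beta
        active = winners
        history.append(winners)
    return history

history = project()
# Overlap between consecutive winner sets; it typically grows as an assembly forms.
print([len(a & b) for a, b in zip(history, history[1:])])
```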

Analogies Grounded in Experience

Analogies are mappings of properties and relationships between dynamical/relational models that (a) preserve specific features essential for a particular use case and (b) retain an overall consistency sufficient to support any requisite use-specific analysis. Grounding serves as the foundation for constructing analogies; it is the mother of all models. We assume that the model generated in the process of grounding is (a) constructed in stages during early development, (b) driven by extensive self-supervised exploration [195]10, and (c) verified by the action-perception cycle as a form of error-correcting predictive contrastive coding [251]; see related work on the equivalence of contrastive Hebbian learning and backpropagation in multilayer networks [253]11.
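For readers unfamiliar with contrastive Hebbian learning, the core update compares co-activity in a "clamped" phase (outputs pinned to the observed outcome) with co-activity in a "free" phase (the network's own prediction): ΔW_ij = lr · (y⁺_i x⁺_j − y⁻_i x⁻_j). The sketch below shows only this single-layer weight update; treating the free phase as a prediction and the clamped phase as the perceived outcome of an action is my reading of "verified by the action-perception cycle", not something taken from [251] or [253].

```python
def chl_update(weights, x_free, y_free, x_clamped, y_clamped, lr=0.05):
    """One contrastive Hebbian learning step for a single weight layer (sketch).

    weights[i][j] connects input j to output i.
    dW_ij = lr * (y_clamped[i] * x_clamped[j] - y_free[i] * x_free[j])
    The update vanishes when the free-phase prediction matches the clamped outcome.
    """
    return [
        [w + lr * (yc * xc - yf * xf) for w, xc, xf in zip(row, x_clamped, x_free)]
        for row, yc, yf in zip(weights, y_clamped, y_free)
    ]

W = [[0.0, 0.0]]
x = [1.0, 0.5]
print(chl_update(W, x, [0.0], x, [1.0]))  # [[0.05, 0.025]]
```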

Perception as Proactive Attention

We view attention as a proactive combination of pattern completion and pattern separation for identifying and merging correlated features in representations encoding dynamical/relational models. In the hippocampal formation this is facilitated by the dentate gyrus (ostensibly) performing pattern separation, and by area CA3 (ostensibly) performing pattern completion early in development and a combination of completion and integration later in adulthood. The combined post-developmental separation and integration occurring in the adult is believed to be aided by neurogenesis.
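The two hippocampal operations can be caricatured in a few lines: dentate-gyrus-like separation as a random expansion followed by k-winners-take-all, and CA3-like completion as a classical Hopfield network with Hebbian outer-product weights. Both are textbook stand-ins I've chosen for illustration; the sizes, the Gaussian projection and the synchronous update rule are assumptions, not claims about the circuits.

```python
import random

def separate(x, n_out=64, k=6, seed=0):
    """Pattern separation (sketch): random expansion, then k-winners-take-all.

    Returns a sparse binary code with exactly k active units out of n_out.
    """
    rng = random.Random(seed)
    proj = [[rng.gauss(0.0, 1.0) for _ in x] for _ in range(n_out)]
    drive = [sum(w * xi for w, xi in zip(row, x)) for row in proj]
    winners = set(sorted(range(n_out), key=lambda i: -drive[i])[:k])
    return [1 if i in winners else 0 for i in range(n_out)]

def store(patterns):
    """Autoassociative weights via the Hebbian outer-product rule, zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def complete(w, cue, max_steps=10):
    """Pattern completion: synchronous sign updates until the state stops changing."""
    s = list(cue)
    for _ in range(max_steps):
        nxt = [1 if sum(w[i][j] * s[j] for j in range(len(s))) >= 0 else -1
               for i in range(len(s))]
        if nxt == s:
            break
        s = nxt
    return s

p1 = [1] * 8
p2 = [1, 1, 1, 1, -1, -1, -1, -1]
w = store([p1, p2])
cue = [-1, 1, 1, 1, -1, -1, -1, -1]  # p2 with its first unit flipped
print(complete(w, cue) == p2)  # True
```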

Hippocampus in Cognitive Control

In his 2006 book [49] entitled Rhythms of the Brain, György Buzsáki has a chapter by the name "Oscillations in the 'Other Cortex': Navigation in Real and Memory Space" dedicated to the hippocampus that emphasizes the different roles played by gamma, theta, and sharp-wave ripple (SWR) oscillations. Research over the following decade led to the hypothesis that organized neuronal assemblies can serve as a mechanism to transfer compressed spike sequences from the hippocampus to the neocortex for long-term storage. In its simplest version, this model posits that during learning the neocortex provides the hippocampus with novel information leading to transient synaptic reorganization of its circuits, followed by the transfer of the modified hippocampal content to the neocortical circuits [51].

More recently there appears to be an emerging consensus that the hippocampus is involved in much more than simply storing and retrieving episodic memories [161]. Loren Frank gave an invited talk in CS379C on the hypothesis that the hippocampus and SWRs mediate the retrieval of stored representations that can be utilized immediately by downstream circuits in decision-making, planning, recollection and/or imagination, while simultaneously initiating memory consolidation processes [56]12.

Hippocampus-Cerebellum Connection

The hypothesized role of the hippocampus in enabling cognitive function by controlling activity in cell assemblies invites comparison with our growing appreciation of the cerebellum's support for cognitive function [208, 144, 94]. David Marr's careful studies of the cerebellum [174] and of what he referred to as the archicortex [250, 173], now more commonly known as the hippocampus and hippocampal formation, underscore similarities between these two brain structures with respect to their cytoarchitecture and specialized cell types, including granule cells, Purkinje cells and mossy fibers.

Constructing Cell Assembly Programs

As an exercise, consider the hypothesis that the prefrontal cortex and hippocampus work together to construct and execute high-level programs involving the serial activation of cell assemblies in the neocortex, in much the same way as the basal ganglia and the cerebellum work together to construct and execute low-level motor programs involving the serial activation of motor control circuits in the motor cortex. NOTE: After further reflection and reading related research papers, I retracted this hypothesis; see here for a summary of the reasons why.

Here are just a few references that provide some support for the above hypothesis. There are many more pieces scattered about in class notes and email exchanges that I will try to dig up once I take care of a number of delayed tasks that had to be set aside during the quarter I was teaching:

Gage and Baars [108] – This suggests that a brain-based global-workspace capacity cannot be localized in a single anatomical hub. Rather, it should be sought in a functional hub – a dynamic capacity for binding and propagation of neural signals over multiple task-related networks, a kind of neuronal cloud computing.

Deco et al [82] – The dense lateral intra- and inter-areal connections [...] make possible the emergence of a reverberatory dynamic when the level of excitation exceeds the level of inhibition, which can be propagated globally across the brain [...] provide direct evidence on the hierarchical structuring of information processing in the network.

Dehaene et al [86, 87] – In conclusion, human neuroimaging methods and electrophysiological recordings during conscious access, under a broad variety of paradigms, consistently reveal a late amplification of relevant sensory activity, long-distance cortico-cortical synchronization at beta and gamma frequencies, and ignition of a large-scale prefronto-parietal network.

Frank et al [152, 258] – Hippocampal-cortical networks maintain links between stored representations for specific and general features of experience, which could support abstraction and task guidance in mammals [...] that have radically different anatomical, physiological, representational, and behavioral correlates, implying different functional roles in cognition.

June 5, 2021

%%% Sat Jun 5 15:49:42 PDT 2021

A transformational analogy does not look at how the problem was solved; it only looks at the final solution. Yet the history of the problem solution, i.e., the steps involved, is often relevant. Carbonell [54, 55] showed that derivational analogy is a necessary component in the transfer of skills in complex domains: "In translating Pascal code to LISP – line by line translation is no use. You will have to reuse the major structural and control decisions. One way to do this is to replay a previous derivation of the PASCAL program and modify it when necessary. If the initial steps and assumptions are still valid copy them across. Otherwise alternatives need to be found – best first search fashion."

Bhansali and Harandi [123, 38] apply derivational analogy to synthesizing UNIX shell scripts from a high-level problem specification. The work of Carbonell, Bhansali, and Harandi is classic GOFAI with an "expert-systems" approach, but it is interesting to walk through the algorithm they present in [123] (see the page numbered 393 in this PDF). Their use of a derivation trace13 reminded me of the use of a latent code to specify the high-level components of a program as a plan in Hong et al [141]. The application to UNIX scripts is also an interesting target domain. I'm not endorsing this approach as a solution; rather, I see it as a different perspective and a possible idea generator.
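The replay step in the Carbonell quote can be sketched as follows, assuming each recorded step in a derivation trace carries a test of whether its original justification still holds in the new problem. The `Step` record, its fields, and the toy Pascal-to-LISP trace are hypothetical and far simpler than the derivation traces in [123]; re-derivation of the invalidated steps (e.g., by best-first search) is not shown.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass
class Step:
    """One decision in a derivation trace plus a test of its original justification."""
    name: str
    still_valid: Callable[[Dict[str, str]], bool]

def replay(trace: List[Step], new_problem: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Copy across steps whose justifications still hold; flag the rest for re-derivation."""
    reused, to_rederive = [], []
    for step in trace:
        if step.still_valid(new_problem):
            reused.append(step.name)
        else:
            to_rederive.append(step.name)
    return reused, to_rederive

# Hypothetical trace recorded while writing the original Pascal program.
trace = [
    Step("iterate over input lines", lambda p: p["input"] == "text"),
    Step("accumulate counts in array", lambda p: p["target_lang"] == "pascal"),
]
print(replay(trace, {"input": "text", "target_lang": "lisp"}))
# (['iterate over input lines'], ['accumulate counts in array'])
```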

May 31, 2021

%%% Mon May 31 04:7:09 PDT 2021

Explain for Manan, William, and Peter how subroutines might be implemented as a general framework for integrating complementary memory systems:

In the following, we assume that the assistant observes many instances of the programmer executing IDE repair workflows consisting of individual IDE invocations, e.g., jump 10, strung together like beads on a string. Each instance corresponds to an execution trace of a more general workflow applicable to a particular class of repairs. In principle, the assistant could concatenate all of the instances it has observed into one long string and index into this string to identify substrings relevant to the particular task at hand. Alternatively, it could compile the instances into a collection of subroutines indexed by the general type of repair they handle. The following presents a model of episodic memory that supports subroutine compilation.

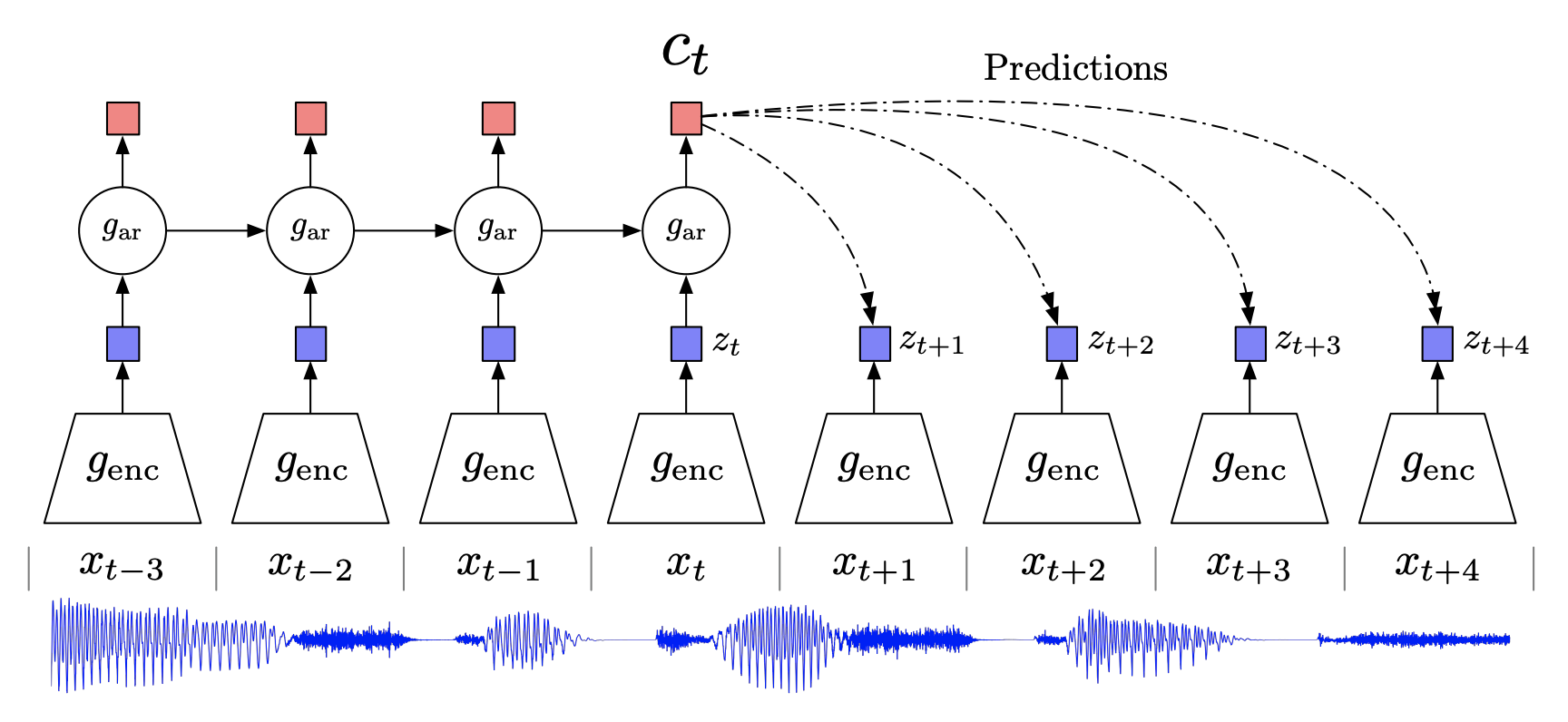

As shown in the diagram, a short-term key/value memory (STM) is used to store initial-state + action + reward + final-state tuples, [s_t, a_t, r_t, s_{t+1}], during a session of the assistant working with the programmer. The keys correspond to perceptual state summaries produced by the perception stack labeled A. As discussed and employed in Abramson et al [2], these could correspond to a concatenation of K sensory snapshots, where K is an estimate of the order for which the K-Markov property holds in the partially observable environment. Following the working session, during the apprentice's analog of NREM sleep, the STM tuples are (selectively) committed to long-term key/value memory (LTM storage) using some variant of experience replay.
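Here's a minimal sketch of the STM-to-LTM step, assuming hashable state summaries (strings here, for brevity) and, as the "variant of experience replay", sampling tuples with probability proportional to |reward| plus a small floor. That prioritization scheme and the `consolidate` helper are my assumptions for illustration, not the design in the diagram.

```python
import random
from collections import namedtuple

# One STM entry: the [s_t, a_t, r_t, s_{t+1}] tuple described above.
Transition = namedtuple("Transition", ["state", "action", "reward", "next_state"])

class ShortTermMemory:
    """Session buffer of transitions recorded while working with the programmer."""

    def __init__(self):
        self.buffer = []

    def record(self, s, a, r, s_next):
        self.buffer.append(Transition(s, a, r, s_next))

def consolidate(stm, ltm, n_replays=100, floor=1e-3, seed=0):
    """Offline ('NREM-like') consolidation: replay STM tuples with probability proportional
    to |reward| + floor, commit each replayed tuple to LTM keyed by its state, clear STM."""
    if stm.buffer:
        rng = random.Random(seed)
        priorities = [abs(t.reward) + floor for t in stm.buffer]
        for t in rng.choices(stm.buffer, weights=priorities, k=n_replays):
            ltm.setdefault(t.state, set()).add((t.action, t.next_state))
    stm.buffer.clear()
    return ltm

stm = ShortTermMemory()
stm.record("cursor at error", "jump 10", 1.0, "cursor at line 10")
stm.record("cursor at line 10", "noop", 0.0, "cursor at line 10")
ltm = consolidate(stm, {})
print(ltm["cursor at error"])
```

Unrewarded tuples are rarely replayed and therefore rarely committed, which is one simple reading of "selectively".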

Subroutines – as illustrated in the inset on the lower-right – correspond to directed graphs in LTM storage such that edges in the graph are IDE invocations and the vertices correspond to context-based conditional branch points. The current context, denoted k_t, for selecting the next action / IDE invocation is generated by the subnetwork represented by the dark green trapezoid labeled K and combines the current perceptual state summary C_t with LTM keys that predict opportunities for reward, and roughly corresponds to the way in which the striatum and basal ganglia shape the context for action selection by combining current and prior (episodic) state information – see here.

This augmented contextual summary k_t is then used to retrieve a function f_t from LTM storage representing an IDE invocation in the form of a set of weights for the network represented by the light green trapezoid labeled M. This method of executing previously learned functions by instantiating a network with stored weights is now relatively common in machine learning and neural programming in particular. In class, we saw representative examples in the work of Reed and de Freitas [212] and Wayne et al [244]. See also the work of Duan et al [92] on efficient one-shot learning.
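A toy version of the K and M pathway might look like this, assuming cosine similarity for matching, a fixed 50/50 blend of C_t with its nearest LTM key as a crude stand-in for the learned subnetwork K, and a single linear layer as the network M. All function names and the two stored "functions" are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def make_context(c_t, ltm_keys, mix=0.5):
    """k_t: blend the current summary C_t with the most similar LTM key.

    A real K would weight keys by how well they predict reward, not by similarity alone.
    """
    nearest = max(ltm_keys, key=lambda key: cosine(c_t, key))
    return [(1 - mix) * a + mix * b for a, b in zip(c_t, nearest)]

def retrieve_and_apply(k_t, weight_store, x):
    """Fetch the weights f_t whose key best matches k_t and run them as a linear network M."""
    key = max(weight_store, key=lambda stored: cosine(k_t, stored))
    f_t = weight_store[key]
    return [sum(w * xi for w, xi in zip(row, x)) for row in f_t]

# Hypothetical LTM: two stored functions (identity and swap) keyed by 2-d contexts.
weight_store = {
    (1.0, 0.0): [[1.0, 0.0], [0.0, 1.0]],
    (0.0, 1.0): [[0.0, 1.0], [1.0, 0.0]],
}
k_t = make_context([0.1, 0.9], list(weight_store))
print(retrieve_and_apply(k_t, weight_store, [2.0, 3.0]))  # [3.0, 2.0]
```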

By context-based conditional branching, we mean that the perceptual state summary following the last IDE invocation evaluated in executing a given subroutine is used to select the next IDE invocation in the subroutine or exit the subroutine having determined that the current state is a terminal in the directed graph in LTM storage. This description covers the basics for how the assistant might learn subroutines by watching and imitating the programmer. It does not, however, provide any clues for how you might build a neural network to execute a given subroutine, but you can find some hints in the three papers mentioned in the previous paragraph.
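The context-based branching just described can be sketched as a graph walk, assuming each vertex holds an ordered list of (condition on the perceptual state, IDE invocation, next vertex) edges and that a vertex with no satisfied condition is terminal. The graph encoding and the `fake_ide` stub are hypothetical; in the apprentice the conditions would be learned rather than hand-written lambdas.

```python
from typing import Callable, Dict, List, Optional, Tuple

# (condition on perceptual state, IDE invocation, next vertex or None to exit)
Edge = Tuple[Callable[[dict], bool], str, Optional[str]]

def run_subroutine(graph: Dict[str, List[Edge]], start: str, state: dict,
                   ide: Callable[[str, dict], dict], max_steps: int = 50) -> List[str]:
    """Walk the subroutine graph, choosing the first edge whose condition holds."""
    trace, vertex = [], start
    for _ in range(max_steps):
        edges = graph.get(vertex, [])
        choice = next(((inv, nxt) for cond, inv, nxt in edges if cond(state)), None)
        if choice is None:  # terminal: no branch applies in the current state
            break
        invocation, vertex = choice
        state = ide(invocation, state)  # perceive the result of the invocation
        trace.append(invocation)
        if vertex is None:
            break
    return trace

def fake_ide(invocation, state):
    """Hypothetical IDE stub: 'fix' removes one error; other invocations change nothing."""
    s = dict(state)
    if invocation == "fix":
        s["errors"] -= 1
    return s

# A loop: keep visiting and fixing errors until none remain.
graph = {
    "check": [(lambda s: s["errors"] > 0, "next-error", "repair")],
    "repair": [(lambda s: True, "fix", "check")],
}
print(run_subroutine(graph, "check", {"errors": 2}, fake_ide))
# ['next-error', 'fix', 'next-error', 'fix']
```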

In terms of exploiting the benefits of having complementary memory systems, it should be possible to apply subroutines recursively, perform nested loops, call a subroutine in the midst of executing another subroutine, and more generally traverse any path in the execution tree by using the current perceptual state augmented with episodic overlays from the LTM, which plays the role of the hippocampus. This suggests a general strategy of merging (current) perceptual state vectors derived from sensorimotor circuits distributed throughout the neocortex with (past) episodic perceptual state vectors selectively encoded in the LTM on the basis of their ability to predict expected cumulative reward.

Explain for Matthew, John, Sasankh, and Griffin a possible version of analogical thinking to complement search in the programmer's apprentice:

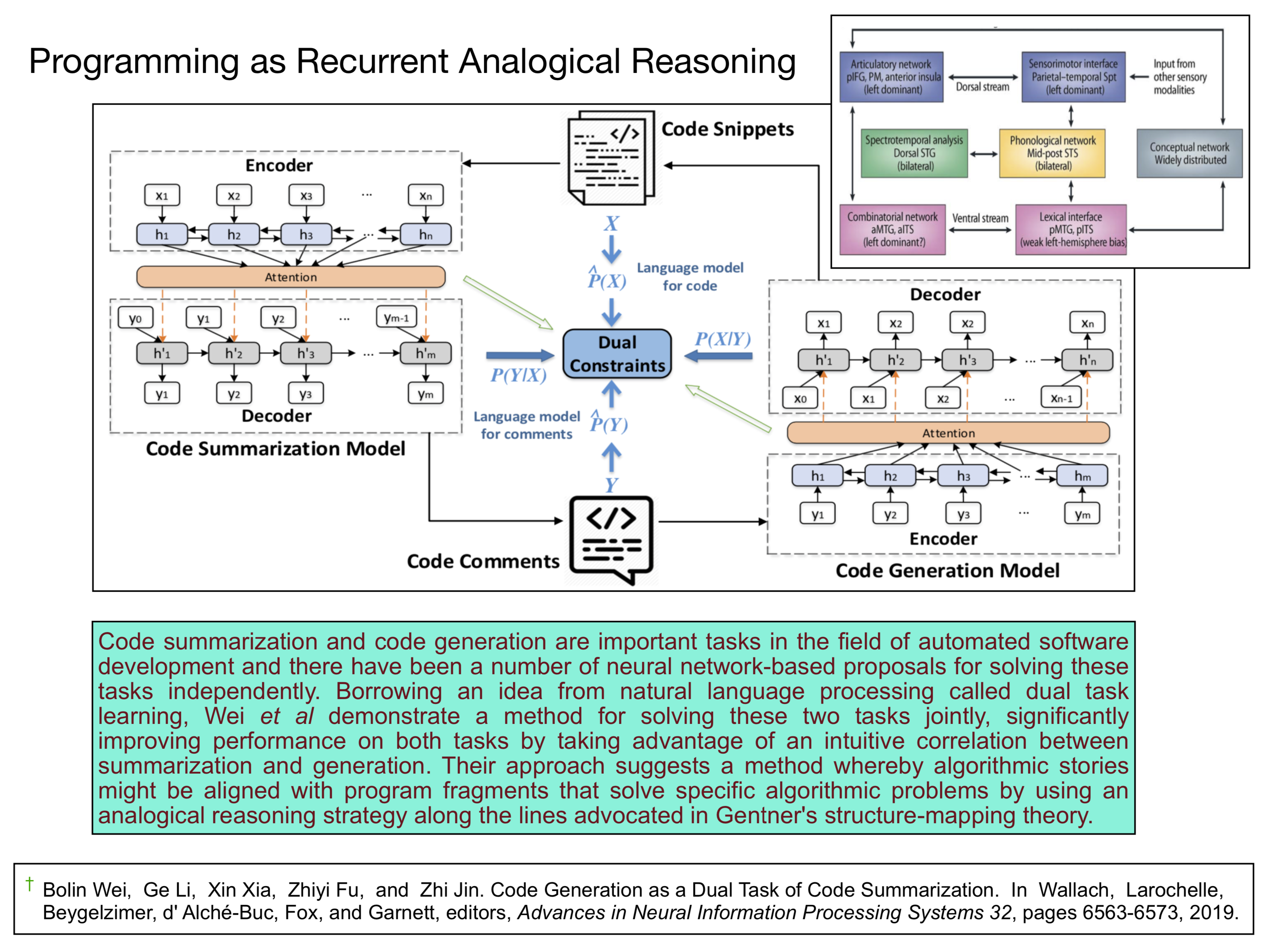

Many of the papers on analogy that we've read either acknowledge or should have acknowledged Dedre Gentner's structure-mapping theory of analogy [109]. Hill et al [135] combine ideas from Gentner's work and Melanie Mitchell's perception theory [181]. There are also a number of symbolic implementations of Gentner's theory [70, 97]. Crouse et al [69], inspired by Gentner's work, start with models expressed as graphs, use graph networks to encode them in embedding spaces, and provide a link to their code here. In the following, we focus almost exclusively on embedding methods, but it should be obvious that we are talking about analogy.

Here's a general strategy for pursuing analogy in the context of the programmer's apprentice. You begin by creating an embedding space that encodes a rich class of relational objects. These could be graph networks [27, 147] or something more sophisticated like the models of dynamical systems that Peter Battaglia and Jessica Hamrick developed [28, 26]. Given a new instance of your target class of relational objects, consider its nearest neighbors in embedding space. It sounds simple, but what are the properties and relationships that define programs?
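The retrieval half of this strategy is just a nearest-neighbor query. The sketch below assumes program embeddings already exist and uses cosine similarity; the three-dimensional vectors and program names are made up for illustration, and producing embeddings that capture program semantics is, of course, the hard part.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def nearest_neighbors(query, library, k=2):
    """Names of the k library entries whose embeddings are most similar to the query."""
    ranked = sorted(library, key=lambda name: -cosine(query, library[name]))
    return ranked[:k]

# Hypothetical embeddings of previously seen programs.
library = {
    "bubble_sort": [0.9, 0.1, 0.0],
    "insertion_sort": [0.8, 0.2, 0.1],
    "binary_search": [0.1, 0.9, 0.2],
}
print(nearest_neighbors([0.85, 0.15, 0.05], library))  # the two sorting programs
```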

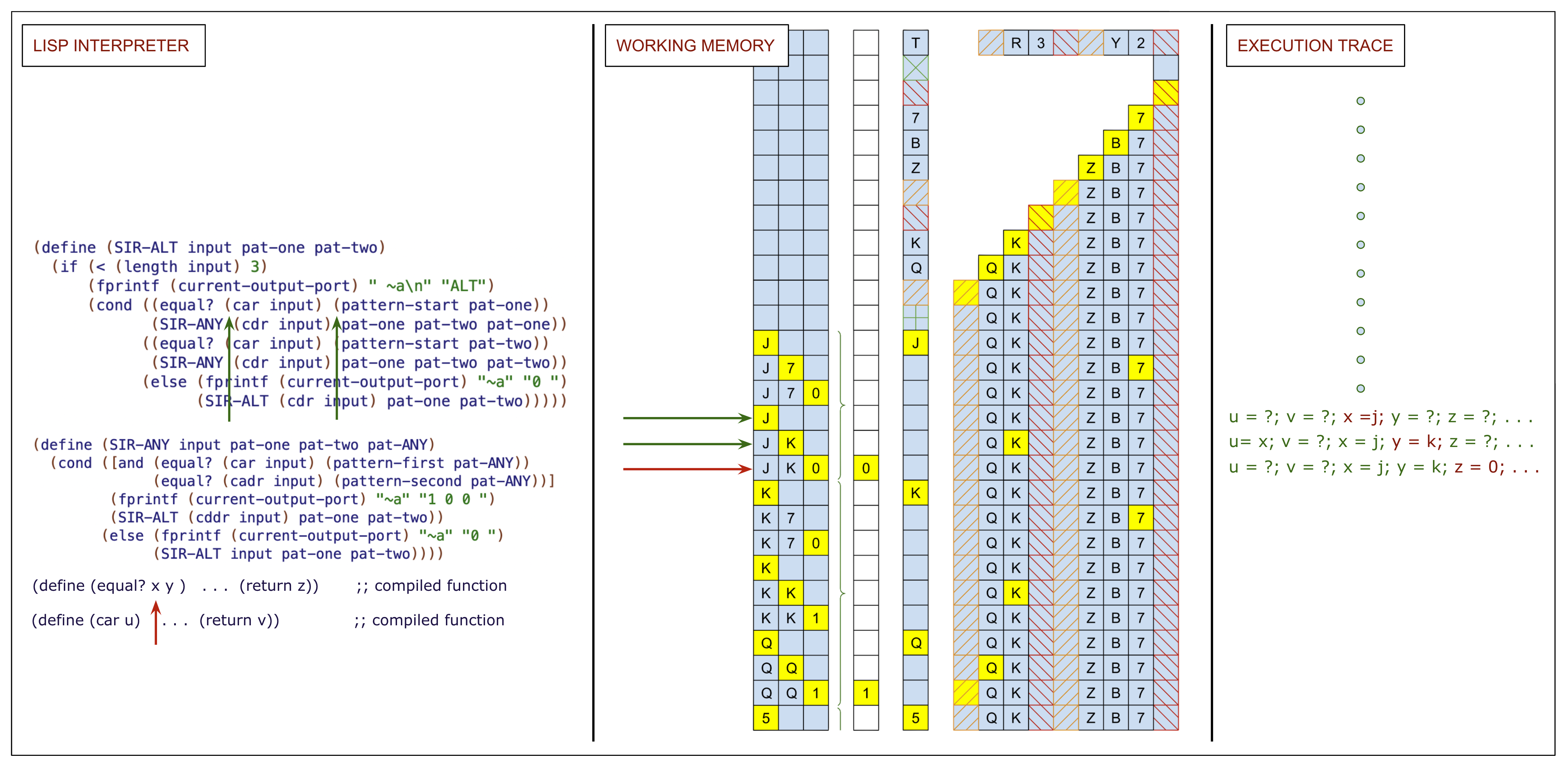

Early work in neural programming attempted to directly apply NLP methods to encode programs. This captures the static structure of a programming language, revealing how one might write syntactically correct programs, but not their functional semantics. To capture the semantics of programs, researchers have used input-output pairs to provide feedback to students in introductory programming classes [198], and execution traces to classify the types of errors students tend to make [241]. Execution traces have also been used to train neural networks to interpret programs [212].

Hong et al [141] adopt a different strategy in an attempt to reduce the amount of search required to write a program. They observe that programmers often start by specifying the high-level components of a program as a plan. The authors provide a method for learning an abstract language of latent tokens that they can use to represent such plans and infer them from the program specification. Synthesizing a program consists of first learning the latent representation of the plan and then using the resulting sequence of latent tokens to guide the search for a program realizing the plan in the target language.

The Hong et al method is one of the most promising I've encountered in my readings. A program written in a high-level programming language is essentially a plan for an optimizing compiler to convert into efficient code. Their method is not a solution to the problem of search; rather, it suggests a division of labor in which the (latent) programmer searches in the algorithmic space of latent programs for a plan that meets the problem specification and then hands it off to the assistant, who carries out the tedious job of turning the plan into an executable that passes all the unit tests and satisfies all the input-output pairs.

When I first read Seidl et al [222] on using modern Hopfield networks [206] for drug discovery, I thought of molecules, their properties and the reaction templates used to alter those properties as dynamical systems with at least the complexity of computer programs. They are certainly combinatorially complex and exhibit complicated, difficult-to-predict dynamics, but like chess and Go they appear to yield to a combination of pattern recognition and search, which deep networks excel at. As in chess, where learning to make legal moves is simple, writing syntactically correct programs is relatively easy; but unlike chess, figuring out what even a short program with lots of conditional branch points computes can be daunting.

Becoming adept at writing software requires a great deal of specialized knowledge, much of it focusing on how to compartmentalize and mitigate complexity. The practice of carefully documenting code and writing tests, which often require more lines than the actual program, is not optional for the professional software engineer. The apprentice is not expected to invent new algorithms or deploy them in novel situations, but rather to take advantage of its ability to focus, avoid distraction, and offload tedious chores, allowing the programmer to concentrate on problems that benefit from decades of experience. We imagine the apprentice carrying out many of the tasks that standard developer tools like linters can't handle and that would normally require a couple of hours of tedium or writing a short script. This is the sort of coding we expect of the apprentice, and the sort we expect analogy to help with.

Consider how grounding in the physical world might provide a bias for exploiting analogy, as in the example of teaching students to arrange themselves in a line where each student but the first is behind a student who is the same height or shorter. There is a large literature on how children learn better by being exposed to physical analogies (think abacus), how signaling with sign language combined with spoken language can improve learning depending on developmental stage [217], and how young children talk to themselves, describing their behavior and the behaviors of others, including fictional characters and inanimate toys. I conjecture that the benefit of using pretrained transformers as universal computation engines [171] derives from the same sort of bias that analogy derives from physical grounding.

Explain for Griffin how future state-of-the-art AI systems will inevitably come to believe what many humans believe about being conscious:

Every mammal, and for that matter any animal to which we would ascribe intelligence, must be able to parse the relevant features of its physical environment. It must also be able to represent and reason about the various parts of its body and to distinguish between those parts and the external world. Otherwise, confusion about this distinction will lead to inappropriate and possibly destructive behavior. The human brain adapts its representations of the body and its sensory maps. Animals that make sounds have to know that they are making sounds. In the case of birds and mammals, they have to learn how to make the right sounds, whether for sounding an alarm or an all-clear signal or for attracting a mate. Male birds learn their mating call by mimicking their male parent and then practicing on their own while listening to themselves. Apropos our discussion here, they don't confuse their call with that of their parents or other birds, and especially not with that of birds they compete against for reproductive advantage.

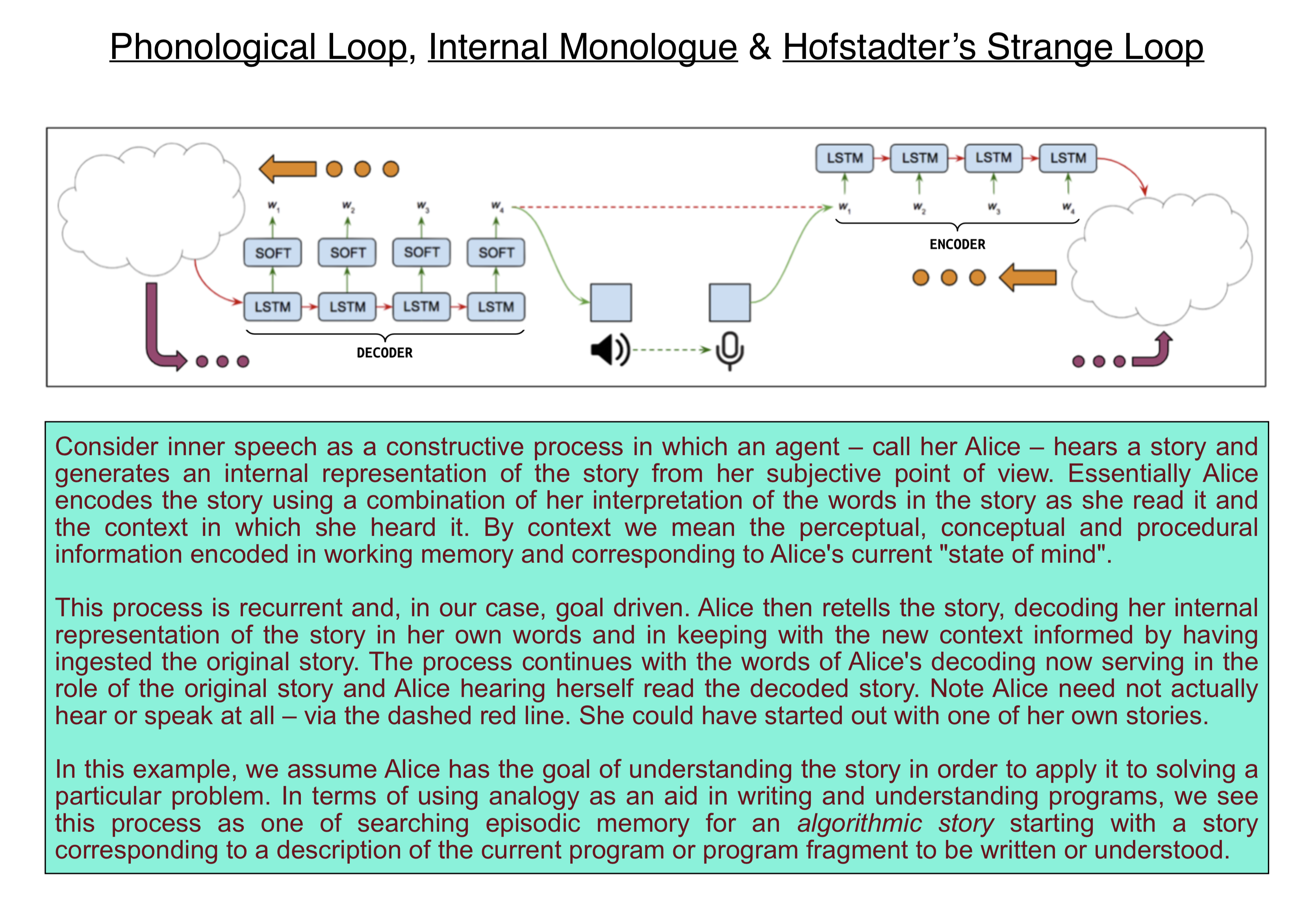

Humans communicate with a much greater range of signals. They also learn by mimicking other humans and by practicing new words. In infants, such repetition generally elicits a parent correcting the infant's pronunciation using a variety of stereotyped methods. The phonological loop plays a crucial role in learning the novel phonological forms of new words. Baddeley et al [16] have proposed that the primary purpose for which the phonological loop evolved is to store unfamiliar sound patterns while more permanent memory records are being constructed. Obviously, the speaker is aware, or at least behaves as though aware, of having produced the sounds. As they acquire language, most young children take to describing their behavior to themselves or anyone else within earshot. As they mature, it becomes obvious that such behavior is inappropriate in most contexts, and they internalize their "dialogue" in what is generally referred to as inner speech [5, 7, 6].

Suppose you build a neural network as part of a robotic system capable of inner speech and competent enough to converse with a human being about a wide range of topics. Suppose further that you tell such a robot that you are conscious14. By this you mean, and demonstrate to the robot by providing examples, that consciousness is your awareness of yourself and the world around you. Essentially, if you can describe in words something that you are experiencing, then it is part of your consciousness15. Consider what you know about artificial agents equipped with modern natural language processing systems, including those that aspire to fluid question answering, conversational dialogue management, commonsense reasoning, and collaboration, as in the case of the Abramson et al paper. Extrapolating a decade out along the current trajectory, it seems inevitable that future artificial agents will, if pressed, make reports of their experience similar to those we have come to expect from humans.

May 26, 2021

%%% Wed May 26 05:46:40 PDT 2021

It is said that you can lead a horse to water, but you can't make him drink. I've learned from 40 years of teaching that good teaching is not about students learning facts. It's about teaching them how to navigate new knowledge spaces and enabling them to learn how to learn what they need to know in order to absorb new facts in context. You would never say that in a course prospectus. Students wouldn't understand it, and, in point of fact, a course that focused only on design principles would be dry indeed.

CS379C is ostensibly about systems that learn how to read, write, and repair conventional computer programs. The impetus for creating the course and arranging for a very specific group of scientists and engineers to talk about their work was a little-heralded paper that came out in the midst of a global pandemic and was largely ignored and under-appreciated. For some of the same reasons that I gave you yesterday, the majority of people who read it considered it largely irrelevant to either natural language processing or robotics.

Admittedly, the paper was a baby step forward in terms of the actual problem that it solved. However, the way in which it solved the problem provides a foundation of ideas and technologies for achieving human-level AI that is both replicable and scalable for those few who have the insight to recognize its potential value.

During the first five weeks of the class, students listened to and interacted with some of the researchers who contributed to the underlying concepts and technologies. They were also provided with a relatively small number of papers, some of them carefully annotated to focus on the important design principles as seen through the lens of implemented systems. Besides the paper by Abramson et al, students were repeatedly advised to read the MERLIN paper and the paper by Merel et al. All three were carefully annotated to underscore the important content.

Eve Clark's talk and participation in class provided key insights into how we acquire language and ground our understanding in the experience of the world we share with other humans. Two further contributions were added to the mix: the recent "Latent Programmer" paper by Joey Hong et al and work by Felix Hill on the relationship between analogy and contrastive predictive coding. Both were placed in context in Rishabh's and Felix's invited talks and class discussions.

We encouraged students to apply what they learned to a particular problem – the programmer's apprentice. The hope was that individual teams would be able to focus on key elements of the problem and gain a greater understanding of how those elements depend upon one another. They would also experience what it's like to work on such a complicated problem with a larger team of researchers from a diverse set of scientific and engineering disciplines. To encourage them to appreciate and take advantage of the dependencies between the suggested projects, students were told that if they contributed to this collective enterprise, they would be listed as co-authors on an arXiv preprint. That preprint would be shared with the experts we enlisted to serve as invited speakers and consultants on student projects.

This lengthy discourse was intended to explain my approach in our discussions concerning project proposals and, more recently, in project design reviews. I have tried to encourage you to think about how to focus your projects to make such a contribution to the larger aspirational goal of designing the next generation of interactive agents, building on the work of the interactive agents group at DeepMind.

I hope that I have also made it quite clear that such a contribution is not required for you to get a good grade in the class. I have done what I can to suggest how your proposed projects might connect to the collective enterprise, and I have also offered advice on how best to pursue your interests independently. Make the most of these last few days of the quarter by working on something that really interests you.

May 25, 2021

%%% Tue May 25 04:44:15 PDT 2021

On Sunday morning, I met with Yash and Lucas and we talked about the model of Fuster's hierarchy that I shared with students attending the project discussion meeting last Thursday. When I tried to explain how to implement the reciprocal connections between the perceptual stack and the motor stack within each level of the hierarchy, I ran into problems but soldiered on to the end nonetheless. Following up, Yash provided a helpful critique specifically dealing with my description of the reciprocal connections16.

Afterward, Lucas sent around some of his thoughts concerning a related problem having to do with a model of introspection he has been working on. Among other ideas, he mentioned the relevance of work by Roger Shepard and provided a link to a relatively recent paper by Shepard that touches upon issues relating to my presentation in the morning. Lucas's mention of introspection reminded me of how I framed inner speech as a recurrent process for making sense of some given explanation or proposed solution to a problem by talking to yourself. Here is how it works:

Suppose someone proposes an interesting idea to you. As part of the normal process of listening to the proposal, you encode its description in the (putative) language comprehension areas of the posterior superior temporal lobe, including Wernicke's area, as you would any other utterance in a conversation. The resulting encoding is represented as an embedding vector that serves as a proxy for the corresponding pattern of activity in the language comprehension areas.

We model this biological process using some version of the standard NLP encoder-decoder architecture. For concreteness, assume we use a transformer- or BERT-style deep network that implements a variation on the idea of Baddeley's phonological loop. The network encodes the spoken words, performs some intermediate transformations on the encoding, and then decodes the resulting embedding vector as a sequence of words. Those words are then spoken, whether out loud or to yourself as in the case of inner speech.

The intermediate transformations, implemented as stages in the transformer stack, are trained to search for analogies that map the input to the output. The input in this case is the encoded description of the proposal. The output is an alternative description that is more familiar to you because it is cast in terms of your own vocabulary and the meanings you ascribe to the corresponding words. It is therefore more likely to suit your purposes than the one you just heard. The inputs and outputs are modeled as embedding vectors.
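The learned analogy search can be caricatured as a nearest-neighbor lookup. Assume the heard words are mapped to whichever words in your own familiar vocabulary lie closest in embedding space, measured by cosine similarity. This is only a toy stand-in for trained transformer stages. The 2-D embeddings, the word lists, and the names `cosine`, `rephrase`, `EMBED`, and `FAMILIAR` are all hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rephrase(heard, embed, familiar):
    """Replace each heard word with the most similar word in the
    listener's own familiar vocabulary."""
    out = []
    for word in heard:
        vec = embed[word]
        best = max(familiar, key=lambda f: cosine(vec, embed[f]))
        out.append(best)
    return out


# Hypothetical toy embeddings; a real system would learn these.
EMBED = {
    "leverage": (0.9, 0.1), "use": (1.0, 0.0),
    "ameliorate": (0.1, 0.95), "improve": (0.0, 1.0),
}
FAMILIAR = ["use", "improve"]

print(rephrase(["leverage", "ameliorate"], EMBED, FAMILIAR))  # ['use', 'improve']
```

Words already in the familiar vocabulary map to themselves, so a description that is fully "yours" passes through unchanged.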

The result of these intermediate transformations is then decoded. This decoding is postulated to occur in the frontal cortex and to involve Brodmann areas 47, 46, 45, and 44. These areas include Broca's area, located anterior to the premotor cortex in the inferior posterior portion of the frontal lobe. The decoded words are then spoken out loud, or to yourself in the case of inner speech, thereby completing the phonological loop.

This process could be repeated as many times as is deemed useful, with you now substituting for the person who proposed the original idea, as if playing the telephone game (Chinese Whispers) with yourself – see here for more detail. The entire process illustrates my interpretation of what Lucas means by introspection.
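The repeated loop can be sketched as iteration. In each round, you "hear" your own previous rephrasing and rephrase it again. The loop stops when the description no longer changes or after a fixed budget of rounds. The word-substitution table, `toy_rephrase`, and `inner_speech_loop` are hypothetical placeholders for the encode, transform, and decode pipeline described above.

```python
def inner_speech_loop(utterance, rephrase, max_rounds=10):
    """Repeatedly re-hear your own rephrasing, as in a telephone game
    played with yourself, until the description stops changing."""
    history = [utterance]
    for _ in range(max_rounds):
        new = rephrase(history[-1])
        if new == history[-1]:  # fixed point: nothing left to translate
            break
        history.append(new)
    return history


# Hypothetical one-step substitutions from less to more familiar terms.
SUBSTITUTE = {"memoize": "cache", "cache": "store"}


def toy_rephrase(text):
    return " ".join(SUBSTITUTE.get(w, w) for w in text.split())


for step in inner_speech_loop("memoize the function", toy_rephrase):
    print(step)
```

Note that the final "store the function" has drifted from the original meaning. That drift is the telephone-game effect in miniature, and it is one reason to cap the number of rounds.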

May 12, 2021

%%% Wed May 12 04:57:49 PDT 2021

Missing Mathematics

Where in my brain is the abstract knowledge I've acquired relating to mathematics, computer science, physics, and many other disciplines? I'm not referring to the elusive engram for Dijkstra's shortest path algorithm or Gödel's incompleteness theorem, I'm thinking about the circuits I exercise in writing a program or proving a theorem, and what constitutes the neural substrate for my knowledge of such abstract concepts and methods for their analysis.

It seems to me this sort of procedural and declarative knowledge is not accounted for, and could not easily be accommodated, in the three-level architecture we are considering for the programmer's apprentice project. Neither is it clear that fleshing out the roles of Fuster's hierarchy, the hippocampal formation, or the executive functions associated with the prefrontal cortex would reveal anything substantive to resolve the mystery17.

Hierarchical Models

I began the exercise by reviewing the three-level hierarchy corresponding to our current architecture for the programmer's apprentice (PA). In anticipation of arguing for a missing fourth level, I also prepared a four-level hierarchy as a visual aid accompanying this exercise, including links to supplementary information anchored to the small inset images.

Elsewhere we described the PA's physical environment in terms of a collection of processes running a Python interpreter and an integrated development environment (IDE). Among other services, the IDE provides an interface to the Python debugger pdb.py (PDB) and other developer tools. See here for more detail on the PA environment and its interface with the IDE.
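The sketch below shows how a program can drive Python's standard `pdb` module. It feeds the debugger a scripted sequence of commands and captures everything it prints, roughly the text-in, text-out channel an agent would need. It is only an illustration using documented `pdb.Pdb` constructor arguments (`stdin`, `stdout`, `nosigint`, `readrc`) and `runcall`. It is not the PA's actual IDE interface, which the text does not specify. `buggy_mean` and `run_pdb_script` are hypothetical.

```python
import io
import pdb


def buggy_mean(xs):
    total = sum(xs)
    return total / (len(xs) - 1)  # off-by-one bug: should divide by len(xs)


def run_pdb_script(func, args, commands):
    """Run func under pdb, feeding it a scripted list of debugger
    commands and capturing everything the debugger prints."""
    stdin = io.StringIO("\n".join(commands) + "\n")
    stdout = io.StringIO()
    debugger = pdb.Pdb(stdin=stdin, stdout=stdout, nosigint=True, readrc=False)
    result = debugger.runcall(func, *args)  # stops at the first line of func
    return result, stdout.getvalue()


# Step over the first line, print a local variable, then continue.
result, transcript = run_pdb_script(buggy_mean, [[1, 2, 3]], ["n", "p total", "c"])
print(result)  # 3.0, although the true mean is 2.0
print(transcript)
```

The transcript interleaves the debugger's location reports with the command outputs. It is the raw material an agent would have to learn to attend to.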

Motor Primitives

The base level, L0, of our current architecture is dedicated to learning how to invoke IDE commands and attend to their inputs and outputs. This level is trained in the curriculum protocol using a variant of motor babbling. Commands and their arguments are sampled randomly, and the system learns forward and inverse models of the underlying dynamics [37, 218, 89].
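The following toy example illustrates motor babbling. It assumes a hypothetical "IDE" whose only state is a cursor line in a five-line file and whose commands are `up`, `down`, and `top`. Commands are issued at random, the resulting transitions are recorded, and two tabular models are fitted. The forward model predicts the next state from a state and command. The inverse model recovers which commands produce a given state change. Tables stand in for the learned networks the text envisions, and every name here is illustrative.

```python
import random

COMMANDS = ["up", "down", "top"]  # hypothetical command set


def step(state, command):
    """Toy environment dynamics: move a cursor within lines 0..4."""
    if command == "up":
        return max(state - 1, 0)
    if command == "down":
        return min(state + 1, 4)
    return 0  # "top"


def babble(n_samples, seed=0):
    """Issue randomly sampled commands; record (state, command, next_state)."""
    rng = random.Random(seed)
    state, transitions = 0, []
    for _ in range(n_samples):
        command = rng.choice(COMMANDS)
        next_state = step(state, command)
        transitions.append((state, command, next_state))
        state = next_state
    return transitions


def fit_models(transitions):
    """Tabular forward model (state, command) -> next_state and
    inverse model (state, next_state) -> set of commands achieving it."""
    forward, inverse = {}, {}
    for s, c, s2 in transitions:
        forward[(s, c)] = s2
        inverse.setdefault((s, s2), set()).add(c)
    return forward, inverse


forward, inverse = fit_models(babble(500))
# Predicted result of "down" from line 2 (None if that pair was never sampled).
print(forward.get((2, "down")))
# Commands observed to move from line 1 to line 0; "up" and "top" both can.
print(sorted(inverse.get((1, 0), set())))
```

The inverse model maps to a set rather than a single command because several commands can produce the same state change. This ambiguity is one reason the two models are learned separately.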

Simple Instructions

The next level, L1, of the architecture is responsible for learning how to execute commands and examine the relevant output and error messages in preparation for deciding what to do next. Depending on the outcome, that means either doing nothing, when the problem is resolved, or providing the information necessary to guide conditional branching.
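Here is a minimal sketch of the execute, examine, and branch cycle. It assumes that a command is running a code snippet and that "examining the output" means classifying any exception. The outcome categories and the repair actions chosen in `decide_next` are hypothetical placeholders, not a specification of L1. Only run trusted snippets this way, since `exec` runs arbitrary code.

```python
def execute_and_examine(source):
    """Run a (trusted) code snippet and summarize the outcome."""
    try:
        exec(compile(source, "<snippet>", "exec"), {})
    except SyntaxError as err:  # includes IndentationError
        return ("syntax_error", err.lineno)
    except NameError as err:
        return ("name_error", str(err))
    except Exception as err:  # any other runtime failure
        return ("runtime_error", type(err).__name__)
    return ("resolved", None)


def decide_next(outcome):
    """Branch on the examined outcome: stop if resolved, else pick a repair."""
    kind, detail = outcome
    if kind == "resolved":
        return "stop"
    if kind == "syntax_error":
        return f"inspect line {detail}"
    if kind == "name_error":
        return "check imports and spelling"
    return f"open debugger on {detail}"


for snippet in ["x = 1", "x = ", "y = undefined_name", "1 / 0"]:
    print(repr(snippet), "->", decide_next(execute_and_examine(snippet)))
```

The `resolved` outcome corresponds to the "do nothing" case in the text, and every other outcome carries just enough detail to select a branch.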

Coordinated Behavior

The penultimate level, L2, involves the assistant observing the programmer execute common code-repair workflows. The assistant records the resulting sequences of state-action-reward triples in an external memory network modeled after the hippocampus, such as a differentiable neural computer (DNC) or a bank of LSTMs. At a subsequent time, analogous to the onset of non-rapid-eye-movement (NREM) sleep, the recorded sequences are replayed to produce general-purpose subroutines.
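A deliberately simple stand-in for record and replay is shown below. Episodes of (state, action, reward) triples are stored in a plain list rather than a DNC or LSTM bank. An offline "sleep" pass then counts action subsequences that recur across rewarded episodes and promotes the frequent ones to candidate subroutines. Frequency counting is a swapped-in heuristic, not the learning mechanism the text has in mind. The class name, action names, and the `length` and `min_count` parameters are hypothetical.

```python
from collections import Counter


class EpisodicMemory:
    """Hypothetical stand-in for an external memory: stores episodes as
    lists of (state, action, reward) triples."""

    def __init__(self):
        self.episodes = []

    def record(self, episode):
        self.episodes.append(list(episode))

    def replay(self, length=3, min_count=2):
        """Offline 'sleep' pass: count recurring action sequences across
        rewarded episodes and return the frequent ones as subroutines."""
        counts = Counter()
        for episode in self.episodes:
            if sum(r for _, _, r in episode) <= 0:
                continue  # only consolidate workflows that paid off
            actions = [a for _, a, _ in episode]
            for i in range(len(actions) - length + 1):
                counts[tuple(actions[i:i + length])] += 1
        return [seq for seq, n in counts.most_common() if n >= min_count]


memory = EpisodicMemory()
memory.record([("s0", "run", 0), ("s1", "read_traceback", 0),
               ("s2", "open_file", 0), ("s3", "edit", 1)])
memory.record([("t0", "run", 0), ("t1", "read_traceback", 0),
               ("t2", "open_file", 0), ("t3", "rerun", 1)])
print(memory.replay())  # [('run', 'read_traceback', 'open_file')]
```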

Composing Programs

At this point in training, the apprentice certainly can't be said to understand programs or programming. It does, however, have a rudimentary working knowledge of how to employ the IDE to identify a certain class of buggy programs in the same way that a baby acquires a working knowledge of how its muscles control its limbs. The objective here is to describe how the apprentice might learn to understand programming well enough to compose new programs on its own and repair a much broader class of programs and bugs. In order to do so, we consider adding another level, L3, to our three-level hierarchy.

Flexible Behavior

The architecture shown in Box 2 of Merel et al [177] is not flexible enough to support such agent-directed learning because it fails to account for what is arguably the most powerful computational asset of the human brain, namely the extensive white matter tracts that facilitate the flow of information and support its integration across functionally differentiated distributed centers of sensation, perception, action, cognition, and emotion [183].

The cortico-thalamic pathways controlled by the striatum and basal ganglia rely on highly structured representations that summarize perceptual state. These representations constrain the way in which such information is transferred between cortical and subcortical regions, preserving its inherent topographical structure in the process. This arrangement facilitates variable binding in prefrontal and motor cortical working memory.