Research Discussions

The following log contains entries starting several months prior to the first day of class, involving colleagues at Brown, Google and Stanford, invited speakers, collaborators, and technical consultants. Each entry contains a mix of technical notes, references and short tutorials on background topics that students may find useful during the course. Entries after the start of class include notes on class discussions, technical supplements and additional references. The entries are listed in reverse chronological order with a bibliography and footnotes at the end.

Personal Assistants

The main focus of the following entries is on digital assistants that collaborate with humans to write software by making the most of their respective strengths. This account of my early involvement in building conversational agents at Google emphasizes my technical interest in enabling continuous dialogue, resolving ambiguity and recovering from misunderstanding, three critical challenges in supporting effective human-computer interaction.

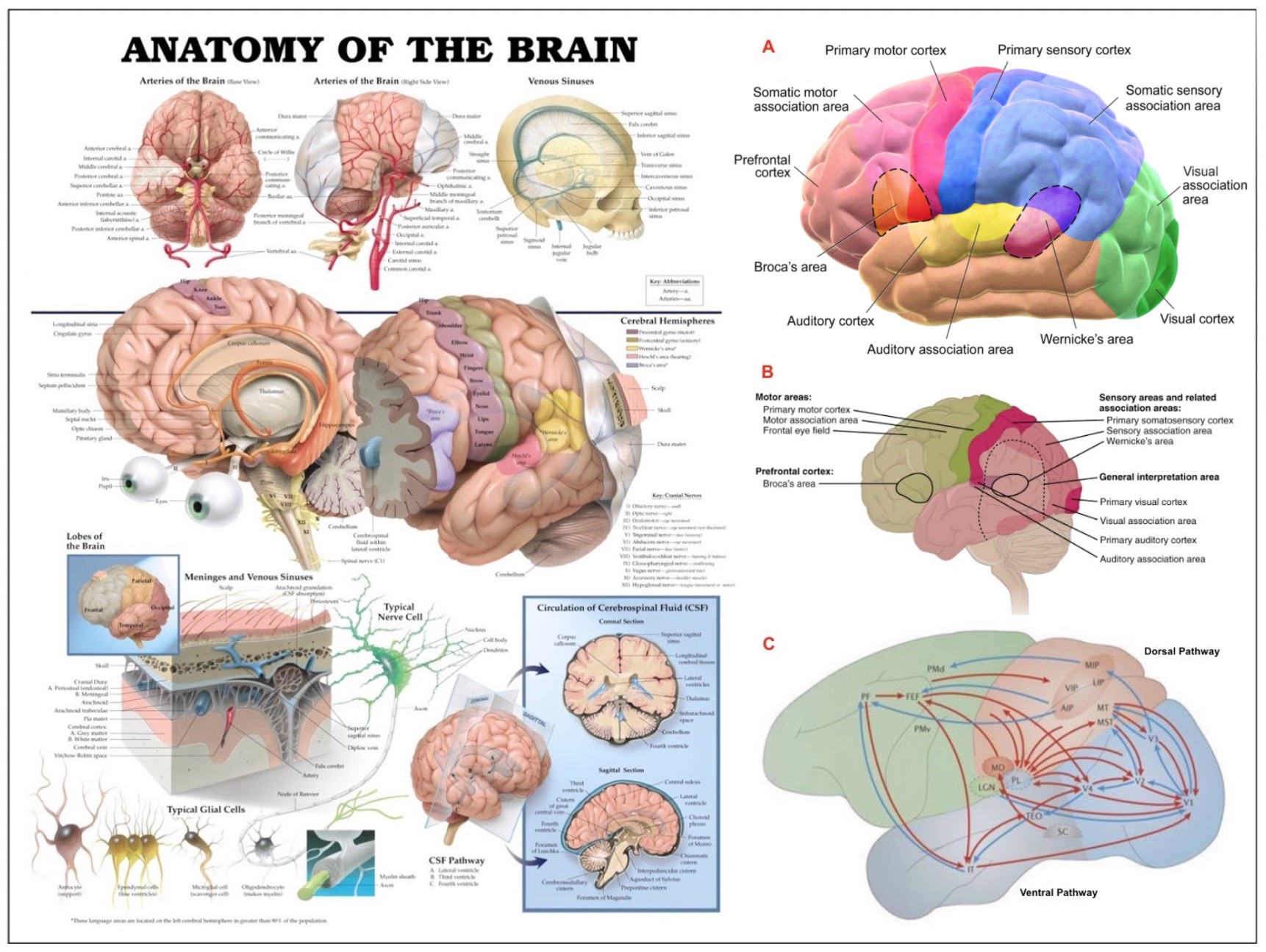

The earliest notes included below survey research in neuroscience relating to attention, decision making, executive control and theory-of-mind reasoning. The footnotes and bibliography provide an introduction to a cross section of recent research in artificial neural networks, machine learning and cognitive neuroscience that is deemed most likely to be relevant to class projects. This material is previewed in the second introductory lecture.

Class Discussions

August 31, 2018

%%% Fri Aug 31 05:57:47 PDT 2018

Chapter 8 of [87] takes a close look at the differences between the brains of humans and other primates with the motivation of trying to understand what evolutionary changes have occurred since our last common ancestor in order to enable our sophisticated use of language1. There is much to recommend in reading the later chapters of [87] despite their somewhat dated computational details. In particular, Deacon's treatment of symbols and symbolic references is subtle and full of useful insights, including an abstract account of symbols that accords well with modern representations of words, phrases and documents as N-dimensional points in an embedding space. His analysis of perseveration2 in extinction and discrimination reversal tasks following selective frontal ablations is particularly relevant to frontal cortex function [36, 234].

That said, I found Deacon's — circa 1998 — analysis of frontal cortex circuits lacking in computational detail, though perhaps providing the best account one could expect at the time of its writing. I suggest you review the relevant entries in this discussion list as well as the lectures by Randall O'Reilly and Matt Botvinick in preparation for reading this chapter. You might also want to check out a recent model of frontal cortex function arrived at through the perspective of hierarchical predictive coding by Alexander and Brown [4].

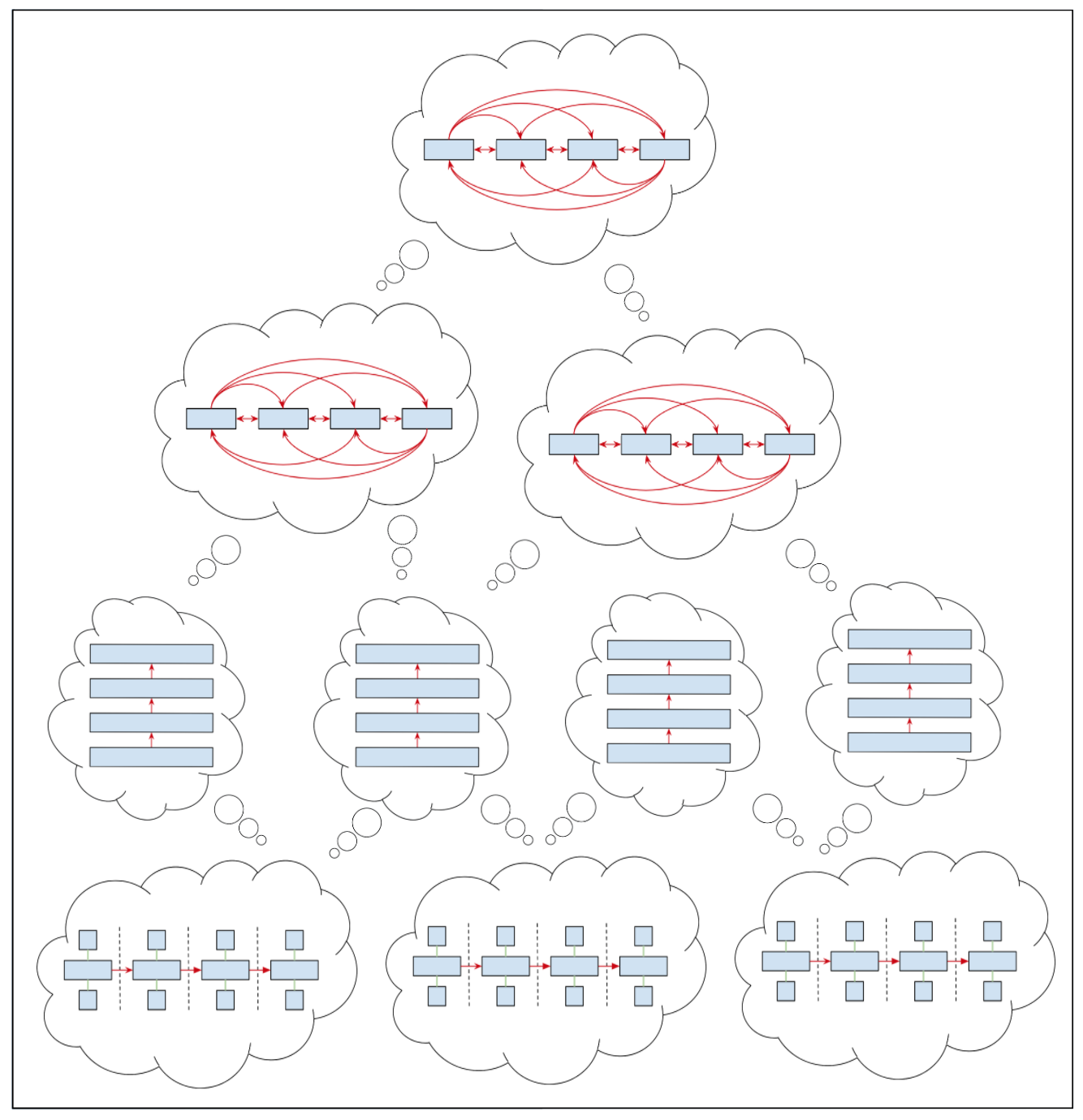

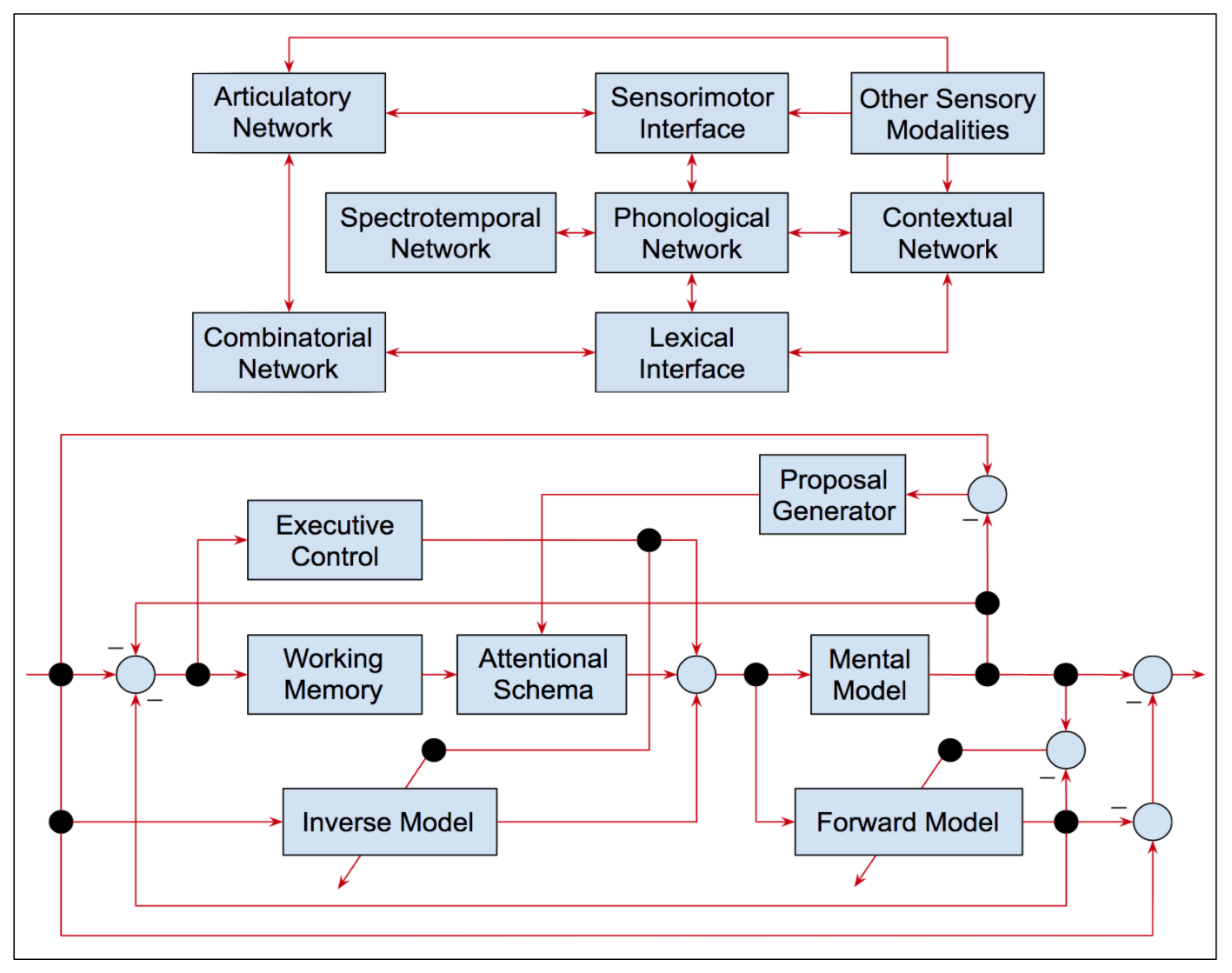

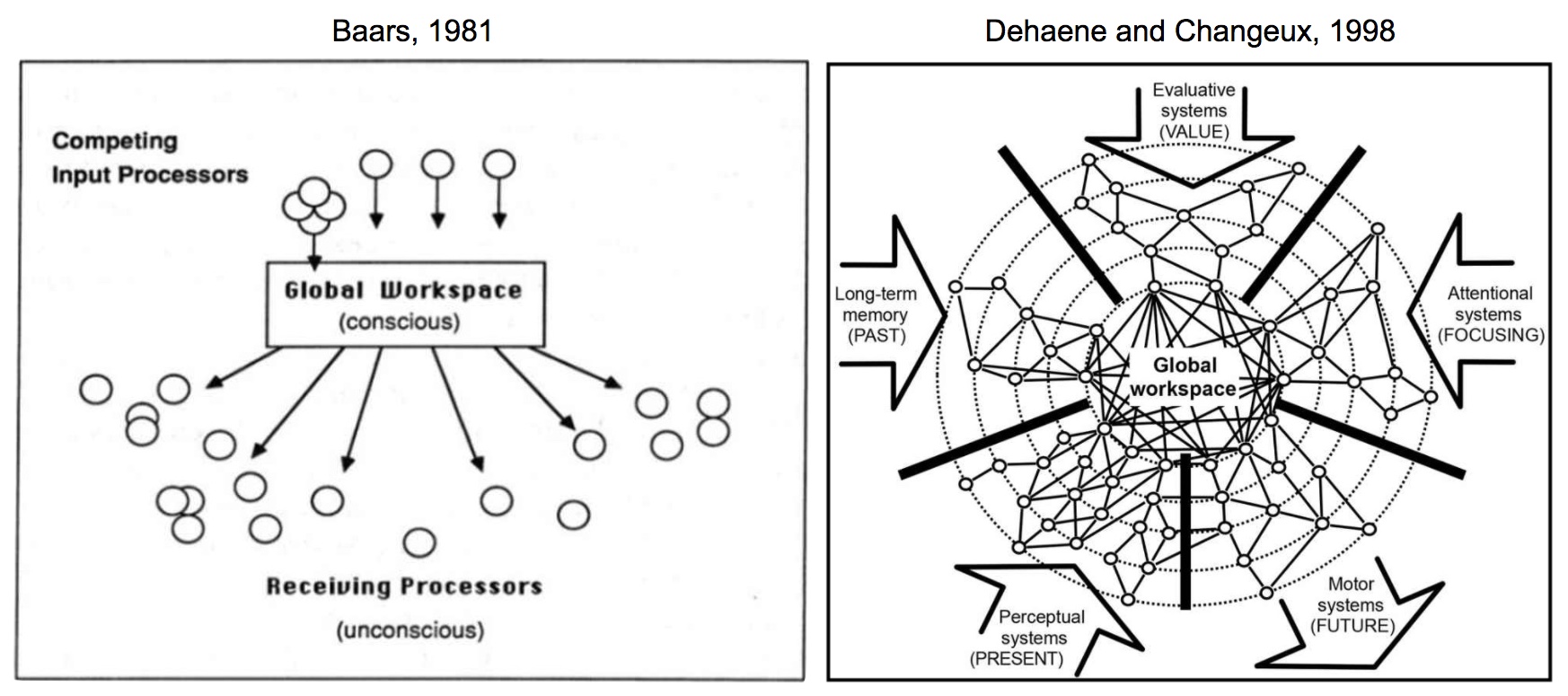

For those of you familiar with artificial recurrent neural networks, O'Reilly and Frank [331, 204] present a computational model of working memory (WM) based on the prefrontal cortex (PFC) and basal ganglia (BG) abbreviated as PBWM in the remainder of this entry. In his presentation in class, O'Reilly compares the PBWM model with the LSTM model of Hochreiter and Schmidhuber [218, 217] extended to include forgetting weights as introduced by Gers et al [154, 153]. In the PBWM model illustrated in Figure 48, the neural circuits in the PFC are gated by BG circuits in much the same way as hidden layers with recurrent connections and gating units are used to maintain activations in the LSTM model3.

Given the considerable neural chatter in most neural circuits such as the posterior cortex, Deacon mentions that it is important for complicated tasks requiring symbolic manipulation to be able to accurately and robustly maintain these representations in memory. Apropos of these characteristics of PFC working memory, O'Reilly mentions (00:22:45) that the gated neural circuits in the PFC are actively maintained so that they can serve much the same purpose as the registers employed to temporarily store operands in the arithmetic and logic unit (ALU) of a conventional von Neumann architecture computer in preparation for applying program-selected operators to their respective program-selected operands. During his presentation in class, Randy also answered questions and discussed his research on developing biologically-based cognitive architectures4.

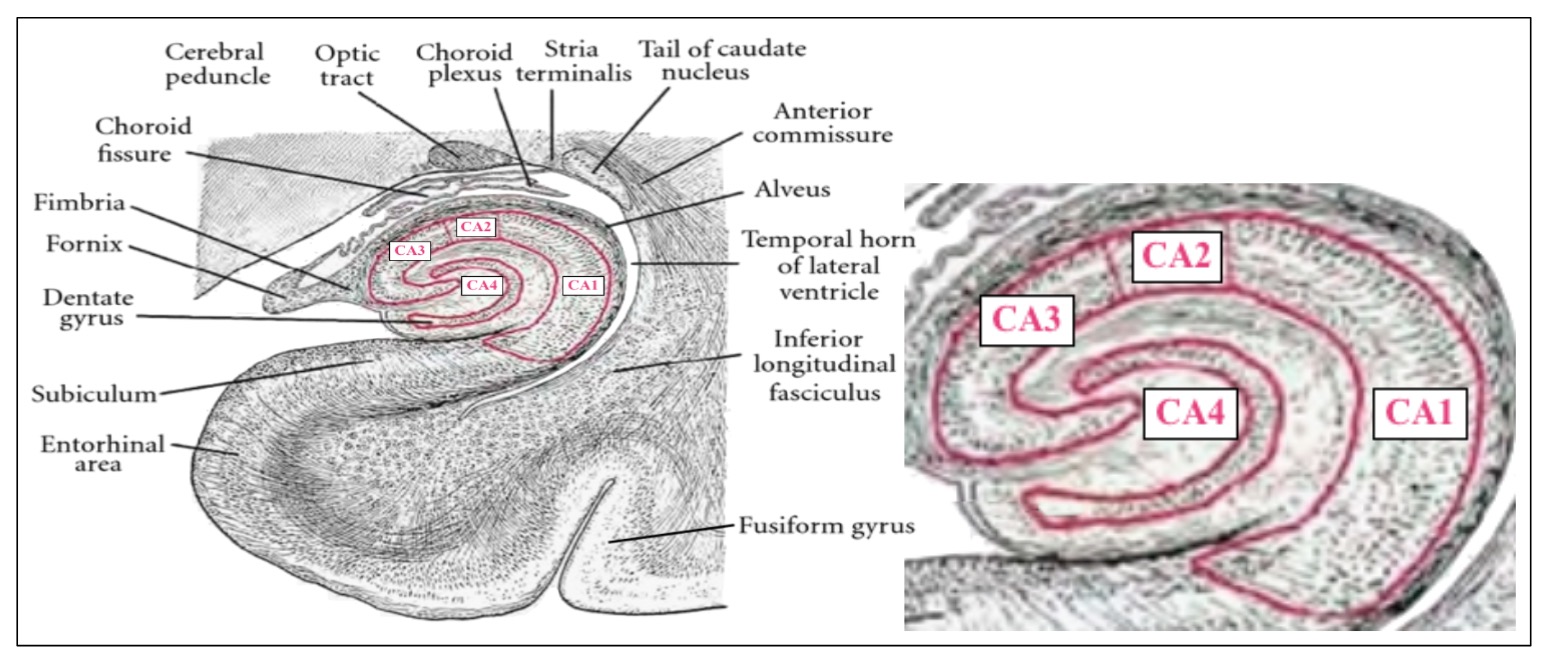

The hippocampus can be thought of as another level in the memory hierarchy that facilitates temporary storage of information that is likely to be relevant in subsequent computations or that has already been used in such computations and may be needed again later for additional computations. The hippocampus plays the role of a high-capacity memory cache and does not require the overhead of active maintenance as in the case of the BG gated neural circuits implementing WM in the PFC. It is perhaps worth noting that in building artificial systems modeled after PBWM, assuming we understand the basic principles and can engineer synthetic analogs, we could design a hybrid architecture that would be significantly superior to the biological system by engineering better technology to sustain active memory and avoid degradation due to noisy activations5.

August 29, 2018

%%% Wed Aug 29 4:53:47 PDT 2018

Almost all of the sixteen entries added to this document during August focus on the problem of bootstrapping basic language skills for the programmer's apprentice. The topics include: August 27 — abstract and fine-grained levels of specificity; August 25 — categories of hierarchical referential association; August 23 — relationship between animal signaling and language; August 19 — human language and procedural versus skill learning; August 17 — tractable targets for collaborative programming; August 13 — signaling as the basis for language development; August 11 — neuroscience of cognitive and language development; August 7 — ontological, categorical and relational foundations; August 4 — simulation-grounded and embodied language learning. We made progress on every one of these topics and it is worthwhile — as well as intellectually and emotionally cathartic — to take stock from time to time and celebrate what we have learned as opposed to being despondent about the seemingly infinite number of things we have yet to learn and may end up being crucially relevant in our present endeavor.

We learned valuable lessons from diverse fields, including cognitive and developmental psychology, computational linguistics, etymology, ethology, human evolutionary biology and computational and systems neuroscience. The work of Terrence Deacon was a particularly satisfying discovery as was my rediscovery of the work of Konrad Lorenz and Nikolaas Tinbergen whose research was first pointed out to me by Marvin Minsky in 1978 leading to my interest in AI. Deacon's theories on the origins of human language fundamentally changed the way I think about how language is acquired and how it has co-evolved within human society. Deacon's work also forced me to reconsider Chomsky's work on language [72] and Steven Pinker's [348] sympathetic view regarding universal grammar and disagreement with respect to Chomsky's skepticism about its evolutionary origins.

Deacon argues that the evidence for a universal grammar is not compelling — see Page 106 in [87]. He suggests a much simpler explanation for how children quickly learn languages, namely that the rules underlying language can be acquired efficiently through trial and error by making a relatively high proportion of correct guesses. Natural languages evolved to encourage a relatively high proportion of correct guesses by exploiting affordances that are built into the human brain. He motivates this explanation with the analogy that the ubiquitous object-oriented (WIMP) interface based on windows, icons, menus and pointing made it possible for humans to quickly learn how to use personal computers without reading manuals or spending an inordinate amount of time discovering how to accomplish their routine computing tasks. The details require over 500 pages.

In addition to the above conceptual and theoretical insights, we identified half a dozen promising technologies for bootstrapping language development including: [364] — scheduled auxiliary control for learning complex behaviors with sparse rewards from scratch; [15] — interpreting language at multiple levels of specificity by grounding in hierarchical planning; [209] — grounded language learning in a simulated robot world for bootstrapping semantic development; [238] — maximizing many pseudo-reward functions mechanism for focusing attention on extrinsic rewards; [210] — teaching machines to read, comprehend and answer questions with no prior language structure. By combining lessons learned from these recent papers and a dozen or so highly relevant classic papers mentioned in earlier entries, I believe we can efficiently learn a basic language facility suitable to bootstrap collaborative coding in the programmer's apprentice

To be clear, what I mean by "bootstrapping a basic language facility" includes the following core capabilities at a minimum: basic signaling including pointing, gesturing and simple performative6 speech acts such as those mentioned in this earlier entry; basic referencing including a facility with primary referential modes — iconic, indexical, symbolic — and their programming-relevant variants; basic interlocutory skills for providing and responding to feedback pertaining to whether signals and references have been properly understood.

In addition, the training protocol should require minimal effort on the part of the programmer by relying on three key technology components: (i) a word-and-phrase-embedding language model trained on a programming-and-software-engineering-biased natural language text corpus, (ii) a generative model for amplifying a small corpus of relevant utterances to produce a larger corpus that spans the space of possibilities afforded by the instrumented IDE along with a large collection of target-language-specific code fragments, and (iii) an automated curriculum-style [37, 39] training protocol that enables the early (incremental) developmental stages described by Deacon [87] and extensively studied in developmental child psychology7. While I fully expect that implementing such a protocol will be an engineering challenge, I feel reasonably confident that it can be done given a team of engineers skilled in working with the technologies of modern deep neural networks.

August 27, 2018

%%% Sun Aug 26 3:59:19 PDT 2018

This entry concerns two issues relating to grounding language for collaborative endeavors such as the programmer's apprentice. The first issue concerns the importance of hierarchy and abstraction in understanding and conveying instructions and the second looks at the problem of reference at a deeper level than discussed in the previous log entry to reveal the relationships between and difficulty learning different types of reference. We begin with the observation that the participants in collaborative projects often employ language at multiple levels of abstraction in order to convey instructions. Building on earlier work introducing the notion of abstract Markov decision process [166] (AMDP) which, in turn, builds upon Dietterich's MAXQ model [121, 120] that we've talked about elsewhere in these discussion notes, Arumugam et al address the implications of this observation in their recent arXiv paper [15]9.

The authors write that: "[h]umans can ground natural language commands to tasks at both abstract and fine-grained levels of specificity. For instance, a human forklift operator can be instructed to perform a high-level action, like "grab a pallet" or a low-level action like "tilt back a little bit." While robots are also capable of grounding language commands to tasks, previous methods implicitly assume that all commands and tasks reside at a single, fixed level of abstraction. Additionally, methods that do not use multiple levels of abstraction encounter inefficient planning and execution times as they solve tasks at a single level of abstraction with large, intractable state-action spaces closely resembling real world complexity. In this work, by grounding commands to all the tasks or subtasks available in a hierarchical planning framework, we arrive at a model capable of interpreting language at multiple levels of specificity ranging from coarse to more granular."

Existing approaches map between natural language commands and a formal representation at some fixed level of abstraction. Arumugam et al leverage the work of MacGlashan et al [298] that decouples the problem of decomposing abstract commands into modular subgoals and grounding the language used to communicate instructions by using a statistical language model to map between language and robot goals, expressed as reward functions in a Markov Decision Process (MDP). As a result, the learned language model can transfer to other robots with different action sets so long as there is consistency in the task representation (i.e., reward functions). Arumugam et al note, however, that "MDPs for complex, real-world environments face an inherent tradeoff between including low-level task representations and increasing the time needed to plan in the presence of both low- and high-level reward functions." and their paper offers a solution to this problem.

I appreciate how the authors have framed the problem and leveraged the earlier work in [166], but I'm not entirely satisfied with the way in which they map between words in language and specific reward functions [298]. It seems as though there must be some way to learn this mapping. Specifically, it seems there might be a more elegant solution using some form of Differentiable Neural Computer / Neural Turing Machine [169] solution, perhaps leveraging some of the ideas in the recent paper [471] by Greg Wayne and his colleagues at DeepMind that directly addresses the problem of dealing with partially observable Markov decision processes — you might want to review Greg's presentation in class that you can find here.

%%% Mon Aug 27 04:25:46 PDT 2018

Effective bootstrapping in the case of the programmer's apprentice problem involves simultaneously solving several problems relating to space of possible actions the agent can select from at any given moment in time. Perhaps the simplest and most straightforward problem involves dealing with very large — discrete rather than continuous — combinatorial action spaces 10. In addition, actions are related to one another hierarchically in terms of the specificity of the activities the enable and the recurrent nature of the behaviors in which they are deployed. In particular, the specificity and complicity of actions will require training protocols that account for the dependencies between different subproblems since the exploration of one action often requires the exploitation of another.

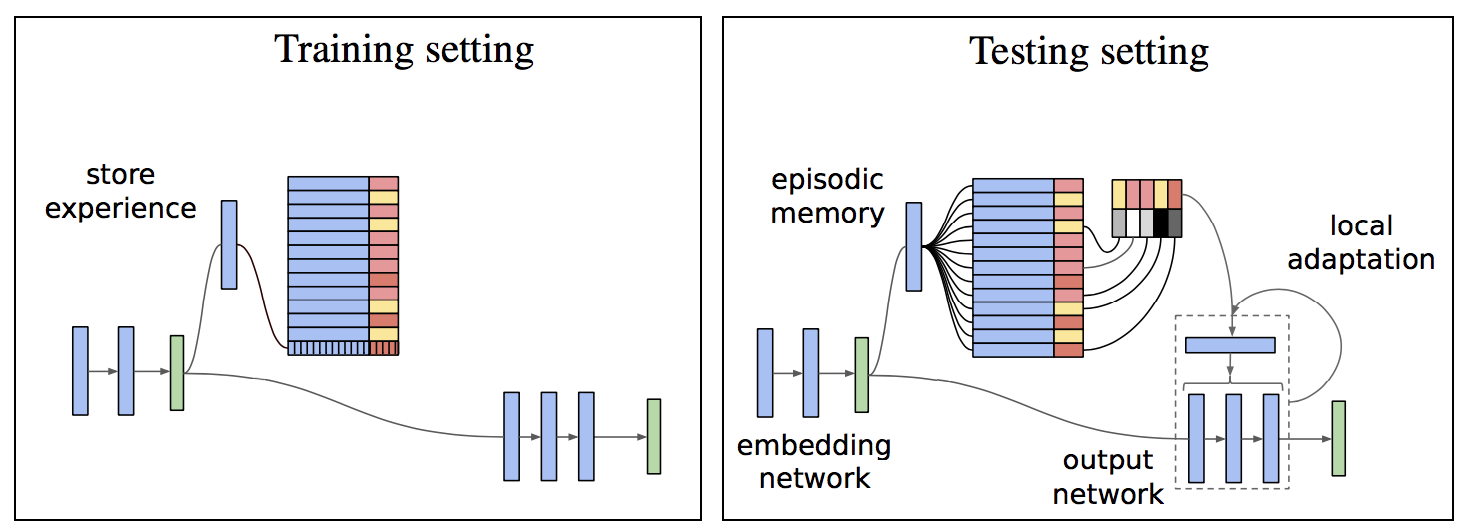

Finally and most fundamentally, actions in partially observable Markov decision processes are likely to have enabling preconditions whose monitoring and prediction will require tracking different state variables. Several of these problems have solutions that rely on managing episodic memory and are addressed in the following work: [471] — learning to decide what episodic information to store in memory based on unsupervised prediction; [357] — neural episodic control of reinforcement learning to incorporate new experience into our policies; [413] — memory-based parameter adaptation dealing with the non-stationarity of our immediate environment. Integrating these technologies to develop a cohesive and comprehensive solution to the above problems is, needless to say, a substantial, though I believe tractable, engineering challenge.

August 25, 2018

%%% Sat Aug 25 11:50:37 PDT 2018

The previous entry consisted of excerpts from Terrence Deacon [87] describing Charles Sanders Peirce's three categories of referential associations: iconic, indexical, and symbolic. In this entry, we build on that foundation to explain how these referential forms depend hierarchically and upon one another. All three of Peirce's referential associations are the result of interpretive processes. The interpretive process that generates an iconic reference is "none other than what we call recognition (mostly perceptual recognition, but not necessarily). Breaking down the term re-cognition says it all: to 'think [about something] again.' Similarly, representation is to present something again. Iconic relationships are the most basic means by which things can be re-presented. It is the basis on which all other forms of representations are built." It is at the bottom of the interpretive hierarchy.

| |

| Figure 48: A schematic diagram depicting the internal hierarchical relationships between iconic and indexical reference processes. The probability of interpreting something as iconic of something else is depicted by a series of concentric domains of decreasing similarity and decreasing iconic potential among beliefs. Surrounding objects have a decreasing capacity to serve as icons for the target object as similarities become unobvious. The form of the sign stimulus (S) elicits awareness of a set of past stimulus memories (e.g., mental "images") by virtue of stimulus generalization processes. Thus, any remembered object (O) can be said to be re-presented by the iconic stimulus. Similarly each mental image is iconic in the same way; no other referential relationship need necessarily be involved for an iconic referential relationship to be produced. Indexical reference, however, requires iconic reference. In order to interpret something as index, at least three iconic relationships must be also recognized. First, the indicating stimulus must be seen as an icon of other similar instances (the top iconic relationships); second, instances of its occurrence must also correlate (arrows) with additional stimuli either in space or time, and these need be iconic of one another (the bottom iconic relationships); and third, past correlations need to be interpreted as iconic of one another (indicated by the concentric arrangement of arrows). The index of interpretation is thus the conjunction of three iconic interpretations, with one being a higher-order icon than the other two (i.e., treating them as parts of a whole). As pointed out in the text, this is essentially the kind of reference provided by a conditioned response — Figure 3.2 adapted from Deacon [87] Page 79. | |

|

|

Having established the foundational role of iconic references, Deacon points out the importance of repeated correlation between pairs of iconic references that constitute evidence of their co-occurrence as a higher-order level of iconicity, providing the basis for estimating the probability that one might cause the other. He suggests that "the responses we develop as a result of day-to-day associative learning are the basis for all indexical interpretations, and that this is the result of a special relationship that develops among iconic interpretive processes. It's hierarchic. Prior iconic relationships are necessary for indexing a reference, but prior indexical relationships are not in the same way necessary for iconic reference." The hierarchic dependency of indices on icons is graphically depicted in Figure 3.2 of Deacon [87] — reproduced here as Figure 48.

| |

| Figure 49: A schematic depiction of the construction of symbolic referential relationships from indexical relationships. This figure builds on the logic depicted in Figure 48 (Figure 3.2 in Deacon [87]) but in this case the iconic relationships are only implied and the indexical relationships are condensed into single arrows. Three stages in the construction of symbolic representations are shown from the bottom to top. First, a collection of different indices are individually learned (varying strength indicated by darkness of arrows). Second, systematic relationships between index tokens (indexical stimuli) are recognized and learned as additional indices (gray arrows linking indices). Third, a shift (reversal of indexical arrows) in mnemonic strategy to rely on relationships between tokens (darker arrows above) to pick out objects indirectly via relationships between objects (corresponding to lower arrow system). Individual indices can stand on their own in isolation, but symbols must be part of a closed group of transformations that links them in order to refer, otherwise they revert to indices — Figure 3.3 adapted from Deacon [87] Page 87. | |

|

|

Deacon goes on to suggest that "[t]he problem with symbol systems, then, is that there is both a lot of learning and unlearning that must take place before even a single symbolic relationship is available. Symbols cannot be acquired one at a time, the way other learned associations can, except after a reference symbol system is established. A logically complete system of relationships among the set of symbol tokens must be learned before the symbolic association between any one symbol token and an object can even be determined. The learning step occurs prior to recognizing the symbolic function, and this function only emerges from a system; it is not invested in any individual sign-object pairing.” To make his point Deacon recounts the work of Wolfgang Köhler [257] who described experiments with chimpanzees in which to reach a piece of fruit they had to "see" problem in a new way. Kohler set his chimps the problem of retrieving a banana suspended from the roof of the cage out of reach, given only a couple of wooden boxes that when stacked one upon the other could allow the banana to be reached.

Köhler found that the solutions were not intuitively obvious to a chimpanzee, who would often become frustrated and give up for a long period. During this time the chimp would play with the boxes, often piling them up, climbing on them, and then knocking down. At some point, however, the chimp eventually appeared to have recognized how this fit with the goal of getting at the banana, and then quite purposefully maneuver the boxes into place and retrieve the prize. Deacon writes that "[w]hile not the sort of insight depicted in cartoons as the turning on of a lightbulb, what goes on inside the brain during moments of human insight may simply be a more rapid covert version of the same, largely aimless object play. We recognize these examples of insight solely because they involve a recoding of previously available but unlinked bits of information." Most insight problems do not involve symbolic recoding, merely sensory recoding: "visualizing" the parts of a relationship in a new way. Transference of a learning set from one context to another is in this way also a kind of insight.

At this point, I could attempt to generate a summary of the remainder of Chapter 3, but chapter is appropriately titled "Symbols Aren't Simple" and I wholeheartedly agree with the author's assessment. Not only do I agree, but I think Deacon has done an excellent job of providing a succinct but appropriately nuanced job of explaining the relevant (complicated) issues in a manner easily accessible to a computer scientist. And so, I highly recommend that you read the first three chapters of The Symbolic Species. For those of you who are still curious but reluctant to spend the time to follow my recommendation, I suggest you read the footnote at the end of this paragraph that primarily consists of excerpts from Chapter 3, and make a concerted effort to understand Figure 3.3, reproduced in these class discussion notes as Figure 49 and including Deacon's original annotations11 .

August 23, 2018

%%% Thu Aug 23 03:46:20 PDT 2018

Excerpts from Terrence Deacon's The Symbolic Species [87] relating to the semiotic theory of Charles Sanders Peirce. On the relationship between animal signaling in the form of calls and gestures and human language as traditionally characterized:

Treating animal calls and gestures as subsets of language not only reverses the sequence of evolutionary precedents, it also inverts their functional dependence as well. We know that the non-language communication used by other animals is self-sufficient and needs no support from language to help acquire or interpret. This is true even for human calls like sobbing or gestures like smiling. In contrast, however, language acquisition depends critically on nonlinguistic communication of all sorts, including much that is as innately prespecified as many human counterparts. Not only that, but extensive nonverbal communication is essential for providing the scaffolding on which most day-to-day language communication is supported. In conversations, demonstrations, and explanations using words we make extensive use of prosody, pointing, gesturing, and interactions with other objects and other people to disambiguate our spoken messages. Only with the historical invention of writing has language enjoyed even partial independence from this nonlinguistic support. In the context of the rest of communication, then, language is a dependent stepchild with very odd features. — Page 58 [87]

Reference characterized in terms of generating cognitive actions:

Ultimately, reference is not intrinsic to a word, sound, gesture or hieroglyph; it is created by the nature of some response to it. Reference derives from the process of generating some cognitive action, an interpretive response; and differences in interpretive responses not only can determine different references for the same sign, but can determine reference in different ways. We can refer to such interpretive responses as interpretants, following the terminology of the late 19th century American philosopher Charles Sanders Peirce. In cognitive terms, an interpretant is whatever enables one to infer the reference from some sign or signs in their context. Peirce recognized that interpretants cannot only be of different degrees of complexity, but they can also be categorically different kinds as well; moreover, he did not confine his definition only to what goes on in the head. Whatever process determines reference qualifies as an interpretant. The problem is to explain how differences in interpretants produce different kinds of reference, and specifically what distinguishes the interpretants required for language. — Page 63 [87]

On the different modes of reference as described by Charles Sanders Peirce:

In order to be more specific about differences in referential form, philosophers and semioticians have often distinguished between different forms of referential relationships. Probably the most successful classification of representational relationships was, again, provided by the American philosopher Charles Sanders Peirce. As part of a larger scheme of semiotic relationships, he distinguished three categories of referential associations: icon, index, and symbol. These terms were, of course, around before Pierce and have been used in different ways by others since. Pierce confined the use of these terms to describing the nature of the formal relationship between the characteristics of the sign token and those of the physical object represented as a first approximation these are as follows: icons are mediated by similarity between sign and object, indices are mediated by some physical or temporal connection between sign and object, and symbols are mediated by some formal or merely agreed-upon link irrespective of any physical characteristics of either sign or object.These three forms of reference reflect classical philosophical trichotomy of possible modes of associative relationship: (a) similarity, (b) contiguity or correlation, and (c) law, causality, or convention. [...] Peirce took these insights and rephrased the problem of mind in terms of communication, essentially arguing that all forms of thought (ideas) are essentially communication (transmission of signs), organized by an underlying logic (or semiotic, as he called it) that is not fundamentally different for communication processes inside or outside of brain. If so, it might be possible to investigate the logic of thought processes by studying the sign production and interpretation processes in more overt communication. — Page 70 [87]

While it helps to have someone like Peirce provide insight in the form of a compact, intuitive taxonomy, you may not want to apply his categorization directly since such taxonomies tend to make fine grained distinctions that are not born out in the data as a consequence of additional distinctions not available due limitations in sensing or sampling. The biological classification (taxonomy) formulated by Carl Linnaeus being a textbook case in point. The purpose here is to motivate our emphasis on non-linguistic signaling and reference as the scaffolding upon which to build a more general and robust language facility and the means for acquiring this scaffolding as part of a focused developmental strategy implemented as a form of hierarchical reinforcement learning.

August 19, 2018

%%% Sun Aug 19 04:28:13 PDT 2018

I've been thinking and reading a lot lately about early language development, and was dissatisfied with the explanations and theories I encountered in the literature. I happened to have a copy of Terry Deacon's Incomplete Nature: How Mind Emerged from Matter among my books [88], and noticed several references to an earlier book entitled The Symbolic Species: The Co-evolution of Language and the Brain [87]. I read the first four chapters comprising part one of the book focusing on language and found his theory compelling from a computational / cognitive-developmental perspective, despite the evolutionary-developmental origins for much of the evidence.

Both books are relatively long and academic — 500-plus pages each. I'll give you a flavor by quoting from this interview with David Boulton of Children of the Code in which Deacon talks about how The Symbolic Species "captures this notion that we are a species that in part has been shaped by symbols, in part shaped by what we do. Therefore, our brain is going to be very different in some regards than other species' brains in ways that are uniquely human." He notes that there is unlikely to be "a nice, neat direct map between what we see in the external world of language and what we see inside brains. In fact, the map may be very, very confused and very, very different inside the brain, that is, how the brain does what we see externally in language."

Deacon goes on to say that "[t]he logic of brains is this "embryology logic" that's very old, very conserved. The logic of language is something that is brand new in the world of evolutionary biology. [...] Language clearly forces us to do something that for other species is unnatural and it is that unnaturalness that's probably the key story. One might want to ask: 'What is so different about language? What are the aspects of language that are so different from what other species do?' [...] For me, one of the things I think is really exciting about languages is this aspect of how it reflexively changes the way we think. I think that's one of the most amazing things about being a human being." And, then, the following extended quote captures the critical difference between skill learning and procedural learning, with the former being common throughout the animal kingdom and the latter being quite rare:

Language is unique in the following sense: that it uses a procedural memory system. Most of what I say is a skill. Most of my production of the sounds, the processing of the syntax of it, the construction of the sentence, is a skill that I don't even have to think of. It's like riding a bicycle. I don't even have a clue of how I do it. [...] "On the other hand, I can use this procedural memory system because of the symbols that it contains, the meanings and the web of meanings that it has access to, that are also relatively automatic, to access this huge history of my episodes of life so that in one sense it's using one kind of memory to organize the other kind of memory in a way that other species won't have access to without this." [...] "The result is we can construct narratives in which we link together these millions and millions of episodes in our life in which you can ask me what happened last month on a particular day and if I can think through the days of the week and the things I was doing when, I can slowly zero in on exactly what that episodic memory is and maybe even relive it in some sense."

One reason that this jumped out at me — and Deacon comes back to this point at greater length within The Symbolic Species — is that words are symbolic, easily-remembered, precise-as-memory-aids and can — with some effort — be made to be categorically precise in ways that complement conventionally-connectionist, contextually-coded memories and thereby provide a basis for mathematical and logical thinking. While I'm pretty sure that Deacon didn't mean it as I interpreted it, the following excerpt caught my eye and current scientific /engineering interest in developing neural prosthetic devices:

I think the crucial problem with all of language as we use it today is the problem with automatization. How do we take something that has so many variables, so many possible connections and combinatorial options, and do it without having to think about it? How do we turn this complicated set of relationships into a skill, ultimately, that can be run, in effect, as though it was a computation?

That is indeed an interesting question and one that is critically important in developing systems that translate everyday procedural language into code that runs on non-biological computational substrates. It also underscores Deacon's view of what needs explaining. Deacon discounts the importance of grammar in explaining the evolutionary importance of language: "When we strip away the complexity, only one significant difference between language and non-language communication remains: the common, everyday miracle of word meaning and reference" — Page 43 [87]. It is easier to build syntax, recursion and grammar on the foundation of word meaning and reference than the other way around. This perspective also accords well with our current understanding of the postnatal neural and cognitive development of infants in their first three years.

August 17, 2018

%%% Fri Aug 17 06:17:44 PDT 2018

I asked Rishabh Singh for "examples of recent research that might help in working toward the sort of tractable, reasonably circumscribed capability I'm looking to develop in a prototype apprentice system as a proof of concept for the more general utility of developing digital assistants capable of usefully collaborating with human experts. The list of references along with links to several startups that are building technologies related to the accompanying preprints is provided in this footnote12.

August 13, 2018

Mon Aug 13 04:32:06 PDT 2018

The previous log entry focused on the neural and cognitive development of humans in the first three years. It was clear from the AAAS that there is more to language development than speaking to your baby. Specifically, there are signaling protocols that we refer to as signal acts in analogy to J.L. Austin's theory of speech acts corresponding to locutionary, illocutionary, and perlocutionary utterances. Within evolutionary biology, signaling theory refers to — generally non-verbal — communication between individuals both within and across species.

The basis for such communication is shared experience in the form of a sufficiently complex, causally coherent, reasonably accessible and transparent shared environment. In the case of the programmer's apprentice, this sharing is facilitated in part by having common interests and shared purpose, but most of all by language and access to the integrated development environment as a simulated world in which to share experience. We believe it will be substantially easier — and ultimately more valuable — for the apprentice to achieve a specialized competence in language by interacting with the programmer, than it would be to achieve a general competence and (possibly) reduce the up-front burden of training the apprentice.

That said, we can't expect a human programmer to be willing to raise the apprentice with an investment in time anything like a parent invests in training a child to communicate in natural language. There are a number of strategies we can employ to expedite language learning. Training a statistical language model on a large corpus of suitably enriched prose, is one relatively easy step we can take. Likewise, we can train a basic dialog manager using a large corpus of conversational data. Unfortunately, semantic understanding is significantly more challenging since it requires grounding language in the physics of programs running on conventional computers.

Our approach is patterned after the idea of semantic bootstrapping that was covered briefly in an earlier discussion. However, there is much more to achieving linguistic competence than simply engaging in listening and speaking. The apprentice needs to learn how to engage with the world in a collaborative setting. In order to bootstrap linguistic competence, we have to assume some degree of innate pre-linguistic signaling competence. Here is a partial list of basic signal acts that are prerequisite — in some form — for infant language learning:

point — involves gaze, head or hand movement: highlighting, underlining, blinking;

track — involves same motor activities as pointing: cursor movement, animation;

refer — combination of activities establish reference: speaking plus pointing;

notice — register auditory, visual, tactile changes: interrupt-driven exceptions;

select — display the alternatives and register selection: referring plus pointing;

resolve — emphasize the ambiguity and select resolution: noticing plus selecting;

There are also sensory and motor activities involving mimicry, miming and imitating sounds — onomatopoeia — that play an important role prior to achieving even minimal mastery of speech. For the programmer's apprentice, we could provide an extensive language model at the outset, but a smaller vocabulary might facilitate early learning and disambiguation as suggested in work of Jeff Elman and Elissa Newport. The IDE buffer shared between the programmer and apprentice containing the code under development would be memory mapped in a differential neural computer [169] (DNC) to enable the apprentice to access code in much the same way that a mouse employs hippocampal place cells to recall spatial landmarks — see Banino et al [27].

A DNC could also serve as a differential neural dictionary (DND) as described in Pritzel et al [358] as the basis for exploiting episodic memory or associating names and phrases, e.g., "the variable named counter'', with specific tokens in the abstract syntax tree representation of the code in the IDE buffer or locations in the memory-mapped virtual display. Such DNC representations could index complex representations of code fragments as embedding vectors providing support for the sort of operations described by Randall O'Reilly in his lecture on how the basal ganglia, hippocampus and prefrontal cortex work together to enable the precise application of complex actions — also featured in Figure 48.

August 11, 2018

%%% Sat Aug 11 05:35:29 PDT 2018

This entry attempts to summarize what cognitive neuroscience can tell us about the first three years in the cognitive development of normal, healthy human infants. What are the major milestones? What structural and functional changes occur at different stages and how are they manifest in behavior? What if an infant achieves a particular milestone earlier or later than is common? We start by assembling the basic, quantifiable facts concerning development in primate brains that are most likely to be relevant in understanding the connection between neural structure and function. A summary of the facts most relevant to our discussion of whether and how the early stages of brain development in infants might facilitate language learning as discussed in [76, 435, 133] is available here and in this footnote13.

In the spirit of how we acquire new skills and accommodate new ways of thinking, watch this fifty-six minute excerpt from a lecture in the Neuroscience & Society series, sponsored by The Dana Foundation and the American Association of the Advancement of Science. The remainder of the presentation focuses on the National Institute of Child Health and Human Development at NIH and is primarily concerned with US federal funding of related research and public outreach. There are a lot of related talks and science shows to be found on YouTube and much of it out of date or poorly researched. In particular, I encountered several presentations making spurious claims about functional specialization in the two hemispheres of the cerebral cortex. For a conceptually more nuanced viewpoint on the separate hemispheres / separate brains / separate functions / hemispheric specialization theory of brain organization check out this footnote14.

August 9, 2018

%%% Thu Aug 9 05:43:11 PDT 2018

I'm in the midst of learning more about developmental language acquisition 15 in the hope of better understanding the related machine learning problems for the programmer's apprentice application and better evaluating the ML papers we looked at earlier this week and the week before. If you're not familiar with the relevant literature in cognitive and developmental psychology, you might start by learning about the early stages in child development and early cognitive development in particular.

In scanning a small sample of recent papers that attempt to ground language acquisition from video and simulated worlds [492, 490, 480, 491], there were very few references outside of AI, ML and NLP, and most of those were to papers in computational linguistics. The reviewers for Hill et al 2018 ICLR submission [212] were less forgiving and suggested a number of interesting papers, including Jeffrey Elman [133], Elissa Newport [325] and Eliana Colunga and Linda Smith [76]. I found that the papers by Thomas and Karmiloff-Smith [434, 435] provided useful background on how such studies are carried out.

Jeffrey Elman [133] made some interesting observations that were pointed out in the ICLR reviews. Specifically, Elman trained networks to process complex sentences involving relative clauses, number agreement, and several types of verb argument structure. He noted that "training fails in the case of networks which are fully formed and 'adultlike' in their capacity" and that "[t]raining succeeds only when networks begin with limited working memory and gradually 'mature' to the adult state. This result suggests that rather than being a limitation, developmental restrictions on resources may constitute a necessary prerequisite for mastering certain complex domains. Specifically, successful learning may depend on starting small."

Elman's work involved relatively early technology for developing and training neural networks, but probably warrants looking into more carefully and possibly attempting to replicate using more recent models and training protocols. In general, I recommend that you direct your own inquiry into the relevant background in an effort to temper the infectious enthusiasms of researchers attempting to ground language acquisition in video and simulated worlds. I'm going to spend a few days conducting my own due diligence and attempting to fill in the details required to apply some of the ideas we considered in this discussion to the programmer's apprentice problem.

August 8, 2018

%%% Wed Aug 8 04:07:00 PDT 2018

Jaderberg et al [239] define an auxiliary control task c ∈ C by a reward function r(c) : S × A → R, where S is the space of possible states and A is the space of available actions. The underlying state space S includes both the history of observations and rewards as well as the state of the agent itself, i.e. the activations of the hidden units of the network. Note that the activation of any hidden unit of the agent's neural network can itself be an auxiliary reward. The authors note that in many environments reward is encountered rarely, incurring a significant delay in training feature extractors adept at recognizing states that signify the onset of reward. They define the notion of reward prediction as predicting the onset of immediate reward given some historical context.

In describing their method of Scheduled Auxiliary Control (SAC-X), Riedmiller et al [364] introduce four main principles:

Every state-action pair is paired with a vector of rewards, consisting of (typically sparse) externally provided rewards and (typically sparse) internal auxiliary rewards.

Each reward entry has an assigned policy, called an intention in the paper and following account, that is trained to maximize its corresponding cumulative reward.

There is a high-level scheduler that selects and executes the individual intention policies with the goal of improving the performance of the agent on the external tasks.

Learning is performed off-policy — and asynchronously from policy execution — and the experience between intentions is shared — to use information effectively.

The auxiliary rewards in these tasks are defined based on the mastery of the agent to control its own sensory observations which, in a robotic device, correspond to images, proprioception, haptic sensors, etc., and, in the case of the programmer's apprentice, correspond to highlighting and other forms of annotation, variable assignments, standard input (STDIN), output (STDOUT) and error (STDERR), as well as changes to the contents of the IDE buffer including the AST representation of programs currently in use or under development.

Riedmiller et al decompose the underlying Markov M process into a set of auxiliary MDPs { A1, A2, ..., A|C| } corresponding to the auxiliary control tasks mentioned earlier, that share the state, observation and action space as well as the transition dynamics with the main task M, but have separate auxiliary reward functions as defined above. Section 4.1 successfully thwarted my efforts to substantially simplify. Among other subtleties, it describes a reward function that is characterized by an ε-region in state space, a hierarchical reinforcement-learning system that employs a scheduling policy to control both a primary task policy and the set of auxiliary control tasks, and a relatively complex separate objective for optimizing the scheduler. I suggest you set aside an hour and work through the details.

August 7, 2018

%%% Tue Aug 7 04:37:42 PDT 2018

In the previous entry, we considered solutions to what is generally called the bootstrapping problem in linguistics and developmental psychology. We looked at one proposed solution in [209] that draws upon the related literature [299] and, in particular, the work of linguists and cognitive scientists including Steven Pinker [347] on semantic bootstrapping which proposes that children acquire the syntax of a language by first learning and recognizing semantic elements and building upon that knowledge.

Hermann et al [209] attempts to model the different stages of development including how the system acquires its initial ontological foundation, learns properties of the things it has encountered and been provided names for, discovers the affordances that such things offer for their accommodation and manipulation, and begins the long process of inferring the the relationships between ontological entities that are required to serve its needs. The result is a policy-driven approach to bootstrapping that roughly recapitulates the stages of language development in children.

Now we take a look at the experimental protocol and setup for the simulation experiments described in Appendix A of [364]. The authors present a training method that employs active (learned) scheduling and execution of auxiliary policies that allow the agent to efficiently explore its environment and thereby excel in environments characterized by having sparse rewards using reinforcement learning. The important difference between this and the earlier work of Hermann et al [209] is that Riedmiller et al largely ignore the related work in linguistics and attempt to directly leverage the inherent reward structure of the problem.

The approach of Riedmiller et al builds upon the idea of options due to Sutton et al [425] and a related architecture called HORDE for the efficient learning of general knowledge from unsupervised sensorimotor interaction by Sutton et al [424]. The HORDE architecture consists of a large number of independent reinforcement learning sub-agents each of which is responsible for answering a single predictive or goal-oriented question about the world, and thereby contributing in a factored, modular way to the system's overall knowledge [424]. See also the related work of Jaderberg et al [239] mentioned in an earlier entry in this log.

To understand Riedmiller et al, it helps to read the introduction to Jaderberg et al [239] contrasting the conventional reinforcement-learning goal of maximizing extrinsic reward with the goal of predicting and controlling features of the sensorimotor stream — referred to as pseudo rewards [239] or intrinsic motivations [397] — with the objective of learning better representations. The remaining details in Hermann et al and Riedmiller et al focus on how the system controller is specified in terms of a high-level scheduler / meta-controller that selects actions from a collection of policies intended to pursue different representational — ontological, categorical and relational — goals by using a combination of general extrinsic reward and policy-specific intrinsic motivations to guide action selection.

August 6, 2018

%%% Mon Aug 6 04:57:14 PDT 2018

This entry is intended to take stock of what we've learned so far in terms of bootstrapping the first steps in learning to program and thinking about embodied cognition applied to language production as code synthesis. We focus on the training protocols described in Hermann et al [209] and Riedmiller et al [364]. Given that we begin with Hermann et al, you might want take a look at Appendices A and B in [209] and check out this video demonstrating the behavior of agents trained using the algorithm described in [209].

The first thing to point out is that training amounts to a good deal more than the agent just initiating random movements and wandering around aimlessly in a simulated environment. Indeed, the authors are quick to point out that they tried this and it doesn't work. Language learning proceeds using a combination of reinforcement (reward-based) and unsupervised learning. In particular, the agent pursues auto-regressive objectives by carrying out tasks that are applied concurrently with the reward-based learning and that involve predicting or modeling various aspects of the agent's surroundings following the general strategy outlined in Jaderberg et al [239]16.

A temporal-autoencoder auxiliary task is designed to illicit intuitions in the agent about how the perceptual properties of its environment change as a consequence of its actions. To strengthen the agent's ability reconcile visual and linguistic modalities the authors designed a language-prediction auxiliary task that estimates instruction words given the visual observation. This task also serves to make the behavior of trained agents more interpretable, since the agent emits words that it considers best to describe what it is currently observing. The authors report the fastest learning was achieved by an agent applying both temporal-autoencoder and language-prediction tasks in conjunction with value replay and reward prediction.

The authors also demonstrate experimentally that before the agent can exhibit any lexical knowledge, it must learn skills that are independent of the specifics of any particular language instruction. They construct more complex tasks pertaining to other characteristics of human language understanding, such as the generalization of linguistic predicates to novel objects, the productive composition of words and short phrases to interpret unfamiliar instructions and the grounding of language in relations and actions as well as concrete objects. It should be possible to use a meta-controller to drive a suitable training policy implemented as an hierarchical task-based planner [321] building on our earlier dialogue management work [97].

August 4, 2018

%%% Sat Aug 4 06:03:09 PDT 2018

Deriving a clear description of the most difficult challenges faced in attempting to solve a particularly vexing problem is often the most important step in actually solving that problem. Such a description once clearly articulated often reveals the necessary insight to effectively unleash our engineering know-how and in the process also suggests what tools and resources are necessary to make progress. For some time now, I've been searching for such a description or trying to deduce it from first principles without success. Yesterday I stumbled on what may serve as such a description for the programmer's apprentice problem as a consequence of simply knowing what keywords to search for evidence of relevant progress. I found that evidence in two relatively recent papers from researchers at DeepMind.

There already exists some very interesting work in this direction. A 2017 arXiv preprint from DeepMind entitled "Grounded Language Learning in a Simulated 3D World" looks like it was written for the programmer's apprentice [209]. The introduction begins, "We are increasingly surrounded by artificially intelligent technology that takes decisions and executes actions on our behalf. This creates a pressing need for general means to communicate with, instruct and guide artificial agents, with human language the most compelling means for such communication. To achieve this in a scalable fashion, agents must be able to relate language to the world and to actions; that is, their understanding of language must be grounded and embodied. However, learning grounded language is a notoriously challenging problem in artificial intelligence research. Here we present an agent that learns to interpret language in a simulated 3D environment where it is rewarded for the successful execution of written instructions."

The subsequent paragraph is even more enticing: "Trained via a combination of reinforcement and unsupervised learning, and beginning with minimal prior knowledge, the agent learns to relate linguistic symbols to emergent perceptual representations of its physical surroundings and to pertinent sequences of actions. The agent's comprehension of language extends beyond its prior experience, enabling it to apply familiar language to unfamiliar situations and to interpret entirely novel instructions. Moreover, the speed with which this agent learns new words increases as its semantic knowledge grows. This facility for generalizing and bootstrapping semantic knowledge indicates the potential of the present approach for reconciling ambiguous natural language with the complexity of the physical world." The paper is worth the effort to read carefully, as are related papers cited in the bibliography by Jeffrey Siskind, Deb Roy and Sandy Pentland [30, 370, 402, 401].

A related paper [212] by a subset of the same authors, was submitted to 2018 ICLR. Unfortunately, the submitted paper was not accepted for publication, but a PDF version is available on OpenReview. Fortunately for those interested in this line of research, there is a resurgence of work on this problem spanning natural language processing, cognitive science and machine learning. The "Embodied Question Answering" work of Devi Parikh mentioned in class and featured in this NIPS workshop entitled "Visually-Grounded Interaction and Language" provides a very interesting and relevant perspective. Devi's entry on the 2018 CS379C course calendar includes a sample of her papers and presentations — of particular interest is her work on visual dialogue [84, 85].

A more recent paper by Riedmiller et al [364] proposes a new learning paradigm called Scheduled Auxiliary Control (SAC-X) that seeks to enable the learning of complex behaviors — from scratch — in the presence of multiple sparse reward signals. "SAC-X is based on the idea that to learn complex tasks from scratch, an agent has to learn to explore and master a set of basic skills first. Just as a baby must develop coordination and balance before she crawls or walks — providing an agent with internal (auxiliary) goals corresponding to simple skills increases the chance it can understand and perform more complicated tasks" — see their bibliography for an excellent sample of related work and here for a more detailed intuitive description.

In the above mentioned description, the authors claim their approach is "an important step towards learning control tasks from scratch when only the overall goal is specified" and that their method "is a general RL method that is broadly applicable in general sparse reinforcement learning settings beyond control and robotics." I've appended some statements that the programmer might use to induce the assistant to write or modify code. Some are relatively easy — though none of them trivial for a complete novice. As an exercise, think about how one might stage stage a collection of increasingly more difficult tasks so the assistant could bootstrap its learning. As a more challenging exercise, think about whether it is feasible to automatically stage the learning to achieve mastery of the entire collection of tasks with having to intervene and somehow debug the assistant's understanding:

Highlight in red the variable named str in the body of the for loop in the concatenate function.

Find and highlight where the variable named register is initialized. Call this the current register.

Change the name of variable str to lst everywhere in definition of the the concatenate function.

Add a statement that increments the variable count right after the statement that adds block to queue.

Insert a for statement that iterates over the key, value pairs of the python complements dictionary.

If we think of language as signaling and dialog as programming it makes sense to exploit pointing, highlighting and tracing as interactive explanatory aids, and utterances in pair programming as a form of machine translation or compilation in which the utterances adhere to a form of dialogical precision in their consistent use of logical structure and procedural semantics. In this view, we exploit the relationship between the narrative structure of conversation and the hierarchical task-based planning-view of procedural specification. I'm waving my hands here, but it seems that just as the arrangement of our limbs, muscles and tendons limits our movements to encourage grasping, reaching, walking and running, so too the interfaces to our prosthetic extensions be they artificial limbs, musical instruments or integrated development environments should constrain our activities to expedite the use of these extensions.

%%% Sun Aug 5 05:12:31 PDT 2018

Instead of using an instrument trained to speak in order to write programs, suppose we use an instrument trained to write programs in order to carry out a conversation. The assistant converts each utterance of the programmer into a program that it runs in the IDE. Such programs have the form of hierarchical plans that result in both changes to programs represented in the IDE and new utterances generated in response to what the programmer said. The assistant also generates such plans in pursuing its own programming-related goals, including learning and exploratory code development. Given the examples of relatively simple tasks included above, more complicated tasks are carried out by plans with conditionals and recursive subroutining. Signaling and resolving the identity of entities introduced earlier — but still in the scope of the current conversation, becomes natural, e.g.,

Wrap the expression you just wrote in a conditional statement so the expression is executed only if the flag is set.

Check to see if the variable is initialized in the function parameter list and if not set the default value to zero.

Insert an assignment statement, after the cursor, that assigns the variable you just highlighted to twice its value.

The last of these examples is intended to remind us that learning to program de novo also entails an understanding of basic logic and mathematics as expressed in natural language, e.g., the words "twice" and "double" serve as shorthand for "two times" and the assistant has to learn that the expressions, var = 2 * var, var = var + var, and var += var, all accomplish the same thing — a seemingly trivial feat that underscores the complexity of what we expect the assistant to learn. Our apprentice software engineer also requires, at the very minimum, a rudimentary understanding of basic mathematics and logic, and therefore we either have to train the apprentice in these skills or somehow embody them in its integrated development environment differential neural computer.

Miscellaneous Loose Ends: In his presentation in class, Randal O'Reilly described the hippocampus as a (relatively) fast, high-capacity memory cache that together with the basal ganglia and prefrontal cortex enable serial processing via gating and active task maintenance. Randy contrasted human serial-processing capability with the highly-efficient parallel-processing machinery we share with other mammals, noting that biological serial-processing capacity falls substantially short of conventionally-engineered computing hardware. In their 2015 NIPS paper entitled "Learning to Transduce with Unbounded Memory", Grefenstette et al [184] present new memory-based recurrent networks that implement continuously differentiable analogues of traditional data structures such as stacks and queues that outperform traditional recurrent neural networks on benchmark problems.

August 3, 2018

%%% Fri Aug 3 06:03:09 PDT 2018

As I mentioned on the first of my vacation, given the unfettered opportunity to think about thinking, I want to make the most of it by preparing my mind each day, eliminating distractions and irrelevant ideas, and focusing on as few ideas as makes sense to enable creative synthesis. This morning I've primed my thinking by focusing on the basic ideas behind embodied and situated cognition, and, inadvertently, on a central premise of John Ousterhout's recent book on software design emphasizing the challenge of building useful classes as one of the most important tools for managing complexity in software engineering [334].

Also on my mind is a recent discussion17 with Daniel Fernandes about Daniel Wolpert's comment that the brain evolved to control movement, full stop. One could argue that Wolpert is correct if one takes "evolved" to mean "originally evolved" — or "evolved if only to later digest it upon settling down to a sedentary life" as in the case of the common sea squirt, but his comments land wide of the mark if you are interested in the evolution of the brain as it relates to the evolution of language, sophisticated social engineering and abstract reasoning in Homo sapiens.

Daniel suggested Wolpert's comment might imply that, in order to learn (seemingly) more complicated skills like programming, we might first have to learn a (relatively) complex system, in analogy to a young animal learning to control its body in a physical environment that behaves according to the laws of physics — Newtonian physics provides one among many suitably-rich, well-behaved and reasonably-tractable dynamical systems that might suffice. Daniel suggested OpenAI Gym as one possible source of such dynamics to consider, but there are a number of alternatives indicating this is an idea whose time has come. See Tassa et al [432] for a full account of the simulations available in the DeepMind Control Suite.

Certainly it is our intention that the assistant be embodied, with the IDE as a differentiable neural computer [169] (DNC) so that writing and executing code is an almost effortless extension of thinking. The embodiment, as it were, of the assistant is a prosthetic extension, the computational environment of integrated development environment. The assistant attempts to make sense of everything in terms of its experience using the DNC. Unfortunately, this relies on the programmer having to painstakingly ground its language in this context and explain how everything other than its internal experience interacting with the IDE relates to this context:

Programmer: I have to go to the grocery store to purchase some things for dinner.

Assistant: What is a grocery store?

Programmer: Think of it as a data structure in which you can store physical items.

Assistant: What sort of items?

Programmer: Items of food that humans need to consume in order to remain healthy.

Assistant: What does it mean to purchase?

Programmer: To purchase an item you give the store money in exchange for the item.

The Programmer might explain commerce in terms of inventories, unit price tables, etc. Later when the programmer returns something to the store, she might say that the "purchase" function is invertible. Of course, this is just the beginning in terms of explaining this short exchange. What does it mean to consume something? What is food and what does it mean to remain healthy? How are physical things different from the strings and lists that the programmer employs in writing code. The onus is on the programmer to explain its world in order to teach the assistant rudimentary physics, basic logic, etc., so the programmer can perform relevant analogs in writing and understanding code. There has to be a more efficient method of educating the assistant. The method of simulation grounded language learning offers one possible approach.

August 2, 2018

%%% Thu Aug 2 15:15:25 PDT 2018

Here are a few bits of metaphorical whimsy that may be worth more than I credit: When you see a cup, your brain is already thinking about how to grasp and possibly drink out of that cup. In some very practical sense, the cup is intimately associated with the environmentally-available affordances for its use [158, 157, 29, 28]. When you think, you are essentially writing programs that execute on different parts of your brain. When you speak, you are essentially conveying instructions that run on someone else's brain / computational substrate brain, but they simultaneously echo in your brain.

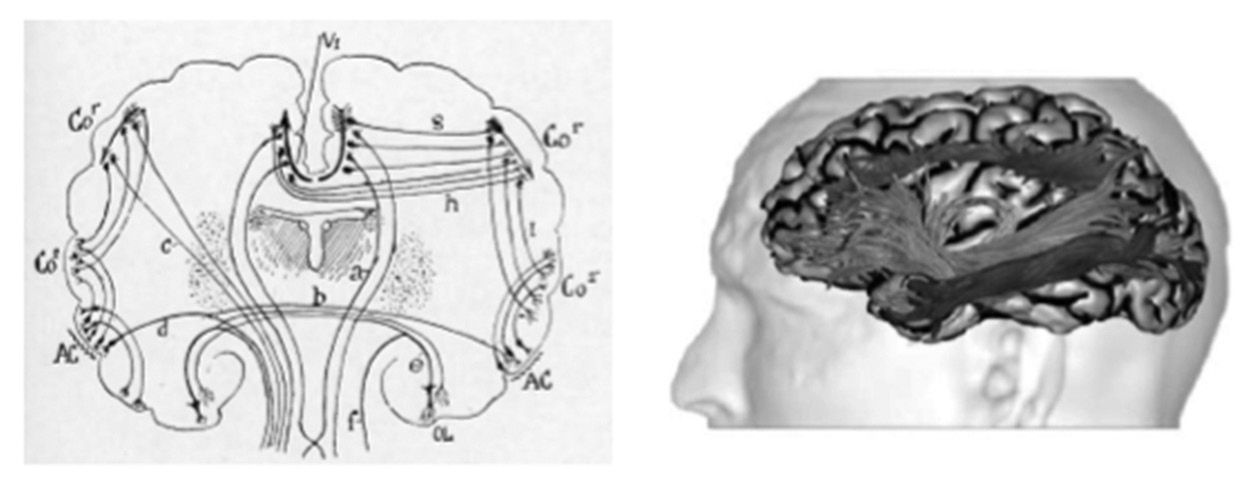

At the micro, meso and macro scale many of the related neural systems are said to be reentrant in the more colloquial sense of the word meaning that something exits a system only to reenter it a some later point either in time or via some alternative interface to the system. This is how Gerald Adelman uses the term to describe his theory of reentrant processing in the brain [130, 390] [source], and less like computer scientists use the term in referring to a computer program or subroutine as being reentrant if it can be interrupted in the midst of its execution and safely be called again ("re-entered") before its previous invocations complete execution [source].

The Gibsonian view of physical, ecological affordances has been extended to metaphysical realms by philosophers and cognitive scientists such as Daniel Dennett [114]. But the notion of embodiment has a much longer and deeper influence in the history ideas [source]. When we read that members of the Royal Society in the Victorian era believed that clockwork animated automata embodied intelligence, we scoff at their naïveté, but their understanding was not that far from the embodied-systems viewpoint espoused by Rod Brooks [56] and others [14, 368] in the late '80s and early '90s.

In salons and soirées throughout Europe the rich and famous would marvel at clockwork automata that mimicked the movements of living things. The orchestrated movement of head and limbs so mirrored the movements of the dancers and animals they were designed to simulate, that people had a hard time not ascribing intelligence to the simple mechanical devices. Where those automata behaved as if aware of their physical surroundings as in the case when two animated dancers would perform some joint maneuver or a simple walking automaton would move its jointed limbs to account for an uneven surface it seemed clear that they must have sensed one another or taken notice of the slope of the surface they walked upon.

It is a leap to suggest that all of the intelligence is built into the articulated limbs of these automata. We are too sophisticated to ignore what we've been told about how the peripheral and central nervous systems work together to orchestrate our complicated behavior, but there is a credible story to be told about how our bodies and their interactions with the world provide the basis for much of our physical understanding of the world, and, by analogy, our metaphorical understanding of many seemingly more complicated and less tangible aspects of our imaginative thinking.

Often this perspective seems at odds with our facility for abstract thought, even though such skills are likely significantly less common among the general population than we might imagine. It is relatively easy to imagine the way in which a concert pianist becomes seemingly inseparable from her instrument; indeed we can observe changes in the somatosensory regions of the brain that map the pianists fingers as well as the secondary sensory and association areas related to processing auditory sensation. The abstract reasoning of mathematicians and physicists seems of a very different order than that of the musician, carpenter or even expert chess player.

Here we assume that the pianist and the mathematician extend their native abilities in much the same way to achieve their respective feats of skill. And just as the piano keyboard, guitar frets, bow and strings of the violin along with music tablature, key signatures, chords progressions, etc become so familiar that the concert pianist becomes inseparable from his or her instrument and the rich repertoire which they inhabit, so too the programmer, mathematician and theoretical physicist builds a virtual machine that embodies and allows them to simulate their abstract worlds in such a way that is viscerally concrete and in many cases leverages or builds upon physical intuition.

In the following, we consider the idea that programming, as with playing a musical instrument at an advanced level, is embodied so that writing and executing code is — to some degree depending on the level of expertise — an extension of thinking, explore the possibility that one might acquire skills through downloading cognitive modules by direct computer-machine interface, and further explore the potential benefits of reentrant coding and its relationship to the the so-called two-streams hypothesis that we explored briefly back in May.

August 1, 2018

%%% Wed Aug 1 5:14:52 PDT 2018

First day of vacation. First real vacation in a very long time. Time for unfettered mental and physical activity: thinking, writing, walking, talking, swimming, meditating, cooking, observing, being. As a culture we attach so little value to such things when they are not in direct service to some articulated purpose. For those few of my students who visit this list beyond the end of class and anyone else for that matter, I suggest you think about this a little so you won't miss out on what is arguably more important to your health, happiness and general well being than anything else you will likely do in life. I'm looking forward to thinking about thinking in addition to lots of other activities less appropriate to bring up in this context.

As described elsewhere in these notes concerning the mathematicians Cedrìc Villani and Andrew Wiles, I'm preparing myself by preparing my mind to hold at, any given time, all and only the pieces of the puzzle I think most relevant to solving puzzle as currently formulated, where it is understood that the formulation will undergo frequent revision. In addition to technical papers and studying various mathematical methods, I'm reading a biography of Charles Babbage detailing his struggle to build a mechanical computing engine and a novel by Nick Harkaway about an artificer who specializes in clockwork automata. When inspired to do so, I'll explain why I think these literary affordances will help in my sabbatical quest.

July 29, 2018

In class we assumed familiarity with current RNN technology including the LSTM and GRU architectures, with an emphasis on the design of encoder-decoder pairs for sequence-to-sequence modeling in machine translation and sequence-to-tree and other structured targets in parsing and, in particular, working with abstract-syntax-tree representations of source code. We alluded to the fact that a number of more recent neural network architectures had superseded LSTM and GRU models with respect to their ability to address the vanishing gradient problem and facilitate attention.

Here I've included a set of tutorials that will help you understand the recent architectural innovations that have largely served to supplant these older models, including the technical and practical reasons that they have proved so useful. For completeness, if you're not already up-to-speed on the basics of RNN architectures, you might want to check out this tutorial on the LSTM and GRU architectures.

Residual Networks introduced by He et al [205] were among the first radical departures from the incumbent LSTM and GRU hegemony demonstrating that you could get the same or better performance from an architecturally simpler model with a considerable reduction in complexity and computational cost by replacing single layers in a simple multi-layer perceptron with residual blocks that first compute a function F of the residual block input x and then employ (rectified linear unit) layers to focus on the relevant new information in F(x) which is then combined with input x to produce the residual block output F(x) + x as shown below from [205]. Check out this tutorial for a more detailed overview of the basic idea and its variants:

|

Residual networks are related to attentional networks [198, 479, 469] and the Transformer architecture of Vaswani et al [450] that takes advantage of this to demonstrate that attentional part of the earlier attentional models is really all you need as made clear in this tutorial. WaveNet [446, 338] is a relatively recent addition to the modern neural network toolkit as nicely demonstrated in this introduction from DeepMind. These architectural innovations have some important computational consequences to take into consideration in thinking about their deployment, the beginnings of which are explored here.

Miscellaneous Loose Ends: During class we studied a number of interesting methods for prediction and imagination-based planning. Greg Wayne's presentation was particularly interesting and worth taking a look at if you haven't already. The paper on the predictron model for end-to-end learning and planning by Silver et al [395] is likewise interesting and this tutorial provides an introduction.

July 27, 2018

The exquisite cellular- and molecular-scale imaging work of Mark Ellisman, a friend and collaborator at UCSD, is featured prominently in this Google Talks presentation by Dr. Douglas Fields from NIH. In this excerpt, the second half of an hour-long presentation, Fields focuses on our rapidly changing understanding of the role of glial cells. His talk goes well beyond the role of microglia that David Mayfield and I have been studying and that was mentioned earlier in this discussion list here. Fields' presentation spans the different roles of glia in both healthy and diseased brains and serves as a whirlwind introduction to a broad swath of important and largely-ignored brain science.

Fields also sketches the historical context focusing on the fierce debate between Camillo Golgi and Ramón y Cajal culminating in their sharing the Nobel Prize for Physiology or Medicine in 1906 [159], and suggesting that these new findings challenge the neuron doctrine championed by Cajal and undermine our current understanding of the brain. If you're not familiar with this relatively recent research, I highly recommend you watch this presentation and check out some the ground-breaking research on astrocytes from Mark Ellisman's group at the National Center for Microscopy and Imaging Research NCMIR.

July 19, 2018

%%% Fri Jul 20 05:19:18 PDT 2018

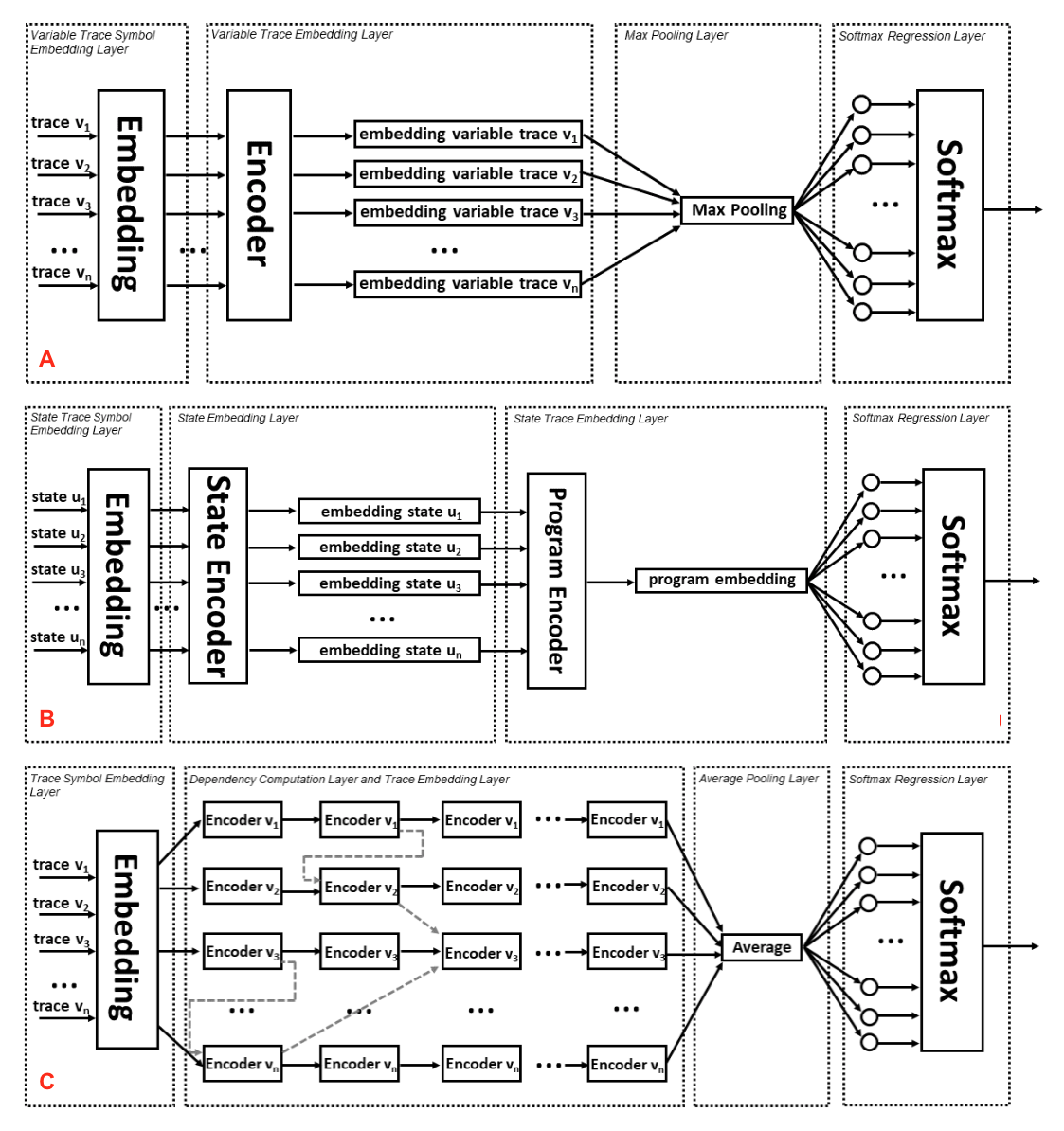

I spent a couple of hours yesterday doing a literature search and reading papers on bootstrapping methods for training dialogue systems, relating to the meta-learning and hierarchical-planning ideas for dialog management covered in this earlier log entry. I've listed a sample of the more interesting papers below and suggest you check out the hierarchical reinforcement-learning architecture described in Figures 1 and 2 of [340] and the EQNET model architecture shown Figure 1 of Allamanis et al [6]. If you're not familiar with the relevant background on semi-Markov decision problems, you might want to read Sutton et al [425].

The traditional approach to training generative dialogue models is to use large corpora. This paper describes a state-of-the-art hierarchical recurrent encoder-decoder model, bootstrapped by training on a larger question-answer pair corpus — see [388]

Example-based dialog managers store dialog examples that consist of pairs of an example input and a corresponding system response in a database, then generate system responses for input by adapting these dialog examples — see [216].

Alternatively, dialog data drawn from different dialog domains can be used to train a general belief tracking model that can operate across all of these domains, exhibiting superior performance to each of the domain specific models — see [318].

Hybrid Code Networks (HCNs) combine RNNs with domain-specific knowledge encoded as software and system action templates to considerably reduce the amount of data for training, while retaining the benefit of inferring a latent representation of dialog state — see [476].

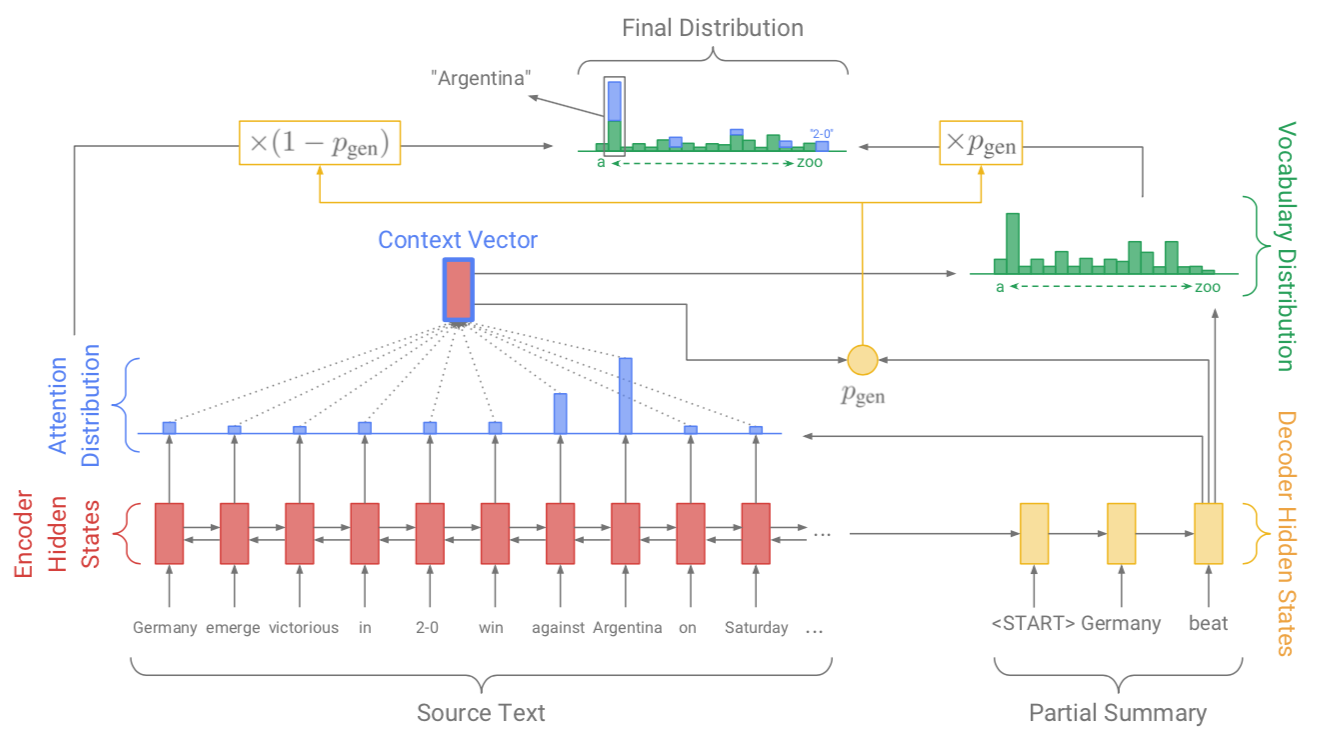

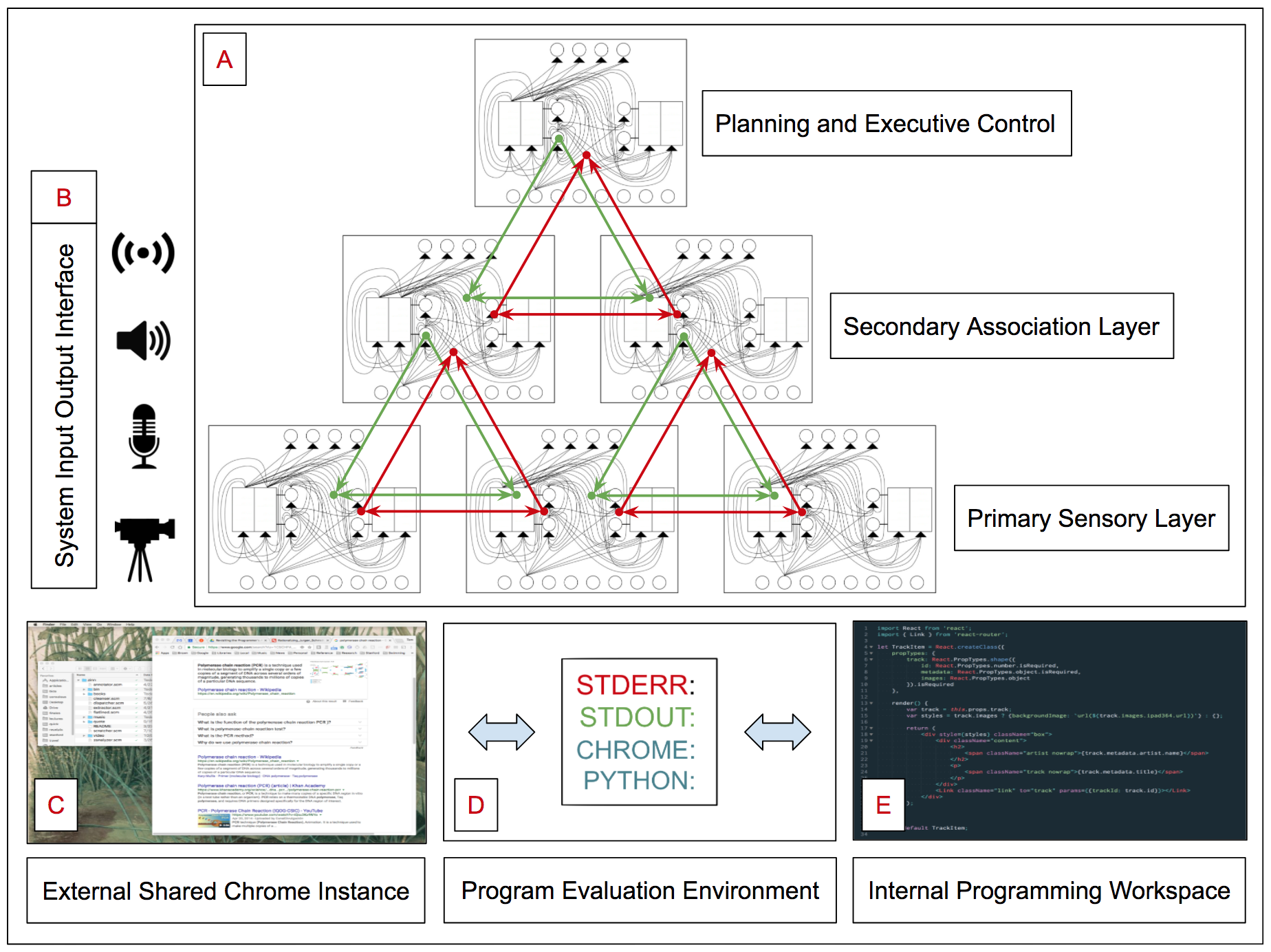

Dialogue management systems for dialogue domains that involve planning for multiple tasks with complex temporal constraints have been shown to gain some advantages from utilizing multi-level controllers corresponding to reinforcement-trained policies — see Figure 47 from [340].

| |