Research Discussions

The following log contains entries starting several months prior to the first day of class, involving colleagues at Brown, Google and Stanford, invited speakers, collaborators, and technical consultants. Each entry contains a mix of technical notes, references and short tutorials on background topics that students may find useful during the course. Entries after the start of class include notes on class discussions, technical supplements and additional references. The entries are listed in reverse chronological order with a bibliography and footnotes at the end. This listing is now closed, but if you would like to follow the research threads spawned by the 2020 class discussions, you are welcome to read about our ongoing work here.

Class Discussions

Welcome to the 2020 class discussion list. Preparatory notes posted prior to the first day of classes are available here. Introductory lecture material for the first day of classes is available here, a sample of final project suggestions here and last year's calendar of invited talks here. Since the class content for this year builds on that of last year, you may find it useful to search the material from the 2019 class discussions available here. Several of the invited talks from 2019 are revisited this year and, in some cases, are supplemented with new reference material provided by the list moderator.

July 4, 2020

%%% Sat Jul 4 03:29:17 PDT 2020

This entry, the last for the 2020 class, focuses on developing working models of various components of the cognitive architectures that we discussed in class this year, demonstrating how these components can be applied to solving interesting problems. In particular, we focus on the problem of exploiting working memory in the frontal cortex to solve a special case of the variable binding problem in service to learning simple rules encoded in sparse distributed representations in a differentiable connectionist architecture. The discussion here is just a start. In coming months, we will dig deeper into this problem as well as explore other related problems in preparation for class next year.

Neural Coding Microarchitectures

%%% Fri Jun 19 05:40:13 PDT 2020

One of the core cognitive capabilities required in solving problems involves keeping track of task-relevant information including what tasks you are working on along with their inputs, intermediate products, current status, and dependencies between tasks, including their order of invocation, all of which are handled by the procedure call stack in a conventional von Neumann architecture.

In the models we consider here the attentional system is responsible for identifying relevant stimuli both internal and external, and the executive control system in prefrontal cortex is responsible for orchestrating the cognitive tasks required to deal with the challenges and opportunities that follow from observations and predictions prompted by the attentional system.

Attention only makes sense if there is more than one thing to attend to and those things are obvious enough to distinguish from the background and interesting enough to warrant your attention. If you are performing a task then presumably by attending to the task you will naturally expand the number of items that you have to keep track of and maintain access to.

Eventually there may be so many items that you run out of short-term memory in which to store those items. Having attended to some item in the process of working on one task and then not being able to recover that item after a span of working on other tasks, you might search for the item in recent episodic memory, but that assumes you know what you are looking for.

Attention, memory and the capacity to perform computations on information stored in memory are all limited resources that depend on one another. In order to perform computations it is necessary to identify and access information in the form of representations that manifest as patterns of activity among ensembles of neurons.

Whether these patterns arise from perception, prediction or the restoration of episodic memories stored in long-term memory, they have to be captured in a stable format and transferred to locations where they can be operated upon by circuits that have been trained to perform particular information processing tasks.

You can think of the basal ganglia and prefrontal cortex as staging cognitive tasks by selecting information relevant to performing a given task and moving that information into specific registers in working memory where various operations can be carried out and the results stored in other registers in working memory.

Summarizing current estimates of the size of working memory in the frontal cortex: there are on the order of 20,000 stripes, each corresponding to approximately 100 interconnected rate-coded neurons; stripes are organized in stripe clusters of approximately 10 stripes; and each stripe can be independently updated and is associated with a corresponding stripe in the striatum.
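To make these estimates concrete, here is a back-of-the-envelope calculation that takes the round figures quoted above at face value and derives the implied neuron and stripe-cluster counts. The variable names are ours and purely illustrative, and the results are order-of-magnitude estimates, not measurements.

```python
# Round figures quoted in the text; treat them as order-of-magnitude estimates.
NUM_STRIPES = 20_000        # stripes in frontal cortex working memory
NEURONS_PER_STRIPE = 100    # interconnected rate-coded neurons per stripe
STRIPES_PER_CLUSTER = 10    # stripes grouped into one stripe cluster

# Implied totals under those assumptions.
total_neurons = NUM_STRIPES * NEURONS_PER_STRIPE
num_clusters = NUM_STRIPES // STRIPES_PER_CLUSTER

print(f"neurons participating in stripes: ~{total_neurons:,}")
print(f"stripe clusters: ~{num_clusters:,}")
```

Under these assumptions there are roughly two million neurons organized into about two thousand independently addressable clusters, which gives a sense of how many "registers" the executive control system might have to manage.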

This sounds straightforward until you realize that all of this staging and moving information about has to be learned and the same substrate used for one task may need to be interrupted and used for another task before returning to completing the first task, and that you never perform exactly the same task twice.

Consider the task of making a cake with a recipe that you've used many times in the past. You might start by setting out all the bowls and other cooking paraphernalia you'll need and then measuring out the ingredients so you can mix the cake batter and start the oven heating to the right temperature for baking.

For every subtask such as measuring out flour, there will be dozens if not thousands of computations required, and for each one of these you will have to load registers, initiate computations and transfer results to other registers, all the while dealing with interruptions and novel problems like substituting unbleached for pastry flour.

Of course you will also likely have thousands of little subroutines, many of which you've practiced many times, but perhaps not in exactly the order that you will need to perform them in making the cake, and so they will have to be adapted and coordinated and their runtime requirements in terms of allocating registers and utilizing specific circuits satisfied.

Basic register allocation and circuit utilization seem too low level to require that an agent learn to directly control them. It may be that there is a base level of control hardwired in the brain that works in the same way that the microarchitecture of a modern computer abstracts from its underlying processor-specific instruction set architecture1. Even if biological brains lack such a microarchitecture, engineers may find it advantageous to provide this level of abstraction to expedite learning.

The focus in this year's class was on exploring architectures motivated by our understanding of brain function. The architectures we developed attempted to integrate many of the cognitive functions generally associated with human cognition. The emphasis was on learning what the brain was doing and not necessarily how it was doing it.

Next year, students will be challenged to develop working models of various components of these architectures, demonstrating how these components can be applied to solving interesting problems. Since developing a complete architecture to solve any nontrivial problem is well beyond the scope of the current state-of-the-art, the trick will be to simplify the rest of the architecture just enough to solve the target application. The next subsection outlines such a project and offers it as a challenge to students working over the summer break. The goal for this summer is to develop a number of similar challenges in preparation for next year's class.

Integrating Basal Ganglia Functionality

%%% Tue Jun 23 15:14:36 PDT 2020

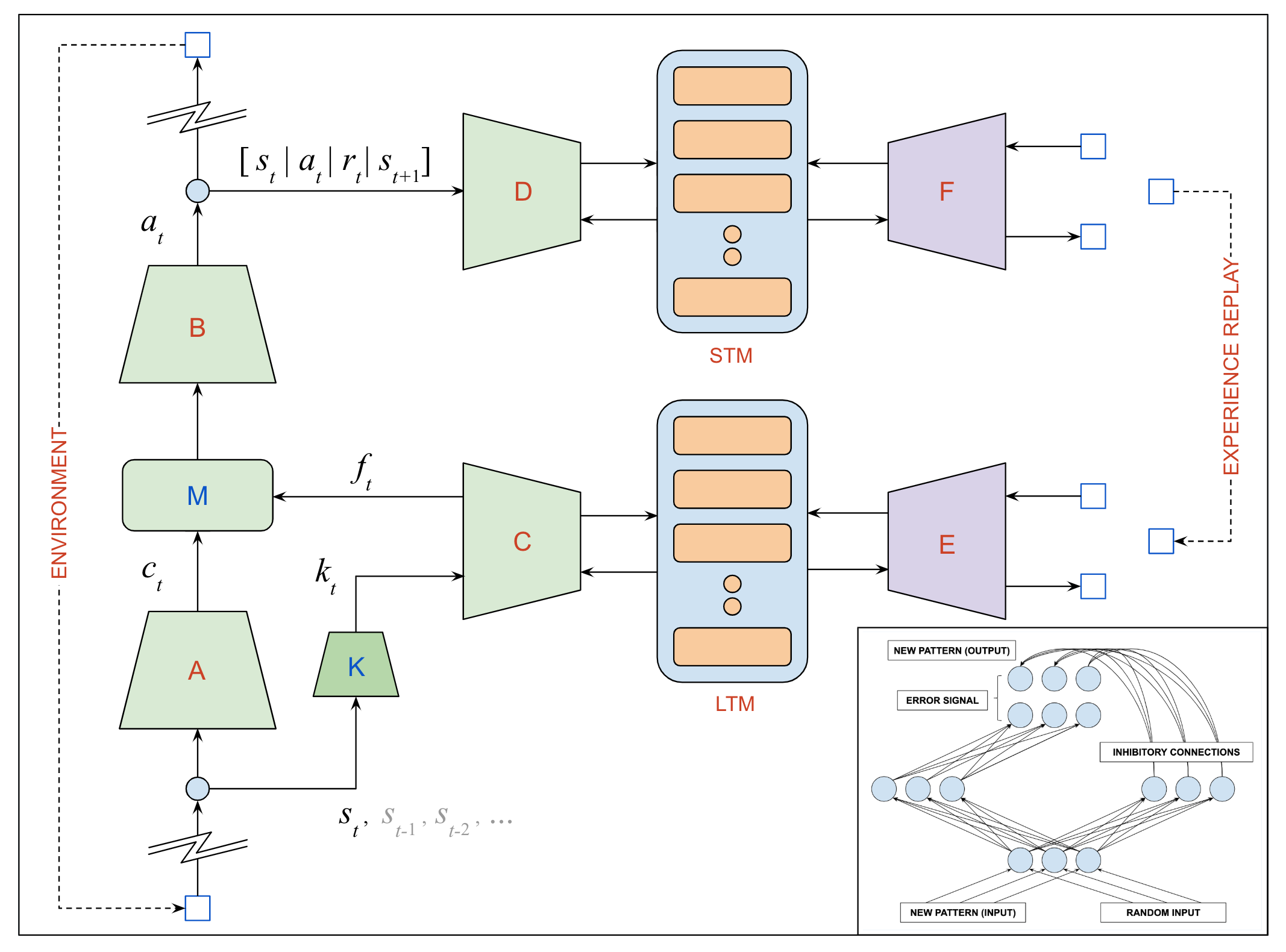

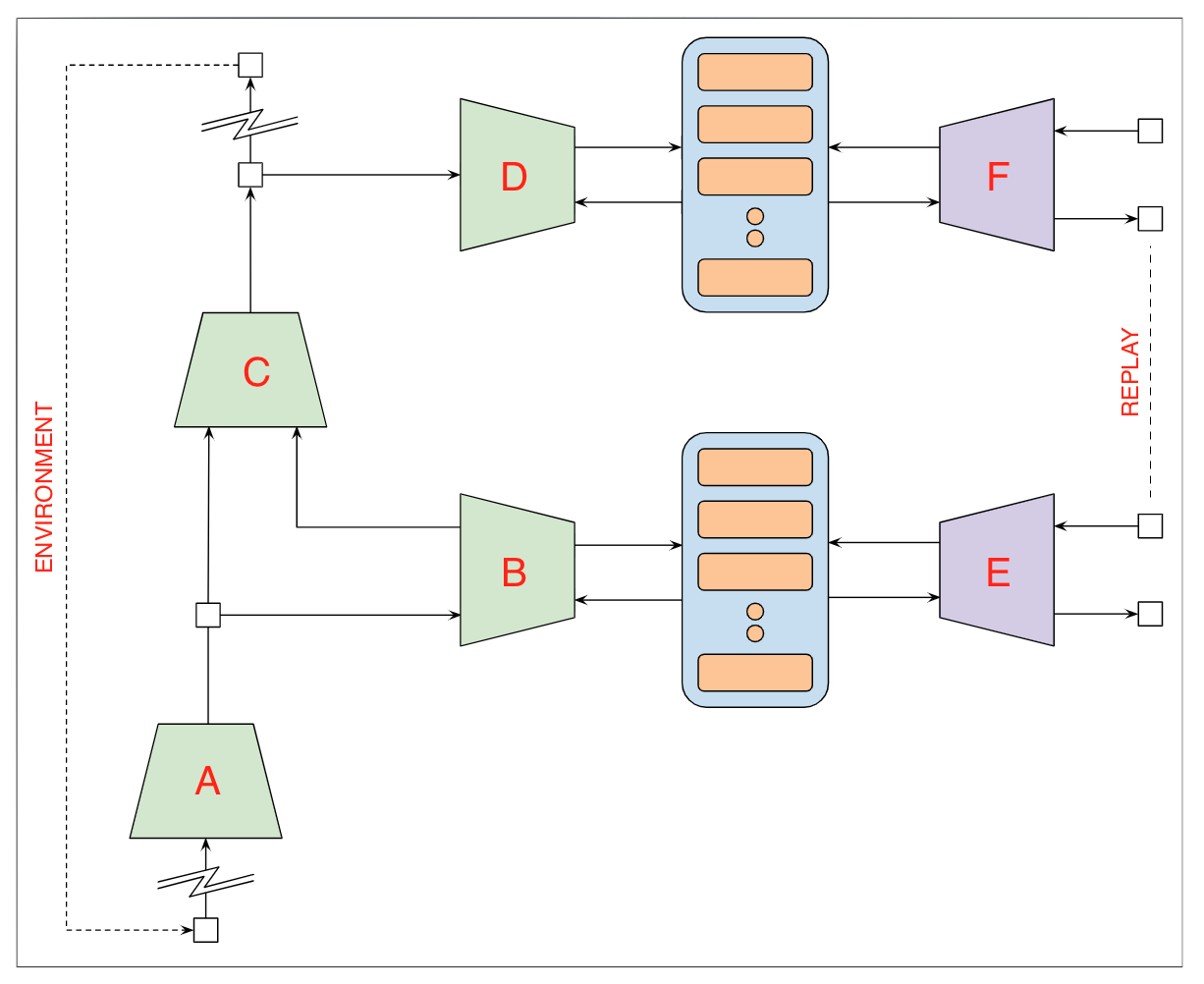

In class this year we spent several lectures discussing the basal ganglia as a reinforcement learning machine and the PBWM (prefrontal, basal ganglia and working memory) model described in O'Reilly et al [386] as the basis for a simple form of variable binding that enables rule-based inference.

In particular, we investigated how the components that comprise the PBWM might emulate a simple Turing complete automaton called a register machine. In the following, we sketch a somewhat more detailed version of the register machine that takes its inspiration from PBWM along with a simplified cognitive architecture and simple task to demonstrate its capabilities.
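For readers who have not met register machines before, the following is a minimal sketch of a textbook counter-style register machine with only two instruction types, increment and decrement-or-branch. It is not the PBWM-inspired machine sketched in these notes; the instruction encoding, the function name `run` and the example program `ADD` are assumptions made purely for illustration.

```python
def run(program, registers, max_steps=10_000):
    """Run a counter-style register machine and return the final registers.

    Instructions (hypothetical encoding chosen for this sketch):
      ("inc", r, nxt)                 increment register r, jump to nxt
      ("dec", r, nxt_nonzero, nxt_zero)
                                      if r > 0, decrement and jump to nxt_nonzero,
                                      otherwise jump to nxt_zero
      ("halt",)                       stop
    """
    regs = dict(registers)
    pc = 0  # program counter
    for _ in range(max_steps):
        op = program[pc]
        if op[0] == "halt":
            return regs
        if op[0] == "inc":
            _, r, nxt = op
            regs[r] += 1
            pc = nxt
        elif op[0] == "dec":
            _, r, nxt_nonzero, nxt_zero = op
            if regs[r] > 0:
                regs[r] -= 1
                pc = nxt_nonzero
            else:
                pc = nxt_zero
        else:
            raise ValueError(f"unknown instruction: {op!r}")
    raise RuntimeError("step limit exceeded")


# Example program: add the contents of register b into register a.
ADD = [
    ("dec", "b", 1, 2),  # 0: if b > 0, decrement b and go to 1; else go to 2
    ("inc", "a", 0),     # 1: increment a and loop back to 0
    ("halt",),           # 2: done
]

result = run(ADD, {"a": 3, "b": 4})
print(result)  # {'a': 7, 'b': 0}
```

The point of the sketch is how little machinery is needed: a handful of registers, a program counter, and conditional branching on register contents. The analogy we have in mind is that gated stripes play the role of the registers and basal ganglia gating plays the role of the branch.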

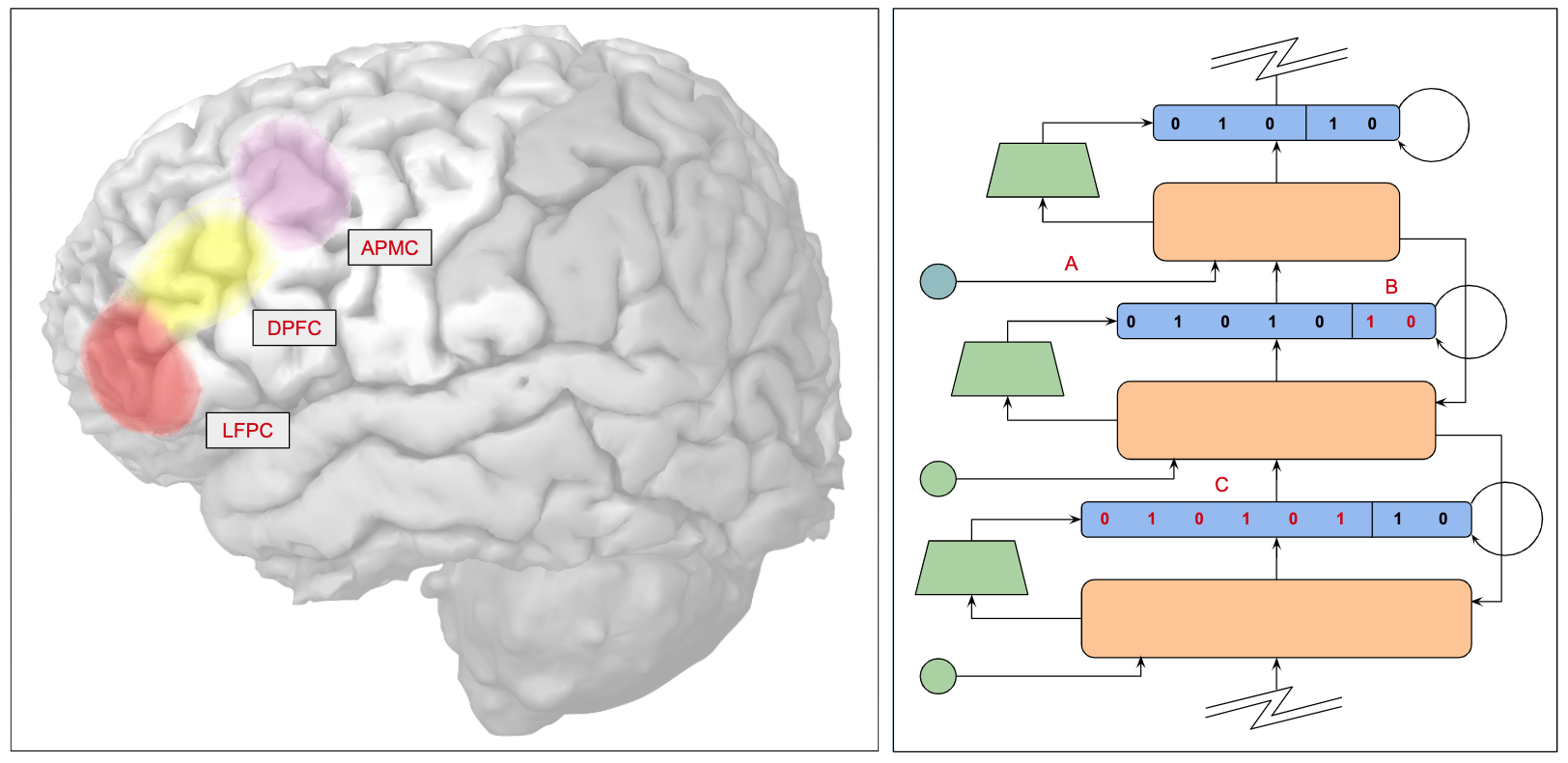

One important feature of the prefrontal cortex is that it supports hierarchical representations for planning and decision making. We have discussed the relevant biology and related technologies at some length in these notes; there are over 160 mentions of "hierarchy" in this year's notes alone. Here we argue that, in the case of the architectures we are contemplating, hierarchical structure will naturally arise as a consequence of learning and development.

For example, in conventional programming there exists a natural hierarchy induced by the procedure call stack and the function type signature by way of the corresponding hierarchy of types. Combinatorial complexity and compositionality induce natural procedural hierarchies, e.g., writing a program involves writing loops and conditionals, which, in turn, involves writing conditionals that involve writing Boolean expressions, etc.

Contrary to the standard top-down engineering model for software development, learning how to write programs generally starts with simple procedures that operate on simple types, e.g., you start off implementing list, string and sequence functions, before realizing that strings and sequences are subtypes of vectors and lists are subtypes of sequences, etc. In order to induce such natural hierarchical structure, we may have to recapitulate some features of human cognitive development.

Specifically, it may be possible to bootstrap writing and executing cognitive programs by using a developmental curriculum that serves to learn and then build on a low-level microarchitecture of the sort alluded to earlier. In a strategy similar to that described by Josh Merel in his discussion in class2, the process of bootstrapping might start by learning to write simple subroutines that are constructed from even simpler components including basic arithmetic, relational and logical operators – see Figure 1.

Figure 1: The above graphic demonstrates some of the differences between motor control tasks and the architecture used to learn and deploy them as described in Merel et al [352] and the sort of cognitive tasks and corresponding architectures discussed here. The two panels A and B represent traces illustrating the execution of a task by a fully-trained instance of each architecture. Panel A represents a motor program as a policy that is deployed by stringing together motor primitives in the process of executing a primitive, sensing the environment, estimating the relevant state variables, and then iterating. This execution cycle is akin to traditional control-theory models that consist of modules that observe – perform state estimation, plan – learn a policy, and execute – carry out primitive actions. Panel B represents a cognitive program as a collection of subroutines that can call one another by passing information from the caller to the called subroutine, and decide what subroutine to call next by using their own internal logic contingent on local state information passed by a caller or global state originating from sensory data, other subroutines by way of shared working memory or directly from the prefrontal cortex in its role as an operating system of sorts. B may seem more complicated than A given the relative autonomy of subroutines and the multiple ways in which subroutines can influence and be influenced by one another; however, the steps of selecting one motor primitive and carrying out the next are intricately coupled with one another through the environment serving as a high-bandwidth two-way communication channel between the two.

Building a system that can write simple subroutines is challenging enough, but the hardest part may be in learning how to coordinate the running of multiple simple subroutines to produce complex behavior. Note that in the case of the motor primitives described in Merel et al [353], separate subroutines do not have to pass information back and forth and so you simply string subroutines together and execute them sequentially with interleaved real-time conditional branching to adapt to unanticipated contingencies.

The problem arises when subroutines have inputs and outputs that require unpredictable / on-the-fly routing of information to support integral branching, recurrence and interrupt handling — the sort of problem that the microarchitecture in combination with the compiler, assembler and operating system are designed to facilitate. Conventional computing hardware supports virtually unlimited, easily repurposed, restructured and conveniently accessed working memory, making it relatively simple for subroutines to share information. In particular, programs and data can move about independently to accommodate varying demands.

In biological systems, information and computation are collocated: neural algorithms are said to be executed in-place, requiring no auxiliary storage and relying on fixed pathways – the data changes but its sources, sinks and format are fixed. In order to accommodate these limitations, neural circuits in frontal cortex responsible for generating and coordinating behavior are directly connected to the sources of sensorimotor information that they require to perform the necessary computations3.

The thalamus is divided into approximately 60 nuclei each of which provides a unique pathway for relaying specific sensorimotor information to cortical and subcortical locations, primarily in the cerebral cortex. Motor pathways, limbic pathways, and sensory pathways besides olfaction all pass through this central structure. The modular organization of the thalamus and its alignment with structures in the striatum and cerebral cortex explain the pairing of information and processing in frontal cortex, as well as the consequence that some general-purpose data processing functions will necessarily require duplication.

Implementing and Testing the Model

%%% Mon Jun 29 15:12:53 PDT 2020

The following assumes familiarity with PBWM. If you're not familiar with this model, check out the resources available here. If you are somewhat familiar, but want to learn more, you might want to check out the transcript of the discussion we had with Randy back in January during which we talked about PBWM and our interest in adapting the model for the programmer's apprentice application, and the followup (exchange) with Randy and Michael Frank in June in which, among other topics, we discussed more recent developments concerning the sharing of responsibility for action selection and executive control between basal ganglia and prefrontal cortex4.

The SIR (Store, Ignore, Recall) task described in O'Reilly et al [219] provides a simple test of competence in selectively attending to and maintaining items in working memory while performing a task that requires finding patterns in sequences of alphanumeric characters, e.g., identify all instances of the three-character target strings 1AX and 2BY in the following test string "195H31AQ2BY472U22B1AXJR97"5.

As an exercise to familiarize yourself with the SIR task, compare and contrast the following problems assuming that you are interacting with a small child with a short attention span — a three-year-old will have trouble remembering the two target strings and have to be reminded whereas the five-year-old may still find the task challenging but derive benefit from assigning each of the target strings a memorable retrieval cue of their choice, e.g., "one axe" and "two buzz"6:

describe the SIR task to a three-year-old child in enough detail that they can easily perform the task;

explain to a five-year-old how a program that solves the SIR task works so they can perform the task.

The goal is to perform an efficient search and so concatenating the results from two searches, one for 1AX and another for 2BY, is not acceptable. To be explicit, you might describe the problem as follows: traverse the sequence one character at a time in the order given and output 1 if the current character is the last character in an occurrence of either 1AX or 2BY, and 0 otherwise.
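As a reference point for checking learned models, the explicit description above can be written as a conventional single-pass program. This is a minimal hand-coded sketch and not a neural solution; the function name `sir_outputs` and the sliding-window approach are our own choices, and the code assumes all target strings have the same length.

```python
from collections import deque


def sir_outputs(sequence, targets=("1AX", "2BY")):
    """Return one 0/1 output per input character in a single left-to-right pass.

    The output is 1 when the current character completes an occurrence of any
    target string, and 0 otherwise. Assumes all targets share one length.
    """
    width = len(targets[0])
    if any(len(t) != width for t in targets):
        raise ValueError("this sketch assumes equal-length targets")
    target_set = set(targets)
    window = deque(maxlen=width)  # the most recent `width` characters
    outputs = []
    for ch in sequence:
        window.append(ch)
        outputs.append(1 if "".join(window) in target_set else 0)
    return outputs


test_string = "195H31AQ2BY472U22B1AXJR97"
outputs = sir_outputs(test_string)
hits = [pos for pos, out in enumerate(outputs) if out == 1]
print(hits)  # [10, 20]
```

The two hits correspond to the Y that completes 2BY and the X that completes 1AX (zero-based positions). Note that the window of three characters is exactly the working-memory load the task imposes; the interesting question is how a network learns to maintain it.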

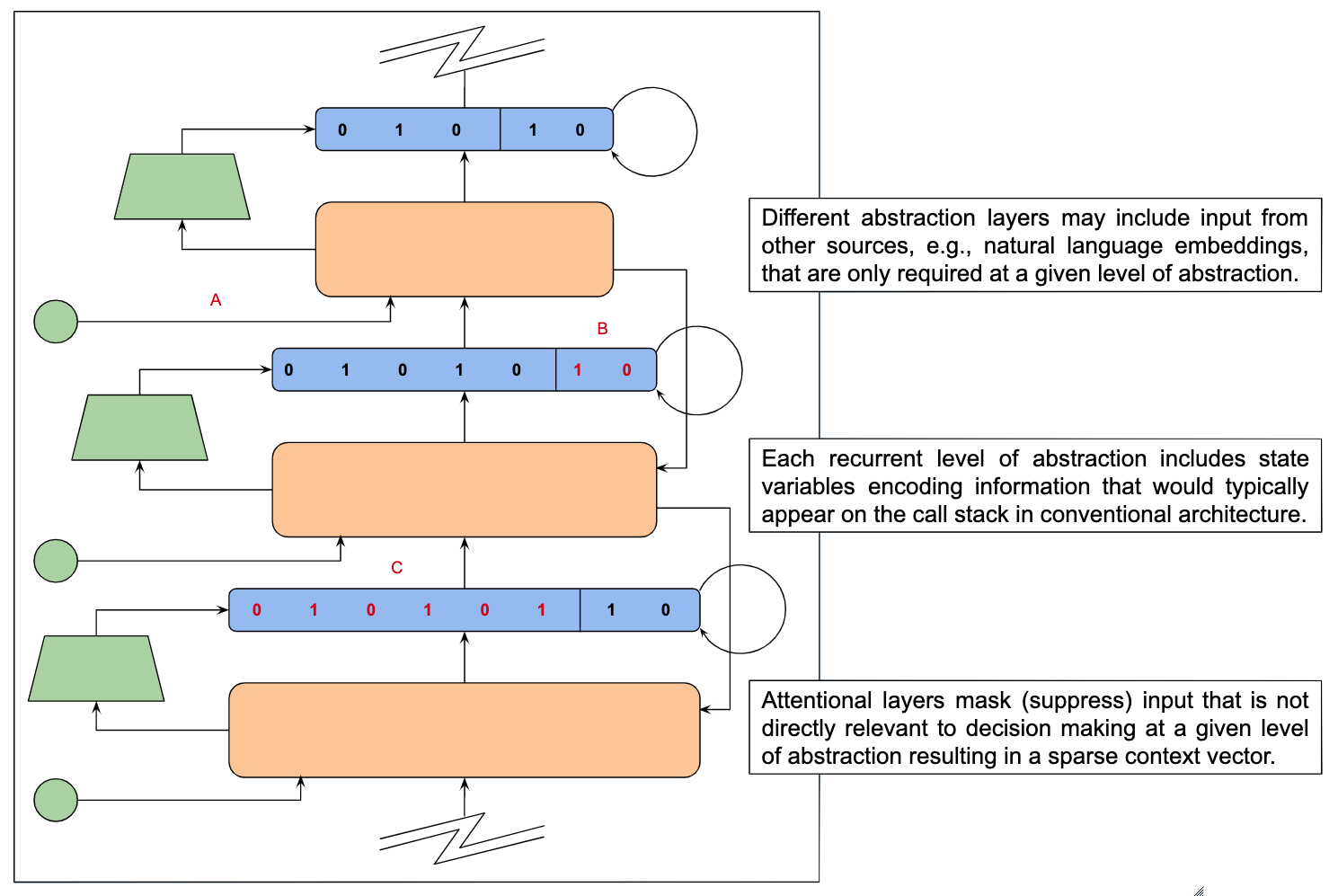

Figure 2: The proposed architecture employs a biologically less plausible variant of PBWM and a simple model of frontal cortex function separating executive control logic and procedural abstraction to solve the SIR (store, ignore, recall) problem as described in O'Reilly et al [219]. This version of the SIR problem assumes that the specific challenge corresponding to the two patterns can change at any point in time. Each successful (true positive) identification of either pattern yields a reward of 1, each false positive yields -1 and any other outcome 0. The objective is to maximize expected reward. The network mapping the contents of the basal (ganglia) source registers (BSR) to the frontal (cortex) target registers (FTR) is a special case of the model shown here. Each of the networks in the subroutine library is a simple gated recurrent unit [32] – three are shown here, but only two are required to solve the SIR problem. The executive control network is a multicell LSTM, and working memory corresponds to the gated memory registers in the BSR and the executive control and subroutine library. The complete architecture is rendered here to highlight the functional characteristics of the (correctly) learned model and can be implemented as a single multicell LSTM.
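The reward scheme described in the caption of Figure 2 can be sketched in a few lines. The function names `step_reward` and `episode_reward` are hypothetical, and the sketch assumes binary per-character predictions and ground-truth labels of the kind described earlier for the SIR task.

```python
def step_reward(predicted, actual):
    """Reward for one character: +1 true positive, -1 false positive, else 0."""
    if predicted == 1 and actual == 1:
        return 1
    if predicted == 1 and actual == 0:
        return -1
    return 0  # misses and true negatives earn nothing


def episode_reward(predictions, truth):
    """Total reward over a sequence of per-character predictions."""
    if len(predictions) != len(truth):
        raise ValueError("predictions and truth must have the same length")
    return sum(step_reward(p, a) for p, a in zip(predictions, truth))


truth = [0, 0, 1, 0, 0, 1]
predictions = [0, 1, 1, 0, 0, 0]  # one false positive, one hit, one miss
print(episode_reward(predictions, truth))  # 0
```

Because a miss costs nothing while a false positive costs 1, an agent that never responds earns 0; any learned policy has to beat that baseline by responding only when it is reasonably sure a pattern has been completed.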

Figure 2 depicts the basic model and the caption describes a simple version of the architecture that should suffice for solving SIR problems. The following pseudocode describes one possible solution that might be learned. In the listing, the token PFC corresponds to the executive controller, and SR1, SR2 and SR3 correspond to the three subroutines:

    # SR1 determines whether to match the first, second or neither pattern:
    if SR1 is running and its input matches the first character in the ith pattern then output 1 or 2 otherwise 0;
    # SR2 and SR3 determine whether the input matches the nth character in the ith pattern:
    if SR2 is running and its input matches the second character in the ith pattern then output 1 otherwise 0;
    if SR3 is running and its input matches the third character in the ith pattern then output 1 otherwise 0;
    # The PFC unit controls the run status (0,1) of subroutines:
    if SR3 is running and its output is not 0 then output i and set the run status of SR3 to 0;
    if SR2 is running and its output is not 0 then set the run status of SR3 to 1 and the run status of SR2 to 0;
    if SR1 is running and its output is not 0 then set the run status of SR2 to 1 and the run status of SR1 to 0;
    # if the target patterns or input sequence are updated then reset:
    reinitialize the run status of SR1, SR2 and SR3 to 0;
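The pseudocode leaves a few details open, so here is one way it might be rendered as a runnable program. This is a hand-coded sketch of the target behavior, not a trained network: the subroutines are ordinary functions standing in for the gated recurrent units of Figure 2. Two assumptions go beyond the pseudocode and are marked in the comments: SR1 is the subroutine that runs whenever nothing else is running, and a mismatch in SR2 or SR3 hands the same character back to SR1.

```python
PATTERNS = {1: "1AX", 2: "2BY"}  # pattern index i -> target string


def sr1(ch):
    """SR1: return the index i of the pattern that starts with ch, else 0."""
    for i, pattern in PATTERNS.items():
        if ch == pattern[0]:
            return i
    return 0


def sr_nth(ch, i, n):
    """SR2 (n=1) and SR3 (n=2): 1 if ch matches character n of pattern i."""
    return 1 if ch == PATTERNS[i][n] else 0


def pfc_run(sequence):
    """Executive control loop: emit i when pattern i completes, else 0."""
    running = "SR1"  # assumption: SR1 runs by default
    i = 0            # pattern selected by SR1, held in working memory
    outputs = []
    for ch in sequence:
        out = 0
        if running == "SR2" and sr_nth(ch, i, 1):
            running = "SR3"           # second character matched
        elif running == "SR3" and sr_nth(ch, i, 2):
            out, running = i, "SR1"   # third character matched: report i
        else:
            # Either SR1 was running or SR2/SR3 saw a mismatch. Assumption:
            # in both cases SR1 examines the current character.
            i = sr1(ch)
            running = "SR2" if i else "SR1"
        outputs.append(out)
    return outputs


trace = pfc_run("195H31AQ2BY472U22B1AXJR97")
print([(pos, out) for pos, out in enumerate(trace) if out])  # [(10, 2), (20, 1)]
```

Handing a mismatched character back to SR1 matters for inputs like "22B" or "B1A" in the test string, where the character that breaks one candidate match starts another. This works here because the first characters of the two targets never appear in later positions; a more general pattern set would need more careful bookkeeping.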

In the PBWM model, the basal ganglia is responsible for identifying contexts represented as patterns of activity in posterior cortex that are associated with rewards. In the simple model we consider here, the basal ganglia functions are subsumed in the prefrontal cortex, and so the PFC ends up being responsible for both action selection and executive control. Having recognized a potentially rewarding context, the PFC has to select a suitable plan and set it in motion.

During training, the weights for both the PFC and the subroutines responsible for carrying out tasks are tuned by gradient descent or an alternative learning rule. By way of providing a microarchitecture to facilitate compilation, it seems reasonable to hardwire the basic machinery necessary to handle those aspects of PFC oversight common to all PFC operations and train only those weights responsible for novel uses of that machinery in the same way DNC controllers manage reads and writes7.

Assuming such a plan exists, the PFC signals a subroutine, SR1, by setting a register in PFC working memory that SR1 will notice and then activate itself. SR1 is responsible for checking whether the register containing the current character in the input sequence contains a 1, a 2 or neither, and on the basis of the result setting a flag visible to the PFC indicating failure to identify the beginning of a rewarding pattern (0), the initial character of a 1AX sequence (1) or the initial character of a 2BY sequence (2). This flag will serve to activate one of SR2 or SR3 if appropriate.

There are a lot of alternative implementations one might come up with; the challenge is to design a neural network that is easily trained to carry out the necessary steps. The above descriptions rely on logical and relational operators, conditionals and other operations familiar to programmers but challenging to learn from examples. It might help to speed learning by using configurable hybrid neural networks with both fixed and programmable (trained) weights analogous to field-programmable gate array (FPGA) devices8.

While the intent here is not to focus on possible solutions so much as to motivate the problem and highlight some of the challenges, here are some suggestions you might find useful: O'Reilly and Frank [384] is directly relevant and worth reading carefully. In particular, the appendix provides detail about the experiments conducted and the models tested including an LSTM model. The Ott and Nieder [391] review article is an excellent complement to the PBWM materials suggested at the beginning of this subsection. In previous classes, we investigated other approaches to modeling PFC function and subroutine libraries using variations on differentiable neural computer key-value memory [1], [2], differentiable structured programs [122] [3], neural program emulation [4] and neural coding using a variant of imagination based planning [213, 395, 524] [5].

Missing from the picture is the sort of learning one might observe in an infant that does lend itself to neural network solutions, such as the way that a newborn learns to recognize its mother within hours of birth, differentiate between its parents and siblings when its vision develops further and soon recognize and track the family dog as it runs around the house. One could characterize such activities in terms of simple programs, but the point is that some problems are best solved with logic and unambiguous rules whereas others require the flexibility of smoothly varying differentiable functions9. Along similar lines, the sort of context-based template matching that manifests in language production and the effective use of analogy in transferring knowledge from one domain to another is another area where humans excel and machines lag behind10.

Learning rules of the sort exemplified in solving problems like the SIR task are likewise well suited to learning to write simple computer programs, whereas riding a bicycle or learning to walk upright yield to relatively simple reinforcement learning solutions. Interestingly, learning language may turn out to be more like learning to walk despite the fact that, as in the case of writing programs, language learning involves manipulating discrete symbols. Why is it that learning to write relatively simple programs is for most people notoriously difficult, whereas the planning and logic involved in preparing dinner seem so simple that a child can learn them, and yet seem on the face of it every bit as complicated as performing multi-digit subtraction or computing the nth number in the Fibonacci series?

And yet writing a short computer program is truly difficult for most humans, requiring almost superhuman concentration and cognitive control. Perhaps we should build intelligent machines that have an innate faculty for such single-minded concentration that they can turn on and off at will and that enables them to perform feats that are virtually impossible for someone with the sort of otherwise quite useful cognitive flexibility and fluid intelligence that most humans possess and take for granted in their diverse activities and social interactions – toggle back and forth between "Mister Spock" and "Captain Kirk" mode. I'm being facetious, but it's a question worth spending a few minutes thinking about.

Miscellaneous Loose Ends: In responding to Gene's inquiry about books on partially observable Markov decision processes, I asked around and a friend of mine at Google recommended the course on POMDPs that Pieter Abbeel and John Schulman teach at Berkeley. I checked out the syllabus and slides and this looks like a well-designed course complete with a useful collection of resources.

Here are the references for a few of the papers that I consulted in writing this entry. The BibTeX records including abstracts are in the footnote at the end of this sentence:11

Anthony Strock, Xavier Hinaut, Nicolas Rougier. A Robust Model of Gated Working Memory. Neural Computation, Massachusetts Institute of Technology Press (MIT Press), 2020, pp.151-181. [472]

Hayworth, Kenneth and Marblestone, Adam. How thalamic relays might orchestrate supervised deep training and symbolic computation in the brain. bioRxiv. 2018. [218]

Meropi Topalidou, Daisuke Kase, Thomas Boraud, Nicolas Rougier. A Computational Model of Dual Competition between the Basal Ganglia and the Cortex. eNeuro, Society for Neuroscience, 2018. [488]

Nicolas P. Rougier, David C. Noelle, Todd S. Braver, John D. Cohen, Randall C. O'Reilly. Prefrontal Cortex and Flexible Cognitive Control: Rules Without Symbols. Proceedings of the National Academy of Sciences, National Academy of Sciences, 2005, 102 (20), pp.7338-7343. [429]

Nicolas P. Rougier, Randall C. O'Reilly. A Gated Prefrontal Cortex Model of Dynamic Task Switching. Cognitive Science, Wiley, 2002. [430]

O'Reilly, Randall and Frank, Michael. Making Working Memory Work: A Computational Model of Learning in the Prefrontal Cortex and Basal Ganglia. Neural Computation 2006 pp.283-328. [384]

Touretzky, David and Hinton, Geoffrey. Symbols among the neurons: details of a connectionist inference architecture, Proceedings of the 9th International Joint Conference on Artificial Intelligence, 1985, pp. 238-248. [489]

May 29, 2020

%%% Sun May 29 06:34:04 PDT 2020

I've recommended to several of you that you consult Randy O'Reilly's Computational Cognitive Neuroscience to learn more about a number of topics, in particular, those having to do with the interplay between the basal ganglia, prefrontal cortex, motor cortex and areas of the posterior cortex that are important for executive control. In previous discussions I've suggested a few keywords you might use to search in the book PDF for relevant information, but I realize that while it is a relatively short textbook, it is also exceedingly dense with information on many different levels of abstraction and diverse mechanisms and functions.

In case you are still looking for insight concerning executive control and how it relates to various sensory, motor and higher-level cognitive function, I marked up a copy of the book PDF by highlighting excerpts relevant to executive control using a green highlight to indicate important concepts, yellow to indicate important technical takeaways and blue to indicate figures and other resources that are worth a careful look. I've attached the marked up copy, and I've included below a scenario that illustrates some of the key use cases exercising aspects of human executive control.

If you read much of the cognitive science literature relating to executive control, you are apt to find papers on topics such as task switching, attention sets, cognitive shifting, consciously or unconsciously redirecting one's attention from one fixation to another, conflict monitoring, active maintenance of patterns of activity and short-term memory, selective attention versus flexible attention, and cognitive versus motor control, just to mention a few of the more frequent mentions. If your interest is in developing human-inspired cognitive architectures, then I suggest you start with the attached, marked up copy of Randy's text.

The scenario below is "aspirational" in the sense that it illustrates a relatively simple example of everyday life in which cognitive challenges are hidden in the midst of activities that, for most of us, are decidedly not challenging, since we seem to cope with them effortlessly on a daily basis. Cognitive scientists have developed experimental protocols that employ exaggerated variants of such commonplace challenges in order to determine the limitations of our cognitive apparatus.

These protocols include the Stroop task developed by John Ridley Stroop, the A-not-B task developed by Jean Piaget, the n-back task developed by Wayne Kirchner, and the SIR (Store, Ignore, Recall) task described in O'Reilly et al [219] designed to test the ability to rapidly update and robustly maintain information in working memory using the dynamic gating of PFC representations by the basal ganglia in the PBWM model — where numbers in parentheses correspond to page numbers in [386]. The tasks mentioned above are often used to test implementations of cognitive theories as in the case of PBWM.

In Hazy et al [219] (HTML), the authors describe how their implementation of PBWM written in Leabra handles both the Stroop and n-back tasks. As part of your final project, you might think about how the model or architecture you are developing would handle one of these tasks. Alternatively, you might note that your model falls short on a particular task and discuss how it might be modified or extended to handle the task. Since in most cases you'll be describing and not implementing a model, you could perform a gedanken experiment in which you explain how your model might handle some of the challenges described in the aspirational scenario below.

P.S. Here is the promised (aspirational) scenario illustrating different aspects of human executive control:

It's Saturday morning around 8 AM; you are finishing up the dishes after breakfast and thinking about your day ahead. You've committed to making the main dish for dinner tonight, and have decided that you will make up a batch of your favorite veggie burgers. As you clean the counter tops and put the last of the teacups in the dish strainer, you take inventory of the ingredients you'll need for the burgers to determine whether or not you have all the ingredients on hand or will have to make a trip to the grocery store – it appears that you have everything you need. The recipe calls for brown rice and you decide that you will prepare the rice in advance, and leave the rest of the preparations for later since you have all the ingredients on hand and making the burgers will require little effort aside from dicing some vegetables and using the blender to combine the beans, garlic and lemon juice. You place a saucepan on the stove top, add 2 cups of water to 1 cup of brown rice and turn the burner on high while you measure out the other ingredients. As soon as the water starts to boil you turn down the heat to low, cover the saucepan and set a timer to ring in 20 minutes.

Earlier in the week you promised yourself to spend time this morning practicing some of the jazz songs you've been working on, but on a whim you decide to try something you saw on a YouTube video last night. The video featured a classical pianist who started out playing one of Bach's Brandenburg Concertos and gradually mixed in jazz improvisations that matched the cadence and temperament of the Bach piece12.

After checking for new messages on your phone, you go into the living room, sit down at the piano and begin playing Bach's No. 5 major concerto in D minor without any sheet music since you know the piece so well you could play it in your sleep. After playing a couple of measures experimenting with tempo, you settle on a leisurely pace and experiment by introducing a few improvised notes here and there. This doesn't work for you and so you decide to use the musical score for the concerto to better anticipate and visualize promising opportunities for introducing departures from the notes on the score.

You have just begun looking for the sheet music when the timer in the kitchen starts to ring. You return to the kitchen, turn off the heat and remove the lid of the saucepan to check the rice. You notice that there is some rice stuck to the bottom of the pan but there's no sign of burning and so you transfer the rice to a bowl and set it aside to cool down before putting it in the refrigerator. You put the saucepan in the sink, fill it with hot water so it will be easier to clean later on and return to the living room.

You've forgotten exactly what you were doing before the timer interrupted your playing, but there is sheet music spread out all over the piano bench and you remember your plan to use the score of the concerto to help in identifying passages to improvise. You arrange the sheet music on the piano music stand and scan the first couple of pages looking for opportunities before starting to play the piano again. You are adept at sight reading classical music as you play the piano and you can do so for a familiar piece without exerting any (cognitive) effort, but you are disappointed with your first few attempts to improvise.

You pencil in a few notes on the score to suggest possibilities for short departures from the original score and try again. This time you manage something more interesting by using the notes you added to launch your improvisation and your knowledge of the Bach piece to make an interesting return to the original score. However, despite your facility with playing the piece you selected for your attempt at improvisation, the result sounds clumsy and contrived. You persist for another hour before becoming so dissatisfied with your performance that you decide to call it a day.

As you return the sheet music to the storage space under the piano bench, you suddenly remember how much you enjoyed adding slices of avocado to the veggie burgers the last time you made them, and so you decide to run out to the local vegetable stand to see if you can find a ripe avocado and perhaps some heirloom tomatoes to complement the burgers for dinner this evening. You grab your wallet and keys from the table in the hallway where you usually leave them and start for the garage, but then pause for a moment standing in the middle of the kitchen to take stock and make sure you aren't forgetting anything else. You think it prudent to take along your cell phone, but it's not on the table where you usually leave it and so you have to think for a moment to remember when and where you last used it.

May 27, 2020

%%% Thu May 27 04:33:32 PDT 2020

In the following, we attempt to answer two fundamental questions concerning early development: First, what are the prerequisites for grounding the physical, social and emotional intuitions necessary to ensure that an AI system can get the most out of its exposure to the spoken and written word? Second, what does early physiological development tell us about cognitive development, and what steps might we take to leverage the former to facilitate the latter in bootstrapping AI systems?

Grounding Machine Intelligence in Human Experience

Grounding human language in human experience may be critical if our goal is to leverage human knowledge in the form of books, scientific articles or other documents. This doesn't imply that we need to slavishly recapitulate the experience of a human infant but rather that we somehow create a seed grounding using a simulated environment that captures the dynamics of having a human-like, effectively-articulated and sensorily-equipped simulated body subject to (approximately) the same laws of physics that govern our bodies and provide stimulation to our senses.

The challenge for the designer is to provide enough of a foundation that many of the key social and cultural experiences that inform our understanding of human habits and institutions can be acquired through reading—or watching videos on YouTube. Clearly an AI system will have to acquire much of its emotional and social experience indirectly through its exposure to human beings, until such time as we have the need to build AI systems with physical sensations and emotional feelings.

Note that the analogies that permeate the human use of language are not always drawn from the same domain of discourse. Humans routinely use mechanical analogies to explain social behaviors and emotional experiences, and they leverage stories and fairy tales about human behavior to explain basic physical concepts. We easily, and without realizing it, mix metaphors and combine analogies to tell stories that explain everything from cooking omelets to quantum mechanics.

The above argument would seem to require that AI systems will need to engage with humans and feel human-like emotions to fully realize the advantages of language. It may be, however, that it is possible to bootstrap such a facility by building on top of a foundation based on understanding the physical world of human beings gleaned from an agent's experience in a realistic simulator. The result may be more akin to the emotional understanding of someone with severe Asperger syndrome, lacking some aspects of emotional intelligence but able to compensate using its mechanical intuitions to predict human behavior.

Leveraging Biological Development for Machine Cognition

After listening to the Spelke OCW lectures, I searched for papers that include the phrases: "core knowledge", "naive physics", "physical intuition" and "mental simulation" in different combinations and found dozens of relevant documents, but settled on just a few with a bias for work by Jason Fischer, whose summary of relevant research and choice of coauthors appealed to me. What follows is a summary of the key ideas that I gleaned from my reading, liberally punctuated with verbatim quotes from the papers that I found to be most informative:

Fischer [165], in reviewing the experiments on newborns in their first three or four months performing relatively sophisticated tasks that involve "early-emerging physical intuitions", notes that Liz Spelke and her colleagues argue from these studies that "children are born with an innate knowledge of basic principles governing object motion and that this knowledge provides the mental scaffolding for learning more sophisticated physical concepts over the course of development." Fischer goes on to ask "Just how accurate are our physical intuitions? Do we carry out mental simulations of physical dynamics, or do we rely upon heuristics that are effective in many scenarios but could break down in others? What brain machinery supports naïve physics?"

Fischer introduces additional detail regarding the experiments with newborns in the process of critiquing several theories about how infants might go about performing these tasks, including the possibility that infants learn rules by way of explanation having observed contradictory outcomes that can't be predicted by the infant's current knowledge. Fischer spends some time describing a competing proposal that involves the existence of a mental model that makes it possible to simulate perceived physical processes using a biological version of a video-game physics engine (albeit one with substantially less detail) that can be run forward and backward in order to validate or undermine the infant's physical intuitions.

Mitko and Fischer [358] examine the degree to which intuitive physics can be accounted for by our spatial cognition and conclude that their findings point toward the two faculties being separable. Binz and Endres [58] compare synthetic models of perception and find agreement between the performance of a family of optimized models and the pattern of development observed in infants and children on three classic cognitive tasks [44], indicating common principles for the acquisition of knowledge—admittedly at a considerable difference in the amount of training data required. Fischer et al [166] examine the functional neuroanatomy of physical inference and observe a close relationship between the cognitive and neural mechanisms involved in parsing the physical content of a scene and preparing an appropriate action.

Pursuant to investigating the proposal that humans rely upon a mental simulation engine akin to the "physics engines" used in many video games, Fischer et al [166] identify a common brain network in frontal and parietal cortex that appears to be active "in a variety of physical inference tasks as well as simply viewing physically rich scenes". The investigators used 40 Amazon Mechanical Turk workers to classify short videos as to their relevance to physical inference and then collected fMRI data on twelve subjects (ages 18–26) participating in the fMRI component [167].

Without going into any great detail, the experiments revealed a "systematic pattern of activation across all three tasks including bilateral frontal regions (dorsal premotor cortex / supplementary motor area), bilateral parietal regions (somatosensory association cortex / superior parietal lobule), and the left supramarginal gyrus." Interestingly, the authors also note that "subcortical structures and the cerebellum were also included in the parcel generation process, but no consistent group activity appeared in those areas."

The subjects in this case were all young adults and so it is not clear whether the same circuits play a role in the first few months postnatally, nor whether these circuits are even fully mature in this early window. We have already seen that infant vision is limited at birth in terms of acuity (20/400), color discrimination (shades of gray) and depth disparity (monocular). In addition there are rather dramatic changes in the organization of primary motor cortex during the first three months.

Chakrabarty and Martin [87] studied the postnatal development of the motor representation in primary motor cortex in an effort to understand when the muscles and joints of the forelimb become represented in primary motor cortex (M1) during postnatal life and how local representation patterns change13. The authors "show that the M1 motor representation is absent at day 45 and, during the subsequent month, the motor map is constructed by progressively representing more distal forelimb joints."

Tau and Peterson [480] note that "the brain grows to about 70% of its adult size by 1 year of age [...] [t]his increase in brain volume during the first year of life is greatest in the cerebellum, followed by subcortical areas and then the cerebral cortex, which increases in volume by an impressive 88% in the first year." Jernigan et al [249] provide additional insights regarding the achievement of cognitive milestones based on the development of myelinated fiber tracts.

It seems likely that the circuits in frontal and parietal cortex that Fischer et al identify as the basis for our intuitive physical reasoning either do not yet exist or are not mature enough to support the sort of learning we see in infants during the first few months postnatally. They may, however, physically develop in parallel with the earliest experiences of the infant leading to the acquisition of fundamental physical principles. Specifically, the incremental maturation of those circuits might provide the curricular scaffolding necessary to facilitate such learning by limiting sensory and motor abilities to focus the infant's attention and thereby simplify the learning process.

How could you arrange circumstances so that most of the time learning is one or zero shot and even if you get it wrong it is (relatively) easy to reverse or unlearn without collateral damage (my euphemism for catastrophic forgetting)? Of course, if you linger too long with a misconception about some fundamental physical or psychological principle, or assume that it must be true because no one has ever told you or demonstrated to you otherwise, then you may be setting yourself up for considerable grief downstream, which makes it all the more important to get it right the first time.

Babbling and Stumbling our Way to Physical Intuition

Imagine the staged maturation of the circuits that enable the infant's growing awareness of the position and movement of its body (its proprioceptive sense) in tandem with the gradual roll out of its ability to control its movement starting from the trunk and gradually extending to the extremities [87]. At each point in time, there will be an opportunity to experiment with a new motor capability, e.g., the (constrained) degrees of freedom allowed by the bones, muscles and tendons that comprise your elbow, conditioned on the controlled articulation of previously-learned perception-action pairings, e.g., your shoulder (scapula) and its associated tendons, plus the upper arm (brachium) consisting of your humerus, biceps and tendons that connect these to the radius bone at the elbow.

Imagine further that as each new sensori-motor system comes on line, the newborn executes the motor equivalent of babbling [326] to encode a collection of motor primitives in primary motor cortex. This process could be extended to multiple joint-muscle pairs enabling the newborn to explore the recursive composition of simpler commands. As the infant's visual acuity, depth perception and color vision improve, the opportunity arises for more complicated goal-driven babbling activities, creating a natural hierarchy of features in keeping with Joaquín Fuster's hierarchy [184].
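To make the staged-babbling idea concrete, here is a minimal sketch, not a model drawn from [87] or [326]. It assumes a hypothetical planar two-link arm whose link lengths, joint ranges and sample counts are purely illustrative. The "infant" first babbles with the shoulder alone, then with shoulder and elbow together, storing (command, outcome) pairs and reusing the stored command whose outcome landed nearest a target as a crude inverse model.

```python
import math
import random

# Hypothetical planar two-link arm; the link lengths are illustrative assumptions.
UPPER_ARM, FOREARM = 1.0, 0.8

def hand_position(shoulder, elbow):
    """Forward kinematics: joint angles in radians -> hand (x, y)."""
    x = UPPER_ARM * math.cos(shoulder) + FOREARM * math.cos(shoulder + elbow)
    y = UPPER_ARM * math.sin(shoulder) + FOREARM * math.sin(shoulder + elbow)
    return x, y

def babble(n_samples, free_joints, rng):
    """Issue random commands to the joints that are currently 'online'.
    Joints that have not yet matured are held at zero.
    Returns a list of (command, outcome) pairs."""
    memory = []
    for _ in range(n_samples):
        shoulder = rng.uniform(0.0, math.pi) if "shoulder" in free_joints else 0.0
        elbow = rng.uniform(0.0, math.pi / 2) if "elbow" in free_joints else 0.0
        memory.append(((shoulder, elbow), hand_position(shoulder, elbow)))
    return memory

def reach(memory, target):
    """Nearest-neighbour inverse model: reuse the remembered command
    whose outcome landed closest to the target."""
    return min(memory, key=lambda pair: math.dist(pair[1], target))[0]

rng = random.Random(0)
stage1 = babble(200, {"shoulder"}, rng)                      # proximal joint only
stage2 = stage1 + babble(2000, {"shoulder", "elbow"}, rng)   # distal joint added

target = hand_position(1.0, 0.9)  # a point that requires bending the elbow
err1 = math.dist(hand_position(*reach(stage1, target)), target)
err2 = math.dist(hand_position(*reach(stage2, target)), target)
print(f"reach error, shoulder only: {err1:.3f}; with elbow: {err2:.3f}")
```

Because the second stage keeps everything stored in the first, the reach error can only stay the same or shrink as new joints come on line, which is the sense in which earlier pairings condition later exploration. A learned forward model would replace the lookup table in anything more serious.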

I am certainly no expert, having read or skimmed only a dozen or so papers on the early development of the primate central nervous system. Nor do I pretend to understand all of what I read about the behavioral and cognitive milestones of human infants. It seems to me, however, that these milestones could be aligned with neurophysiological changes, especially within the first year, during which the human brain is undergoing growth and maturation in some of the key areas responsible for supporting the sort of behavioral trajectory that we witness in the growing toddler. Whether true or not, the very idea provides an interesting suggestion about how one might mimic the early coordinated stages of aligned cognitive and neurophysiological development.

The proposal that I have in mind would be to exploit the limitations of the evolving motor and sensory cortex to engage with the infant's restricted ambient environment to quickly learn fundamental properties of the underlying dynamical system by relying solely on its limited repertoire of sensory-motor actions. So, for example, in the first few days the newborn can only make out blurry gray shapes that are in the foreground and is unable to move its body to any appreciable degree. At this stage, the infant might simply learn to attend to the blurry shape of its mother and perhaps register her movements without actually moving any body parts other than its eyes rotating in their sockets.

The scenario of a looming blobby object would likely repeat frequently in the first couple of days, giving the infant ample time to learn how to track nearby objects by gaze alone. We would expect that the reciprocal connections linking perception to action would serve to learn how to perform such tracking automatically. Subsequent developmental stages would introduce multiple blobs, perhaps with some additional acuity so that the blobs could be distinguished and tracked independently if indeed they moved so. Two blobs in the same visual plane might knock into one another, or one might seemingly pass through the other, since without depth it might be difficult to reliably infer occlusion.

As an exercise, it might be interesting to imagine the infant's sensorimotor trajectory as a timeline and see if one can identify opportunities for incorporating into the infant's evolving physics model the Gestalt principles of figure-ground, similarity, proximity, common region, continuity, closure, focal point and common fate. Some of these it might be possible to learn in any order, whereas others would build on one another thereby requiring some sort of curricular intervention to make sure such dependencies are observed. Alternatively the principles might simply be instilled into the infant's behavioral repertoire, their implicit observance a prerequisite for their success.

At some point the infant's sensorimotor apparatus will be sophisticated enough that the baby will have greater autonomy and the option of ignoring a parent trying to teach yet another lesson. To make further progress, the growing child will have to be convinced that it is in her interest to attend to and perhaps follow the instructions of a parent or appropriate surrogate such as a grandparent or kindergarten instructor. I currently have no idea of how a child might graduate from being dependent upon parents or other adults to achieving a greater degree of autonomy along with its attendant ambiguity and the opportunity to strike out on its own. It is however an interesting challenge to contemplate.

Miscellaneous Loose Ends: Here are some exchanges with a neuroscientist who took a look at an early version of the above:

TLD: Given the early development arc described by Spelke and others, it would seem that many or most of the areas you identify in the PNAS paper are either not fully articulated or simply absent in the infant brain and yet the authors maintain that there must exist some neural circuitry at work that explains their performance on simple tasks that imply some basic physical intuition. My students and I are curious if there is any work identifying the neural substrate for that early intuitive capability in an infant during its first five months postnatally that might serve as an inductive bias for much of what follows during later development. Thanks in advance for any insights you might have or papers you can point us to.

JIF: That's a great question regarding the neural basis of those early emerging physical intuitions. Since we know that non-human animals possess many of those same intuitions beginning early in life, I can imagine the kinds of experiments that could answer your question (e.g., neural recordings done during expectation violation paradigms similar to the ones used with human infants). To my knowledge, that kind of work has not been done yet. But I can say that on the behavioral side, those expectations about the mechanical properties of objects are present very early, about as close to a demonstration of innateness as you can get. For example, these findings from baby chicks show that this kind of knowledge is present just after hatching and with next to no additional experience [497, 421].

My thinking goes that core physical knowledge sits in the earliest seeds of the regions that will develop into those that we identified in the PNAS paper as being important for intuitive physics in adults. It kickstarts the process by which those regions develop the array of properties that we see in adulthood. If we were to look for the neural basis of that early-emerging knowledge in young infants, we may find it right in the places we'd expect based on the brain imaging in adults, even before the other functional properties of those brain regions develop. That does more or less seem to be the case for other core visual domains that have been studied with brain imaging in infants [126]. I know this doesn't provide much in the way of neural substrates, especially at the level of circuitry (it's more like a wishlist for neuroscience work that I'd like to see get done!) but hopefully some food for thought anyway.

TLD: It's interesting how many recent papers are trying to make sense of early development starting at around four or five months. I now understand that five months old is a milestone neurophysiologically speaking: the entire infant brain is still undergoing substantial changes, but several key circuits have been established. I've been particularly interested in how the process unfolds during those first four weeks when the relevant substrate is very much in the process of assembly. When I wrote you earlier, I was entertaining the idea that there must be some subcortical circuitry at play to bridge the gap between the peripheral nervous system and the developing cortex. After some thought this seems obviously the case, but largely beside the point given I now think of the rapidly maturing motor and somatosensory cortex as providing "curricular affordances" to guide exploration and focus learning.

May 23, 2020

%%% Sat May 23 04:23:10 PDT 2020

Earlier this month I suggested that some of you might be interested in videos on the MIT open courseware website featuring Liz Spelke lecturing on child development. Those lectures emphasized experiments that were conducted by Spelke, her colleagues at Harvard as well as other cognitive neuroscientists with an emphasis on the protocols used to elicit behavior for the purpose of verifying hypotheses concerning specific cognitive capacities in humans and other model organisms. Those experiments were considerably constrained by the limited repertoire of behavioral responses one has to work with in studying infants and young children.

This morning we watched an invited lecture at the Allen Institute for AI in which Spelke explains the different types of cognitive systems that she refers to as core knowledge. She does so in a manner designed to address the concerns of researchers in AI and machine learning who are interested in commonsense reasoning in machines. These researchers hope that our current understanding of child development might provide insight into the kinds of concepts that AI systems could possibly learn and the various trajectories describing what children learn, in what order and at what age, with the aim of recapitulating such behavior in machine models of cognition.

Here is the abstract–with my italics added to emphasize topics that some of you have mentioned in our final project discussions–and a link to the video that was uploaded to YouTube on December 4, 2019:

Title: From Core Concepts to New Systems of Knowledge (VIDEO)

Abstract: Young children rapidly gain a basic, commonsense understanding of how the world works. Research on infants suggests that this understanding rests on a set of early emerging, domain-specific cognitive systems. Six systems of core knowledge serve to (a) represent objects and their motions, (b) animate beings and their actions, (c) social beings and their engagements, (d) places and their relations of distance and direction, (e) forms and their scale-invariant geometry, and (f) number. These systems are innate, abstract, strikingly limited, and yet present and functional throughout human life. Infants' knowledge then grows both through gradual learning processes that people share with other animals, and through a fast and flexible learning process that appears to be unique to our species and emerges with the onset of language. The latter process composes new systems of concepts productively by combining concepts from core knowledge. The compositional process is poorly understood but amenable to study through coordinated research in psychology, neuroscience and artificial intelligence. To illustrate, this talk will focus on core knowledge of places, objects, and people, and on one new system of concepts that emerges early in human development: the artifact concepts underlying our prolific tool use.

Nikhil Bhattasali, who took the course last year, recently asked me about pointers to interesting research on (a) self-organizing learning mechanisms for visual and tactile senses and (b) mechanisms for formulating goals (e.g. a baby wanting to pick up a toy) and recognizing when those goals are satisfied. Here is my answer which includes references to work by Guido Schillaci and his colleagues and a link to an open-access special issue of Frontiers in Robotics and AI which he co-edited with Verena Hafner and Bruno Lara entitled "Re-Enacting Sensorimotor Experience for Cognition" — as a further enticement the first technical entry is "Self-organized internal models architecture for coding sensory–motor schemes".

Regarding (a) the first idea that comes to my mind is hierarchies of proprioceptive and multi-modal maps of the sort that Joaquín Fuster talks about, and then using some form of predictive coding with both forward and inverse maps constituting the corresponding reciprocal connections and the top-down corrections from predictive coding employed to implement some form of efference copy / corollary discharge. The hierarchy could be built layer by layer starting with, for example, the retinotopic maps in primary visual cortex – according to Brian Wandell, there are upwards of a dozen such topographically organized and spatially-aligned representations in the primary and secondary visual cortex. It seems pretty clear that the architecture is in place in the developing primate brain to support such a highly-preserved, stereotyped collection of representations.

The higher-level uni-modal secondary and multimodal association areas may not be uniformly organized and interconnected but it would seem necessary that there be in place some system for partitioning the collection of all of these features so as to ensure that the thalamocortical radiations and the cortico-basal-ganglia-thalamo-cortical loop provide a highly-stereotyped, highly-structured interface to working memory "registers" in the motor and prefrontal cortex. Within each of these areas, both unimodal and multimodal, the corresponding topographic mapping would probably have some leeway to accommodate changes due to age, disease and structural damage, as in the case of somatosensory representations in the rat barrel cortex. There appear to be quite a few – perhaps thousands – of papers suggesting the use of hierarchies of feature-aligned, self-organizing maps as a representation.
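For readers who have not worked with self-organizing maps, the following is a minimal sketch of a single one-dimensional Kohonen map trained on scalar inputs, the kind of unit one might stack into such a hierarchy. The map size, learning-rate schedule and neighbourhood schedule are illustrative assumptions, and nothing here is taken from the papers mentioned above.

```python
import math
import random

def train_som(samples, n_units=10, epochs=30, seed=0):
    """Train a 1-D Kohonen self-organizing map on scalar inputs in [0, 1]."""
    rng = random.Random(seed)
    weights = [rng.random() for _ in range(n_units)]
    total_steps = epochs * len(samples)
    step = 0
    for _ in range(epochs):
        for x in samples:
            frac = step / total_steps
            lr = 0.5 * (1.0 - frac) + 0.01                  # learning rate decays
            radius = max(n_units / 2 * (1.0 - frac), 0.5)   # neighbourhood shrinks
            # Best-matching unit: the unit whose weight is closest to the input.
            bmu = min(range(n_units), key=lambda i: abs(weights[i] - x))
            for i in range(n_units):
                # Units near the winner on the map are pulled along with it.
                h = math.exp(-((i - bmu) ** 2) / (2 * radius ** 2))
                weights[i] += lr * h * (x - weights[i])
            step += 1
    return weights

rng = random.Random(1)
samples = [rng.random() for _ in range(200)]
weights = train_som(samples)
print([round(w, 2) for w in weights])
```

The neighbourhood term is what tends to produce a topographic ordering: adjacent units end up responding to adjacent input values. In a hierarchy, the index of the winning unit at one level would serve as an input feature for the map at the next level.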

As for more concrete proposals implemented in working robots or simulated robot environments, Guido Schillaci, Verena Hafner and Bruno Lara co-edited an interesting collection of papers on the related topic of "Re-Enacting Sensorimotor Experience for Cognition" from which I've only sampled a few papers, but the collection is open-access and you can download the PDF if you are curious. In addition, I suggest that you take a look at Guido's papers many of which are available on his website here.

Miscellaneous Loose Ends: If you viewed Liz Spelke's OCW lecture, you might be curious about what exactly a newborn sees during the first few months; here are three tools designed to help new parents notice possible problems with their new baby's vision: WebMD newborn-vision resources, How Babies See the World and The Baby Sight Tool.

May 19, 2020

%%% Tues May 19 04:17:37 PDT 2020

Here is some of the background material I've been gathering as it seemed potentially relevant for a couple of final projects. The first three sections are relatively straightforward and could turn out to be useful for several projects. The last section is more theoretical and a bit dense. You may find it interesting if the title "On the Emergence of Sensory Motor Hierarchies" intrigues you, but it is primarily relevant to better understanding the perception-action cycle and developing an information theoretic account of how sensory motor hierarchies might naturally arise out of the interaction between the environment and an agent's acting on its own behalf and (intrinsically) recognizing the value of information.

Behavioral Biases: Environmental Triggers

Formally, if you want to induce a behavior–as opposed to hard wiring the behavior and the rules for deploying it into the agent's programming–you need to introduce an instinct or bias in the agent's cognitive architecture to facilitate the desired behavior such that once initiated the induced behavior will emerge naturally from interaction with the environment. Alternatively, you can choose an environment with features–generally referred to as affordances–such that when the agent encounters them in the wild these features automatically initiate appropriate behavior conditioned on the observable characteristics of the specific affordance [194].

Sometimes it makes sense to do both, as generally happens in natural environments. For example, many species of birds have distinctive superficial features that, when seen by a hatchling / baby chick, will induce "imprinting" so that thereafter the chick will behave toward, e.g., stay near and follow around, the first adult bird it encounters as its mother. As another example, an abhorrence of and immediate retreat from snake- or spider-evocative shapes is built into most mammals.

Sensory Hierarchy: Exploratory Behaviors

There are other approaches that induce exploratory behavior as a response to a drive to (efficiently) collect information that serves–is relevant to–action learning and selection and is often initiated by random movement, sometimes called servo-babbling in robotics [530], e.g., see the attached paper14. In a hostile environment, this proclivity has to be balanced by a survival instinct that recognizes and avoids dangerous situations.

In social animals and artificial agents expected to perform collaboratively, generally one instills an instinct to interact with, e.g., seek out and playfully engage with siblings, and learn how to "read" and respond appropriately to the intentions of other agents in their species-specific social milieu. You might want to think carefully about how to implement such instincts and affordances in your experimental environment and its programmable agents.

Efference Copies and Corollary Discharge

Gordon et al [202] in a review of discussions that took place in a workshop at the Santa Fe Institute entitled "Perception & Action – An Interdisciplinary Approach to Cognitive Systems Theory" discuss the biological evidence for integration of perception and action in the brain and the role of efference copies in particular. The two referenced papers in the following quote are worth a look if you are interested in this area:

Foundational anatomical and physiological studies have provided substantial evidence for the integration of motor and sensory functions in the brain (for a recent review, see Guillery and Sherman [204]). Regarding the neuroanatomy of the thalamus and cortex, Guillery and Sherman have noted that most, if not all, ascending axons reaching the thalamus for relay to the cortex have collateral branches that innervate the spinal cord and motor nuclei of the brainstem (see Guillery [204]). Similarly, those cortico-cortical connections that are relayed via higher-order thalamic structures, such as the pulvinar nucleus, also branch to innervate brainstem motor nuclei. Guillery and Sherman thus hypothesize that a significant portion of the driving inputs to thalamic relay nuclei are "efference copies" of motor instructions sent to subcortical motor centers, suggesting a more pervasive ambiguity between sensory and motor signals than has previously been acknowledged. (Excerpt from Gordon et al [202])
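The computational role usually ascribed to an efference copy can be sketched in a few lines. The code below is an illustration under simplifying assumptions (a scalar motor command, a linear forward model with a hypothetical `gain` parameter, and sensory shifts expressed in the same coordinate frame as the command); it is not a model taken from Gordon et al or from Guillery and Sherman.

```python
def forward_model(motor_command, gain=1.0):
    """Predicted sensory consequence of one's own motor command
    (the corollary discharge). The linear gain is an illustrative assumption."""
    return gain * motor_command

def perceive(sensed_shift, motor_command):
    """Subtract the predicted self-generated signal (reafference) from the raw
    sensory signal, leaving the part attributable to the external world."""
    return sensed_shift - forward_model(motor_command)

# The eye moves 5 units and the image shifts 5 units purely from self-motion.
self_generated = perceive(sensed_shift=5.0, motor_command=5.0)

# Same eye movement, but the object also moved 2 units on its own.
object_moved = perceive(sensed_shift=7.0, motor_command=5.0)

print(self_generated, object_moved)
```

In the first case the residual is zero, so the world is perceived as stable; in the second the residual isolates the object's own motion. In a predictive coding setting this residual would play the part of the error signal passed up the hierarchy.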

Emergence of Sensory Motor Hierarchies

In doing background research helping students interested in projects relating to natural language processing, the Wei et al [512] paper that Rishabh Singh suggested as an interesting example of exploiting the relationship between natural and artificial (programming) languages got me thinking about using language as the basis for a bottleneck learning strategy [483]. In particular, I was intrigued with their use of the mutual information15 of two language models as the basis for – or at least one component of – a regularization scheme. Another idea that kept coming up in my discussions with students had to do with the nature of hierarchy, and in particular, hierarchy in the context of Fuster's perception-action cycle16.
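As a reminder of what the quantity measures, here is a small sketch that computes the mutual information of two discrete variables from their joint probability table. The two toy tables are illustrative assumptions and have nothing to do with the language models used by Wei et al.

```python
import math

def mutual_information(joint):
    """I(X;Y) in bits, given a joint probability table joint[x][y]."""
    px = [sum(row) for row in joint]          # marginal of X
    py = [sum(col) for col in zip(*joint)]    # marginal of Y
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:                       # 0 * log(0) is taken to be 0
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi

independent = [[0.25, 0.25], [0.25, 0.25]]   # X tells you nothing about Y
coupled = [[0.5, 0.0], [0.0, 0.5]]           # X determines Y

mi_independent = mutual_information(independent)
mi_coupled = mutual_information(coupled)
print(mi_independent, mi_coupled)
```

Mutual information is zero when the two variables are independent and reaches one bit when one fair binary variable fully determines the other, which is why it is a natural measure of how much two models "agree".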

With these ideas in my mind, I started reviewing related work by Naftali Tishby and Yoram Singer: Tishby for his joint effort with Fernando Pereira and Bill Bialek [483] on the information bottleneck method, and Singer for his work on adaptive mixtures of probabilistic automata and the hierarchical hidden Markov model that he developed with Tishby and Shai Fine [162]. The relevance of the bottleneck method is obvious; the hierarchical model is the simplest partially observable stochastic process one might use as a theoretical basis for studying hierarchical reinforcement learning.
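For reference, the information bottleneck method is usually stated as the following variational problem. The symbols are the conventional ones rather than notation taken from this log: X is the input, Y the relevance variable, T the compressed representation of X, and β ≥ 0 sets the trade-off between compression and preserved relevant information.

```latex
\min_{p(t \mid x)} \; \mathcal{L}\bigl[p(t \mid x)\bigr]
  \;=\; I(X;T) \;-\; \beta\, I(T;Y),
\qquad \text{subject to the Markov chain } T \leftrightarrow X \leftrightarrow Y .
```

Small β favours aggressive compression of X, while large β favours keeping whatever in X is informative about Y.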

In his 2013 Technion lecture on the emergence of hierarchies in sensing-acting systems, Tishby uses information theory to model the performance of an agent constrained to interleave information gathering and acting in its environment. He and his coauthor Daniel Polani [484] are able to prove that a hierarchy of features will emerge naturally and converge on an asymptotically optimal solution as a consequence of the agent gathering information useful for acting in its environment. Their general formulation shares some characteristics with Karl Friston's free energy principle for biological systems [261]; however, I found Tishby and Polani's analysis clearer and more rigorous17.

Simplifying somewhat, Tishby and Polani assume the agent will start by learning features that apply only to its immediate spatial and temporal extent and will continue doing so until further effort yields diminishing returns relative to future rewards [530]. In their model, they appeal to free energy and the value of information, establishing a direct analogy with compression-distortion trade-offs in source coding. Having exhausted its prospects for extracting value from this limited spatio-temporal extent, the agent expands its spatial and temporal extent and begins learning features whose respective receptive fields are proportionally expanded. The same cycle repeats until the features in the next level of the hierarchy extend beyond the scope of the agent's ability to extract additional value. By recursively expanding the agent's spatio-temporal extent at an exponential rate, e.g., doubling its size at each level, they are able to prove convergence and establish an optimal lower bound18.
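
To make the doubling schedule concrete, here is a minimal sketch in Python. It illustrates only the bookkeeping of exponentially expanding extents, not Tishby and Polani's free-energy objective or their convergence proof. The function name `extent_schedule` and its parameters `base_extent` and `horizon` are hypothetical and introduced purely for illustration.

```python
def extent_schedule(base_extent: int, horizon: int) -> list[int]:
    """Return the extent covered at each level of the hierarchy.

    Assumption (illustrative only): each level doubles the extent of the
    level below it, and levels are added until the extent covers `horizon`.
    """
    if base_extent < 1 or horizon < 1:
        raise ValueError("base_extent and horizon must be positive integers")
    schedule = [base_extent]
    # Keep doubling until the top level covers the whole horizon.
    while schedule[-1] < horizon:
        schedule.append(schedule[-1] * 2)
    return schedule


if __name__ == "__main__":
    for horizon in (16, 1000):
        schedule = extent_schedule(1, horizon)
        print(f"horizon={horizon}: {len(schedule)} levels, extents={schedule}")
```

Under this assumption the number of levels grows only logarithmically with the horizon, which is the practical appeal of an exponential expansion rate.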

Tishby’s lecture at the Institute for Advanced Study on February 24, 2020, covers some of the same ground, but this time he provides a computational model using recurrent deep neural networks that starts with a single hidden layer and progressively adds new layers that behave in accord with his theoretical analyses [485]. I'm reading papers and watching lectures by Liz Spelke on child development, and it occurs to me that neonates and toddlers in their first 18 months appear to exhibit a similar trajectory of expanding focus, progressively enlarging their sphere of influence as their sensory apparatus and means of interacting with their environment develop. While neurogenesis is nearly complete by birth, much of the infant brain, and early visual cortex in particular, undergoes extensive change over the first 18 months19.

Tishby's talk also prompted me to review the work of Etienne Koechlin and his colleagues Christopher Summerfield and Thomas Jubault as it relates to the role of time and temporal hierarchies [282, 284]. Joaquín Fuster [183] summarizes their contribution, writing, "In their methodologically impeccable study, Koechlin et al [285] reveal the neural dynamics of the frontal hierarchy in behavioral action. Progressively higher areas control the performance of actions requiring the integration of progressively more complex and temporally dispersed information. Their study substantiates the crucial role of the prefrontal cortex in the temporal organization of cognitive control."

In [282], Koechlin and Summerfield contrast the roles of sensorimotor and cognitive control, dividing the latter into three components corresponding to branching, episodic and contextual control. These three components are identified with adjacent areas in the prefrontal cortex, and their time course and dependence on one another are explained in terms of their related total, conditional and mutual information. If you are reading about this general area of study for the first time, you might want to read David Badre's 2008 review summarizing the evidence for a rostro-caudal gradient of function in prefrontal cortex [41].
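
For readers who want a refresher on the quantities mentioned above, the following is a minimal sketch in Python of how total (entropy), conditional and mutual information relate for a toy joint distribution over a stimulus and an action. It does not reproduce Koechlin and Summerfield's decomposition; reading "total" as the entropy of action selection is my assumption, and the function names and toy distributions are hypothetical.

```python
import math
from collections import defaultdict

Joint = dict[tuple[str, str], float]  # maps (stimulus, action) to probability


def entropy(dist: dict) -> float:
    """Shannon entropy in bits of a distribution given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def marginal(joint: Joint, axis: int) -> dict[str, float]:
    """Marginalize the joint over the other variable (axis 0: stimulus, 1: action)."""
    out: dict[str, float] = defaultdict(float)
    for key, p in joint.items():
        out[key[axis]] += p
    return dict(out)


def mutual_information(joint: Joint) -> float:
    """I(S;A) = sum over (s, a) of p(s,a) * log2( p(s,a) / (p(s) p(a)) )."""
    ps, pa = marginal(joint, 0), marginal(joint, 1)
    return sum(p * math.log2(p / (ps[s] * pa[a]))
               for (s, a), p in joint.items() if p > 0)


def conditional_entropy(joint: Joint) -> float:
    """H(A|S) = H(S,A) - H(S): uncertainty about the action left after seeing S."""
    return entropy(joint) - entropy(marginal(joint, 0))


if __name__ == "__main__":
    # Toy case 1: the stimulus fully determines the action.
    determined = {("s0", "a0"): 0.5, ("s1", "a1"): 0.5}
    # Toy case 2: the action is independent of the stimulus.
    independent = {(s, a): 0.25 for s in ("s0", "s1") for a in ("a0", "a1")}
    for name, joint in (("determined", determined), ("independent", independent)):
        total = entropy(marginal(joint, 1))
        print(name, total, mutual_information(joint), conditional_entropy(joint))
```

In both toy cases the identity H(A) = I(S;A) + H(A|S) holds: the total information needed to select an action splits into the part supplied by the stimulus and the part that must come from elsewhere, e.g., from context or episodic signals.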

Miscellaneous Loose Ends: A number of you have asked for my recommendations regarding introductory material on various topics in systems and cognitive neuroscience. Some of you may know about the MIT Open Courseware (OCW) Foundation. I've taken several courses over the years and have been impressed with my small sample. About ten years ago I took Fundamentals of Biology with Eric Lander. It refreshed (and corrected) what I had previously learned and filled in gaps (gaping holes, really) in what I already knew. As a plus, Lander is an affable and well-informed instructor full of interesting anecdotes about the human genome project and knowledgeable about a wide range of other topics20.

If you are looking for introductory lectures, go to the OCW website and use the search box to find out what MIT has to offer. Relevant to CS379C, the Brains, Minds and Machines Summer Course run by Tommi Poggio includes several lectures directly relevant to our discussions concerning human development. In particular, Liz Spelke's lecture on Cognition in Infancy provides an excellent introduction. The lecture is in two parts, with the second part dealing with how children learn various concepts of the sort relevant to common sense reasoning.

May 17, 2020

%%% Sun May 17 04:55:45 PDT 2020

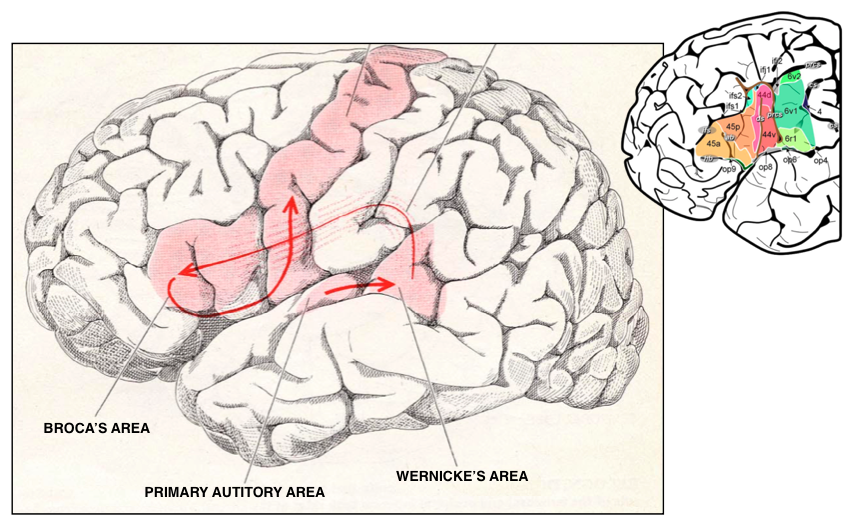

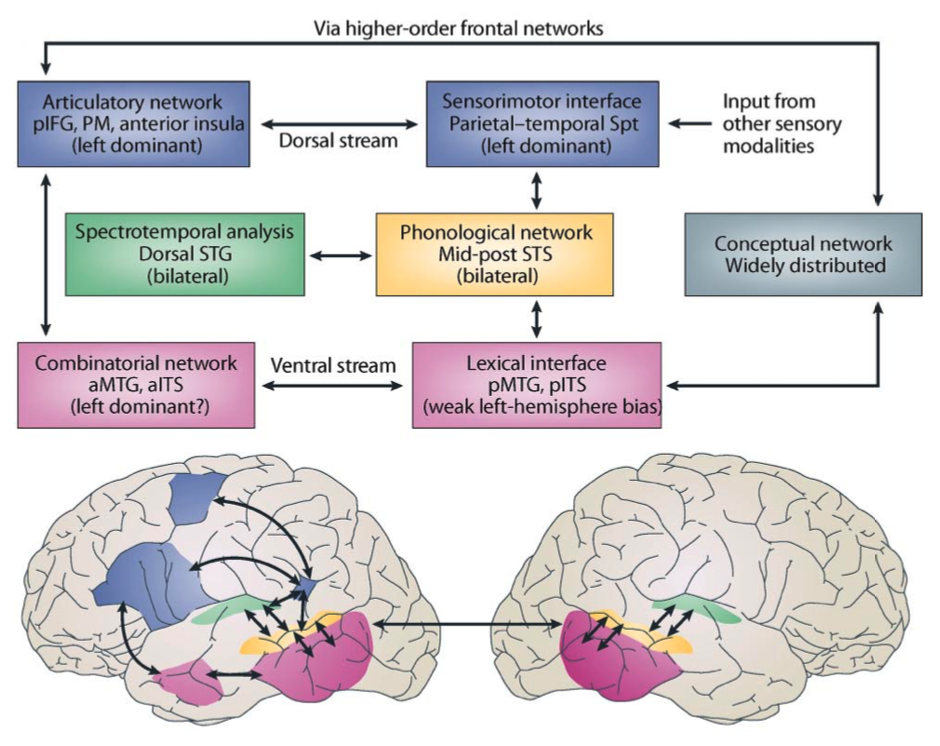

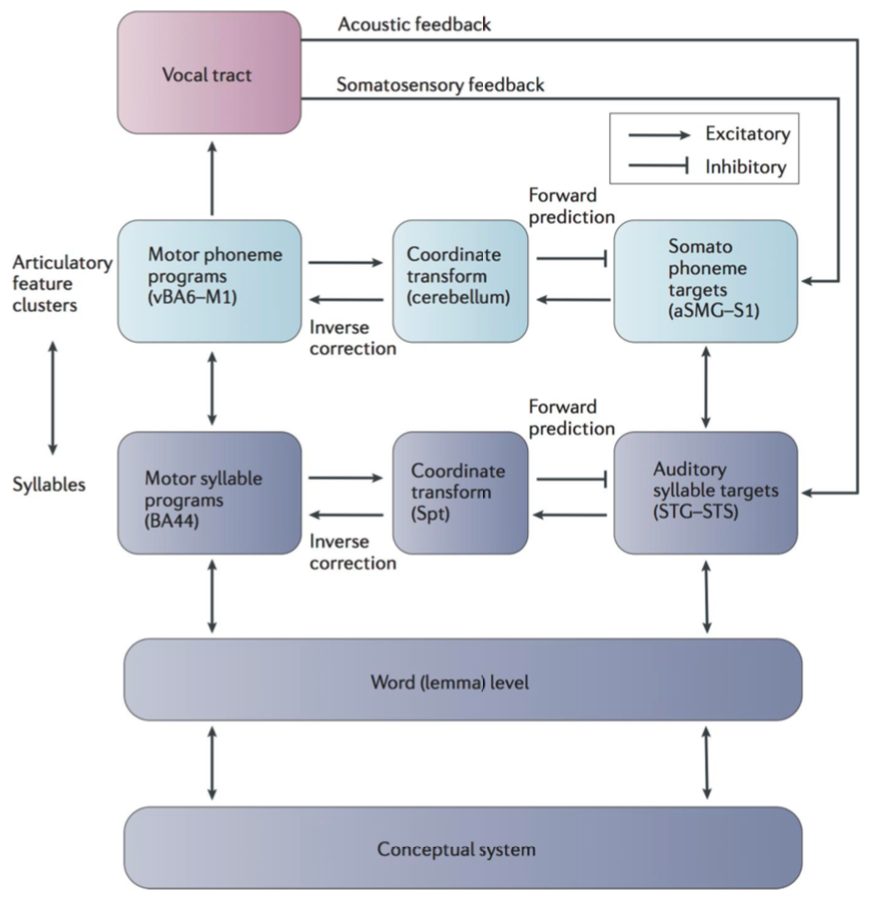

I've been (somewhat) obsessed with Fuster's perception-action cycle and the related aligned-and-reciprocally-connected sensory / motor hierarchy since I first encountered his research on the prefrontal cortex [185]. Since then I've generated nearly a dozen models, only a few of which ever made it into these research notes. Most recently I got excited thinking about perisylvian language networks and, in particular, the arcuate fasciculus and the inverse pathway from motor (Broca's complex) to sensory (including Wernicke's area) cortex and its possible role in supporting inner speech. The model that I proposed:


What if we were to implement a variant of the Wei et al [512] code-generation encoder-decoder unit as a forward model complementing my inverse (inner speech) model and then couple the two with a regularizing loss function as in Wei et al? As a simple thought experiment, assume that both the forward and inverse models involve a latent-variable (informational) bottleneck layer [485] as shown in the following graphic. What might serve as the analog of their dual-constraints module integrating the language model for code and the language model for comments, and what exactly would be their role in the loss function?


Note that the dual-constraints component of the Wei et al paper is part of the objective function intended to bias the use of the language employed in each of the primal and dual models. In the case of the reciprocal connections in the above speech network, both the primal and dual pathways use the same vocabulary / lexicon for describing their respective source thought vectors, but the intended (contextually-based) meaning of a word or n-gram might be different depending on the target audience and ambient interlocutory environment, e.g., a technical discussion between two software engineers in the midst of pair programming versus a conversation with a partner in an obviously social mixed-company context.
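
As a concrete starting point, here is a minimal sketch in Python of one way such a coupling term could look. It assumes the dual constraint takes the probabilistic-duality form familiar from dual supervised learning, P(x) P(y|x) = P(y) P(x|y), where x and y are the paired inputs of the forward and inverse models and the marginals come from two language models. Whether this matches the Wei et al implementation in detail is an assumption on my part, and all function names, parameter names and the weight `lam` are hypothetical.

```python
import math


def duality_gap(log_p_x: float, log_p_y_given_x: float,
                log_p_y: float, log_p_x_given_y: float) -> float:
    """Difference between the two factorizations of log P(x, y).

    Zero when the forward model, the inverse model and the two language
    models agree on the joint probability of the pair (x, y).
    """
    return (log_p_x + log_p_y_given_x) - (log_p_y + log_p_x_given_y)


def coupled_loss(forward_nll: float, inverse_nll: float,
                 gap: float, lam: float = 0.1) -> float:
    """Sum of the two task losses plus a squared duality-gap penalty.

    `lam` is a hypothetical weight trading off the regularizer against
    the negative log-likelihoods of the forward and inverse models.
    """
    return forward_nll + inverse_nll + lam * gap ** 2


if __name__ == "__main__":
    # Consistent toy numbers: P(x)=0.5, P(y|x)=0.4, P(y)=0.25, P(x|y)=0.8,
    # so both factorizations give P(x, y) = 0.2 and the gap vanishes.
    gap = duality_gap(math.log(0.5), math.log(0.4), math.log(0.25), math.log(0.8))
    print(gap, coupled_loss(forward_nll=-math.log(0.4),
                            inverse_nll=-math.log(0.8), gap=gap))
```

In the speech-network setting, the open question raised above is what plays the role of the two marginal language models, e.g., context-specific distributions for different audiences, since those are what the penalty uses to tie the forward and inverse pathways together.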


As illustrated in the graphic, the language-based forward model is depicted as a sequence of words, just as the inverse model was. Instead, suppose that we replace each word sequence by a representation of the respective distribution with which the words and phrases in the language model were generated. In the case of the programmer's apprentice, we want to allow for multiple distributions associated with different agents that the apprentice interacts with, including the programmer and fictional agents that capture different points of view or context-appropriate distributions, e.g., the difference between a social setting and the back and forth one might expect between the programmer and the apprentice in the midst of pair programming.

In the machine theory of mind (ToM) model proposed by Rabinowitz et al [412], the authors distinguish between "a general theory of mind–the learned weights of the network, which encapsulate predictions about the common behavior of all agents in the training set–and an agent-specific theory of mind–the 'agent embedding' formed from observations about a single agent at test time, which encapsulates what makes this agent’s character and mental state distinct from others’. These correspond to a prior and posterior over agent behavior." One use of such a ToM would allow, for example, the apprentice to channel the programmer when absent by approximating how the programmer might describe a code fragment depending on how the apprentice describes it.

Miscellaneous Loose Ends: Related to the action-perception cycle, I came across some work from Naftali Tishby on this topic. If you don't know Tali Tishby, his theoretical work with Fernando Pereira and Bill Bialek on the information bottleneck method [485, 483] has been highly influential in machine learning. A multi-class course taught by Tishby at The Edmond and Lily Safra Center for Brain Sciences (ELSC) at the Hebrew University of Jerusalem is available as a collection of individual videos entitled "Perception Action Cycle Week m", where m can run from 1 to possibly larger than 12; I haven't looked any further.

He has a related chapter in Perception-Action Cycle: Models, Architectures, and Hardware [109], which is available for download on the Springer website (PDF). Also of possible interest are his Technion lectures "On the Emergence of Hierarchies in Sensing-Acting Systems" (VIDEO) and the more recent lecture on February 24, 2020 at the Institute for Advanced Study, "Direct and Dual Information Bottleneck Frameworks for Deep Learning" (VIDEO).

May 15, 2020

%%% Fri May 15 04:10:41 PDT 2020

I found Leslie Kaelbling's discussion in class on Thursday particularly thought-provoking. I liked the way she set up the problem. Despite using some of the same familiar words, it was clear she was departing from the usual story, and she took her time bringing the audience along. That was important; otherwise, when she reached the point where she could reveal her alternative way of looking at the problem, the students might not have recognized it as novel or understood its implications.

It makes perfect sense that we as engineers can bootstrap a system by programming in inductive biases (instinct), general organizing strategies (cognitive architecture) and mechanical design (embodied affordances), in order to accelerate subsequent learning. As Leslie pointed out, the trick is to find a trade-off between up-front engineering effort that can benefit a wide range of applications and on-the-job training necessary to accommodate the details of the target working environment.

Apart from the fact that I really want to understand the computational architecture of the human brain for lots of personal and scientific reasons, I see my strategy for proceeding pretty much along the same lines as Leslie does, albeit with a very different way of describing it. Despite being determined by natural selection, the human brain is remarkably well engineered for its target environment(s). The trade-off between "nature and nurture" mirrors Leslie's engineering trade-off and exhibits some extraordinarily clever compromises.

In particular, the clever integration of connectionist and symbolic computing capabilities, which I can rhapsodize over at length, constitutes an engineering accomplishment of the first rank. From my perspective, natural systems benefit from three distinct engineering practices: (a) natural selection accounts for the basic physical substrate, (b) our staged development, spread out over two decades but particularly intense in the first 5-6 years, corresponds to the work Leslie and her students do, and (c) the rest (overlapping with development) plays out over a lifetime of on-the-job training and includes constantly adapting to our changing environment and compensating for our aging physical infrastructure.

It's worth pointing out that in humans (b) and (c) include the integration and refinement of language, undoubtedly the most powerful technology ever developed by human collective intelligence, and missing from Leslie's account, perhaps only excluded for lack of time. I don't see language as special, except in its more general characterization as a collection of cognitive genes refined over millennia by cultural selection that can be passed on, literally by word of mouth, from one generation to the next; rather, I see it as a tool for multi-agent coordination.

So instead of (a) Intel, Hitachi, Siemens, etc. providing the basic hardware, (b) MIT and Stanford engineers writing code to support a general declarative and procedural substrate, and (c) on-the-job machine learning, we (a') employ our software-instantiated, biologically inspired cognitive architecture, (b') recapitulate human development using curriculum learning systems to reduce sample complexity, and (c') use complementary learning systems to enable life-long learning that leverages human language to expedite on-the-job training.

Miscellaneous Loose Ends: You might take a look at the first few technical slides (#5 to #10) in my introductory lecture to get some idea just how far we are willing to abstract away from the messy biological details that most neurobiologists consider the most interesting aspects of human cognition.

I use the concept of a high-dimensional configuration space and a corresponding lower-dimensional manifold21 of reachable points to explain how the basal ganglia provides sequences of state-action-reward triples to the cerebellum, which the cerebellum then uses to program the motor cortex, an idea developed independently by both David Marr [341, 340] and James Albus [7, 8], building on ideas from Claude Shannon and Norbert Wiener.