Research Discussions

The following log contains entries starting several months prior to the first day of class, involving colleagues at Brown, Google and Stanford, invited speakers, collaborators, and technical consultants. Each entry contains a mix of technical notes, references and short tutorials on background topics that students may find useful during the course. Entries after the start of class include notes on class discussions, technical supplements and additional references. The entries are listed in reverse chronological order with a bibliography and footnotes at the end.

Prological Epilogue

Usually an epilogue appears at the end of a book and a prologue at the beginning. However, the entries in this research log appear in reverse chronological order and so I've coined the phrase "Prological Epilogue" to refer to an epilogue that appears as a prologue to a text organized in reverse chronological order. This literary conceit serves in the present circumstances to note that the final class for this installment of CS379C occurred on June 1 and that all the later entries are included for students who continued working on their projects as well as new students who joined the group and started new projects. These students regularly met with me and my colleagues at Google and were provided with Google Cloud Compute Engine accounts to work with some of the larger datasets and take advantage of the machine learning resources enabled through the TensorFlow and Tensor Processing Unit (TPU) technologies.

September 21, 2016

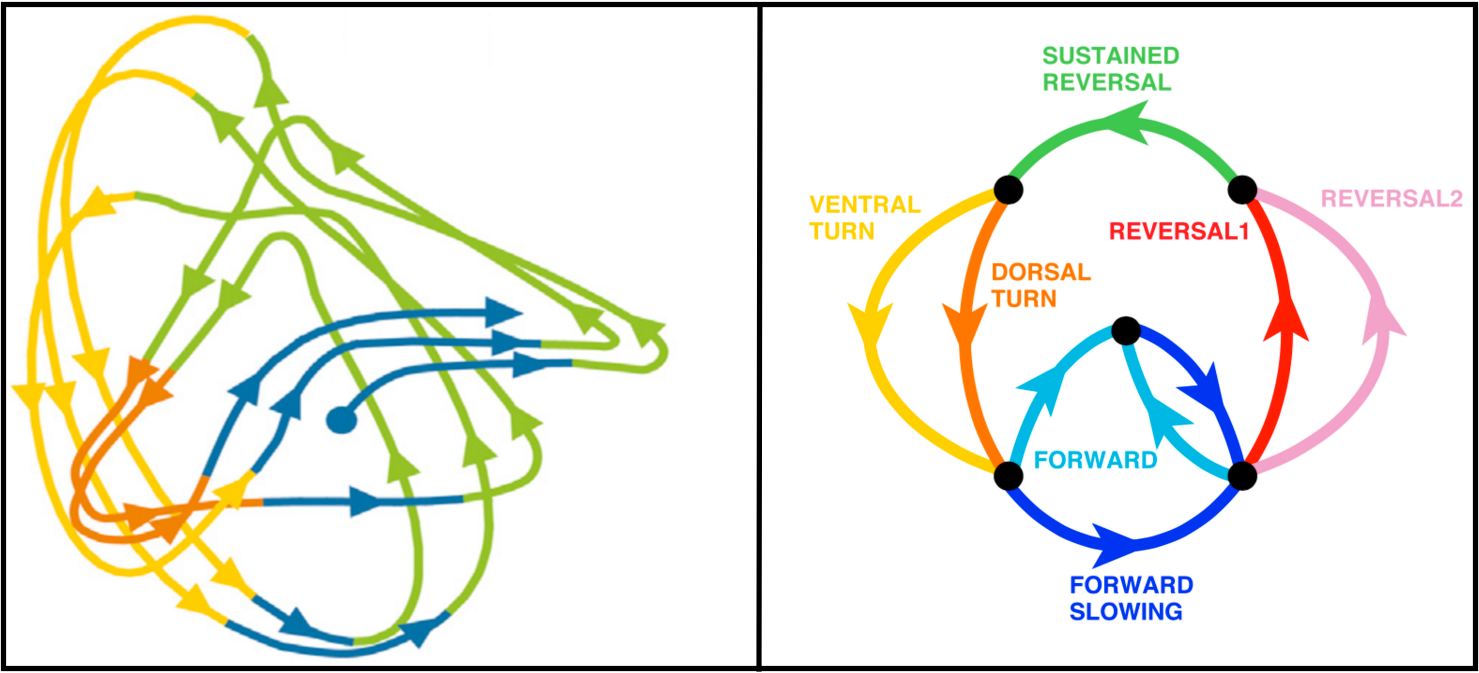

For the last few weeks I've been designing, building and experimenting with new tools for exploring the local structure of dense microcircuit connectomes. I spent yesterday developing a few demos to show off what I've done so far. The following notes are sketchy, but I'm hoping that the plots, associated captions and the introductory presentation I made during the San Francisco Neuromancer Rendezvous will provide enough context to give you an idea of what I'm trying to build.

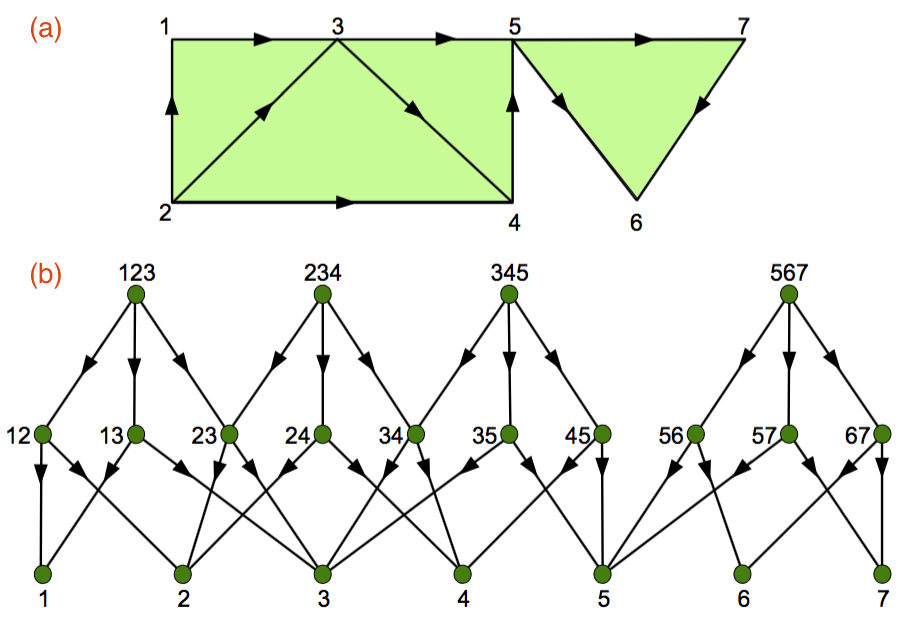

This log entry emphasizes topological invariants as microcircuit features. However, more-traditional, graph-theoretic methods for identifying functionally-relevant patterns of connectivity in terms of network motifs may work equally well or better. In the following, you may be well served by focusing primarily on the figures and their captions, since the intervening text consists primarily of notes to myself for expanding this entry to provide a more complete account of this project.

Revise and summarize earlier notes on using topological invariants to investigate the structural and functional properties of local regions of densely reconstructed microcircuits [ … ] review reasons for turning to the FlyEM dataset from Janelia [ … ] enumerate some of the main advantages of using the extensive FlyEM metadata provided by Janelia, and, in particular, the opportunity it affords for testing automated analysis algorithms to infer function from structure. [ … ] ←


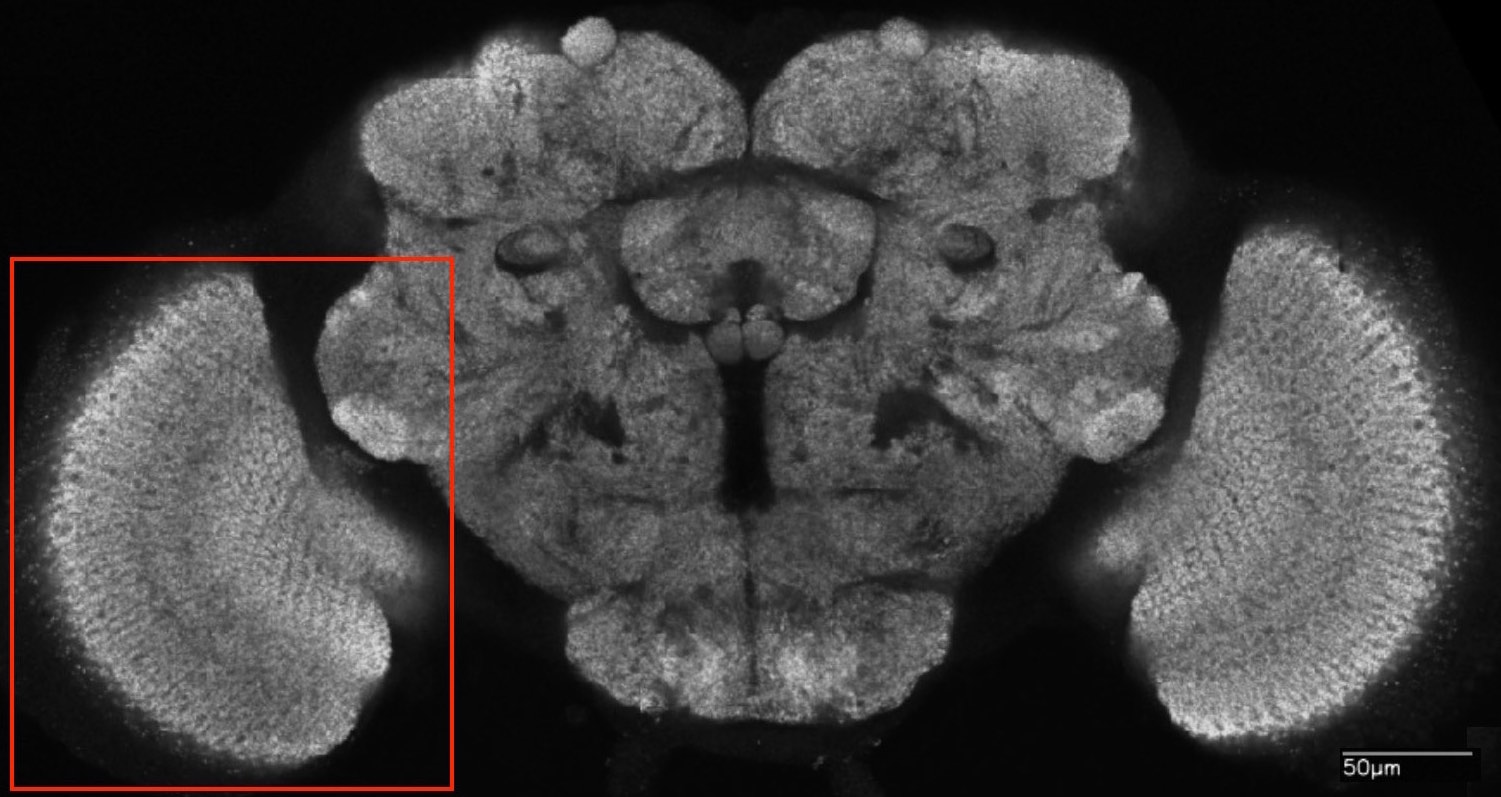

Mention related research at Janelia on the Drosophila visual system including, on the structural side, the work leading up to the seven-column medulla dataset [248, 281, 254], and, on the functional side, calcium imaging work out of Michael Reiser's lab [248]. Provide some detail on the resources offered in the FlyEM dataset and the extensive supporting tools and metadata. Note how the cell-type annotations and skeleton data facilitated much of the work described in this log entry.


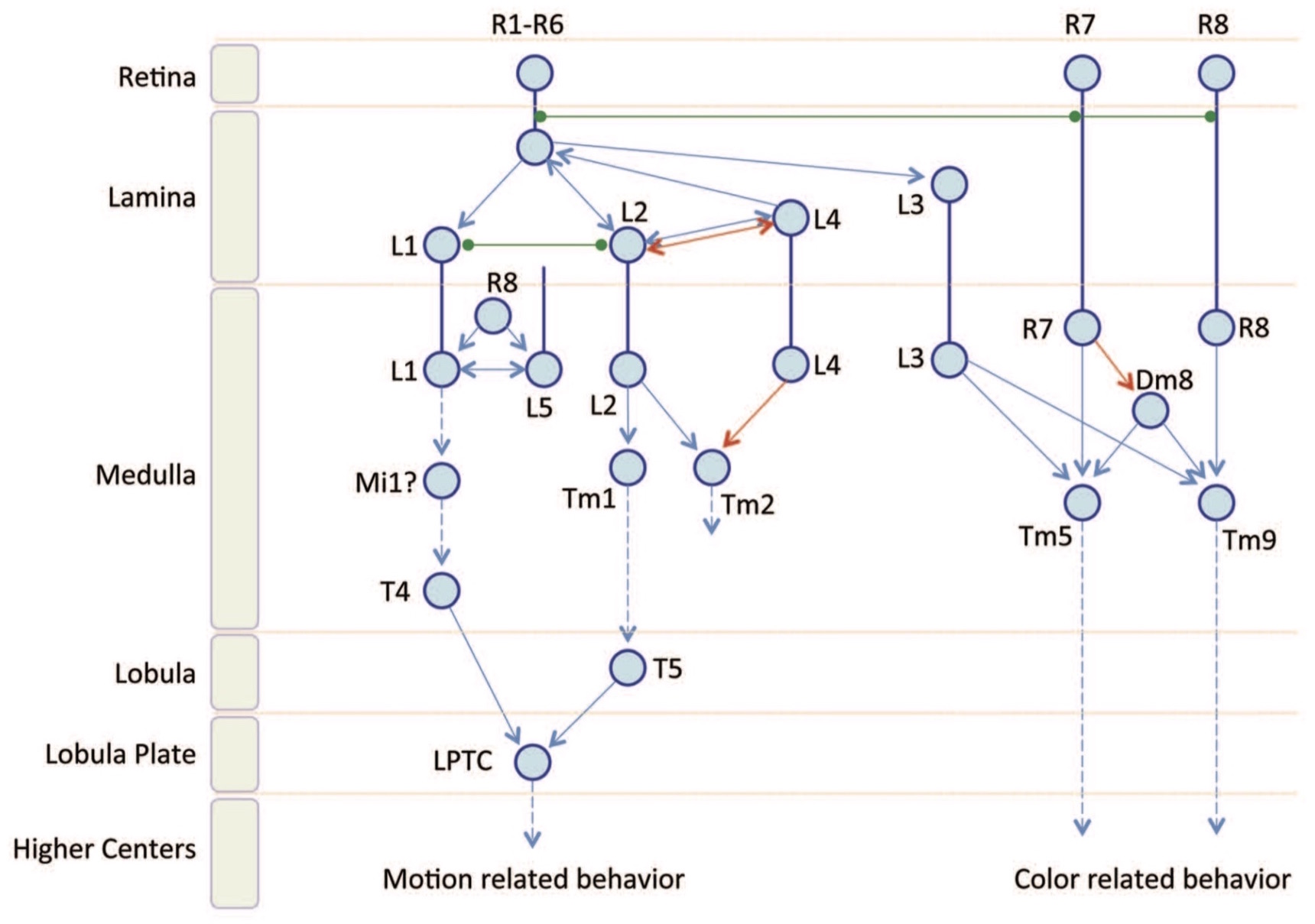

Mention Alexander Borst's research on the fly visual system [ … ] Borst and Euler [26] [ … ] possible relevance to the Reichardt-Hassenstein motion model which posits specific circuits including cell types that could be explained by or identified with topological properties [ … ] there are some obvious reasons why this would be challenging to achieve [ … ] thoughts about what constitutes localized computation [ … ] review of interpretations of topological invariants in the context of annotating neural circuits [51, 60, 89, 233] [ … ] ←


Appendix A: Drosophila Visual System

Expand this section and assign credit for the research and curated datasets alluded to in the borrowed figures. Provide the necessary background for students taking CS379C and supply links to the 2016 FlyEM Connectomic Hackathon and Virtual Fly Brain Project.


August 5, 2016

If you don't have time to read Fischbach and Dittrich [73], the introduction provided in the 2015 FlyEM Connectome Hackathon is probably enough to get you going. If you have a fast network connection, there are a number of tools for exploring the seven-column medulla dataset. VirtualFlyBrain serves FlyEM data in response to a wide range of queries; for example, here is the response to the query for "medulla intrinsic neuron Mi1". I also recommend reading [14] to accompany the earlier mentioned papers [248] and [254], which provide the first analyses of this dataset.

The seven-column medulla dataset consists of connectome-graph information, including a description of each neuron in neuronsinfo.json and each synapse in synapses.json, plus morphological information in the directory ./skeletons/, which holds one file per neuron containing a skeleton representation of that neuron in the SWC file format (see footnote 2):

There is one file for each skeleton, and each line of the file has the form:

n T x y z R P

n is an integer label identifying the current point, incremented by one from one line to the next.

T is an integer representing the type of neuronal segment, such as soma, axon, apical dendrite, etc. The standard accepted integer values are: 0 = undefined, 1 = soma, 2 = axon, 3 = dendrite, 4 = apical dendrite, 5 = fork point, 6 = end point, 7 = custom.

x, y, z give the Cartesian coordinates of each node.

R is the radius at that node.

P indicates the parent (the integer label) of the current point, or -1 to indicate an origin (soma).
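As a rough illustration of the line format just described, here is a minimal parsing sketch. The names `SWCNode`, `SEGMENT_TYPES` and `parse_swc` are made up for this example, the three-node skeleton is invented rather than taken from FlyEM, and skipping lines that begin with `#` assumes the usual SWC comment convention.

```python
from collections import namedtuple

# One record per SWC line: n T x y z R P (hypothetical field names).
SWCNode = namedtuple("SWCNode", "n type x y z radius parent")

# Segment-type codes as listed in the format description above.
SEGMENT_TYPES = {0: "undefined", 1: "soma", 2: "axon", 3: "dendrite",
                 4: "apical dendrite", 5: "fork point", 6: "end point",
                 7: "custom"}

def parse_swc(lines):
    """Parse an iterable of SWC text lines into a list of SWCNode records."""
    nodes = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank and comment lines
            continue
        n, t, x, y, z, r, p = line.split()[:7]
        nodes.append(SWCNode(int(n), int(t), float(x), float(y), float(z),
                             float(r), int(p)))
    return nodes

# Invented three-node skeleton, for illustration only.
example = """# hypothetical skeleton
1 1 0.0 0.0 0.0 2.0 -1
2 3 0.0 0.0 10.0 0.5 1
3 6 0.0 5.0 20.0 0.3 2
""".splitlines()

nodes = parse_swc(example)
roots = [node.n for node in nodes if node.parent == -1]
```

In practice one would pass an open file handle for something like ./skeletons/30465.swc in place of `example`.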

To organize the 3D Euclidean space in which the nodes of the graph—neurons—are embedded, the first thing we do is to transform the coordinates to a frame of reference more suitable for analysis, and then scale the coordinates to the unit cube to facilitate subsequent indexing and alignment. Ideally we would choose the centroid of the middle column as the origin of the new coordinate space and align the z-axis with the central axis of this column. Since the columns are fictional idealizations, we follow Stephen Plaza's suggestion to orient the frame of reference using the Mi1 medullary intrinsic neuron in the central (home) column since there is exactly one Mi1 type neuron per column and the cell is almost entirely contained within its associated column.

Below I've listed the neuronsinfo.json entry for the Mi1 neuron associated with the central column. The key 30465 is the cell body ID and is used to retrieve skeleton information stored in ./skeletons/30465.swc. PSD refers to post-synaptic dendritic spines, and Tbar refers to the pre-synaptic boutons. Column PSD/Tbar Fraction lists the fraction of PSD/Tbar in each of the seven focal columns, labeled A-F plus home or H, and Layer PSD/Tbar Fraction lists the fraction of PSD/Tbar in each of the 10 layers of the medulla. Column Volume Fraction lists the fraction of the cell arborization in each of the seven focal columns. Note that 30465 is almost entirely contained in H:

    "30465": {
        "Class": "Mi",
        "Column ID": "home",
        "Column PSD Fraction": {
            "A": "0.0163636364",
            "B": "0.0145454545",
            "C": "0.0018181818",
            "D": "0",
            "E": "0.0181818182",
            "F": "0.0418181818",
            "H": "0.8363636364"
        },
        "Column Tbar Fraction": {
            "A": "0",
            "B": "0.0025125628",
            "C": "0",
            "D": "0",
            "E": "0.0025125628",
            "F": "0.0025125628",
            "H": "0.9170854271"
        },
        "Column Volume Fraction": {
            "A": "0.001248788",
            "B": "0.01323397",
            "C": "0.0036433003",
            "D": "0.0002563759",
            "E": "0.005611789",
            "F": "0.0087104412",
            "H": "0.9085093161"
        },
        "Columnar Location": "Interior",
        "Columnar Spread": "Single Columnar",
        "Layer PSD Fraction": {
            "m1": "0.3732394366",
            "m2": "0.1285211268",
            "m3": "0.036971831",
            "m4": "0.0052816901",
            "m5": "0.2024647887",
            "m6": "0.0158450704",
            "m7": "0",
            "m8": "0",
            "m9": "0.1901408451",
            "m10": "0.0457746479"
        },
        "Layer Tbar Fraction": {
            "m1": "0.1642156863",
            "m2": "0",
            "m3": "0.0147058824",
            "m4": "0.0343137255",
            "m5": "0.0637254902",
            "m6": "0.0049019608",
            "m7": "0",
            "m8": "0",
            "m9": "0.5294117647",
            "m10": "0.1789215686"
        },
        "Name": "Mi1 H",
        "Superclass": "Intrinsic Medulla",
        "Type": "Mi1"
    },

To determine the origin of the transformed coordinate space, we compute the centroid of the skeletal coordinates supplied in ./skeletons/30465.swc. To normalize the coordinates, we scale each dimension to the interval [-0.5,0.5]. Stephen mentioned the column was somewhat tilted. We could fit a reference line to the 30465 skeletal coordinates and rotate the coordinate frame so the reference line coincides with the z-axis, but will refrain from mucking about further, unless the tilt in the original coordinate space unduly complicates analysis. Note we can use the Mi1 neurons associated with the other six focal columns to provide additional structurally-relevant spatial information to infer functional properties of cells.
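The recentering and scaling step can be sketched as follows. This is one possible reading, not necessarily the code we ran: it assumes the origin should stay fixed at the Mi1 centroid, so each axis is divided by twice its largest absolute offset from that origin. The function names and the toy coordinates are hypothetical.

```python
def centroid(points):
    """Mean of a list of (x, y, z) tuples."""
    k = len(points)
    return tuple(sum(p[i] for p in points) / k for i in range(3))

def normalize(points, origin):
    """Translate so `origin` maps to zero, then scale each axis into [-0.5, 0.5].

    Assumption: the origin stays at zero, so each axis is divided by twice its
    largest absolute offset rather than by its full min-to-max range.
    """
    shifted = [tuple(p[i] - origin[i] for i in range(3)) for p in points]
    # Per-axis half-width; fall back to 1.0 if an axis has no spread at all.
    half = [max(abs(p[i]) for p in shifted) or 1.0 for i in range(3)]
    return [tuple(0.5 * p[i] / half[i] for i in range(3)) for p in shifted]

# Toy data: the "skeleton" stands in for the nodes of ./skeletons/30465.swc.
skeleton = [(0.0, 0.0, 0.0), (2.0, 4.0, 6.0)]
everything = skeleton + [(1.0, 2.0, 9.0)]

origin = centroid(skeleton)
scaled = normalize(everything, origin)
```

Rotating the frame to remove the column tilt would be an additional step and is not shown here.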

Using the additional annotations available in synapses.json, neuronsinfo.json and ./skeletons/*.swc, we can enrich the language we use for defining functional motifs as Art suggested earlier this week. We can also use these annotations to evaluate motifs that rely entirely on directional connectivity available in the connectome adjacency matrix, and, given the functional data described in [248] which Michael Reiser has agreed to share with us, we might have a much better chance of aligning structural and functional information.

August 3, 2016

The number of ommatidia in a compound eye varies widely, from the dragonfly with its ~30,000 ommatidia to subterranean insects having around 20. Even within the order of so-called true flies known as Diptera, there is wide variation, e.g., a fruit fly (Drosophila melanogaster) has ~800, a house fly ~4,000, and a horse fly ~10,000 ommatidia. The number of ommatidia is directly related to the number of columns in the medulla: There are as many columns in the medulla as there are cartridges in the lamina and as many cartridges as there are ommatidia in the eye. To estimate the number of neurons in seven columns of Drosophila medulla, multiply the total number of neurons in the medulla (approximately 40,000) by seven and divide by the total number of columns (approximately 800), giving 40,000 × 7 / 800 = 350.

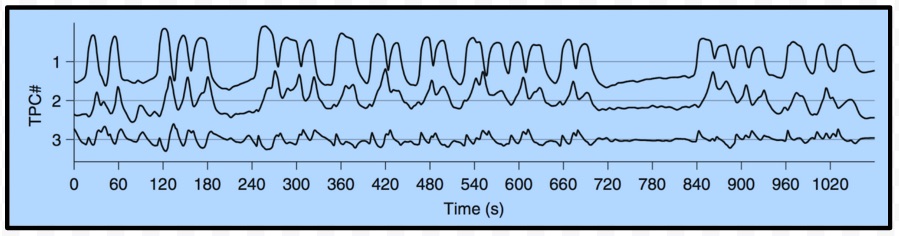

In response to my question about whether there exists calcium imaging data for Drosophila, Michael Reiser from Janelia (Reiser Lab) responded positively, and graciously volunteered to share the data from his 2014 paper [248] focusing on visual-motion sensing. Borst and Helmstaedter refer to this work in their paper [27] concerning motion-sensing circuits that exist in both fly and mammal. Here's what Michael had to say:

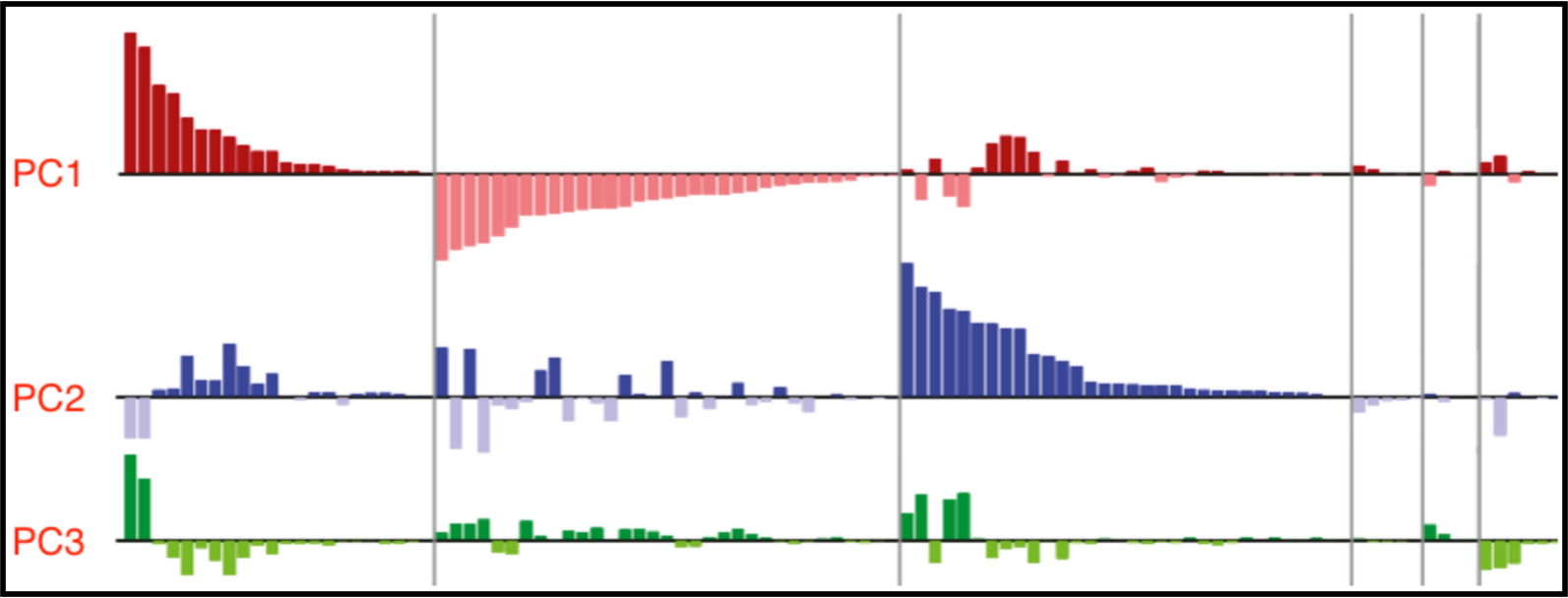

MBR: We did calcium imaging from the medulla, with a calcium indicator expressed in approximately all cell types. We did a pretty rudimentary analysis (PCA) in the attached paper, but that was good enough to show striking agreement between spatial patterns of neuronal activity and specific anatomical pathways. Clearly there is a lot more that could be done with that original data set, although in my lab we have moved on to imaging from individual cell types, one at a time (to get at the specific question of how directionally selective motion signals emerge from non-selective inputs). If you would be interested to dig deeper, I'll make sure we get the data from the 2014 paper to you.

For purposes of visualization and possible alignment with the functional (CI) data that Michael volunteered to share with us, I wanted to get more precise information on the location of columns, layers, particular cell types, etc. Here is an email exchange with Stephen Plaza regarding the position of the "home" column in the FlyEM data:

TLD: Do you have the coordinates of the centroid and dimensions of the central (home or H) column in the same coordinate frame in which the cell body locations are provided? The axis of the column appears to be parallel to the z-axis. Also could you tell me the units of the cell body coordinates / locations? It looks like the max separation (in z) is over 6000, and, since the size of an entire Drosophila brain is around 590 μm × 340 μm × 120 μm that probably argues for using the nanometer as the unit for expressing coordinates but I wanted to be sure.

SMP: Correct. The column axis is roughly z-axis aligned (there is a slight tilt). The voxel resolution of the original dataset is 10 × 10 × 10 nm. I believe the skeletons are in one-to-one correspondence with the source data. 60-micron spans for the neurons seem correct. The best way to estimate column dimensions / location / etc is to use the Mi1 neurons since they are one per column and run down the center. The home column Mi1 should contain an H indication.

The primary topic examined in Nériec and Desplan [182] is the development of the Drosophila visual system, "from the embryo to the adult and from the gross anatomy to the cellular level [...] explore the general molecular mechanisms identified that might apply to other neural structures in flies or in vertebrates." It is worth reading, or at least skimming, to acquaint yourself with its content for future reference. Regarding circuits exhibiting feedback and interesting substructural motifs, the discussion and cited work in Ehrlich and Schüffny [65] provide some interesting examples. For instance, the following graphic illustrates two versions of a putative attractor-network model of associative memory in layers II and III of neocortex:


Miscellaneous loose ends: I've been trying to carve out some time to think more about the relationship between conversation and programming, specifically pair programming (two humans) or semi-automated programming (a computer and a human). Here are a few thoughts: Recovering from a misunderstanding, resolving ambiguity and mitigating errors all have their analogs in what goes on in debugging code, pair programming, altering your travel plans, correcting and following directions with or without the aid of the person who gave you the instructions in the first place, understanding recipes and modifying procedures to suit new applications or use cases.

"What is this supposed to do?", "Does that do the same thing as hitting the escape key?", "Is that like the mapping function in Python?", "I want to get there in time for dinner.", "Are you enjoying this conversation? Would you like to talk about something else?", "Is there someone else I could talk to in order to get this straightened out?", "You think I can expense this dinner?", ..., How would you tell someone how to replace a washer on the cold water faucet of the kitchen sink? How would it make a difference if they could take apart a car and put it back together so it worked, but didn't know a thing about plumbing?

In fact, a large fraction of human communication (and, for that matter, human-computer interaction) involves context setting and switching, judging the attentiveness or understanding of your conversational partner, judging the interest of your audience in giving a public lecture, etc.: "Are you following this?", "Are we still talking about the same thing?", "Are we looking at the same person?" It also involves estimating your interlocutor's tolerance for talking about whatever topic you opened the conversation with: "Am I boring you?", "Would you rather talk about something else?".

A couple of weeks back, I talked with Gilad Bracha <gbracha@google.com> and Luke Church <lukechurch@google.com> about possible synergy of my vaporish programmer's apprentice (PA) with their proto project of generating code by finding and adapting similar code. Yesterday, I had lunch with Steve Reiss <spr@cs.brown.edu> and talked about his prototype system for semantics-based code search, S6, which addresses similar use cases. Finally, DJ Seo sent me his new paper, which came out in Neuron yesterday. He and his collaborators have made considerable progress since he visited a few months back and now have a basic prototype system up and running [222].

July 31, 2016

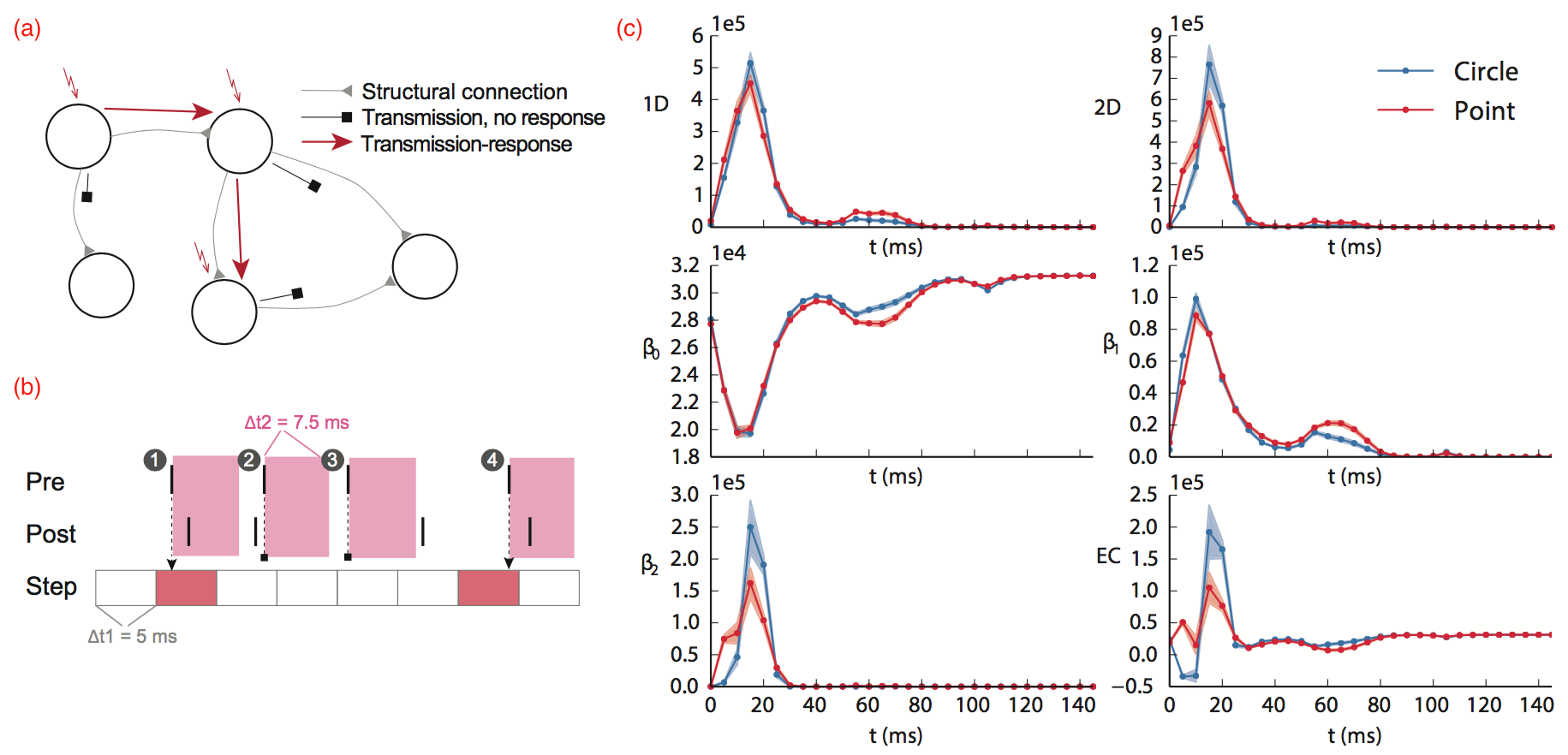

I've been working with three Stanford students—Rishi Bedi, Nishith Khandwala and Barak Oshri—to develop software for computing localized, topologically-invariant properties of connectome adjacency matrices. Instead of computing global properties of the complete connectome, i.e., the directed graph of all neurons (vertices) and synapses (edges), we compute properties of the subgraphs restricted to subvolumes that (together) cover (local-region-of-interest-width > step-size > 0) or tile (step-size = local-region-of-interest-width) the 3D volume embedding the full graph.
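The distinction between covering and tiling comes down to how the window width compares with the step size. The sketch below is only illustrative: it enumerates cubic subvolumes over a cube of side `extent`, and the function names and the restriction to cubic windows with the same step on every axis are assumptions made for this example.

```python
def window_origins(extent, width, step):
    """Lower corners of 1D windows of size `width`, placed every `step`."""
    if not 0 < step <= width <= extent:
        raise ValueError("need 0 < step <= width <= extent")
    # Number of windows that fit entirely inside [0, extent].
    count = int((extent - width) / step + 1e-9) + 1
    return [i * step for i in range(count)]

def subvolumes(extent, width, step):
    """Yield (lower_corner, upper_corner) boxes over a cube of side `extent`.

    step == width gives a tiling; step < width gives overlapping windows
    that together cover the volume.
    """
    axis = window_origins(extent, width, step)
    for x in axis:
        for y in axis:
            for z in axis:
                yield (x, y, z), (x + width, y + width, z + width)

tiling = list(subvolumes(1.0, 0.5, 0.5))     # 2 windows per axis
covering = list(subvolumes(1.0, 0.5, 0.25))  # 3 overlapping windows per axis
```

Each box would then be handed to whatever routine extracts the subgraph restricted to that subvolume.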

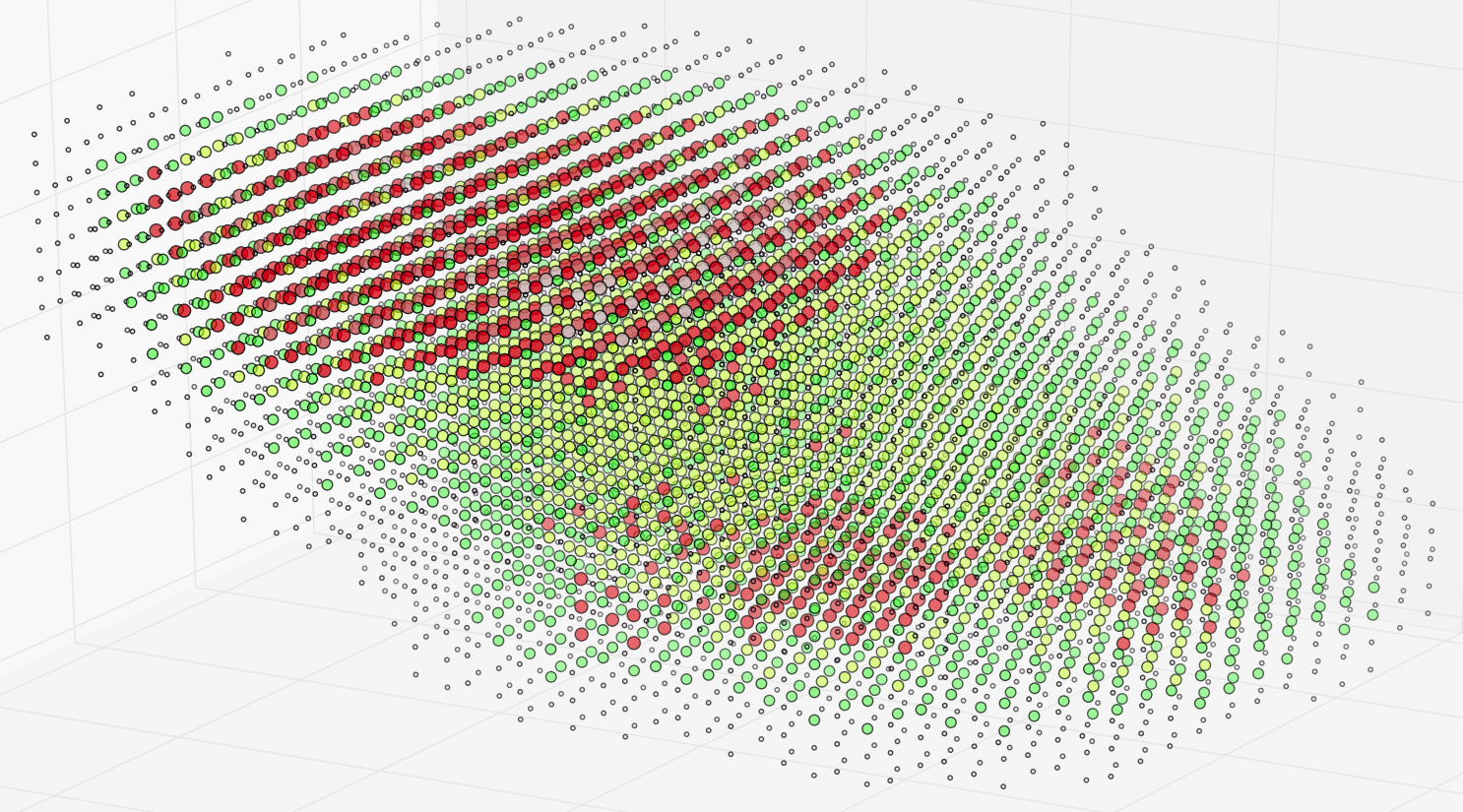

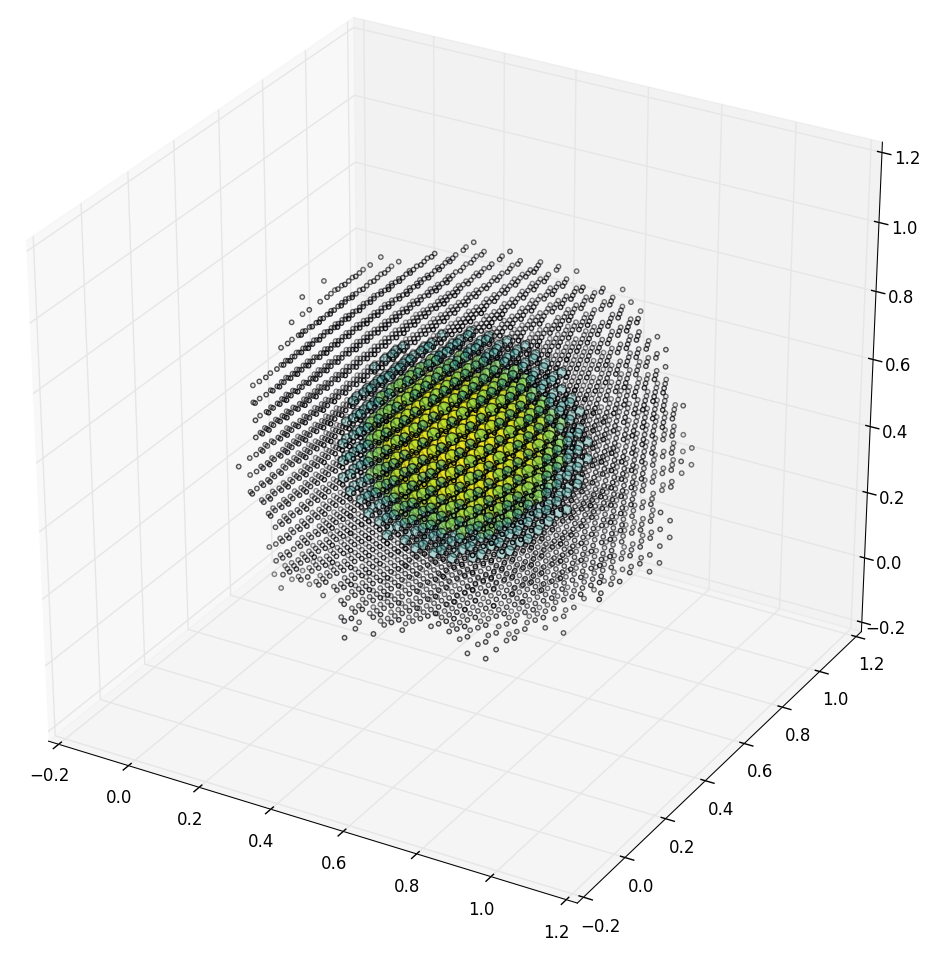

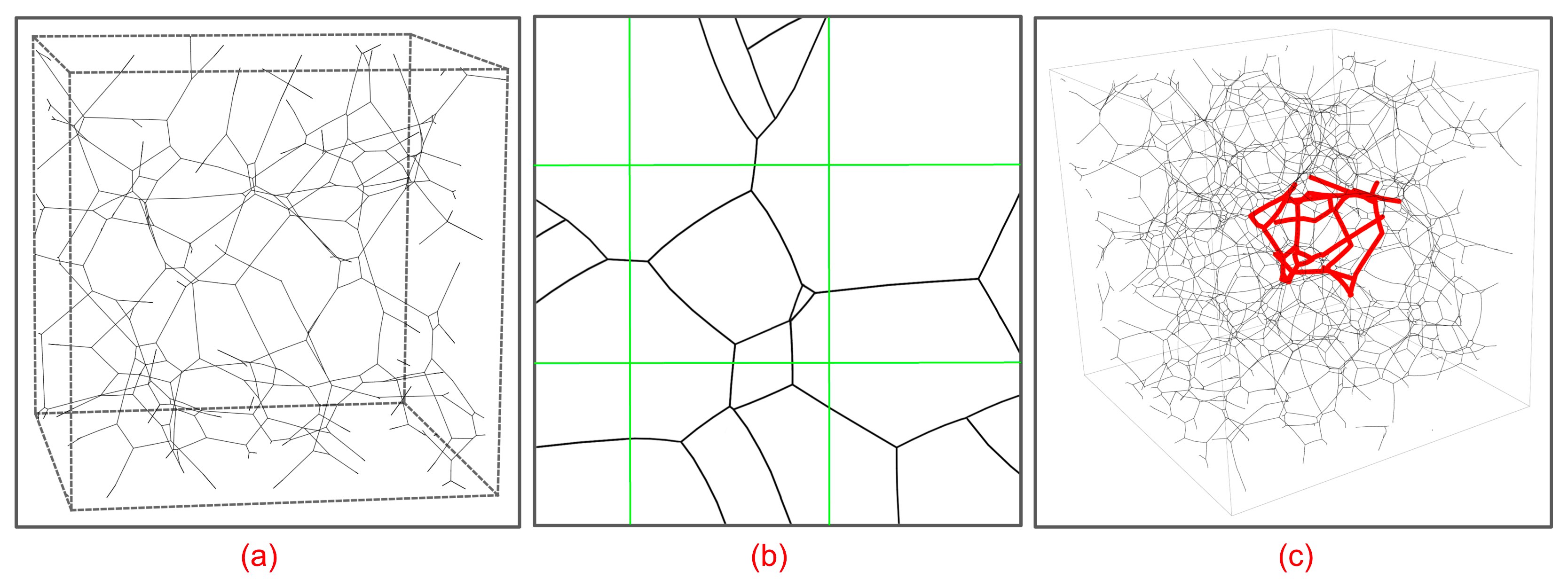

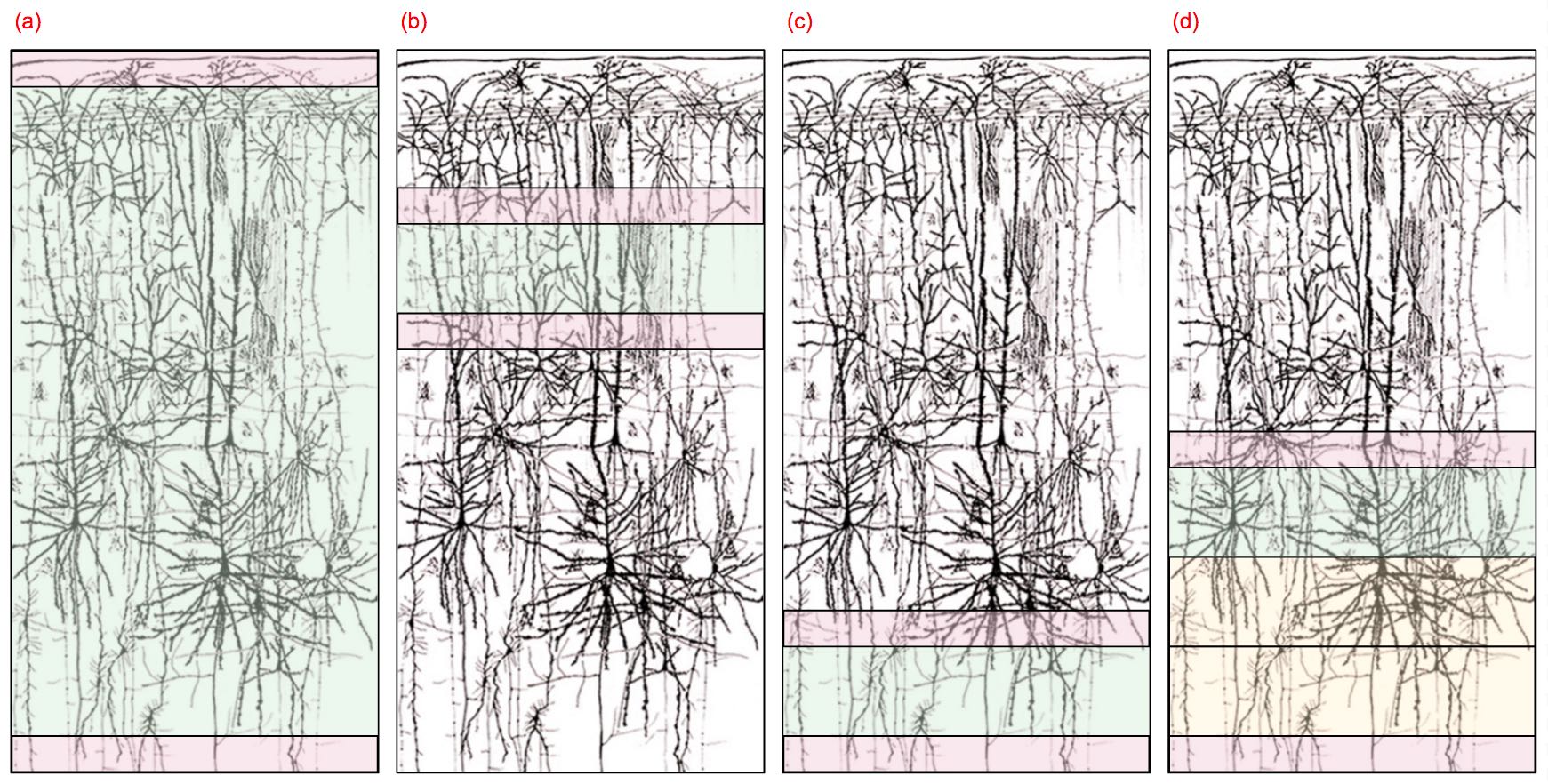

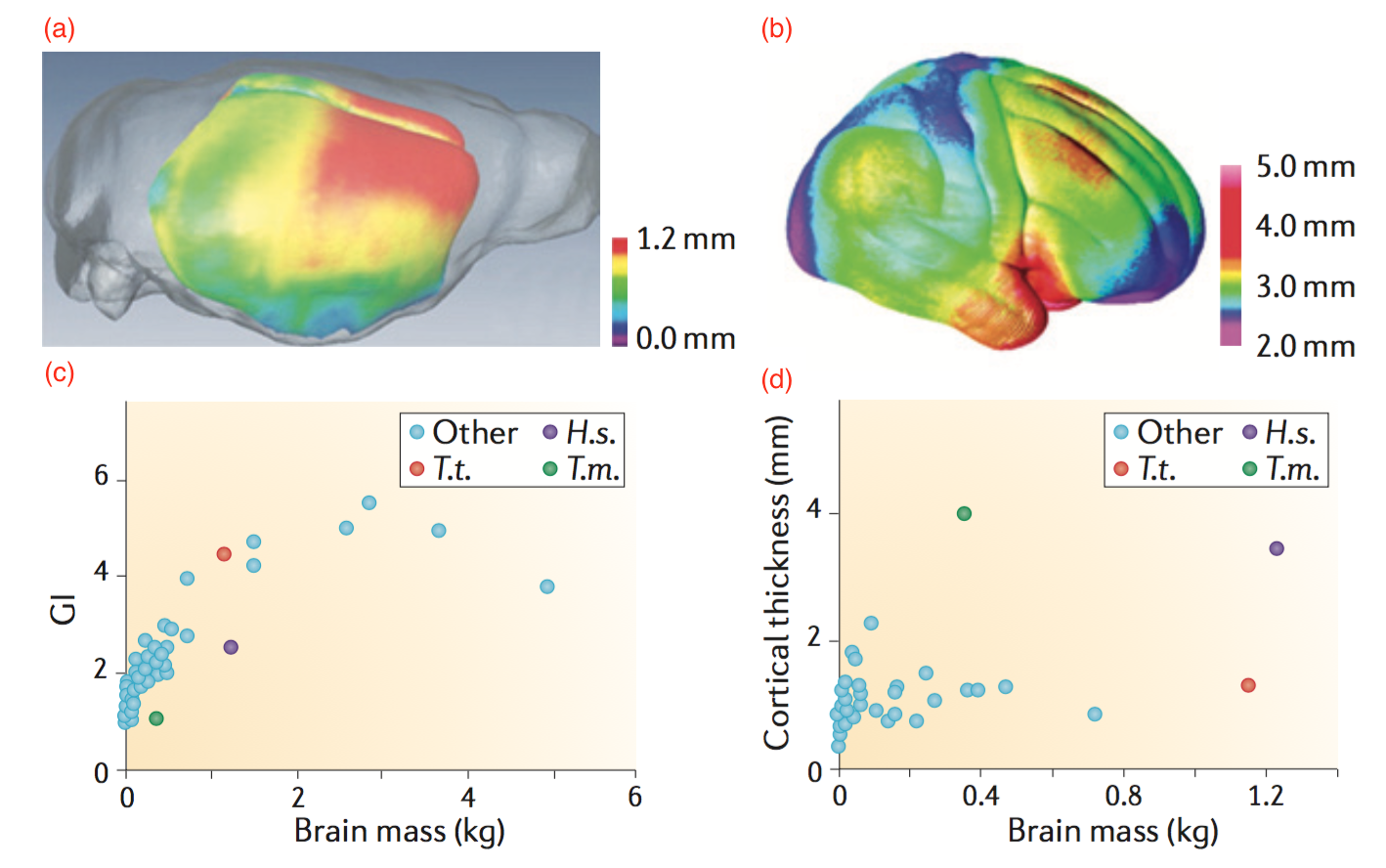

Until recently we worked with synthetic models from Costas Anastassiou's and Henry Markram's labs at AIBS and EPFL, respectively. These models may not exhibit biologically accurate circuits since they were generated probabilistically from distributions estimated by combining a wide array of published data [9, 166, 208] (see footnote 3). For example, the following plot shows a suspiciously-regular, synthetic cortical column consisting of approximately 20,000 neurons and 500,000 synapses:


Recently we've been working with the Janelia seven-column drosophila medulla dataset because (a) it is a relatively-large, well-curated dataset with high-quality reconstructions and detailed information about synapses, (b) it roughly corresponds to the same circuits we were looking at in the mouse visual system, and (c) it is more likely to provide us with a challenging test of our ability to automatically identify functionally similar regions of a large neural circuit using well-studied, spatially-mapped functional and structural designations.

If you're interested in learning about drosophila vision, these two review articles [25, 282] provide a good overview of the state of knowledge concerning the neural circuits implementing the fly visual system and related motion- and flight-control systems. Alexander Borst's website on the fly visual system at the Max Planck Institute is a great place to start for a quick introduction. The Janelia FlyEM website has lots of practical detail about how the data was collected and annotated, e.g., a set of rules used to classify neurons along with exceptions.

Takemura et al [254] review the work of several Janelia labs (including those of Lou Scheffer, Ian Meinertzhagen and Dmitri Chklovskii) analyzing the preliminary, semi-automated connectomic reconstruction of a portion of the seven-column medulla dataset, consisting of 379 neurons and 8,637 chemical synaptic contacts. This dataset continued to evolve, and a somewhat larger set, featured in the 2015 Connectome Hackathon, is available at FlyEM for download. The hackathon dataset includes 462 neurons and 53,383 (mostly chemical) synapses that, in the case of drosophila, have an unusual structure called a T-bar studded with multiple locations that make contact with post-synaptic neurons.

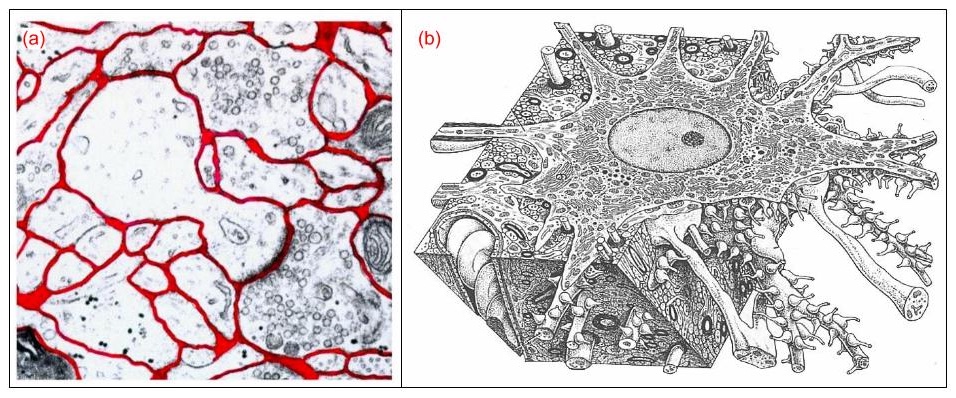

There is reason to believe that these highly-conserved regions of the drosophila brain are more stereotypical than the analogous regions of the mammal visual system. The primary components of this system consist of the lamina, medulla, lobula and lobula plate, including around 60K neurons—two thirds of which are located in the medulla, and representing a significant fraction of all drosophila neurons, usually estimated at around 150K [46]. As in mammalian cortex, the medulla is divided into several layers—ten in the case of drosophila—two of which have complicated local circuits consisting of inhibitory and excitatory interneurons.

There is a substantial literature focusing on the circuit-level function of the medulla and its neighboring visual areas. For example, Duistermars et al [62] provide interesting detail on the role of binocular interactions in flight, emphasizing the "interplay of contra-lateral inhibitory as well as excitatory circuit interactions that serve to maintain a stable optomotor equilibrium across a range of visual contrasts". Olsen and Wilson [189] provide insight into how genetic screening, optogenetic and pharmacological perturbations and modern imaging technology are being combined to map functional connectivity in the related areas and determine "causal relationships between activity and behavior".

Our initial experiments using the same code that we employed in analyzing the Anastassiou synthetic cortex data were disappointing. In the same regions where we would find subgraphs in mouse data consisting of hundreds or even thousands of neurons and ten times that many connections and exhibiting interesting topological motifs, the subgraphs in the fly data were small and structurally uninteresting. Initially, this didn't seem consistent with the analysis in Takemura et al [254]—for example:


The problem was that, although there were interesting circuits involving synapses linking axonal and dendritic arbors in the subvolumes corresponding to these graphs, the cell bodies (soma) were not located in these subvolumes. We store all the cell bodies in a KD tree and retrieve only those cell bodies that fall within a target subvolume to construct the corresponding subgraph G = { V, E }. The set of vertices V is just the set of neurons associated with the retrieved cell bodies. An edge (synapse) is in E if and only if the pre- and post-synaptic neurons are in V.
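The soma-based rule can be written down in a few lines. The sketch below is a simplified stand-in for our code: a linear scan over cell bodies replaces the KD-tree range query, and the data layout (a dict of soma locations and a list of pre/post pairs) and all names are assumptions made for illustration.

```python
def in_box(point, lower, upper):
    """True if `point` lies inside the axis-aligned box [lower, upper)."""
    return all(lo <= c < hi for c, lo, hi in zip(point, lower, upper))

def soma_subgraph(somas, synapses, lower, upper):
    """Subgraph induced by neurons whose cell bodies fall inside the box.

    somas:    dict neuron_id -> (x, y, z) cell-body location
    synapses: iterable of (pre_id, post_id) pairs
    """
    # A linear scan stands in for the KD-tree range query described above.
    V = {n for n, xyz in somas.items() if in_box(xyz, lower, upper)}
    # Keep an edge only when both endpoints were retrieved.
    E = {(pre, post) for pre, post in synapses if pre in V and post in V}
    return V, E

# Toy example: C's cell body lies outside the subvolume, so its edges drop out.
somas = {"A": (0.1, 0.1, 0.1), "B": (0.2, 0.2, 0.2), "C": (0.9, 0.9, 0.9)}
synapses = [("A", "B"), ("B", "C"), ("C", "A")]
V, E = soma_subgraph(somas, synapses, (0.0, 0.0, 0.0), (0.5, 0.5, 0.5))
```

The toy example shows why the fly subgraphs came out small: any circuit whose cell bodies sit outside the subvolume contributes nothing, regardless of where its synapses are.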

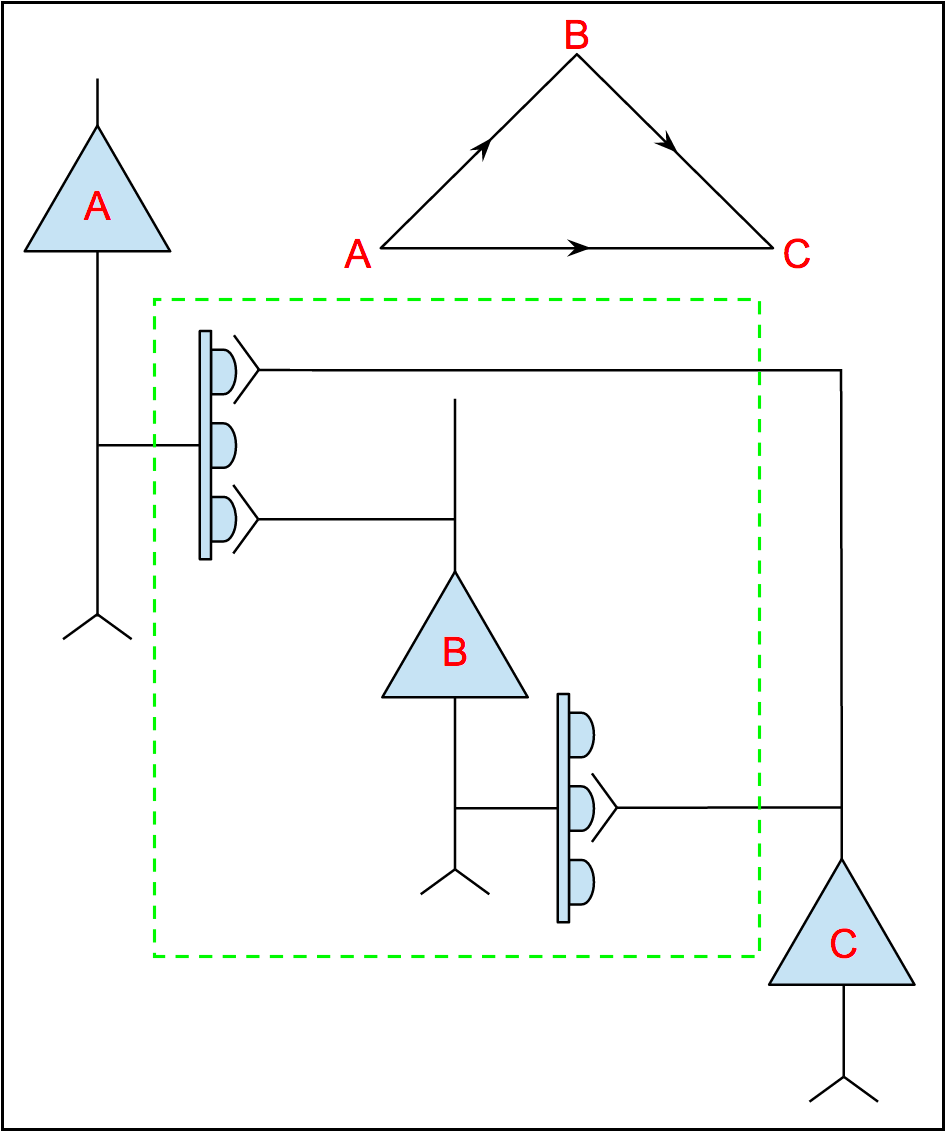

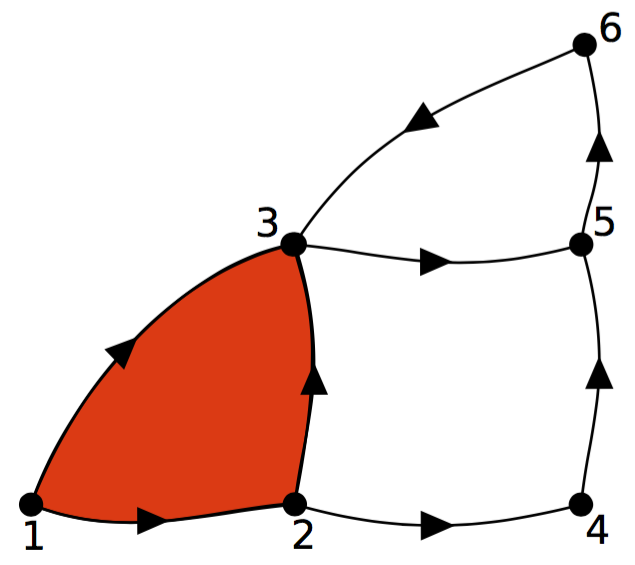

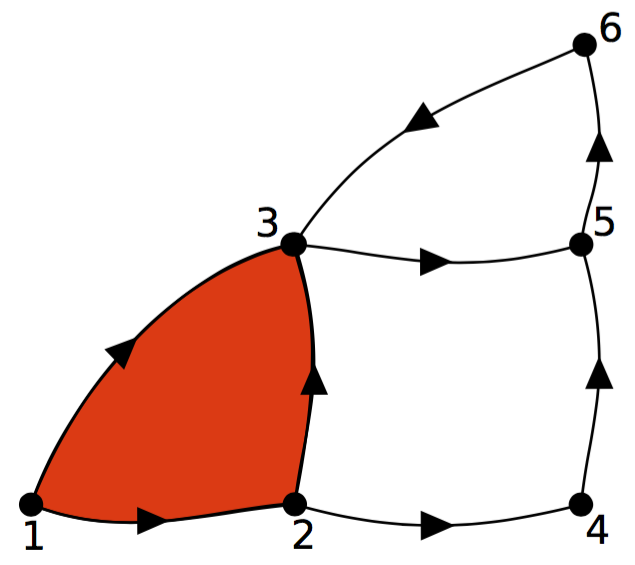

Note that, according to this rule, we can add an edge between n1 and n2 even when all the synapses connecting n1 and n2 are located outside of the subvolume. We modified the rule so that, instead of storing cell bodies, we store all the synapses indexed by location and add an edge between nPRE and nPOST irrespective of whether the location of either cell body falls within the subvolume. For example, in the figure below, the dashed green line bounds a subgraph that exhibits a 3-simplex as shown despite the fact that only B of the three cell bodies is located within the subvolume:


The graphical conventions for drawing circuit schematics shown here are not standard, but, as I found out, there really is no widely-agreed-upon standard, a state of affairs leading to a good deal of confusion. It suffices in this case to know that synapses—generally T-bars in the case of the drosophila visual system—emanate from the axonal arbor of the pre-synaptic neuron. If you are interested in the debate over conventions for neural circuit schematic drawings you might be interested in this paper by Konagurthu and Lesk [146]. If you have more-realistic examples of 3-simplices, please share.
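For comparison with the soma-based rule, here is a minimal sketch of the synapse-indexed rule, in which an edge is kept whenever the synapse itself lies in the subvolume. The tuple layout `(pre, post, xyz)` and the function names are hypothetical, and a linear scan again stands in for a spatial index.

```python
def in_box(point, lower, upper):
    """True if `point` lies inside the axis-aligned box [lower, upper)."""
    return all(lo <= c < hi for c, lo, hi in zip(point, lower, upper))

def synapse_subgraph(synapses, lower, upper):
    """Subgraph defined by the synapses located inside the box.

    synapses: iterable of (pre_id, post_id, (x, y, z)), where the coordinates
    give the location of the synapse, not of either cell body.
    """
    V, E = set(), set()
    for pre, post, xyz in synapses:
        if in_box(xyz, lower, upper):
            E.add((pre, post))
            V.update((pre, post))
    return V, E

# Toy example: three synapses inside the box, one far outside it.
synapses = [("A", "B", (0.10, 0.10, 0.10)),
            ("B", "C", (0.20, 0.10, 0.10)),
            ("A", "C", (0.15, 0.20, 0.10)),
            ("C", "D", (0.90, 0.90, 0.90))]
V, E = synapse_subgraph(synapses, (0.0, 0.0, 0.0), (0.5, 0.5, 0.5))
```

Here A, B and C all enter the subgraph through their synapses, wherever their cell bodies happen to be.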

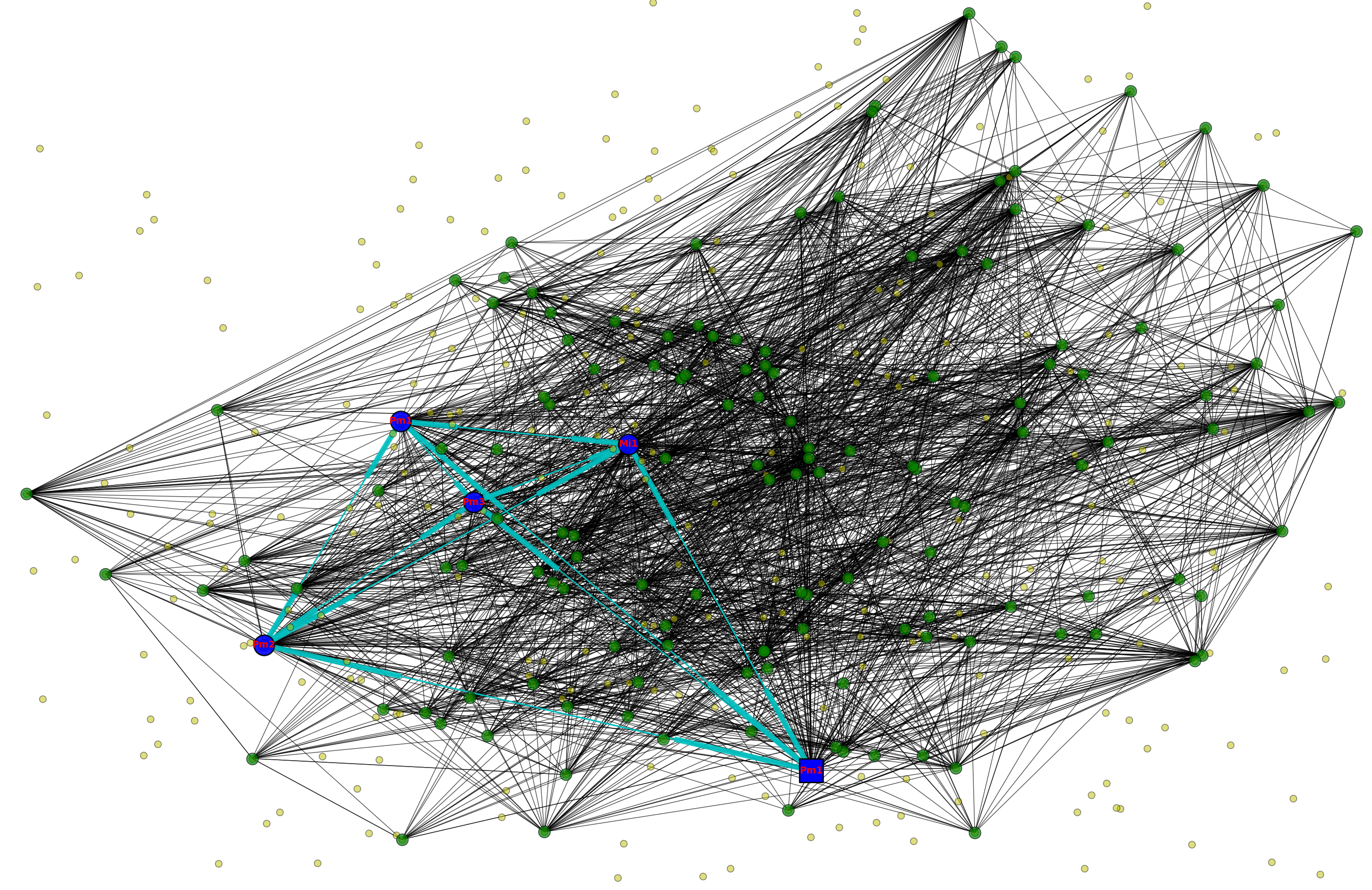

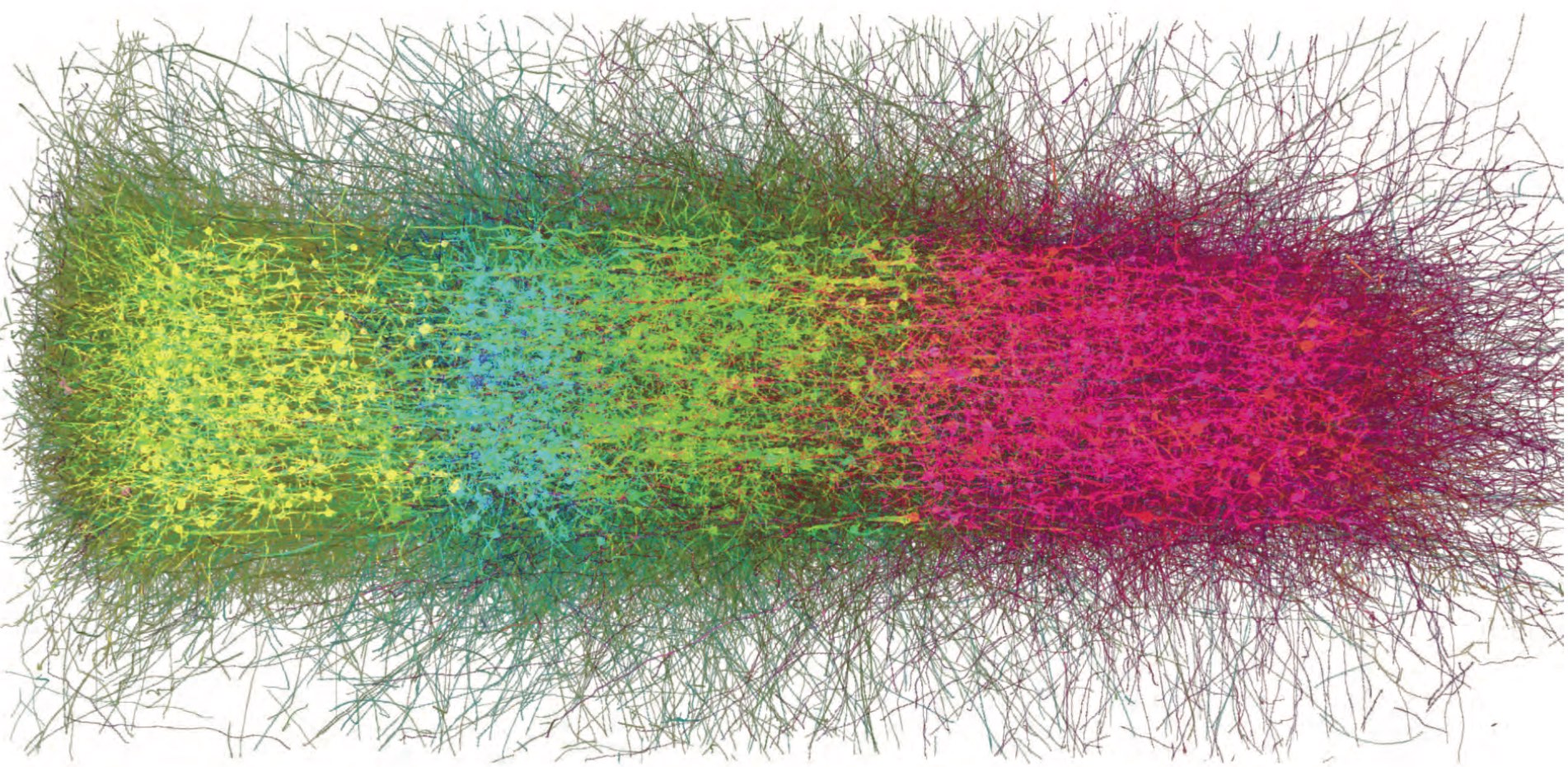

With this change in our definition of what constitutes an edge in a subgraph embedded in a given subvolume, we obtain a rich set of features that we can use in attempting to segment a connectome into functionally different regions. Here is a simple example in which we divide a unit cube enclosing the scaled coordinates of 50,000 synaptic (T-bar) loci into 1,000 subvolumes and, for each of the 1,000 associated subgraphs, compute a vector of topological features including those described in Dlotko et al [60] and cluster the vectors to produce the following graphic:

|
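A stripped-down version of the binning and feature-extraction step might look like the following. It assumes synapse coordinates already scaled to [0, 1] on each axis, and it uses a placeholder feature vector (vertex count, edge count, number of directed 2-simplices) rather than the full set of invariants from Dlotko et al [60]. The clustering step is omitted, and all names are hypothetical.

```python
from collections import defaultdict

def cell_index(xyz, n):
    """Map a point in the unit cube to its (i, j, k) cell in an n x n x n grid."""
    return tuple(min(int(c * n), n - 1) for c in xyz)

def grid_subgraphs(synapses, n=10):
    """Group (pre, post, xyz) synapses by grid cell: cell -> set of edges."""
    cells = defaultdict(set)
    for pre, post, xyz in synapses:
        cells[cell_index(xyz, n)].add((pre, post))
    return cells

def features(edges):
    """Placeholder features: (#vertices, #edges, #directed 2-simplices)."""
    vertices = {v for e in edges for v in e}
    # A directed 2-simplex is an ordered triple with a->b, b->c and a->c.
    triangles = sum(1 for a, b in edges for c in vertices
                    if (b, c) in edges and (a, c) in edges)
    return (len(vertices), len(edges), triangles)

# Toy data: a feed-forward triangle in one corner, a lone edge in the other.
synapses = [("A", "B", (0.05, 0.05, 0.05)),
            ("B", "C", (0.06, 0.05, 0.05)),
            ("A", "C", (0.07, 0.05, 0.05)),
            ("C", "D", (0.95, 0.95, 0.95))]
cells = grid_subgraphs(synapses, n=10)
vectors = {cell: features(edges) for cell, edges in cells.items()}
```

With n = 10 the grid has 1,000 cells, matching the example in the text; the resulting vectors are what one would pass to a clustering routine.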

Miscellaneous loose ends: Look at the paper by Niepert et al [186] on a convolutional method for extracting and analyzing locally-connected regions of large graphs and learning convolutional neural networks to classify such graphs according to various criteria. Read Ehrlich and Schüffny [65] on the touted novelty and relevance of published work on biologically-plausible network motifs.

June 1, 2016

Thanks to David Sussillo for the discussion at lunch today on inferring dynamical systems from aligned neural and behavioral data, and for the references to related work he recommended. Recall that David mentioned Omri Barak as someone else doing interesting related work in this area. Also remember that David's slides and the audio for his talk in class are available here and the class presentations from Saul and Andy are also on the course calendar page here if you want to review the material on C. elegans. The BibTeX entries are in footnote 4.

May 31, 2016

Here is some background information that I meant to send around earlier:

From talking with Clay Reid, Michael Buice and Jerome Lecoq, it looks like it will be another year and a half before we have aligned functional (CI) and structural (EM) data. There will likely be more experiments, variation in stimuli and annotation than we initially hoped for, but it will arrive later than expected. The bonus for waiting will include visualization tools, extensive annotation and careful curation. Depending on Neuromancer's priorities, we may be able to devote some cycles to expediting the image-processing requirements.

From talking with this same crew, I learned that the first installment of CI data is now available. The yield — number of neurons expressing the indicator — and coverage — number of neurons actually imaged — are disappointing, but will improve with Jerome's new microscope. You can learn most everything I know about the soon-to-be-released data from my notes following the Neuromancer rendezvous in Seattle last month, which you can find here if you want more detail.

When we met last week, we agreed to do the following exercise: the CI data is a 4D matrix s.t., for every x, y, z and t, we have a scalar whose value is an estimate of fluorescence at location (x, y, z) and time t. In fact, we'll have a raster representation with individual recordings for each neuron, but for now, think of the data as a 4D volume or, if you prefer, a 3D movie. Any sub-volume of the data corresponding to a four-dimensional hyper-rectangle represents the activity of a subset of the recorded neurons in carrying out a computation.
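To make the exercise concrete, the sketch below builds a small synthetic 4D volume and pulls out one hyper-rectangle as a multivariate time series, with one row per time step and one column per voxel. Nested lists stand in for a real array type, the index order `data[x][y][z][t]` is an assumption, and the fluorescence values are made up so that each entry encodes its own coordinates.

```python
def make_volume(nx, ny, nz, nt, f):
    """Build data[x][y][z][t] = f(x, y, z, t) as nested lists."""
    return [[[[f(x, y, z, t) for t in range(nt)] for z in range(nz)]
             for y in range(ny)] for x in range(nx)]

def subvolume_series(data, xs, ys, zs, ts):
    """Return a 4D hyper-rectangle as a multivariate time series.

    xs, ys, zs, ts are (start, stop) half-open index ranges. The result has
    one row per time step and one column per voxel in the spatial box.
    """
    voxels = [(x, y, z) for x in range(*xs)
              for y in range(*ys) for z in range(*zs)]
    return [[data[x][y][z][t] for (x, y, z) in voxels] for t in range(*ts)]

# Synthetic values that encode their own (x, y, z, t) position.
data = make_volume(4, 4, 4, 5, lambda x, y, z, t: 1000 * x + 100 * y + 10 * z + t)
series = subvolume_series(data, xs=(1, 3), ys=(0, 2), zs=(2, 3), ts=(1, 4))
```

The rows of `series` are what the later discussion treats as samples of a multivariate time series for that sub-volume.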

The only thing we know about the physical location of these neurons (in the current AIBS experiments GECIs are only expressed in the soma) is that if the 3D coordinates of the traces are close to one another then the neurons or at least the cell bodies are close to one another in the tissue sample. Without the morphological, genomic and proteomic information we will eventually get from the Allen Institute, we don't know a neuron's cell type, the circuits it is a part of, or the nature of its connections with other neurons (see footnote 5). What might we be able to infer about a sub-volume?

We could easily be looking at a sub-volume that represents but a fragment of anything one might recognize as a complete circuit. For example, in our computer-chip analogy, we might be looking at the part of a multiplexer that performs the function of routing the selected inputs but is completely missing the part that does the address decoding. Of course this raises two questions: (i) "Could we infer the function of the multiplexer if we were lucky enough to have selected the entire multiplexer circuit?" and (ii) "What's wrong with decomposing the circuit into separate addressing and routing circuits?"

I asked Semon and David to think about the problem as an image or video segmentation problem, and, in particular, come up with operators for performing the functional analog of boundary prediction. With the usual disingenuous warning that I didn't think very carefully about this, here are some observations — in the following, assume we treat each sub-volume as a multivariate time series. During our lunch conversation, we briefly discussed the idea of interestingness operators and I mentioned that the only coherent instantiations of this notion I know of make use of ideas from information theory, e.g., relative entropy also known as Kullback-Leibler divergence or some variant of Kolmogorov complexity — see Ahmed and Mandic [3].
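As one very simple instance of an information-theoretic interestingness operator, the sketch below compares the distribution of fluorescence values in two sub-volumes using relative entropy. The fixed-width binning, the add-one smoothing and the toy traces are all assumptions chosen to keep the example short, not a recommendation.

```python
import math

def histogram(values, bins, lo, hi):
    """Normalized histogram with add-one smoothing so no bin is empty."""
    counts = [1.0] * bins
    for v in values:
        i = int((v - lo) / (hi - lo) * bins)
        counts[min(max(i, 0), bins - 1)] += 1.0  # clamp out-of-range values
    total = sum(counts)
    return [c / total for c in counts]

def kl_divergence(p, q):
    """Relative entropy D(p || q) in bits for two discrete distributions."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Made-up fluorescence samples from a quiet and an active sub-volume.
quiet = [0.10, 0.12, 0.11, 0.09, 0.10, 0.13, 0.10, 0.11]
active = [0.10, 0.90, 0.20, 0.80, 0.15, 0.95, 0.10, 0.85]

p = histogram(active, bins=4, lo=0.0, hi=1.0)
q = histogram(quiet, bins=4, lo=0.0, hi=1.0)
score = kl_divergence(p, q)
```

A boundary-prediction operator in this spirit would compute such a score between neighboring sub-volumes and flag locations where it is large. Note that the divergence is not symmetric, so the order of the arguments matters.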

The challenge in applying such ideas in practice generally comes down to finding a tractable algorithm. This often involves using tools from compressive sensing — see Yuriy Mishchenko [177], variants of Lempel-Ziv compression algorithms — see Ke and Tong [135], and sparse-coding and blind-source separation such as projection pursuit — see Aapo Hyvärinen [118]. I've also been thinking about indirect methods of measuring complexity that involve first fitting a general purpose RNN — perhaps a spiking neural network model like liquid-state machines [159] — and then estimating its complexity.
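To give a feel for the compression-based approach, here is a minimal sketch that thresholds a trace into a binary string and counts the phrases in its LZ78 parsing as a crude complexity estimate. The choice of LZ78 rather than some other Lempel-Ziv variant is an assumption made here for brevity and is not claimed to match the method in Ke and Tong [135]; the function names are hypothetical.

```python
def binarize(trace, threshold):
    """Turn a real-valued trace into a string of '0' and '1' symbols."""
    return "".join("1" if v > threshold else "0" for v in trace)

def lz78_phrase_count(symbols):
    """Number of phrases in the LZ78 parsing of a sequence of symbols.

    Each phrase is the shortest prefix of the remaining input that has not
    been seen as a phrase before. More phrases suggests higher complexity.
    """
    phrases = set()
    current = ()
    count = 0
    for s in symbols:
        current += (s,)
        if current not in phrases:
            phrases.add(current)
            count += 1
            current = ()
    if current:  # leftover suffix that repeats an earlier phrase
        count += 1
    return count

constant = lz78_phrase_count("aaaaaaaa")   # parsed as a | aa | aaa | aa
periodic = lz78_phrase_count("abababab")   # parsed as a | b | ab | aba | b
distinct = lz78_phrase_count("abcdefgh")   # every symbol is a new phrase
```

For sequences this short the counts are only suggestive; the estimate becomes meaningful for long recordings, usually after normalizing by sequence length.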

May 29, 2016

If your final project has hit a terminal snag and you despair of reviving it in time to meet the submission deadline, here's an alternative in the form of a research review paper that you might want to consider: Periodic discoveries of new types of neural structure and function continue to disrupt the field of neuroscience, threatening current theories that attempt to account for neural function. The project involves looking carefully at one such potentially disruptive discovery.

Recently we learned about two mechanisms hypothesized to be relevant to both learning and neurological disorders including autism, Alzheimer's and Parkinson's. I'm referring to recent discoveries that the brain has its own immune system involving microglia and perhaps astrocytes, and that the neuritic plaques—extracellular deposits of amyloid β—and neurofibrillary tangles symptomatic of Alzheimer's and early onset senility might also be implicated in fending off infection.

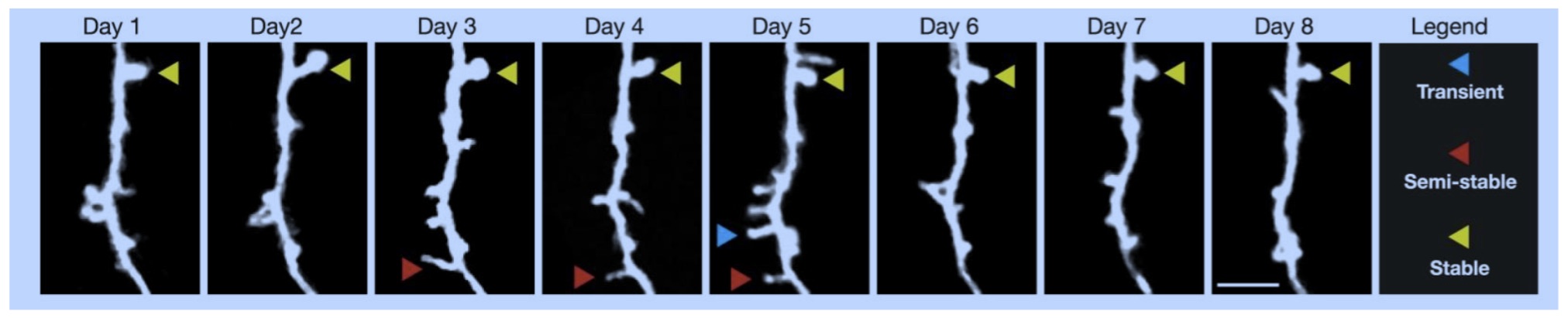

The evidence is still inconclusive but there are some basic observations we can make about both microglia and amyloid β and I'd like you to try to sort out what we know from what has been published and speculated. Some believe that microglia play an important role in managing dendritic spine growth and hence in development and learning and therefore may have something to do with autism.

Specifically, I'd like you to search the literature concerning the dual roles of both microglia and amyloid β. Your project is to select and read carefully a few particularly relevant papers, summarize the most credible current theories and findings, and—most directly relevant to CS379C—reflect on to what degree we may have to account for these factors in a computational model of neural function in healthy organisms.

I've included below two recent papers—one for the findings concerning the negative role of microglia and the other concerning the potentially positive role of amyloid β that have made the headlines and are generally acknowledged by scientists to be credible if only preliminary at this stage:

Amyloid-β peptide protects against microbial infection in mouse and worm models of Alzheimer's disease. Science Translational Medicine 25 May 2016: Vol. 8, Issue 340, Pages 340-372. (LINK)

Microglia mediate early synapse loss in Alzheimer mouse models. Science 31 Mar 2016: Issue 000, Pages 000-000. The Rogue Immune Cells That Wreck the Brain (LINK) Alzheimer's may be caused by haywire immune system eating brain connections (LINK)

You can also find an entry in my class course notes (here) concerning microglia and their potential role in addiction and congenital autism. You might also give some thought to whether microglia, which appear to be ubiquitous throughout the central nervous system, might provide some delivery vector or reporting mechanism for whole-brain recording. One potential problem is that they move around a lot, and so, while a scanning two-photon excitation and fluorescent-labeling method might enable dense reporting of local field potentials, the reporting locations might be different on each scan.

May 27, 2016

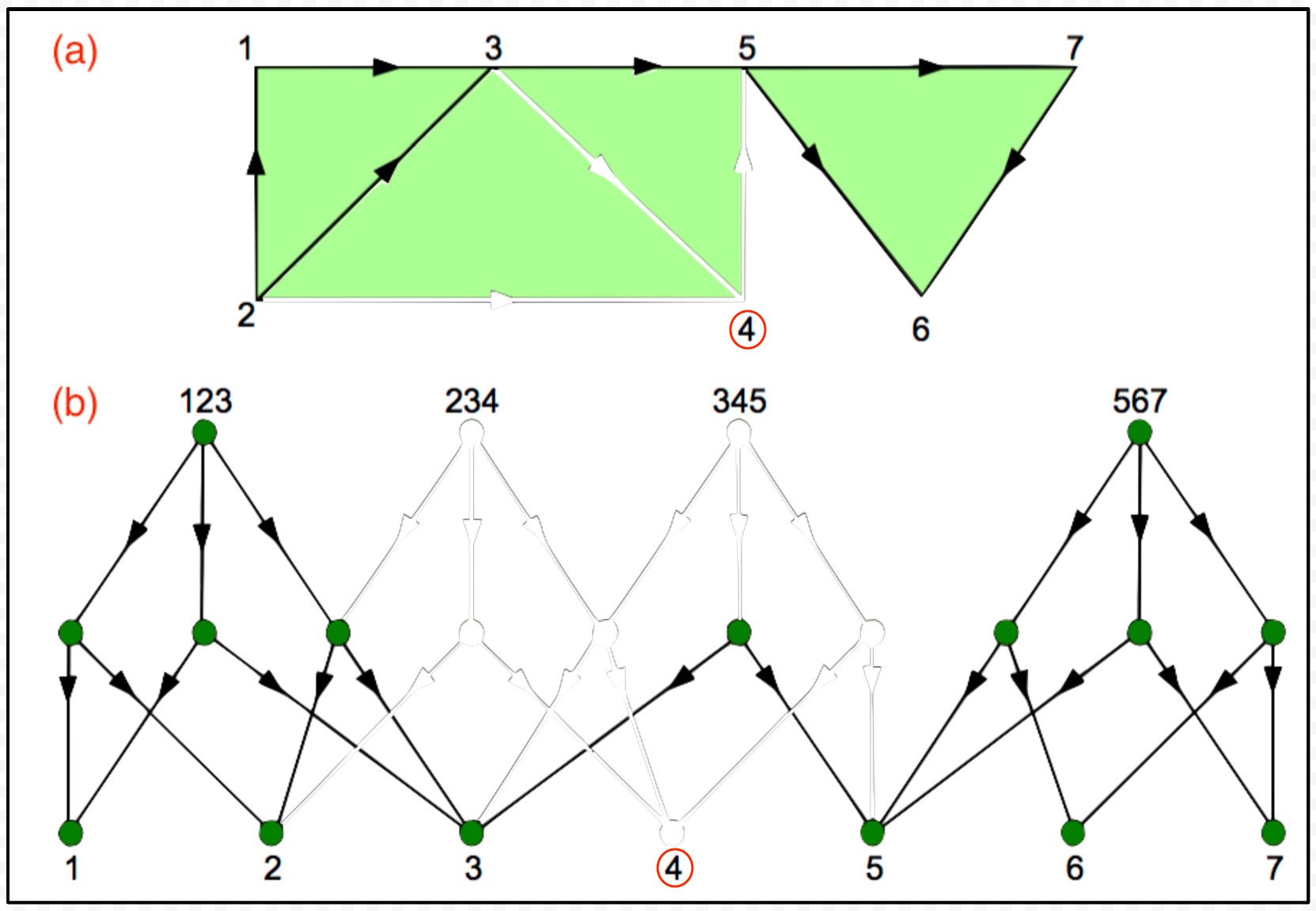

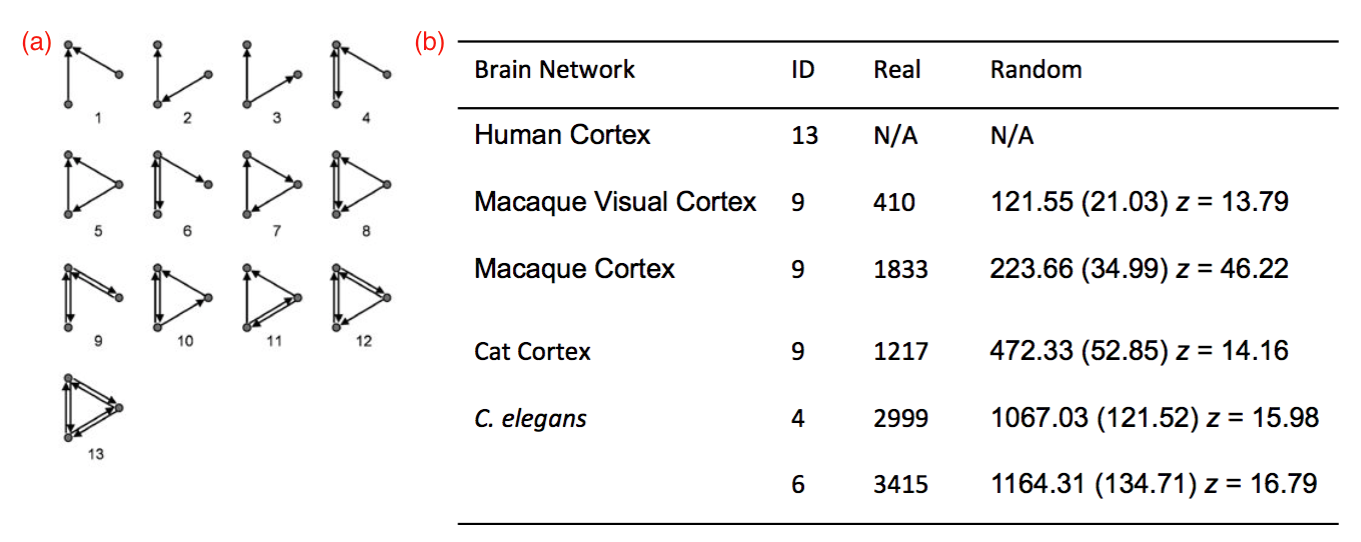

We discussed earlier how one might parallelize Algorithm 1 listed in the Supplementary Information section of [60] that generates the Hasse diagram of the directed flag complex associated with a given directed graph representing the connectome of a microcircuit. In this entry, we consider how to parallelize a multi-scale convolutional variant of the algorithm, and, in particular, how to compute feature-vectors consisting of topological invariants from local subgraph/subvolume regions of the microcircuit coordinate space so as to expedite the basic sliding-window algorithm used in applying linear filters and implementing convolution operators. We mention in passing how one could add a pooling layer that would smooth feature vectors over neighboring subvolumes, making it easier to segment large tissues into meaningful functional / computational components.


Figure 1: An illustration of the basic mathematical entities involved in computing topological invariants, including (a) the input corresponding to the directed graph of the microcircuit connectome, and (b) the output corresponding to the Hasse diagram representing the directed flag complex of the input graph. The graphic was adapted from Figure S5 from Dlotko et al [60].

I found the direction of the edges in the above depiction of the Hasse diagram confusing for two reasons: First, the edge 123 → 12 is awkwardly understood as "the 2-simplex 123 has the 1-simplex 12 as a face", while 12 → 123 is naturally interpreted as "the 1-simplex 12 is a face of the 2-simplex 123". Second, the latter is consistent with the level-by-level construction of the Hasse diagram as described in Algorithm 1, starting with the smallest simplices (the vertices of the graph) and working toward larger ones. In any case, I'm going to assume that the edge directions are reversed in the following discussion of how the Hasse diagram can be reused to expedite multi-scale convolutional persistent homology. If this is not true in the current implementation, it can easily be remedied.

Let G = { V, E } be a directed graph with vertices V and edges E, and let H = constructHasseDiagram(G) be the Hasse diagram for the graph G representing the microcircuit connectome. Create a spill-tree T to enable fast (parallel) retrieval of subsets of the 3D-indexed vertices of G; see [154, 153, 155] and the slide-deck presentation by Dafna Britten (PDF) for technical details. For the purpose of this discussion, let U = subsetSpillTree(T, V, Xi, Yi, Zi, Width, Height, Depth) return the subset U of all the vertices in V whose coordinates are contained within the 3D region of size Width × Height × Depth located at ⟨ Xi, Yi, Zi ⟩.
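To make the expected behavior of subsetSpillTree concrete, here is a minimal brute-force sketch in Python. It does not implement a spill-tree: a linear scan stands in for the tree lookup. The function name `subset_box`, the dictionary layout of the coordinates, and the half-open treatment of the region boundaries are all assumptions made for illustration.

```python
from typing import Dict, Hashable, Set, Tuple

Point = Tuple[float, float, float]


def subset_box(coords: Dict[Hashable, Point],
               origin: Point,
               size: Point) -> Set[Hashable]:
    """Return the vertices whose coordinates lie inside an axis-aligned box.

    The box spans [origin, origin + size) on each axis; the half-open
    convention is an assumption. A linear scan replaces the spill-tree query.
    """
    return {
        v for v, p in coords.items()
        if all(o <= c < o + s for c, o, s in zip(p, origin, size))
    }


# Hypothetical toy data: three vertices with 3D coordinates.
coords = {1: (0.0, 0.0, 0.0), 2: (1.0, 1.0, 1.0), 3: (5.0, 0.5, 0.5)}
U = subset_box(coords, origin=(0.0, 0.0, 0.0), size=(2.0, 2.0, 2.0))
```

A real implementation would replace the scan with a spatial index so that each query touches only nearby vertices.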

Let { BN, EC } = computeBettiEuler(U, H) compute the Betti numbers BN and Euler characteristic EC for the subgraph GU defined by U ⊆ V together with edges EU ⊆ E such that an edge ui → uj ∈ E belongs to EU if and only if ui ∈ U and uj ∈ U. The function computeBettiEuler works by constructing the Hasse diagram HU for GU such that calls to the function computeHomology(HU) return the Betti numbers BN and Euler characteristic EC for HU as is done in Pawel's code. We construct HU from H as follows, assuming, as explained above, that the directed edges in H are reversed, i.e., edges in H point from k-simplices to (k + 1)-simplices.


Figure 2: In the above graphic, V is { 1, 2, 3, 4, 5, 6, 7 } and U is V − { 4 } = { 1, 2, 3, 5, 6, 7 }. The graphic shows (a) GU the subgraph of G defined by restricting the set of vertices to U, and (b) the Hasse diagram HU corresponding to GU with the parts of H not included grayed out to illustrate the property of downward inclusiveness, i.e., the Hasse diagram of any subgraph of G is a subgraph of H.
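The downward-inclusiveness property can be illustrated with a short Python sketch. It assumes each simplex is stored as a tuple of vertex labels, which is an assumption about the representation rather than a description of the existing code, and it uses a hypothetical four-vertex toy graph rather than the graph in Figure 2. It computes only the Euler characteristic, as an alternating sum of simplex counts; Betti numbers would require the boundary matrices.

```python
from typing import Iterable, List, Set, Tuple

Simplex = Tuple[int, ...]


def restrict_simplices(simplices: Iterable[Simplex], U: Set[int]) -> List[Simplex]:
    """Keep exactly the simplices of H whose vertices all lie in U (these form H_U)."""
    return [s for s in simplices if all(v in U for v in s)]


def euler_characteristic(simplices: Iterable[Simplex]) -> int:
    """Alternating sum over dimensions: a k-simplex (k + 1 vertices) contributes (-1)^k."""
    return sum((-1) ** (len(s) - 1) for s in simplices)


# Hypothetical toy graph with edges 1->2, 1->3, 2->3, 3->4 and one 2-simplex (1, 2, 3).
H = [(1,), (2,), (3,), (4,), (1, 2), (1, 3), (2, 3), (3, 4), (1, 2, 3)]

H_U = restrict_simplices(H, {1, 2, 4})   # drops every simplex containing vertex 3
chi_full = euler_characteristic(H)       # 4 - 4 + 1
chi_local = euler_characteristic(H_U)    # 3 - 1
```

Filtering like this scans all of H for every window, which is what the traversal described in the following paragraphs is meant to avoid.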

For a region of size Width × Height × Depth and a particular Stride (assumed here for simplicity to be the same along all three axes) we will likely have a relatively large number of subregions and hence subgraphs for which we want to compute topological-invariant feature vectors6. Assuming we don't want to construct a new Hasse diagram for each HU such that U ⊂ V and wish to parallelize the convolution algorithm by computing topological invariants for several subgraphs in parallel, there are a number of options that come to mind. In the following, we explore one such option that requires little modification of existing code and requires that we compute only H. With some abuse of notation, each vertex u ∈ U is a 0-simplex and hence also a vertex of HU.
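To give a sense of how many subregions a sliding window produces, the following Python sketch enumerates window origins for a given stride. The function name `window_origins`, the integer coordinates, the volume anchored at the origin, and the choice to keep only windows that fit entirely inside the volume are assumptions for illustration.

```python
from itertools import product
from typing import Iterator, Tuple

Triple = Tuple[int, int, int]


def window_origins(extent: Triple, window: Triple, stride: int) -> Iterator[Triple]:
    """Yield the origin of every window that fits entirely inside the volume.

    Along each axis the origins are 0, stride, 2 * stride, ... up to extent - window.
    """
    ranges = [range(0, e - w + 1, stride) for e, w in zip(extent, window)]
    return product(*ranges)


# Hypothetical sizes: a 10 x 10 x 4 volume, 4 x 4 x 4 windows, stride 2.
origins = list(window_origins((10, 10, 4), (4, 4, 4), 2))
num_windows = len(origins)  # 4 positions in x, 4 in y, 1 in z
```

Each origin defines an independent subregion, so the per-window computations can be distributed across workers without coordination, provided H is shared read-only.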

Actually, we don't have to explicitly construct HU in order to compute feature vectors consisting of topological invariants; rather, we simply traverse the vertices of H that would have been present in HU had we constructed it. Specifically, we modify computeHomology so that it can operate directly on H given a list Ustart indicating which vertices of H to start at in order to (virtually) traverse HU and another list of vertices Ustop indicating when to stop. In the following, H is simply treated as a directed acyclic graph—vertices corresponding to k-simplices with outgoing edges pointing to vertices corresponding to (k + 1)-simplices—with multiple roots corresponding to the vertices in V. Ustart is just U ⊂ V. Ustop is obtained by performing a depth first search (DFS) of H starting from U with the following variation7:

Begin by setting Ustop to be the empty set { }. To distinguish the elements u ∈ U from other elements of H not in U, we will use the variable v for the latter. The first time the DFS visits a vertex v, add v to Ustop, set nv to 1 and then backtrack. We use nv to keep track of the total number of times we've visited v during the DFS. Note that the in-degree dv of a vertex v ∈ H is equal to 1 plus its depth d in H, and ∀ u ∈ U, du = 1. On each subsequent visit to a vertex v, increment nv by 1. If nv < dv, then backtrack even if there are edges out of v. Otherwise, it must be that nv = dv, and therefore it follows that v ∈ HU, and so remove v from Ustop and continue the DFS traversal if there are edges out of v. If v ∈ HU the DFS is guaranteed to visit v exactly dv times.
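The visit-counting traversal can be sketched in Python as follows. This is an illustration under stated assumptions, not the existing implementation: simplices are represented as tuples of vertex labels, H is stored as a map from each simplex to the (k + 1)-simplices it is a face of, and an explicit stack replaces the recursive DFS. The names `build_cofaces` and `local_traversal` are hypothetical. For a simplex of dimension one or higher, the in-degree dv equals its number of vertices, so `len(c)` serves as dv.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Simplex = Tuple[int, ...]


def build_cofaces(simplices: Iterable[Simplex]) -> Dict[Simplex, List[Simplex]]:
    """Edges of H, pointing from each k-simplex to the (k + 1)-simplices it is a face of."""
    cofaces: Dict[Simplex, List[Simplex]] = defaultdict(list)
    for s in simplices:
        if len(s) > 1:
            for i in range(len(s)):
                cofaces[s[:i] + s[i + 1:]].append(s)  # drop vertex i to get a face
    return cofaces


def local_traversal(cofaces: Dict[Simplex, List[Simplex]],
                    U: Set[int]) -> Tuple[Set[Simplex], Set[Simplex]]:
    """Return (simplices of H_U, U_stop) using visit counts n_v against in-degrees d_v."""
    visits: Dict[Simplex, int] = defaultdict(int)
    inside: Set[Simplex] = {(u,) for u in U}   # U_start: the 0-simplices in U
    u_stop: Set[Simplex] = set()
    stack = list(inside)
    while stack:
        s = stack.pop()
        for c in cofaces.get(s, ()):
            visits[c] += 1
            if visits[c] == 1:
                u_stop.add(c)                  # first visit: tentatively a stop vertex
            if visits[c] == len(c):            # n_v == d_v: every face of c is in H_U
                u_stop.discard(c)
                inside.add(c)
                stack.append(c)                # only now continue past c
    return inside, u_stop


# Hypothetical toy graph with edges 1->2, 1->3, 2->3, 3->4 and one 2-simplex (1, 2, 3).
H = [(1,), (2,), (3,), (4,), (1, 2), (1, 3), (2, 3), (3, 4), (1, 2, 3)]
inside, u_stop = local_traversal(build_cofaces(H), {1, 2})
```

Because a simplex is expanded only once its count reaches its in-degree, the traversal never descends below a stop vertex, and the work done is proportional to the size of HU plus its immediate frontier in H.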

Now it should be relatively easy to modify computeHomology so as to implement a version computeLocalHomology such that computeLocalHomology(H, Ustart, Ustop) = computeHomology(HU). However, it would be almost as easy to simply overload computeHomology so that computeHomology(H) performs exactly as the original and computeHomology(H, U) directly incorporates the functionality described above for restricting attention to HU which is guaranteed to be a subgraph of H.

May 25, 2016

Here's a brief report concerning the Neuromancer Rendezvous in Seattle this week: I had breakfast at Google with Michael Buice to discuss the new datasets that we exchanged email on earlier in the week. (See here for what I knew about these datasets prior to meeting with Michael.) There was a group meeting and lunch with Neuromancer and Clay's MindScope team, with Sebastian Seung's group at Princeton participating via video conferencing. I had additional meetings with Clay, Jerome Lecoq and Steven Smith later in the afternoon.

Clay told me that it would be 2-3 years before we have aligned functional (calcium) and structural (connectomic) data and then only on mm³ samples. He said that in the meantime it might make sense to segment the rest of Wei-Chung's data [149] as "it is the best we'll have for years". Michael Buice (pronounced "bice" as in "vice", at least according to Clay) had said that it might be some time before we have a Cre line for inhibitory neurons, but Clay said he expected to record from inhibitory neurons within a couple of weeks and to have the dataset out within the next month, and that a new Cre line wouldn't be necessary since they plan to use an existing Cre reporter line.

I saw Jerome Lecoq (<jeromel@alleninstitute.org>) in the meeting room next to ours and sent email suggesting we chat. He returned my message telling me to come by his lab to see his microscopes and experimental apparatus. Jerome indicated that Clay's prediction regarding when we can expect aligned, same-animal combined functional and structural datasets is consistent with his estimates. He opined that they would have multi-layer-location datasets somewhat sooner. Jerome was pessimistic about light-field scanning in deep tissue [156, 28], but suggested I take a look at work going on in Elizabeth Hillman's lab at Columbia.

Michael and I agreed that if we wanted to run the experiments I described to him anytime soon, our best bet might be to infer the connectomic structure from the functional data. I knew of some related work from Columbia University which I've queued up to read this weekend. The papers of Mishchenko, Paninski, Vogelstein and Wood [225, 178, 177, 176] are the only ones I found in my BibTeX database8 and their line of work appears to be the most cited work out there, but I expect there are some more recent ones I'm not aware of.

I also met with Steven Smith and we traded updates. I told him about how Neuromancer is progressing on fully-automated reconstruction and he told me about his recent array tomography work. At Paul Allen's urging, Steven has initiated an arrangement with a hospital in which he is supplied with samples of human neural tissue (mm³ and larger) that have been removed during surgery on patients suffering from a neurological disorder that ostensibly involves a portion of hippocampus adjoining the neocortex. According to Steven, Paul doesn't want his legacy "to be all about rodents."

Steven explained that rodent brain tissue is hard to work with in culture because it degrades quickly, allowing for at most a few hours of in vitro recording and generally much less. Moreover, rodent neurons rapidly generate new connections, thereby masking the original circuitry. Steven explained that human neurons, perhaps because of the longer human life span, are more stable and can be kept alive and perform much as they would in an intact brain for many hours, even days. I read elsewhere that human cells in culture generated from induced pluripotent stem cells generally survive longer when densely populated, e.g., 50-100K cells, and survive even longer in tissue samples (corresponding to biopsies removed from patients during exploratory surgery) that include supporting structural proteins and glia.

Steven invited me to "high tea" which is a ritual performed each day on the sixth floor at 4pm and includes small, butter-rich English tea biscuits that Steven has obviously developed a taste for. Then I sent a note to Eric Jonas, Amy Christensen, and Vyas Saurabh asking for references on inferring structure from function, wrote this trip report in the executive dining room on the sixth floor overlooking Lake Union and walked back to the Ballard Hotel.

Miscellaneous loose ends: Read more carefully Jonas and Kording [126] and think about why Konrad might be far too pessimistic about the prospects for rich hierarchical models. Describe how the multi-scale, convolutional persistent homology can be applied to segment a connectome into functional parts and how those parts can be modeled with the dynamical systems methods that Semon, Wisam and Iran are working on for their projects.

May 23, 2016

Miscellaneous loose ends: Last week, an MIT student in Ed Boyden's lab asked if I had heard of "symbolic dynamics" and I drew a blank. As it turns out, however, I actually know quite a bit about the study of symbolic dynamical systems. [I couldn't quite say to him, "I've forgotten more about dynamical systems modeling than you'll ever know", but the part about "forgetting" was accurate enough.] At Brown University, starting in the 1995 Fall semester, I started teaching a graduate course on dynamical systems. The website still exists and comes up when you search for "dynamical", and "systems", but the material and level of mathematical sophistication is hopelessly outdated, and the field has advanced in some cases and been retarded by the influence of special-interest groups pushing particular modeling methodologies in other cases.

In the 1990's I spent several months at the Santa Fe Institute in New Mexico working with, among others, Jim Crutchfield who was one of the SFI / Berkeley/ UCSC "chaos" crowd, along with Farmer, Packard and Shaw [192, 70] who had just taken a "sabbatical" from SFI to try their hand at predicting financial markets [ostensibly to make lots of money for a major Swiss bank]. I drew inspiration for the course at Brown from an SFI monograph edited by Andreas Weigend and Neil Gershenfeld [267] and in particular work by Neil and Andreas as well as other scientists and mathematicians who contributed to their jointly edited collection of papers on time-series prediction [79, 216].

It was Jim's work that got me interested in state-space splitting and merging. Some of the motivations for studying such systems (see the preface to An Introduction to Symbolic Dynamics and Coding by Douglas Lind and Brian Marcus) have spurred work in other disciplines. For example, work by Michael Jordan, David Blei and Eric Sudderth and their students has resulted in much more rigorous methods for inferring stochastic processes; see, for example, Eric's paper "Split-Merge Monte Carlo Methods for Nonparametric Models of Sequential Data" (PDF). Mike's, David's and Eric's modeling work involving Dirichlet and Pitman-Yor processes has helped to transform, and make mathematically well grounded, multiple areas of research, including scene recognition in computer vision and document categorization in web search, that rely on inferring the arity of a discrete hidden variable such as the number of classes in a classification problem or the number of states in a dynamical systems model.

At one time, I spent considerable effort trying to apply the techniques described in a series of papers on "Bayesian Model Merging in Markov Models" by Andreas Stolcke and his colleagues at Berkeley [245, 244], before going back and learning more about simpler approaches from traditional time-series analysis [44, 255], stochastic automata theory [132, 111], optimal control [11, 88, 19], and traditional non-linear dynamical systems theory [247, 255]. My point, if indeed there is one, is that most of what you'll find within the narrow confines of what constitutes the purview of "symbolic dynamics / symbolic dynamical systems" — by which I mean the conferences, academic departments and journals that researchers who self-proclaim allegiance to this area of inquiry typically participate in — you will also find in more conventional fields of mathematics and automata theory.

And so, for what it's worth, my advice is to beware of the outliers; often, but obviously not always, they are outliers for good reason. It is also worth pointing out that many of the really disruptive, paradigm-shifting theories that turn out to be confirmed by experiment (usually after a considerable lapse in time, due in large part to the chilly or outright hostile reception they receive from the incumbent owners of the theories about to be overturned) are also outliers, albeit a very small fraction of all outliers. And, if you want an introduction to how these ideas are applied with respect to neurons and neural circuits, you could do worse than consulting one or two of these: [36, 37, 246, 121, 144, 103].

A Stanford student wrote asking me about Benjamin Libet's experiments indicating that conscious intention to act appears, at least in some cases, to follow the onset of activity in the cerebral cortex (the so-called readiness potential) usually associated with committing to act, and what these experiments might imply about free will. I think there is no question that many of our routine activities are carried out with little or no conscious intervention. That neither bothers me nor does it necessarily imply that we have no free will. Let me explain what I mean from the perspective of a computer scientist.

Suppose we take some action without being aware we played any role in deciding whether or not to take that action. We can, however, accurately recall that we took the action and the circumstances under which it was taken. Now imagine that at some later time, with full conscious awareness, we ask ourselves whether it was the right action to take and conclude that some alternative action would have been more appropriate. We then associate the alternative action with the original triggering circumstances, conditioning ourselves to take the alternative when those circumstances present, just as Pavlov conditioned his dog to salivate whenever he rang a bell. The next time the triggering circumstances present, we automatically choose the more appropriate action selected earlier with no conscious deliberation on our part. Nevertheless, I warrant that we exercised free will in this case9.

May 21, 2016

My niece and nephew once removed10 were in San Francisco last week and so we met for dinner on Friday to catch up on news from our respective branches of the family. I had other meetings in the city and spent the afternoon at the Google office on Spear Street near the Ferry Building. With the hordes of developers flocking to MTV for the 10th Google I/O, it made a lot of sense to be elsewhere for the day. I borrowed the desk that Jon Shlens usually occupies when he's squatting in the SF office and I was pleasantly distracted by the spectacular view set directly in front of me:

|

In my email thanking the two of them for a pleasant dinner and stimulating conversation, I asked my nephew to tell me more about the different roles microglia play in the central nervous system and his scientific interests in particular. Here's what he wrote in return:

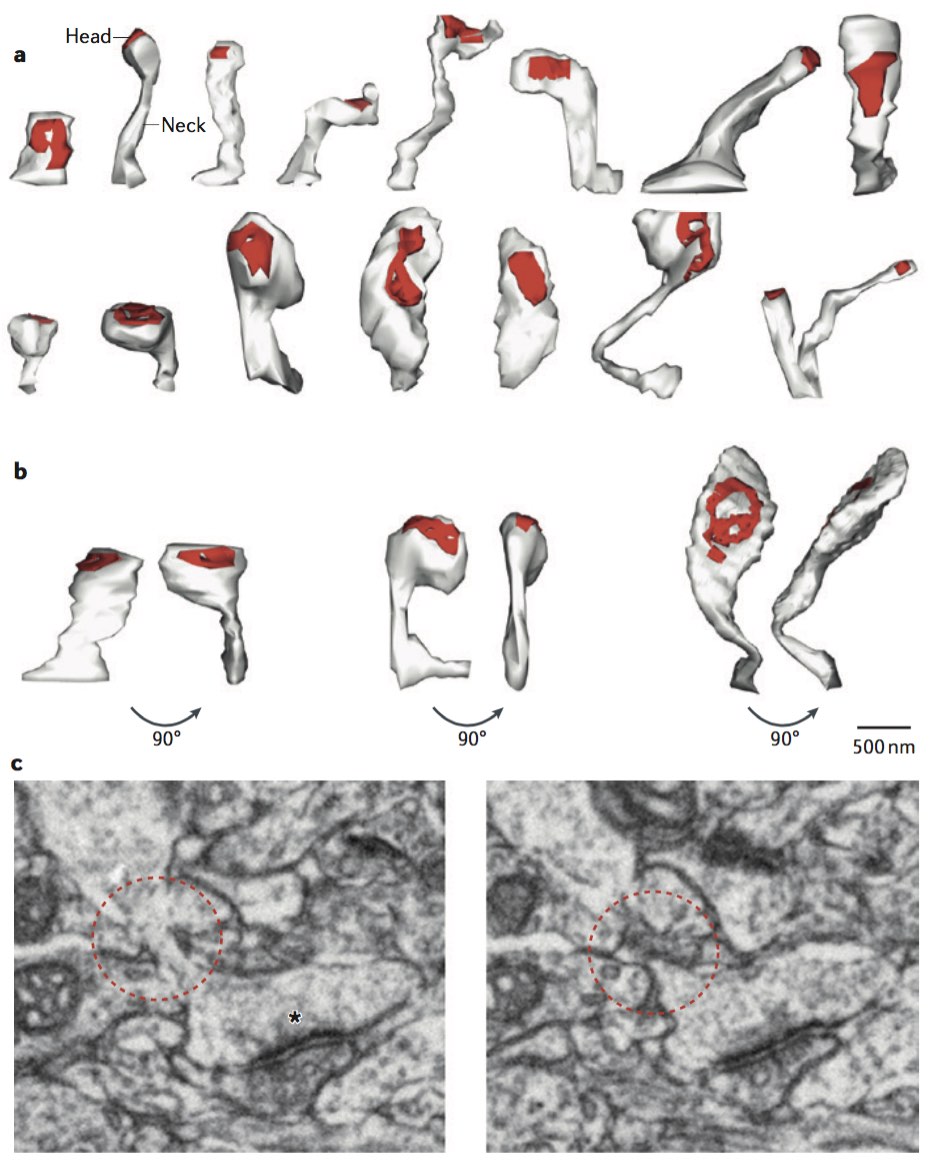

DGM: Here is a lengthy recording of an all-day conference put on a couple of years ago by the National Institute on Alcohol Abuse and Alcoholism on the role neuro-immune mechanisms play in addictive behavior and pathology. I found all of the talks fascinating, but the two to focus on regarding the role microglia12 play in synaptic plasticity (both synaptic pruning and formation) and learning are by Wen-Biao Gan at NYU and Staci Bilbo at Duke. Gan's talk includes the two-photon microscopy video of microglia in action, both under normal basal conditions and under conditions where a laser was used to lesion a small portion of the brain. [The full conference can be viewed here and the presentations by Gan and Bilbo are here if you don't want to watch the entire five-hour video.] After seeing how mobile microglia are (even under non-pathological conditions), Gan and his colleagues set out to answer the question of why these cells move around so much. What they showed was that microglia were not only implicated in ongoing and regular synaptic pruning (which one might expect from a phagocytic immune cell) but were also necessary for synaptic formation. So, they are critical players in the sculpting of the neural network, not only in prenatal development when microglia first colonize the developing brain (after the developmental period when the neuronal population is already established, but before synaptic connections are formed and/or awakened), but also throughout life, as they continue to reshape the network while the brain learns and unlearns.

TLD: This is really interesting. As you probably know, filopodia are thin, actin-rich plasma-membrane protrusions that "function as antennae for cells to probe their environment" and play an important role in creating and extending dendritic spines in neurons. The creation of these extensions requires the assembly of cytoskeletal polymers from simpler monomers and their subsequent disassembly and recycling when the filopodia are withdrawn, all of which happens on a scale of a few seconds, minutes or days depending on the circumstances. My first hypothesis as to the function being served by the frenetic microglial activity featured in Wen-Biao Gan's presentation is that the microglia are garbage collecting the detritus left over following the withdrawal and disassembly of the filopodia. If this cellular house cleaning is not carried out efficiently, the extracellular space would soon be littered with garbage slowing the diffusion of neural-signaling and metabolic-processing molecules and thereby compromising normal cognitive function. The footnote at the end of this paragraph includes some research notes I made in developing a proposal for a possible project at Google that never got enough traction with management to see the light of day13.

DGM: Bilbo's talk is fascinating. And it was through her written work [22, 271, 21] (see here and here for recommended readings) that I first became interested in microglia and the brain's auto-immune and inflammatory response mechanisms and their possible relevance to CNS pathologies, including developmental network disorders such as autism and schizophrenia, and neuro-degenerative disorders such as Alzheimer's, MS and ALS. The project I am spending all of my time on now is an early stage pharma effort to develop a modulator of microglial activity which could be useful in the treatment of CNS disease or injury.

What Bilbo has shown is that if the brain of a rodent is exposed to some sort of immune challenge (or if the mother is) during the developmental window when microglia are helping first to construct the synaptic connections between neurons, those microglia are permanently altered (for life) such that they will over-respond with a destructive vengeance to a subsequent shock later in life, leading to a potentially debilitating neurological disorder such as, for example, schizophrenia. This was really, really exciting for me, in part because it suggests there may be a therapeutic opportunity to prophylactically inhibit microglial over-reactivity and protect the brain, but also because it suggests that network disorders may be a function not of the 100 billion neurons themselves (which for the most part do not reproduce themselves), but of the 100 trillion synaptic connections between neurons, which are very plastic while they exist and are also transient (they form and are pruned in the face of learning).

TLD: This does sound pretty promising. From my relatively naïve understanding of the relevant pharmacology and gene-based therapies, an over-response requiring a chemical-suppressor or the quieting of a genomic pathway would seem easier to manage than if microglia were not being activated at all [59]. [I'm getting ahead of myself. I just watched the video of Wen-Biao's presentation at the conference you mentioned and looked at his paper in Cell describing the study that the results he presents are based on [197]. Some things became simpler and others more complex. The tradeoffs are clearly complicated but at least I now understand your comment about cytokines.]

Bilbo's approach to unraveling the role of microglia was distinctively different from Gan's as her analysis relied on gene expression levels and genetic pathways, while Gan relied primarily on two-photon microscopy, Cre-mediated recombinant methods and pharmacological intervention with toxins such as tamoxifen. She did an excellent job demonstrating the power of these techniques for analyzing genetic pathways in understanding the role of chemokines and cytokines in particular. The take-away messages were clearly stated at the end of her talk, namely that (a) the behavior she observed did not appear to be an inflammatory response in the brain, but rather a neuro-immune signalling response, and (b) the immune molecules featured in her work are powerful modulators of plasticity and should be considered in the context of addiction.

DGM: I've lots of other references to microglia and the role of neuro-immune signalling (via cytokines like IL-1, IL-6, IL-10 and TNF alpha, BDNF...) and could send those along if any of this looks to be useful/interesting to you. I'll be really interested to hear what you think about it in terms of your work on machine learning, and how this kind of amazing network plasticity at the root of human learning might prove to be inspirational for modeling machines that learn. [I'm not sure how knowing more about microglia will help in building better learning algorithms. It certainly could, but given the technology used in Gan's lab and how long it took them to generate the data for the results presented in [197], I'm not sanguine that we'll receive enlightenment anytime soon. In any case, I find the potential therapeutic impact worth a closer look. Perhaps someone from my class will have some ideas and want to look deeper.]

Miscellaneous loose ends: In looking at how microglia impact addiction, neurodegenerative disease and disorders like autism, I was reminded of our confusion about the origin of chocolate and cocaine. I did a quick search and here's what I found out: (a) Chocolate is made from the fruit of the Cacao tree, Theobroma cacao in the plant family Sterculiaceae. (b) Cocaine is made from the leaves of the Coca tree, Erythroxylon coca in the plant family Erythroxylaceae. (c) Cocaine has no connection with poppies; opium and heroin are the most common products of poppies.

May 19, 2016

Here are some excerpts from a conversation with Robert Burton [35] concerning the confusion about consciousness in academic—and in particular philosophical—circles versus the relative clarity we find in functional analogs of consciousness in control theory and cybernetics.

TLD: Jo and I just finished the Robert Wright interview with Aron Thompson, the philosopher from UBC. We have been listening to meal-sized installments when we have breakfast or lunch together. I was impressed with Aron's comment at the beginning of the interview noting that consciousness is a process, thinking he had the same thing in mind as I have, namely, a computational process, but now I'm not so sure. I think he missed a chance to make clear what Buddhists mean by awareness and out-of-body experiences, as well as pin down the notion of consciousness while keeping the spectre of dualism at bay. Basically consciousness is to a human what photosynthesis is to a plant, at least insofar as both are processes central to the organism. The digital computer also offers an explanation / justification for what Wright refers to as the "gaps between the phases of consciousness" or "the blinking view of consciousness" and what Thompson is referring to when he mentions that there is "data from perceptual neuroscience that indicates what looks like a continuous field of visual awareness is actually made up of 'pulses' that are discontinuous and have to do with ongoing brain rhythms and that parse or frame stimuli into meaningful units."

Despite the confusion in how non-computer scientists and even some computer-savvy experts talk about them, digital computers are actually implemented as analog circuits with a clock that orchestrates reads and writes and initiates operations on operands stored in memory registers. The transistors and other components etched on the processor chip are analog devices and require time to transition from one state, say "on", to another, say "off", in the case of a simple binary latch used to implement registers. During the transition the information state of the latch is neither "on" nor "off", and hence it is risky to apply an operator to operands stored in registers until the stored values stabilize.

RAB: When I was a resident in the 60's, it was widely held that the continuous stream of consciousness that we experience was nothing more than frames of experience welded together so seamlessly that they created what seemed like a steady flow of time and events. The oft-quoted prime example was flicker fusion rate—where individual visual experiences flowed at a speed sufficient to create the illusion of a continuous present, including the orderly flow of time (like the frame rate of motion pictures).

Even so, we can't viscerally understand how neurons create love and grief, and so we look to metaphors (man's way of explaining to himself what he cannot understand via equations and evidence). And we end up with the very cogent metaphor, photosynthesis is to plants as consciousness is to animals. Unfortunately, the computer metaphor isn't emotionally satisfying to most people. (Reasons vary from not understanding how computers work to an ongoing sense that we wouldn't be unique or different from robots if all we are is computations.)

Perhaps a useful analogy: Even if we understand gravity to be a function of the basic fabric of space-time, we think of gravity as a force. We cannot strip ourselves of the notion of gravity as the "attraction between two bodies." So the metaphor of "the force of gravity" works at the level of daily experience even when it isn't an accurate representation of the way gravity "works" or what it "is." Consciousness will continue as a puzzle even if it is fully understood at the physical level (just like gravity). People will continue to offer pet theories (just as quantum physicists continue to root for the discovery of gravitons). It is our nature. Plants photosynthesize and we speculate.

TLD: And so perhaps I shouldn't disabuse others of their common-sense metaphors, especially, say, if they are comfortable with Newton's laws and never had cause to apply them to anything other than Newtonian circumstances that arise in daily life. However, there are cases when a more powerful metaphor helps as, say, in the case of gravitational lensing as a means of constructing more powerful telescopes. In the case of H. Sapiens 1.0, we are controlled to a significant degree in our social interactions by instincts honed by natural selection for a hostile environment we no longer inhabit. Luckily for us, civilization can effectively speed up natural selection.

Perhaps, if you had a better idea of what was happening in your brain, you might be able to redirect, harness or at least recognize when you are being ridden by instincts that are leading to bad outcomes. Or, if you aren't interested in why we behave as we do and can accept advice even when the reasons given are spurious, then you can still derive benefit by, say, miming a happy face, rearranging your body in a confident posture, wrapping your hands around a warm mug of tea, or speaking more slowly, distinctly and at a normal volume — all of which have been found empirically to improve mood, and for which we have some understanding of the relevant neural circuits, genetic pathways, neuromodulators, etc.

If we had a user's manual for H. Sapiens 1.0, it would surely include the following advice: losses compel more than gains, beware of the gambler's fallacy, power corrupts ... induces a sense of entitlement even in the best of us, social beings lie to themselves so they won't reveal their duplicity, and many others for which we have good evidence, and, in all the cases given here, some reasonable hypotheses about their neural correlates. There is a difference between using a metaphor as a heuristic or mnemonic for solving problems and believing the metaphor literally and having that belief bleed over into aspects of life for which it is completely inappropriate.

I think I'm being annoyingly pedantic and preaching to the choir. I get what you're saying. We all tell stories to supplement our understanding of complex systems; some believe those stories more literally than others and take solace in their comforting familiarity. None of us is far from the animal in the jungle, alert to every sound and movement, prepared to respond at a moment's notice to whatever threat we encounter as our lives depend on it. "Faith in the unity of nature should not make us forget that the realm of life is at the same time rigidly unified abstractly and immensely diversified phenomenologically" ... Erwin Chargaff in warning his fellow molecular biologists not to become overly attached to their (increasingly abstract) theories or overly aggressive in selling their scientifically-motivated perspectives on life to the general public.

RAB: Ahh! Now we seem to be on the same page.

TLD: [Wool gathering] Think of a robot operating system (ROS) in which there is one master process called the controller, which has many sub processes that can run in parallel threads, one of which we refer to, tongue in cheek, as the decider15 and will think of in the present context as the computational locus of the conscious self.

The remaining sub processes we'll refer to as the workers (though "worriers" might be a more appropriate term) and they have access to a wide range of signals originating from the robot hardware, e.g., sensors and effectors, as well as from other workers. We treat consciousness as we would any other subprocess not because it's indistinguishable in either its specific activities or its neural substrate, but rather because it represents just another thread of computation that depends on an allocation of computing resources, can be sped up or slowed down by the operating-system process-scheduler, and can be put to sleep like any other process, thereby curtailing its use of any resources until reawakened by the scheduler or a system interrupt.
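The controller picture above can be made concrete with a toy simulation. This is only a sketch under stated assumptions: it is a deterministic, single-threaded round-robin scheduler rather than real ROS nodes or operating-system threads, and the names `Subprocess`, `Controller`, `share`, `vision_worker` and `motor_worker` are hypothetical, chosen for illustration. It shows the three properties mentioned: the decider is scheduled like any other subprocess, it can be sped up or slowed down, and it can be put to sleep until an interrupt wakes it.

```python
from dataclasses import dataclass


@dataclass
class Subprocess:
    """One thread of computation managed by the controller (hypothetical)."""
    name: str
    share: int = 1        # time slices granted per scheduling round
    asleep: bool = False  # a sleeping subprocess consumes no resources
    steps: int = 0        # total units of work completed so far

    def step(self):
        self.steps += 1   # stand-in for real work


class Controller:
    """Toy round-robin scheduler; not a real ROS or operating-system API."""

    def __init__(self, subprocesses):
        self.subprocesses = {p.name: p for p in subprocesses}

    def run_round(self):
        """Give every awake subprocess its share of time slices."""
        for proc in self.subprocesses.values():
            if proc.asleep:
                continue
            for _ in range(proc.share):
                proc.step()

    def set_share(self, name, share):
        """Speed a subprocess up or slow it down."""
        self.subprocesses[name].share = share

    def sleep(self, name):
        self.subprocesses[name].asleep = True

    def interrupt(self, name):
        """Wake a sleeping subprocess, as a system interrupt would."""
        self.subprocesses[name].asleep = False


controller = Controller([
    Subprocess("decider"),
    Subprocess("vision_worker"),
    Subprocess("motor_worker"),
])

controller.run_round()               # everyone gets one slice
controller.set_share("decider", 3)   # speed the decider up
controller.run_round()
controller.sleep("decider")          # put the decider to sleep
controller.run_round()               # the workers carry on without it
controller.interrupt("decider")      # an interrupt reawakens it
controller.run_round()

steps = {name: p.steps for name, p in controller.subprocesses.items()}
print(steps)
```

In this sketch nothing in the scheduler distinguishes the decider from the workers; any special status it has would have to come from what it computes, not from how it is scheduled.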

News flash! Hold the presses. This story just in. I was not looking forward to fleshing out the above narrative. Not because it would be particularly hard, but, rather, because it would be tedious and somewhat pointless. I'm not interested in explaining consciousness so as to account for the quirks of the human variant of consciousness. I'm an engineer and my only interest in consciousness, now that I understand what it's good for and what purpose it can serve from a control-systems perspective, is to construct particular instantiations of my systems-level understanding of consciousness that are useful for solving particular control problems. Well, that and because I'm a clever boy and believe that I could explain consciousness so a control theorist would recognize it immediately.

In any case, now I don't have to. Michael Graziano, a psychologist and cognitive neuroscientist at Princeton, has done it for me. Well, not for me in particular, and it needs some explaining, but Graziano has described a theory of consciousness that accounts for my systems-level understanding of consciousness and articulates it more clearly and comprehensively than I ever could or would have the patience to do. Apparently he has written a book on the subject [94], but you can read his series of articles in The Atlantic (linked to the publications page on his lab website at Princeton) if you're interested [96, 97, 99, 95, 98].

I can only vouch for the articles in the January and June issues and recommend them highly. I'm so jazzed! It's like I had an assignment from my 5th grade teacher to explain the representation of self, attachment, transference, the difference between sensation, feeling and emotion16, etc. to a lay person and at the very last minute before school let out on a Friday afternoon she (her name was Mrs. Fisher and she taught me how to write good prose) decided to let us off the hook. Enjoy!

I'm re-reading selected excerpts of Horace Freeland Judson's The Eighth Day of Creation and reflecting on the character of the central players in this extraordinary epoch of science, including Sydney Brenner, Francis Crick, Seymour Benzer and Fred Sanger17. I expect I'm selecting on the basis of my preferences in personality since not all of the players in Judson's account were people I would like to have a relaxed dinner with to discuss life, work and science.

May 17, 2016

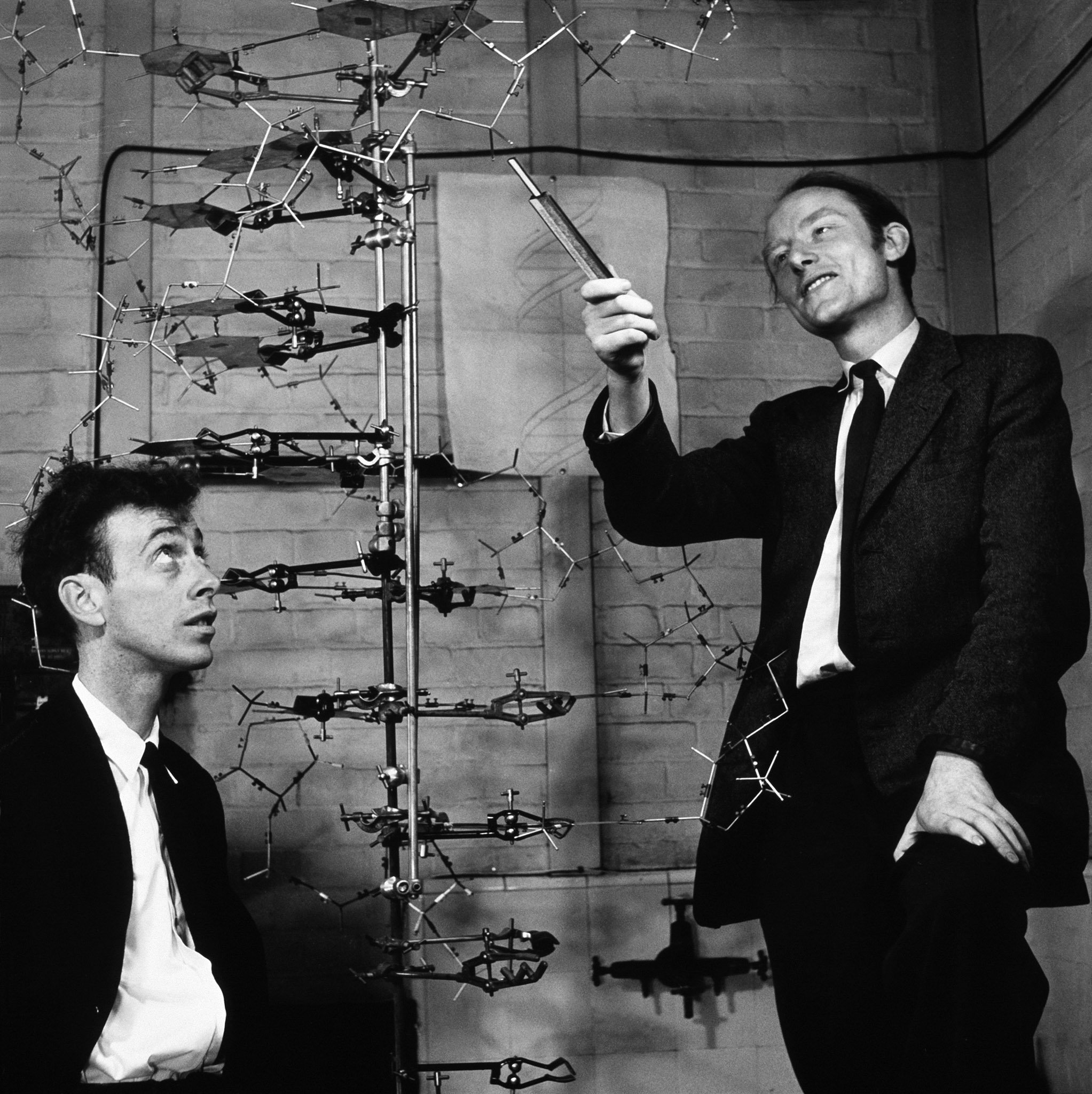

Wei-Chung ended his presentation with that famous (staged) picture of Crick and Watson standing next to a large model of the double helix, with Crick pointing at the model with a slide rule and Watson looking on a little dumbfounded (see below). Wei-Chung made the point that having defined the structure of the genetic code, scientists had a model on which to fashion a functional account. It's worth pointing out that we're still just starting to make headway on this problem more than sixty years after Crick and Watson's discovery.


The analogy to structural connectomics was obvious. Horace Freeland Judson's book The Eighth Day of Creation — which is, by all accounts, a popular science book of the first rank, written for a general but scientifically-literate audience, and revered by scientists — provides some hints about what might transpire as neuroscience tries to build a functional theory of neural computation on the structural backbone of the connectome [127]. The analogy has potential shortcomings.

In particular, there is no direct analog for the gene in the connectomic account, since the genome provides a complete account of both the structure and function of the organism; it's as though we knew about the double-helix structure of DNA but hadn't a clue about how the nucleotide bases coded for protein. Of course, as Jacques Monod and others have remarked, large proteins can fold in myriad ways and by so doing realize very different molecular machines, so maybe the analogy is more apt than it appears at first blush.

May 15, 2016

There have been some promising recent developments in the quest to build ultra-low-field MEG (magnetoencephalography) devices that operate at room temperature, with no need for liquid-helium cooling. Such devices would provide a less expensive, less cumbersome alternative to current SQUID-based (superconducting quantum interference device) systems, with no need for a room-size Faraday cage enclosing both subject and sensor. They rely on a sensor that is tiny compared to a SQUID: some experimental devices are as small as 5 mm³, allowing for the possibility of monitoring brain activity in unencumbered humans behaving in natural environments [81, 194, 214]; see this article on a device developed at NIST.

Unlike fMRI and SPECT, MEG is a direct measure of neural function: it records the electromagnetic fields produced by current flow. If it were simpler to use and less costly to purchase, it would likely replace SPECT (which relies on injecting the patient with a radioisotope as a source of gamma rays for imaging) for diagnosing neurodegenerative disorders. There are still S/N issues that need to be addressed in order to produce a reliable miniature sensor, but we believe these problems will be overcome in relatively short order given our current understanding of the relevant biophysics. The improved spatial resolution (on the order of a millimeter) and temporal resolution (a millisecond or less), coupled with the noninvasiveness of the procedure, should create a demand for such instrumentation.

In January 2013, David Heckerman (UCLA and MSR) and I met in LA to discuss the practicality of using ultrasonic imaging to record activity in deep brain tissue. David did some work on this topic in his undergraduate thesis and I had been closely following the research of Lihong Wang on photoacoustic imaging with one of my former students who is an engineer working on ultrasonic imaging and stimulation at Siemens. In our 2013 technology roadmap we wrote that ultrasound imaging was beyond our five-year planning window. But three years have passed and Lihong's work has exceeded our expectations to the extent that there may be reliable photoacoustic imaging devices within the next couple of years [277, 263, 72, 138, 262]; see this NPR article featuring Professor Wang.

May 11, 2016

Summary: I. Change of venue for the class on Wednesday, May 25. II. New dataset soon to be released by the Allen Institute. III. Miscellaneous loose ends and class related updates.

I. Change of venue for Wednesday, May 25:

In class on Wednesday, we discussed the possibility of everyone car-pooling over to Google for the last class. Everyone present agreed this would be fun and so I'll arrange for it to happen. If you absolutely can't make it over to Google, I'll send you an invitation to the Hangout so you can participate virtually. This will be the last class of the course you will be expected to attend and so the meeting at Google will be a party of sorts replete with snacks from the MK (micro-kitchens) and perhaps some beer to lubricate your tongues and thus encourage questions and interactions. The last class features Peter Latham from the Gatsby Institute, whom I visited recently and whose arm I twisted into giving a lecture. I'm also inviting several CS379C alumni including Amy, Mainak and Saurabh who've attended some of the lectures this year and several Google research scientists.

Directions: I'm in the 1875 Landings building at the corner of Landings Drive and Charleston; just Google 1875 Landings Dr, Mountain View, CA 94043 for directions. From Stanford, I usually drive straight down Embarcadero, across the 101 overpass and then right on East Bayshore Road to avoid the traffic on 101. I'm expecting you to self-organize to find transportation. If you don't have a car and can't find someone who is driving and has room, tell me and I'll help you arrange for transport.

II. New dataset released by AIBS in June:

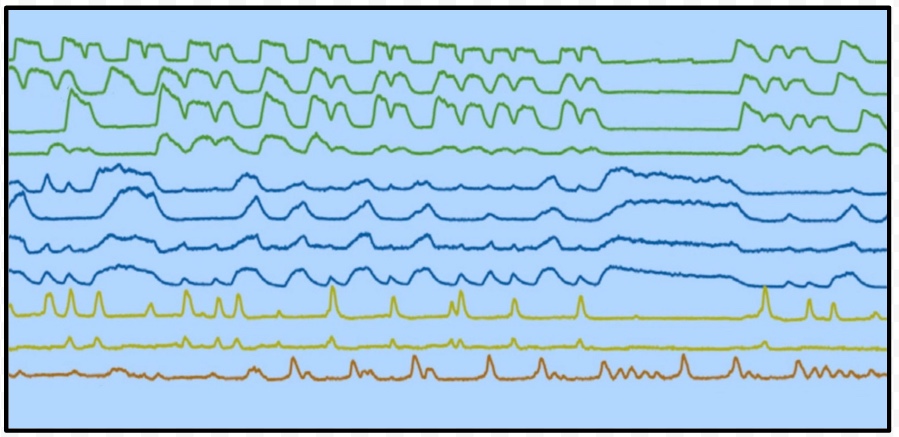

Here is a recent email exchange with Michael Buice, a theoretical neuroscientist from the Allen Institute for Brain Science, concerning calcium imaging data acquired as part of Project MindScope [143] studying the mouse visual cortex:

TLD: Hi Michael, Costas said you were handling the CI data and would likely know what the status is. I'm developing a proposal for a functional modeling project to complement Neuromancer and part of that proposal will involve making commitments to milestones, etc. Clay told me that CI data would likely be available by the end of last summer or early fall of 2015, but if that happened nobody told us. Can you give me a status update? Thanks.

There was a follow-up discussion organized by Jon Shlens a week later, which is summarized in the footnote at the end of this sentence18.

MB: Hi Tom, The Cortical Activity Map (that's our project name for the large-scale 2-photon data) just finished the first product run about 3 or 4 weeks ago. We're processing and packaging the data now and the first release will be in June with a following release in October, at which point you can freely download the data and APIs. If there's something you'd like to look at sooner than that, we might be able to arrange something. It would also be relatively simple to get you some example data sets.

This data set consists of recordings from roughly 20 "Locations", where a Location is defined as a tuple of (Cre line, region, depth). The Cre lines range over (Cux2, Rbp4, Rorb, Scnn1a), the regions over (V1, LM, AL, PM), and the depths over (175μm, 275μm, 375μm) (there's some variation in the exact depth of particular recordings). I've attached an image [included below] to help with this (ignore the dots for now; the shaded regions in that matrix correspond to Locations from which we've taken data). For each Location, there are 3 stimulus Sessions (where Session defines the types of stimulus used) that contain about an hour of recording from the same area of cells, with 60-80% of cell ROIs matched across sessions.

The mice are active and engaged in passive viewing while being allowed to run. The set of stimuli across the 3 sessions are static and drifting gratings, locally sparse noise, natural scenes and movies, and spontaneous activity (grey screen). For each Session and Location, there are about 4 (a few have 8) experiments with different mice. In all, we have about 16,000 uniquely identified ROIs, roughly 10,000 of which have the complete set of stimuli. Let me know if you have any further questions and I'll assure you of a speedier response.

TLD: This sounds like a super interesting dataset! Peter Li sent around this paper [169] to help us in sorting out the extra-striate regions that you mentioned. We were curious why you picked those regions. I don't know how the data was acquired, but, if the animals were allowed to run while viewing, will you also be able to provide behavior activity traces? Not sure how much influence this could have on activity in these visual areas, but it might be worth looking at if available.

Also — probably too much to hope for at this stage — is there an EM stack associated with this data? It would be very interesting to align the structural and functional data. I'd like to apply an analysis similar to the one described in [60] to such an aligned dataset. The students in my Stanford class this year are working on a bunch of projects investigating related issues. I'll be in Seattle for the Spring Neuromancer Rendezvous on May 26 and would love to get together and catch up.
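The Location scheme Michael describes in the exchange above maps naturally onto a small data structure. The following is a minimal sketch, not the Allen Institute's API: the names `Location`, `all_locations`, `experiments` and `add_experiment` are hypothetical, and the single registered entry is a placeholder rather than real data. It enumerates every possible (Cre line, region, depth) combination, which makes it easy to see that the roughly 20 recorded Locations cover well under half of the grid.

```python
from collections import defaultdict
from itertools import product
from typing import NamedTuple


class Location(NamedTuple):
    """A recording Location as described in the email: (Cre line, region, depth)."""
    cre_line: str
    region: str
    depth_um: int  # nominal depth; the email notes actual depths vary somewhat


CRE_LINES = ("Cux2", "Rbp4", "Rorb", "Scnn1a")
REGIONS = ("V1", "LM", "AL", "PM")
DEPTHS_UM = (175, 275, 375)
NUM_SESSIONS = 3  # stimulus Sessions per Location

# Every combination that could in principle be recorded.
all_locations = [Location(*combo) for combo in product(CRE_LINES, REGIONS, DEPTHS_UM)]

# Hypothetical index: (Location, session number) -> list of experiment labels.
experiments = defaultdict(list)


def add_experiment(location, session, label):
    """Register one experiment, rejecting keys outside the scheme."""
    if location not in all_locations:
        raise ValueError(f"unknown Location: {location}")
    if not 1 <= session <= NUM_SESSIONS:
        raise ValueError(f"session must be 1..{NUM_SESSIONS}, got {session}")
    experiments[(location, session)].append(label)


# Placeholder entry for illustration only; not taken from the real dataset.
add_experiment(Location("Cux2", "V1", 175), 1, "placeholder-mouse-A")

print(len(all_locations))  # 4 Cre lines x 4 regions x 3 depths = 48
```

Keying the index on the full tuple plus the session number keeps the "about 4 experiments per Session and Location" structure explicit, and would make it straightforward to ask which Locations have all three Sessions once real experiment labels are available.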

III. Miscellaneous updates and references:

Video and audio for the Anastassiou and Boyden presentations are now linked to their calendar entries. Remember that Ed invited your questions following his presentation, if you had any but were too shy to ask during class:

May 9, Monday: Costas Anastassiou, Allen Institute for Brain Science (TALK) [RELATES TO SUGGESTED PROJECT #3]

May 11, Wednesday: Ed Boyden, Massachusetts Institute of Technology (TALK)

May 16, Monday: Wei-Chung Lee, Harvard University (TALK) [RELATES TO SUGGESTED PROJECT #2]

May 18, Wednesday: Adam Marblestone, Massachusetts Institute of Technology (TALK)

May 23, Monday: Project Discussions

May 25, Wednesday: Peter Latham, Gatsby Computational Neuroscience Unit (TALK) [TALK + END OF CLASS PARTY @ GOOGLE]

Here are some related references, including a chapter on micro-meso-macro models by Costas Anastassiou and Adam Shai appearing in a new compilation by György Buzsáki and Yves Christen (PDF), an interesting but technically challenging paper suggested by James Thompson, and another, suggested by Peter Li, that sorts out the different Cre lines in the AIBS calcium imaging datasets:

@incollection{AnastassiouandShai3MDB-16,

author = {Costas A. Anastassiou and Adam S. Shai},

title = {Psyche, Signals and Systems},

booktitle = {Micro-, Meso- and Macro-Dynamics of the Brain},

editor = {Buzs\'{a}ki, Gy\"{o}rgy and Christen, Yves},

publisher = {Springer New York},

address = {New York, NY},

year = {2016},

pages = {107-156},

}

@book{BuzsakiandChristen2016,

author = {Buzs\'{a}ki, Gy\"{o}rgy and Christen, Yves},

title = {Micro-, Meso- and Macro-Dynamics of the Brain},

publisher = {Springer},

year = 2016

}

@article{BuesingetalNETWORK-12,

author = {Buesing, L. and Macke, J. H. and Sahani, M.},

title = {Learning stable, regularised latent models of neural population dynamics},

journal = {Network},

year = {2012},

volume = {23},

number = {1-2},

pages = {24-47},