Today: AI

Say we start with the text of the Gettysburg Address, which begins:

Four score and seven years ago our fathers brought forth on this continent, a new nation, conceived in Liberty, and dedicated to the proposition that all men are created equal.

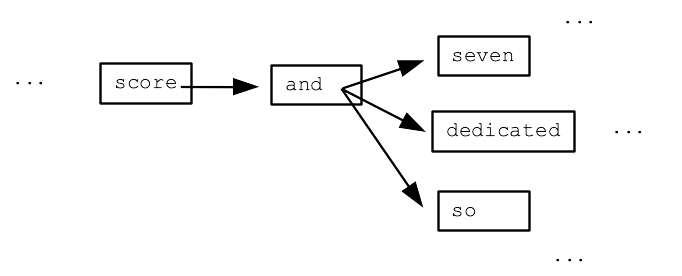

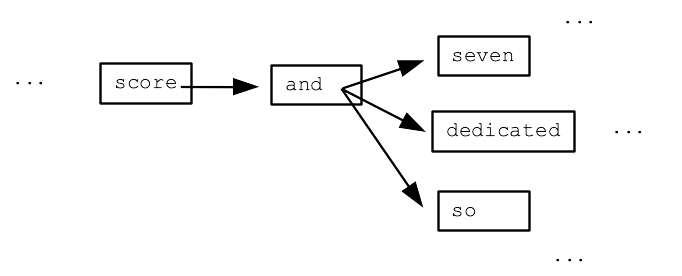

We'll build a "bigrams" dictionary with a key for every word in the text. (It's called "bigrams" since we consider pairs of words; the technique scales to looking at more than 2 words.) The value for each key is a list of all the words that occur immediately after that word in the text. This dict is not difficult to build with a little Python code.

```
{
    'Four': ['score'],
    'score': ['and'],
    'and': ['seven', 'dedicated', 'so', 'proper', 'dead,', 'that'],
    'seven': ['years'],
    ...
}
```
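Here is one minimal way to build such a dict. The function name `make_bigrams` is just an illustrative choice, and splitting on whitespace (so punctuation stays attached, as in `'dead,'` above) is an assumption that happens to match the dict shown. Repeated followers are kept in the list, so a word that often follows another will be picked more often when we generate text from the dict.

```python
def make_bigrams(text):
    """
    Return a dict mapping each word in text to the list of words
    that come immediately after it. Words are split on whitespace,
    so punctuation stays attached (e.g. 'dead,').
    """
    words = text.split()
    bigrams = {}
    # Look at each adjacent pair (words[i], words[i + 1])
    for i in range(len(words) - 1):
        word = words[i]
        next_word = words[i + 1]
        if word not in bigrams:
            bigrams[word] = []
        bigrams[word].append(next_word)   # repeats are kept
    return bigrams


text = ('Four score and seven years ago our fathers brought forth on this '
        'continent, a new nation, conceived in Liberty, and dedicated to the '
        'proposition that all men are created equal.')
bigrams = make_bigrams(text)
print(bigrams['Four'])   # ['score']
print(bigrams['and'])    # ['seven', 'dedicated']
```

One small difference from the description: the very last word of the text gets a key only if it also appears earlier, since nothing follows it.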

The bigrams dict forms a sort of word/arrow model (a.k.a. a "Markov model"), where each word has arrows leading to the words which might follow it.

We can write code to randomly chase through this bigrams model: output a word, then randomly select a "next" word from its list, output that word, and keep going.
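A minimal sketch of that random chase, assuming the model is a dict of word lists like the one above. The names `generate`, `max_words`, and `seed` are hypothetical, and stopping at a word with no followers is one design choice among several.

```python
import random


def generate(bigrams, start, max_words=30, seed=None):
    """
    Randomly chase through a bigrams dict: output start, then
    repeatedly pick one of the current word's followers at random.
    Stops after max_words words, or early at a word with no followers.
    """
    rng = random.Random(seed)   # a fixed seed makes a run repeatable
    word = start
    output = [word]
    while len(output) < max_words:
        followers = bigrams.get(word)
        if not followers:   # dead end, e.g. the final word of the text
            break
        word = rng.choice(followers)
        output.append(word)
    return ' '.join(output)


# Tiny hand-made model, in the same shape as the Gettysburg dict
model = {'Four': ['score'], 'score': ['and'], 'and': ['seven', 'dedicated'],
         'seven': ['years'], 'dedicated': ['to']}
print(generate(model, 'Four'))
```

With this tiny model the walk can only produce "Four score and seven years" or "Four score and dedicated to", depending on the random pick after "and". With the full Gettysburg dict, the many branches give longer, more varied texts like the ones below.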

Here are 3 randomly generated texts using this simple bigrams Gettysburg model:

1. Four score and dedicated to the living, rather, to the great civil war, testing whether that all men are met on a great task remaining before us to dedicate a great battle-field of that we can not have come to add or any nation so nobly advanced.

2. Four score and seven years ago our poor power to dedicate -- we can never forget what we can not hallow -- and dead, who fought here to be dedicated to add or any nation so nobly advanced. It is for those who struggled here, have thus far so nobly advanced.

3. Four score and dedicated to add or detract. The world will little note, nor long endure. We are met on a portion of freedom -- that this nation, or any nation might live. It is for those who fought here to add or any nation might live. It is for us to be dedicated to dedicate a portion of devotion -- that nation might live.

Here are some examples built from Alice in Wonderland and from the Apple software license:

4. Alice's side of the question?' said Alice, very humble tone, and he stole those serpents! There's no label this he spoke. `As wet as he was all this be getting tired of that squeaked. This sounded promising, certainly: but he called a tiny hands up and went on, without speaking, so Alice said Alice, feeling a very neatly and the right thing the Queen to the Dodo.

5. SOFTWARE PACKAGE WITHIN THE TERMS OF THIRD PARTY RIGHTS. D. H.264/AVC Notice. To the fonts included with the Photos App Features may download was encoded by visiting http://www.apple.com/privacy/. At all warranties, expressed or services using the Apple Software, full force and conditions of the Apple Software. You acknowledge and how the GPL or control.

Two observations about these outputs:

1. The output is vaguely similar to the input. That's impressive, considering that we just look at pairs of words and nothing more.

2. However, the output doesn't really make sense; it's just replaying fragments of the source text, loosely imitating it.
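The bigrams model looks only at pairs, but as noted earlier the technique scales to more words. One hedged way to sketch that (the name `make_ngrams` is hypothetical) is to key the dict on a tuple of the previous n-1 words instead of a single word. With more context per key, the output tends to copy longer runs of the source text.

```python
def make_ngrams(text, n=3):
    """
    Generalize bigrams: map each run of n-1 consecutive words
    (stored as a tuple, so it can be a dict key) to the list of
    words that follow that run. n=3 gives trigrams.
    """
    words = text.split()
    model = {}
    for i in range(len(words) - n + 1):
        key = tuple(words[i:i + n - 1])   # the n-1 context words
        model.setdefault(key, []).append(words[i + n - 1])
    return model


print(make_ngrams('a b c a b d'))
# {('a', 'b'): ['c', 'd'], ('b', 'c'): ['a'], ('c', 'a'): ['b']}
```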

This simple bigrams model captures a bit of how ChatGPT and other LLM (large language model) AIs work.

The AI reads in a huge source text and builds a "model" that in some way summarizes or captures patterns in the original. The model is not a copy of the original, but a sort of distillation.

The contents of the model reflect and depend on the source text it's built from.

The output is made by traversing and working through the model (possibly starting from a prompt). The output just reflects the model's contents, so when we produce output from different models, the output looks very different too (e.g. Alice vs. Apple).

The bigrams example shows the structure, but the real AI is, say, a million times deeper. If the bigrams example is the scale of a shoe, then the LLMs are the scale of a whole automobile.

With this in mind, here are 2 points about AI.

After training, we don't have a big brain, or something that thinks, although the model does contain a lot of intelligent patterns. We should not imagine that it will provide analytical answers for us. What it can do is reflect the sources it was trained on.

e.g. "Should we fund new nuclear reactors?" or "How does the US help achieve peace in the Middle East?" are deep and complicated questions, in an environment with many unknowns. Nobody should think that putting these questions to an AI is going to be a big improvement. I mention this to avoid some wish-projection onto the AI, the idea that we now have a source of great wisdom to solve problems for us.

The AI output sounds intelligent, but this is mostly a mirage. It is replaying human-written fragments that have intelligence in them. This is why the AI is not good at avoiding falsehoods: nothing in the replay checks whether the fragments it combines are true.

We could say there is a range of interpretations of what the AI is doing, from "intelligent" to "replay" explanations. There is certainly a lot of replay in the AI output.

BUT now I'll try to portray AI's strengths.

Maybe replay is not 100% separate from intelligence. Isn't replay a big part of how you compose sentences, pulling up fragments from your experience?

Corollary: perhaps many problems can be solved with a lot of replay, especially problems that are kind of repetitive, like answering the customer service questions that come in to some corporate help line. Many of those questions will resemble past questions.

Niches where good answers can be mined from the corpus of previous answers are where AI will do best at first.

However lame in some ways the AI is now, this is day 1. Vast amounts of money and talent are going into a race to further develop AI. I expect to see big improvements from today's state.

Say we have the beginnings of some poems, each stored as JSON text for a dict, like this. Each poem has a title and some other things, plus a list of text lines under the key 'lines'. (I built this patterned off Chris Piech's material for calling AI from Python, and homework 8 will work similarly.)

poem1.txt:

```json
{
    "title": "lecture poem",
    "tone": "silly",
    "comment": "this one seems to induce some rhyming, fewer lines may work",
    "lines": [
        "There once was a bird named flappy",
        "Who liked to get kind all kind of nappy",
        "We stood in a tree",
        "And they said unto me"
    ]
}
```

poem3.txt:

```json
{
    "title": "lecture poem",
    "tone": "jaunty",
    "lines": [
        "Bippity bip bip",
        "Bap bap bap",
        "Fiddly dit dit"
    ]
}
```
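Since each poem file holds JSON text for a dict, loading one takes a single call to `json.load()`. A minimal sketch, with `read_poem` as a hypothetical helper name:

```python
import json


def read_poem(filename):
    """
    Read one poem file, such as poem3.txt, whose contents are
    JSON text for a dict, and return that dict.
    """
    with open(filename) as f:
        return json.load(f)
```

For example, `read_poem('poem3.txt')['lines']` would give `['Bippity bip bip', 'Bap bap bap', 'Fiddly dit dit']`, assuming the file holds exactly the text shown above.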

The Python code builds a prompt string to feed to the AI. In the prompt, the first poem gives the format we want, and the second spells out what the poem looks like thus far. We want the AI to add a line.

Here is an example prompt (i.e. these are instructions we feed the AI):

```
Return the next line for a poem. The result should be formatted in json like this: {"title": "lecture poem", "tone": "silly", "lines": ["There once was a bird named flappy", "Who liked to get kind all kind of nappy", "We stood in a tree", "And they said unto me"]}. The poem thus far is {"title": "lecture poem", "tone": "jaunty", "lines": ["Bippity bip bip", "Bap bap bap", "Fiddly dit dit"]}.
```

Python has a neat format-string feature, f'hi {name}', where the Python expression inside the curly braces is evaluated and pasted into the surrounding text. We use this below to construct the prompt string.

```
>>> name = 'alice'
>>>
>>> f'Hi there {name.upper()}'
'Hi there ALICE'
```

Here is the key function, which makes the prompt. The prompt is just a string the Python code puts together using format strings, f' ..{expr} ..': there is an 'f' to the left of the string, and curly braces inside the string enclose expressions. Each expression is evaluated, and its result is pasted into the string at that spot. In this code, calls to json.dumps(d) compute the JSON string for each dict, and that string is pasted into the prompt. Recall that "dumps" in the json module creates one big string that represents the input data structure; json.loads() goes the other way, from a string back to a data structure.
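To see the two directions of the json module on their own, here is a small standalone sketch. The poem dict is a shortened version of poem3.txt, chosen just for illustration.

```python
import json

poem = {"title": "lecture poem", "tone": "jaunty", "lines": ["Bippity bip bip"]}

# dumps: dict -> JSON string, pasted into the prompt via an f-string
text = json.dumps(poem)
prompt = f'The poem thus far is {text}.'
print(prompt)
# The poem thus far is {"title": "lecture poem", "tone": "jaunty", "lines": ["Bippity bip bip"]}.

# loads: JSON string -> dict, as done with the AI's reply
back = json.loads(text)
print(back == poem)   # True
```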

```python
import json

from openai import OpenAI

# Assumption: CLIENT is an OpenAI Python SDK client (v1 interface);
# it reads the API key from the OPENAI_API_KEY environment variable.
CLIENT = OpenAI()

EXAMPLE_POEM = {
    "title": "lecture poem",
    "tone": "silly",
    "lines": ...  # elided here; fill in the example poem's lines
}


def extend_poem(poem):
    """
    Given poem dict, AI adds something to make a longer poem,
    which is returned.
    """
    print('[Suspenseful music plays as the AI thinks...]')

    # Form the prompt, giving example json, and mentioning
    # the poem thus far.
    prompt = ('Return the next line for a poem. ' +
              'The result should be formatted in json ' +
              f'like this: {json.dumps(EXAMPLE_POEM)}. ' +
              f'The poem thus far is {json.dumps(poem)}.')

    # This is the boilerplate to send the prompt to the AI
    chat_completion = CLIENT.chat.completions.create(
        messages=[
            {
                "role": "user",
                "content": prompt,
            }
        ],
        model="gpt-3.5-turbo",
        # JSON mode: asks the model to reply with a valid JSON object
        response_format={"type": "json_object"},
    )

    # Get the response back from the AI, convert back to a dict
    json_response = chat_completion.choices[0].message.content
    poem_new = json.loads(json_response)
    return poem_new
```

This example calls the AI to make a new line, then prompts the user to choose. "k" (keep) takes the AI's suggested poem and loops around to add a new line to that. "t" (try again) discards the AI's suggestion and loops around to have it try again with the poem as is. "q" quits.
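The notes don't show the loop itself, so here is a minimal sketch of one, with hypothetical names. `poem_loop` takes the function that extends the poem (in real use, `extend_poem`) and an `ask` function (by default the built-in `input`), which makes it possible to exercise the loop without calling the AI. Printing with `json.dumps(..., indent=4)` is an assumption about how the output was displayed.

```python
import json


def poem_loop(poem, extend_fn, ask=input):
    """
    Hypothetical driver: repeatedly get a longer poem from extend_fn
    and let the user keep it (k), try again (t), or quit (q).
    Returns the last poem the user kept.
    """
    while True:
        suggestion = extend_fn(poem)
        print(json.dumps(suggestion, indent=4))
        choice = ask('Keep (k), Try again (t), Quit (q) ? ')
        if choice == 'k':
            poem = suggestion   # next round extends the kept poem
        elif choice == 'q':
            return poem         # the pending suggestion is dropped
        # 't' (or any other answer): loop again with poem unchanged
```

In real use this might be called as `poem_loop(poem, extend_poem)`, with `poem` loaded from poem3.txt. Passing the extend function in, rather than calling `extend_poem` directly, is just a choice that keeps the loop testable.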

In the run below, I "t" try-again the first AI suggestion, and then "k" keep all the later ones.

```
[Suspenseful music plays as the AI thinks...]
{
    "title": "lecture poem",
    "tone": "jaunty",
    "lines": [
        "Bippity bip bip",
        "Bap bap bap",
        "Fiddly dit dit",
        "As we sipped on some tea"
    ]
}
Keep (k), Try again (t), Quit (q) ? t
[Suspenseful music plays as the AI thinks...]
{
    "title": "lecture poem",
    "tone": "jaunty",
    "lines": [
        "Bippity bip bip",
        "Bap bap bap",
        "Fiddly dit dit",
        "Lalalalalala"
    ]
}
Keep (k), Try again (t), Quit (q) ? k
[Suspenseful music plays as the AI thinks...]
{
    "title": "lecture poem",
    "tone": "jaunty",
    "lines": [
        "Bippity bip bip",
        "Bap bap bap",
        "Fiddly dit dit",
        "Lalalalalala",
        "Sippy sip sip"
    ]
}
Keep (k), Try again (t), Quit (q) ? k
[Suspenseful music plays as the AI thinks...]
{
    "title": "lecture poem",
    "tone": "jaunty",
    "lines": [
        "Bippity bip bip",
        "Bap bap bap",
        "Fiddly dit dit",
        "Lalalalalala",
        "Sippy sip sip",
        "On a bright sunny day"
    ]
}
```

Now we'll talk a little about ethics and AI.

The AI reflects whatever bias is in its source material.

"Bias" here just means that the data is not representative of the whole population, which may be a problem depending on what the AI is used for.

e.g. Suppose you trained an AI on nothing but TikTok videos. What's the average age there, like 20? You would not be surprised to get advice and views geared toward 20-year-olds from that AI.

e.g. A bias in US elections is that some groups are more likely to vote. In particular, old people vote at higher percentages. We are not surprised that Social Security, which benefits the elderly, is a big priority with politicians, reflecting the bias in who votes. (This is not necessarily a flaw in the voting system. The non-elderly are choosing to vote less.)

Currently, AIs are trained on books and internet content, which is biased toward the developed world and toward written culture (books, newspapers, magazines) or, say, Reddit-type internet content. There is no doubt some bias in that source material.

What if the AI absorbs all the books, movies, etc. and then produces infinite knockoffs: possibly lower quality, but very low price? Think about bad and good scenarios. On the one hand, this will produce a large volume of cheap material. On the other hand, it interrupts the flow of money from consumers to human creators, so we might ultimately reduce the supply of original human-created material. It's unknown how this will play out.