The parallelization of the time-loop of a partial differential

equation solver, or time-parallelism, has in general received little

attention in the literature compared to the parallelization of its

space-loop. This is because time-parallelism --- where the solution

is computed simultaneously at different time-instances --- is harder

to achieve efficiently than space-parallelism, due to the inherently

sequential nature of the time-integration process. However,

time-parallelism can be of paramount importance to many applications.

These include those time-dependent problems where the underlying

computational models have either very few spatial degrees of freedom

(dof) to enable any significant amount of space-parallelism, or not

enough spatial dof to efficiently exploit a given large number of

processors. Problems in robotics and protein folding, and more

generally, time-dependent problems arising from reduced-order models

usually fall in the first category. Many structural dynamics problems,

even those with hundreds of thousands of dof, can fall in the second

category when massively parallel computations are desired.

Accelerating the time-to-solution of the first category of problems is

crucial for real-time or near-real-time applications, and

time-parallelism can be a key factor for achieving this objective.

With the advent of massively parallel systems with thousands of cores,

time-parallelism also provides a venue for reducing the

time-to-solution of fixed size problems of the second category below

the minimum attainable using space-parallelism alone on such systems.

To achieve the aforementioned objective, the Parallel-In-Time

Algorithm (or PITA) relies on classical domain decomposition principles

that are usually applied to the spatial component of a PDE. The

time-domain is first divided into time-slices to be processed

independently. This decomposition can also be seen as defining a

coarse time-grid as opposed to the underlying fine time-grid, chosen

such as to allow convergence with the sought-after accuracy. Starting

with initial approximate seed values defined on the coarse time-grid,

the system evolution is then independently computed on each time-slice

using a classical time-stepping integrator. This solution exhibits

discontinuities or jumps at the time-slice boundaries if the initial

guess is not accurate enough. Applying a Newton-like approach to the

global time-dependent equation, a correction function is then computed

so that its values on the coarse time-grid are added to the current

seeds to improve their accuracy. The solution can thus be iteratively

refined until convergence, which can be monitored by the reduction of

the jumps.

|

While most steps of this iteration process are naturally parallel, the

key challenge is to perform efficiently the sequential correction

computation. For structural dynamics the best strategy consists in

propagating only a relevant part of the jump on the fine time-grid

while retaining an acceptable computational efficiency. Typically

speed-up factors of 3 or more can be achieved for both linear and

non-linear dynamics problems.

|

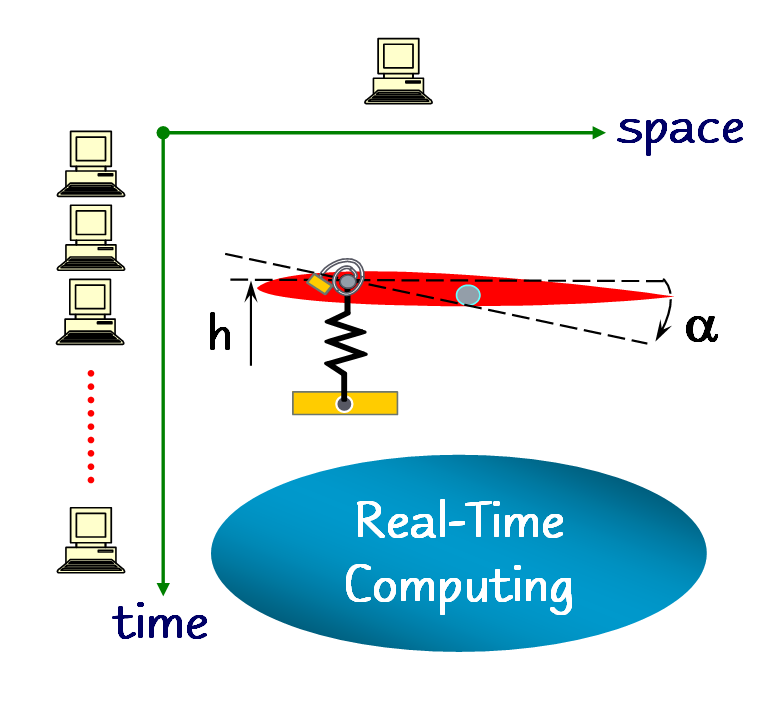

| Figure 2: Second time-parallel strategy for large-scale systems and reduced-order models. |

|