Using t-Distributions

2024-01-01

Outline

Case studies:

Volume of left hippocampus in discordant twins (for schizophrenia)

Difference in finches’ beaks before and after drought

Descriptive statistics:

Mean

Standard deviation

Median

\(t\)-distributions

One-sample \(t\)-test

Confidence intervals

Two-sample \(t\)-test

Numerical descriptive statistics

Mean of a sample

- Given a sample of numbers \(X=(X_1, \dots, X_n)\) the sample mean, \(\overline{X}\) is

\[ \overline{X} = \frac1n \sum_{i=1}^n X_i.\]

Artificial example

- Lots of ways to compute the mean
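As a minimal sketch of a few of these ways, the sample below is artificial: the values are made up for illustration and do not come from either case study. The variable names are likewise assumptions.

```r
# Artificial sample (hypothetical values, not from either case study)
X <- c(1, 3, 5, 7, 8, 12, 19)

# 1. The built-in function
mean_builtin <- mean(X)

# 2. The formula (1/n) * sum of the X_i
mean_formula <- sum(X) / length(X)

# 3. An explicit loop, accumulating the sum one observation at a time
total <- 0
for (x in X) {
  total <- total + x
}
mean_loop <- total / length(X)

print(c(builtin = mean_builtin, formula = mean_formula, loop = mean_loop))
```

All three give the same number; the built-in `mean` is the usual choice in practice.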

Exercise

- Compute the mean of volume in the `Affected` group of the Schizophrenia study

Standard deviation of a sample

Given a sample of numbers \(X=(X_1, \dots, X_n)\) the sample standard deviation \(S_X\) is the square root of the sample variance \[ S^2_X = \frac{1}{n-1} \sum_{i=1}^n (X_i-\overline{X})^2.\]
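A short sketch of this formula, again on an artificial sample with made-up values, checked against R's built-in `sd`:

```r
# Artificial sample (hypothetical values, not from either case study)
X <- c(1, 3, 5, 7, 8, 12, 19)
n <- length(X)

# Sample variance: squared deviations from the mean, divided by n - 1
var_formula <- sum((X - mean(X))^2) / (n - 1)

# The standard deviation is the square root of the variance
sd_formula <- sqrt(var_formula)

print(c(formula = sd_formula, builtin = sd(X)))
```

Note the divisor \(n-1\) rather than \(n\); `sd` and `var` in R use the same convention.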

Exercise

- Compute the standard deviation of volume in the `Affected` group of the Schizophrenia study

Median of a sample

Given a sample of numbers \(X=(X_1, \dots, X_n)\) the sample median is the middle of the sorted sample: if \(n\) is even, it is the average of the middle two points; if \(n\) is odd, it is the middle point.
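The even and odd cases can be sketched as follows. The helper `sample_median` and both samples are hypothetical, written only to mirror the definition; R's built-in `median` does the same job.

```r
# Hypothetical helper that follows the definition step by step
sample_median <- function(X) {
  sorted <- sort(X)
  n <- length(sorted)
  if (n %% 2 == 1) {
    # n odd: the single middle point
    sorted[(n + 1) / 2]
  } else {
    # n even: average of the two middle points
    (sorted[n / 2] + sorted[n / 2 + 1]) / 2
  }
}

odd_sample  <- c(7, 1, 19, 5, 3)      # sorted: 1 3 5 7 19
even_sample <- c(7, 1, 19, 5, 3, 8)   # sorted: 1 3 5 7 8 19

print(sample_median(odd_sample))      # middle point: 5
print(sample_median(even_sample))     # average of 5 and 7: 6
```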

One-sample \(T\)-test

Suppose we want to determine whether the volume of the left hippocampus differs between `Affected` and `Unaffected` twins. Let’s compute the `Differences` of the two.

- The test is performed by estimating the average difference and converting to standardized units.

A mental model for the test

Formally, could set up the above test as drawing from a box of differences in discordant twins’ volume of left hippocampus.

We can think of the sample of differences as having drawn 15 pairs of twins at random from a large population (box) containing all possible such differences.

Under \(H_0\): the average of all possible such differences is 0.

Mental model: population of twins

- Population: pairs of discordant twins

\(H_0\): the average difference between `Affected` and `Unaffected` is 0.

The alternative hypothesis is \(H_a\): the average difference is not zero.

Mental model: sampling 15 pairs

- A sample of 15 pairs of discordant twins

One-sample \(T\)-test

- The test is usually a \(T\) test that uses the statistic

\[ T = \frac{\overline{X}-0}{S_X/\sqrt{n}} \]

The formula can be read in three parts:

Estimating the mean: \(\overline{X}\)

Comparing to 0: subtracting 0 in the numerator. Why 0?

Converting difference to standardized units: dividing by \(S_X/\sqrt{n}\).

Standard error of \(\bar{X}\)

The denominator above serves as an estimate of \(SD(\overline{X})\), the standard deviation of \(\overline{X}\).

Carrying out the test

Compute the \(t\)-statistic

Compare to 5% cutoff

Conclusion

The result of the two-sided test is a logical value, `reject`.

If `reject` is `TRUE`, then we reject \(H_0\) (that the mean is 0) at a level of 5%; if it is `FALSE`, we do not reject.
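The three steps (compute the \(t\)-statistic, compare to the 5% cutoff, conclude) might look like the sketch below. The vector `differences` is simulated with hypothetical parameters; it is not the study data, so the printed numbers say nothing about the actual twins.

```r
# Hypothetical differences for 15 pairs, simulated (NOT the study data)
set.seed(1)
differences <- rnorm(15, mean = 0.2, sd = 0.25)

n <- length(differences)

# Step 1: t-statistic = (estimate - 0) / standard error
# (named T_stat because T is an alias for TRUE in R)
T_stat <- (mean(differences) - 0) / (sd(differences) / sqrt(n))

# Step 2: 5% two-sided cutoff from the t-distribution with n - 1 = 14 df
cutoff <- qt(0.975, df = n - 1)

# Step 3: reject H_0 if the statistic is beyond the cutoff in either tail
reject <- abs(T_stat) > cutoff

print(c(T = T_stat, cutoff = cutoff))
print(reject)
```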

Type I error: what does 5% represent?

If \(H_0\) is true (\(P \in H_0\)) and some modelling assumptions hold, then

\[ P({\tt reject}) = 5\% \]
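One way to make this statement concrete is a small simulation, sketched here under the assumption that the data really are normal with mean 0. The number of repetitions, the seed, and the standard deviation of 1 are arbitrary choices for illustration.

```r
set.seed(2024)
n <- 15            # same sample size as the twins study
n_sims <- 2000     # number of simulated data sets (arbitrary)
cutoff <- qt(0.975, df = n - 1)

# Repeat the test many times on data for which H_0 is true
reject <- replicate(n_sims, {
  X <- rnorm(n, mean = 0, sd = 1)          # population mean is 0
  T_stat <- mean(X) / (sd(X) / sqrt(n))
  abs(T_stat) > cutoff
})

# Proportion of rejections: an estimate of the Type I error
type_I_rate <- mean(reject)
print(type_I_rate)
```

The proportion should land near 0.05, up to simulation noise.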

Modelling assumptions

For us to believe the Type I error to be exactly 5% we should be comfortable assuming that the distribution of `Differences` in the population follows a normal curve.

For us to believe the Type I error to be close to 5% we should be comfortable assuming that the distribution of the \(T\)-statistic behaves as it would if the data were from a normal curve…

Normal distribution

Mean=0, Standard deviation=1

Rejection region for \(T_{14}\)

Looks like a normal curve, but heavier tails…

For a test of size \(\alpha\) we write this cutoff \(t_{n-1,1-\alpha/2}\).
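The cutoff can be looked up with `qt`. As a sketch, the comparison with the normal cutoff from `qnorm` shows the effect of the heavier tails for \(n=15\):

```r
alpha <- 0.05
n <- 15

# Cutoff t_{n-1, 1-alpha/2} for the t-distribution with 14 df
t_cutoff <- qt(1 - alpha / 2, df = n - 1)

# Corresponding cutoff for the standard normal curve
z_cutoff <- qnorm(1 - alpha / 2)

print(c(t = t_cutoff, normal = z_cutoff))

# Heavier tails: T_14 puts more than alpha beyond the normal cutoff
tail_t <- 2 * pt(-z_cutoff, df = n - 1)
print(tail_t)
```

The \(t\) cutoff is larger than the normal one, so using the normal cutoff with \(T_{14}\) would reject too often.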

Testing the difference of volumes in the Schizophrenia study

Computation of \(p\)-value
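The two-sided \(p\)-value is the probability, under \(T_{n-1}\), of a statistic at least as far from 0 as the one observed. A minimal sketch, using simulated `differences` with hypothetical parameters (not the study data), and checking the hand computation against `t.test`:

```r
# Hypothetical differences for 15 pairs, simulated (NOT the study data)
set.seed(1)
differences <- rnorm(15, mean = 0.2, sd = 0.25)

n <- length(differences)
T_stat <- mean(differences) / (sd(differences) / sqrt(n))

# Two-sided p-value: area in both tails beyond |T_stat|
p_value <- 2 * pt(-abs(T_stat), df = n - 1)

# The built-in one-sample t-test reports the same quantity
builtin <- t.test(differences, mu = 0)

print(c(by_hand = p_value, builtin = builtin$p.value))
```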

Confidence intervals

If the 5% cutoff is \(q\) for our test, then the 95% confidence interval is \[ [\bar{X} - q \cdot S_X / \sqrt{n}, \bar{X} + q \cdot S_X / \sqrt{n}] \] where we recall \(q=t_{n-1,0.975}\) with \(n=15\).

If we wanted a 90% confidence interval, we would use \(q=t_{14,0.95}\). Why?

Computing an interval by hand
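A sketch of the hand computation, for both the 95% and the 90% interval. The `differences` are simulated with hypothetical parameters rather than taken from the study, and the result is compared with the interval reported by `t.test`.

```r
# Hypothetical differences for 15 pairs, simulated (NOT the study data)
set.seed(1)
differences <- rnorm(15, mean = 0.2, sd = 0.25)

n <- length(differences)
SE <- sd(differences) / sqrt(n)      # standard error of the mean

# 95% interval: 2.5% in each tail, so q = t_{14, 0.975}
q95 <- qt(0.975, df = n - 1)
CI95 <- mean(differences) + c(-1, 1) * q95 * SE

# 90% interval: 5% in each tail, so q = t_{14, 0.95}
q90 <- qt(0.95, df = n - 1)
CI90 <- mean(differences) + c(-1, 1) * q90 * SE

print(CI95)
print(CI90)

# Check against the built-in function
print(t.test(differences, conf.level = 0.95)$conf.int)
```

The 90% interval is narrower because it leaves more probability in the tails.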

Interpreting confidence intervals

- Let’s suppose the average difference in volume is 0.1

Generating 100 versions of CI

A useful picture is to plot all these intervals so we can see the randomness in the intervals, while the true mean of the box is unchanged.
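A sketch of such a simulation and picture follows. The true mean of 0.1 comes from the slide above; the population standard deviation `pop_sd`, the seed, and the normal box are assumptions made only for illustration.

```r
set.seed(10)
true_mean <- 0.1   # true mean of the box, as supposed above
pop_sd <- 0.25     # hypothetical population SD (an assumption)
n <- 15
q <- qt(0.975, df = n - 1)

# Each replication draws a new sample and computes a 95% interval
intervals <- t(replicate(100, {
  X <- rnorm(n, mean = true_mean, sd = pop_sd)
  mean(X) + c(-1, 1) * q * sd(X) / sqrt(n)
}))

# Which intervals contain the true mean?
covers <- intervals[, 1] <= true_mean & true_mean <= intervals[, 2]
print(sum(covers))

# One horizontal segment per interval; the dashed line is the true mean
plot(NULL, xlim = range(intervals), ylim = c(1, 100),
     xlab = "Average difference", ylab = "Replication")
segments(intervals[, 1], 1:100, intervals[, 2], 1:100,
         col = ifelse(covers, "black", "red"))
abline(v = true_mean, lty = 2)
```

The intervals move from sample to sample while the dashed line stays fixed; roughly 95 of the 100 should cover it.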

Case Study: beak depths

- Is there a change in the finches’ beak depth after the drought in 1977?

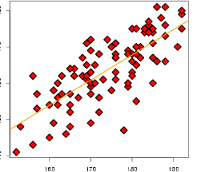

Boxplots

Histogram of Depth stratified by Year

Mental model: two samples

- Two populations of finches (`1976` and `1978`)

Two-sample \(t\)-test

- We have two samples:

  - \((B_1, \dots, B_{89})\) (before, `1976`)
  - \((A_1, \dots, A_{89})\) (after, `1978`)

- Model:

\[ \begin{aligned} A_i & \sim N(\mu_A, \sigma^2_A) \\ B_i & \sim N(\mu_B, \sigma^2_B) \\ \end{aligned} \]

- We can answer this statistically by testing the null hypothesis \(H_0:\mu_B = \mu_A.\)

Pooled \(t\)-test

If variances are equal \((\sigma^2_A=\sigma^2_B)\), the pooled \(t\)-test is appropriate.

The test statistic is

\[ \begin{aligned} T &= \frac{\overline{A} - \overline{B} - 0}{S_P \sqrt{\frac{1}{89} + \frac{1}{89}}} \\ S^2_P &= \frac{88\cdot S^2_A + 88 \cdot S^2_B}{176} \end{aligned} \]

Form of the \(t\)-statistic

The parts of the \(t\)-statistic are similar to the one-sample case:

Estimate of our parameter: \(\overline{A} - \overline{B}\): our estimate of \(\mu_A-\mu_B\)

Compare to 0 (we are testing \(H_0:\mu_A-\mu_B=0\))

Convert to standard units (formula is different, reason is the same)

Interpreting the results

For a two-sided test at level \(\alpha=0.05\), reject if \(|T| > t_{176, 0.975}\).

Confidence interval: for example, a \(90\%\) confidence interval for \(\mu_A-\mu_B\) is

\[ \overline{A}-\overline{B} \pm S_P \cdot \sqrt{\frac{1}{89} + \frac{1}{89}} \cdot t_{176,0.95}.\]

Carrying out the test
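A sketch of the pooled computation by hand, following the formulas for \(T\), \(S_P^2\) and the 90% interval. The samples `A` and `B` are simulated with hypothetical means and standard deviations; they stand in for the beak depths and are not the finch data.

```r
# Hypothetical samples of size 89, simulated (NOT the finch data)
set.seed(3)
B <- rnorm(89, mean = 9.5, sd = 1)   # "before"
A <- rnorm(89, mean = 10, sd = 1)    # "after"

n_A <- length(A)
n_B <- length(B)
df <- (n_A - 1) + (n_B - 1)          # 88 + 88 = 176

# Pooled variance: weighted average of the two sample variances
S2_P <- ((n_A - 1) * var(A) + (n_B - 1) * var(B)) / df

# Standard error of the difference in means
SE <- sqrt(S2_P) * sqrt(1 / n_A + 1 / n_B)

# Test statistic and two-sided test at level 0.05
T_stat <- (mean(A) - mean(B) - 0) / SE
reject <- abs(T_stat) > qt(0.975, df = df)

# 90% confidence interval for mu_A - mu_B
CI90 <- (mean(A) - mean(B)) + c(-1, 1) * qt(0.95, df = df) * SE

print(c(T = T_stat, df = df))
print(reject)
print(CI90)
```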

Using R

`R` has a built-in function to perform such \(t\)-tests.
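That function is `t.test`; passing `var.equal = TRUE` requests the pooled version. In the sketch below the data frame `finches`, with columns `Year` and `Depth`, is simulated with hypothetical parameters and only mimics the layout of the case study.

```r
# Hypothetical data frame, simulated (NOT the finch data)
set.seed(3)
finches <- data.frame(
  Year  = factor(rep(c(1976, 1978), each = 89)),
  Depth = c(rnorm(89, mean = 9.5, sd = 1),   # 1976
            rnorm(89, mean = 10, sd = 1))    # 1978
)

# Pooled two-sample t-test: Depth compared across the two years
pooled <- t.test(Depth ~ Year, data = finches, var.equal = TRUE)
print(pooled)

# Pieces of the result
print(pooled$statistic)   # t-statistic
print(pooled$parameter)   # degrees of freedom (176)
print(pooled$conf.int)    # 95% confidence interval by default
```

With the formula interface the difference is taken as first level minus second, here `1976` minus `1978`, so the sign of the statistic is the opposite of \(\overline{A}-\overline{B}\).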

Unequal variance

If we don’t make the assumption of equal variance, `R` will give a slightly different result.

More on this in Chapter 4.
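A sketch of the comparison, on simulated data with hypothetical parameters (not the finch data). Leaving `var.equal` at its default of `FALSE` in `t.test` gives the unequal-variance (Welch) version.

```r
# Hypothetical data frame, simulated (NOT the finch data)
set.seed(3)
finches <- data.frame(
  Year  = factor(rep(c(1976, 1978), each = 89)),
  Depth = c(rnorm(89, mean = 9.5, sd = 1),   # 1976
            rnorm(89, mean = 10, sd = 1))    # 1978
)

pooled <- t.test(Depth ~ Year, data = finches, var.equal = TRUE)
welch  <- t.test(Depth ~ Year, data = finches)   # var.equal = FALSE

# Compare degrees of freedom and p-values
print(c(pooled = unname(pooled$parameter), welch = unname(welch$parameter)))
print(c(pooled = pooled$p.value, welch = welch$p.value))
```

Because both samples here have the same size, the two \(t\)-statistics coincide and only the degrees of freedom (and hence the \(p\)-value and interval) change slightly.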

Pooled estimate of variance

The rule for the \(SD\) of differences is \[ SD(\overline{A}-\overline{B}) = \sqrt{SD(\overline{A})^2+SD(\overline{B})^2}\]

By this rule, we might take our estimate to be \[ SE(\overline{A}-\overline{B}) = \widehat{SD(\overline{A}-\overline{B})} = \sqrt{\frac{S^2_A}{89} + \frac{S^2_B}{89}}. \]

The pooled estimate assumes \(E(S^2_A)=E(S^2_B)=\sigma^2\) and replaces the \(S^2\)’s above with \(S^2_P\), a better estimate of \(\sigma^2\) than either \(S^2_A\) or \(S^2_B\).

Where do we get \(df=176\)?

- The \(A\) sample has \(89-1=88\) degrees of freedom to estimate \(\sigma^2\) while the \(B\) sample also has \(89-1=88\) degrees of freedom.

Therefore, the total degrees of freedom is \(88+88=176\).

Our first regression model

We can put the two samples together: \[Y=(A_1,\dots, A_{89}, B_1, \dots, B_{89}).\]

Under the same assumptions as the pooled \(t\)-test: \[ \begin{aligned} Y_i &\sim N(\mu_i, \sigma^2)\\ \mu_i &= \begin{cases} \mu_A & 1 \leq i \leq 89 \\ \mu_B & 90 \leq i \leq 178. \end{cases} \end{aligned} \]

Features and responses

This is a regression model for the sample \(Y\). The (qualitative) variable `Year` is called a covariate or predictor.

The `Depth` is the outcome or response.

We assume that the relationship between `Depth` and `Year` is simple: it depends only on which group a subject is in.

This relationship is modelled through the mean vector \(\mu=(\mu_1, \dots, \mu_{178})\).

A little look ahead

- Let’s carry out our test by fitting our regression model with `lm`.
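A sketch of what such a fit might look like, on simulated data with hypothetical parameters (not the finch data). The data frame `finches` and its columns `Year` and `Depth` mirror the names used above; the check at the end compares the regression \(t\)-value with the pooled \(t\)-test.

```r
# Hypothetical data frame, simulated (NOT the finch data)
set.seed(3)
finches <- data.frame(
  Year  = factor(rep(c(1976, 1978), each = 89)),
  Depth = c(rnorm(89, mean = 9.5, sd = 1),   # 1976
            rnorm(89, mean = 10, sd = 1))    # 1978
)

# Regression of Depth on the two-level factor Year
fit <- lm(Depth ~ Year, data = finches)
coef_table <- summary(fit)$coefficients
print(coef_table)

# The Year1978 row estimates mu_A - mu_B (1978 minus 1976)
t_lm <- coef_table["Year1978", "t value"]

# Pooled t-test with the 1978 sample first, so the signs match
A <- finches$Depth[finches$Year == "1978"]
B <- finches$Depth[finches$Year == "1976"]
t_pooled <- unname(t.test(A, B, var.equal = TRUE)$statistic)

print(c(lm = t_lm, pooled = t_pooled))
```

The two \(t\)-values agree, and the residual degrees of freedom of the fit are \(178-2=176\).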