Course Notes: Idempotent Productions

Abstract

This document provides an introduction to the concepts, methods and technologies covered in this course. The research and engineering disciplines spanned by this introduction are evolving so fast that by this time next year much of the content will be obsolete. Why would anyone bother to learn this material? The answer is that the ideas explored in this course will transform how we think about machine intelligence and make possible the next generation of smart systems. This class will prepare students to contribute to the development of this technology and help shape the way it is employed in society1.

Contents

1 Artificial Neural Networks & Machine Learning

1.1 Basic Terminology

1.2 Network Components

1.3 Vectors and Matrices

1.4 Activation Functions

1.5 Perceptron Models

1.6 Connection Matrices

1.7 Differentiable Models

1.8 Learning the Weights

1.9 Convolutional Layers

1.10 Multilayer Networks

1.11 Energy Landscapes

1.12 Accelerating Returns

1.13 Recurrent Networks

1.14 Signifying Meaning

1.15 The History of Ideas

1.16 Key Ideas Summary

2 Accelerated Evolution of Artificial Intelligence

2.1 Technology Predictions

2.2 Thinking About Thinking

2.3 Programmer's Apprentice

2.4 Memory and Computation

2.5 Cognitive Neuroscience

2.6 Anatomy and Physiology

2.7 Models of Human Memory

2.8 Neural Net Terminology

2.9 Reinforcement Learning

2.10 Attentional Networks

2.11 Machine Memory Models

2.12 Embodied Cognition

2.13 Planning and Behaving

2.14 Language and Thinking

2.15 The History of Ideas

2.16 Key Ideas Summary

1 Artificial Neural Networks & Machine Learning

This chapter describes the basic machine learning and artificial neural network concepts assumed in this class. The class encourages multi-disciplinary teams working on final projects that combine expertise in computer science and neuroscience, so it is not necessary that everyone taking the class has mastered these concepts; however, familiarity with the basic ideas is strongly encouraged, since the concepts presented here will provide a foundation for understanding and applying the more advanced concepts covered in the next chapter.

The human brain is incredibly complicated. The more we learn about it, the more we appreciate the intricacy of its structure and the breadth of its function. Scientists think of it as a network of neurons, but it is so much more complicated than any engineer's model or artist's conception of a neural network as to beggar the imagination. There are hundreds of specialized cell types classified as neurons that employ a bewildering array of chemical, electrical, mechanical and genetic signaling pathways in order to communicate with one another2. Hardly a month goes by when there isn't some new experimental finding published in a respected journal that unsettles the prevailing dogma or calls into question some cherished theory3. As an example with profound consequences for how we comprehend and treat brain disorders, there are thought to be as many or more glial cells as there are neurons in the mammalian brain and we've only begun to understand their function — it is not, as was once believed and is still taught in some textbooks, simply a means of structural and housekeeping support for and insulation between neurons4.

Artificial neural networks (ANNs) are simple by comparison. The sort of ANNs now an integral part of machine learning were originally inspired by biological networks and continue to exploit new insights from neuroscience, but they are now rapidly evolving as researchers incorporate ideas from computer science and machine learning to improve their performance and reliability. Artificial neural networks provide interesting abstractions useful in studying the computational properties of brains — they were originally conceived for this purpose, but provide little to advance our clinical or scientific understanding of healthy and diseased brains. There is, however, a level of abstraction on which they largely agree, and it is this level of abstraction that has played such an important role in the success of so-called deep neural networks in outperforming humans on tasks once thought to be out of reach for AI technology.

We take a closer look at biological brains in subsequent chapters, but, for the remainder of this chapter, we focus on ANNs, including a nuts-and-bolts introduction to ANN technology to demonstrate just how simple are the basic components, and set the stage for an explanation of how we can assemble these components into powerful architectures that combine ideas from biological and conventional computing. I promise that this is the only chapter in which you will see an equation and there are only a few included here. The objective is to convey to you in as direct a way possible just how simple are the bones of this technology in order to give you a better idea of how neural networks can be compactly described, functionally extended, scaled and adapted to run on commodity desktop computers, commercial cloud computing services, phones and laptops and specialized parallel processing hardware.

1.1 Basic Terminology

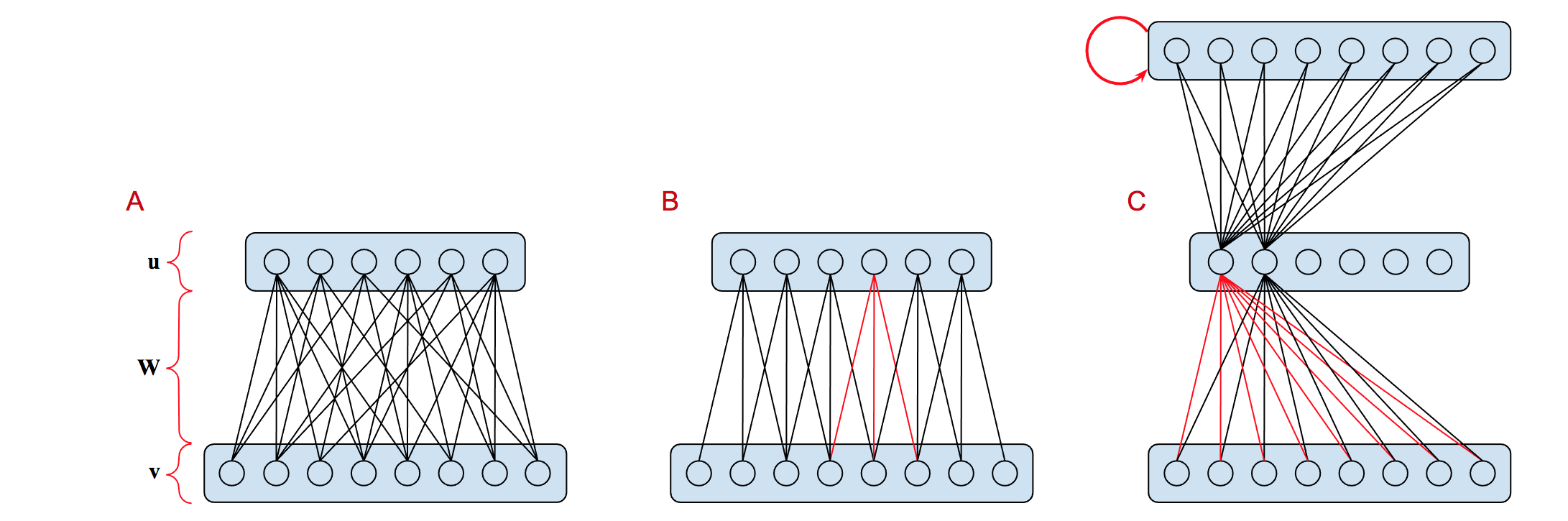

The terminology surrounding artificial neural networks can be confusing to the uninitiated. Part of the confusion arises due to the seemingly inconsistent uses of the word "layer". The network shown in Figure 1.A is characterized in the text as a single-layer perceptron5, whereas the network shown in Figure 1.C is called a multilayer perceptron6. The term deep neural network7 entered the lexicon much later and is generally used to refer to any artificial neural network with multiple layers between the input and output layer. We only briefly mention single-layer perceptrons in this chapter in order to make the point that these neural networks are less powerful8 than multilayer networks9 in a specific technical sense that sheds light on what it means to learn.

1.2 Network Components

In the following, we describe classes of specialized neural networks that can be used like Lego bricks to build more complicated architectures. We start by looking at three basic artificial neural network components. These three components are illustrated in Figure 1 and are examples of Lego-brick-like networks that can be used to construct more complicated networks. Networks are built of layers that are depicted as rectangular boxes with rounded corners.

Figure 1: Three of the most basic neural networks: (A) a single-layer perceptron, which has no hidden layers, (B) one layer of a convolutional neural network (CNN) showing one filter with a receptive field spanning three units, and (C) a multilayer perceptron (MLP) with one hidden layer and a recurrent (RNN) output layer. Note that a CNN layer used for object recognition might have 100 or more filters, requiring that we replicate the network shown here once for each filter, thereby creating a layer with a depth equal to the number of filters that feeds into a pooling layer combining the output of all the filters. Since there are six units in the output layer, if there were 100 filters instead of just the one shown, the resulting output layer would be \({6 \times{} 100}\) and, since the parameters are shared and the receptive field (and hence filter size) is \({1 \times{} 3}\), there would only be \({3 \times{} 100}\) connection weights to learn. The MLP network is intended to show a connection matrix corresponding to a complete bipartite graph, though only two units are shown to avoid an overly cluttered graphic obscuring the pattern of connections. The hidden layer of the MLP has fewer units than either the input or the output layer, which are of the same size, and so the hidden layer could serve as a bottleneck that facilitates learning a compact representation of the input, making (C) well suited to implementing an autoencoder trained by unsupervised learning. Finally, note that the output layer of (C) has a recurrent connection highlighted in red here. The basic MLP architecture does not require recurrent connections. It is included here simply to underscore the fact that recurrent networks are just non-recurrent networks to which we have added one or more recurrent connections.

A neural network has two or more layers, an input layer, an output layer and zero or more hidden layers. Layers are stacked to construct networks. Each layer is composed of one or more units and selected units in one layer can be linked to selected units in an adjacent layer by connections. The units in adjacent layers and the connections between them constitute a graph in the terminology of graph theory where units are referred to as nodes in the graph and the connections between them are called edges. Graph theory10 is a sub-discipline of discrete mathematics, and won't figure prominently in our discussion except to characterize different patterns of connectivity. Nodes and edges have been associated with neurons and synapses in biological networks but the analogy can be misleading and is generally discouraged here and elsewhere in the scientific literature.

As a simple example of how graph theory is applied to neural networks, the connections plus the combined units in two adjacent layers define a directed bipartite graph in which connections correspond to the edges in the graph and the units to nodes11. If each unit in the input layer (say there are \({n}\) of them) is connected to every unit in the output layer (say there are \({m}\) of them), then the connections form a complete bipartite graph and there are \({n \times{} m}\) connections, a number that can become rather large in practice. In our discussion of convolutional neural networks, we consider a powerful technique that ameliorates some of the computational problems that arise from working with very large input or output layers.

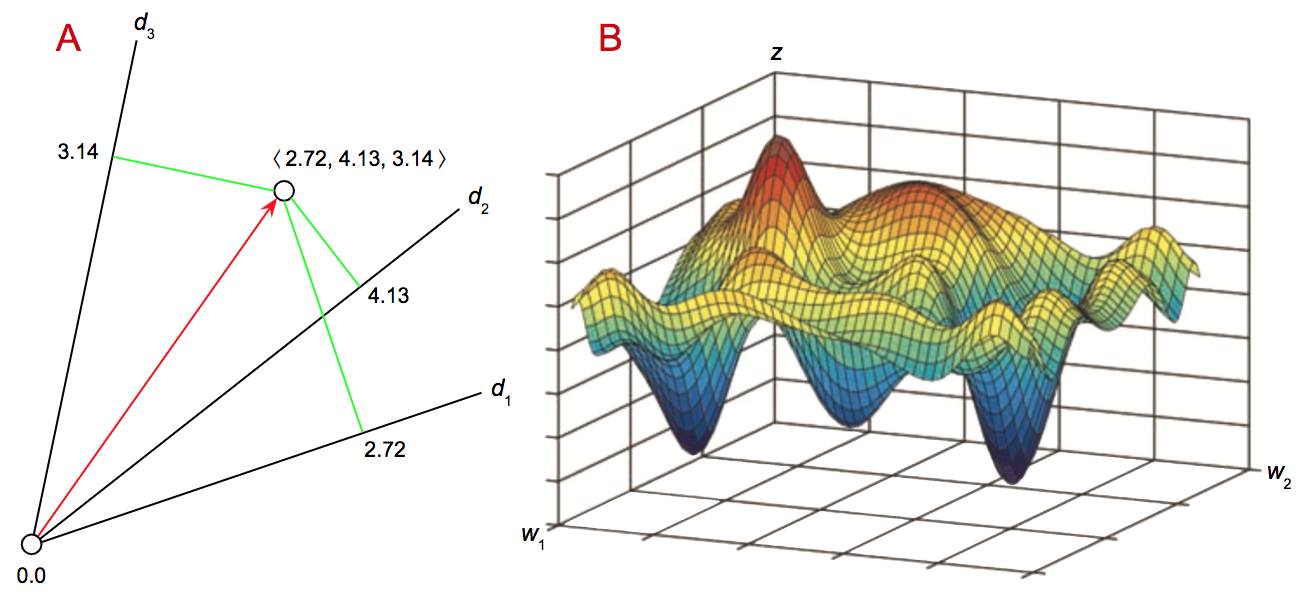

1.3 Vectors and Matrices

Neural networks represent both mathematical and computational objects. The nodes in a layer correspond to variables in equations describing the mathematical functions that neural networks implement as computable objects. It is often appropriate to think of the variables within a layer as defining vectors in a vector space and the edges as defining linear transformations from one vector space to another12. A vector space is an \({n}\)-dimensional generalization of the familiar three-dimensional Euclidean space you might have encountered in high-school calculus. A vector represents the coordinates of a point in that space — see Figure 4.A. This abstraction frees us from having to deal with individual units in layers and allows us to bring to bear the full power and beauty of linear algebra13 and multivariate calculus14 in order to share models and exploit powerful software packages and specialized hardware to expedite engineering and accelerate the computations required to develop, train and deploy neural networks in various applications.

From this perspective, each layer represents a sequence (ordered list) of variables that can be used to assign numeric values to the units that comprise a layer. This sequence is called a vector in algebraic matrix theory, which is the underlying branch of mathematics associated with neural networks. A vector variable represents a sequence of scalar variables, meaning that each variable corresponds to a single real number15. These scalar variables are often referred to as vector components, each of which can be assigned a numeric value. We display vector variables in bold font and scalar variables in italics using subscripts to refer to the individual units in a layer / components in the vector. For example, the expression \({\mathbf{u} = \langle{}u_1, u_2, \ldots, u_n\rangle{}}\) defines \({\mathbf{u}}\) to be a vector variable consisting of \({n}\) components shown as subscripted scalar variables delimited by angle brackets. A neural network defines a mathematical function that takes as input a vector of real numbers and produces as output a second vector of real numbers. The term function is used loosely to apply to both the abstract mathematical object and to any computable instantiation of that object corresponding to a program that runs on a conventional computer or specialized hardware designed specifically for neural networks.

In specifying functions represented by a network we often iterate over all the components in a vector using an indexed variable \({u_i}\) as in the expression \({\sum_{i=1}^{n} u_i = u_1 + u_2 + \cdots + u_n}\) representing the sum of the components of the vector \({\mathbf{u}}\). Algebraic matrix theory allows us to add two vectors component-wise as in \({\langle{}1.3, 4.0, 7.5\rangle{} \oplus\langle{}0.5, 1.9, 5.2\rangle{} = \langle{}1.8, 5.9, 12.7\rangle{}}\) or normalize a vector \({\mathbf{u} = \langle{}4.0, 1.0, 5.0\rangle{}}\) by dividing each component by the sum of all the components as in the expression \({\mathbf{u}\,/\,\sum_{i=1}^{n} u_i}\), which in this case yields the vector \({\langle{}0.4, 0.1, 0.5\rangle{}}\) in which all of the components are between \({0}\) and \({1}\) and together sum to \({1.0}\). Note that this calculation involves three scalar divisions, \({(4.0\,/\,10.0)}\), \({(1.0\,/\,10.0)}\) and \({(5.0\,/\,10.0)}\). These operations can be carried out in parallel given a processor such as the graphics processing unit (GPU) found in most laptops and cell phones.
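The two vector operations just described can be sketched in a few lines of plain Python. This is only an illustration using ordinary lists; the function names `add` and `normalize` are chosen here for convenience and are not part of the course material.

```python
def add(u, v):
    """Component-wise sum of two vectors of the same length."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return [a + b for a, b in zip(u, v)]


def normalize(u):
    """Divide each component by the sum of all the components."""
    total = sum(u)
    return [a / total for a in u]


# The two examples from the text.
summed = add([1.3, 4.0, 7.5], [0.5, 1.9, 5.2])   # approximately <1.8, 5.9, 12.7>
normalized = normalize([4.0, 1.0, 5.0])          # approximately <0.4, 0.1, 0.5>
```

Each list comprehension performs one independent scalar operation per component, which is why such computations are natural candidates for parallel hardware.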

1.4 Activation Functions

In the sequel, we define our neural network building blocks as having two or more layers where the required two correspond to the input and output layers. Where it aids exposition, we depict the individual units within layers and draw the connections between the units in adjacent layers. Otherwise, to render the diagrams less busy graphically, we hide the individual units using just the iconic round-edged rectangles as a visual proxy for the corresponding vectors and draw a single edge between the two layers to represent the linear transformation between the relevant vector spaces. Each layer is also characterized by an activation function — typically nonlinear — that is described in the text but generally not graphically depicted in the network architecture diagrams.

The artificial neural networks discussed here are said to be discrete-time16 rather than continuous-time dynamical systems17. The state of an artificial neural network at any point in time corresponds to the values assigned to the variables that constitute the vectors associated with each layer. For our purposes, each tick of the clock corresponds to a pass through the entire network during which all of the variables in the network are updated. Conceptually, it is easiest to think of the input layer as being on the bottom of a stack of layers culminating in the output layer at the top of the stack. A forward pass starts at the bottom with an assignment to the input layer, computing each layer in turn and ending up at the top with the final assignment to the output layer, ignoring for now the details of how we handle recurrent connections.

When we speak of a single layer \({L}\) as one of many in a stack of layers, think of the input to \({L}\) as being the vector of variables corresponding to all of the units that connect to the units in \({L}\). The update for a specified layer \({L}\) at time \({t}\) is defined as follows: given assignments to the variables in the input to \({L}\) at \({t}\), compute the linear transformation associated with \({L}\) and pass the result to the activation function associated with \({L}\) to obtain the assignments to the vector of variables associated with the output of \({L}\) at \({t}\). The corresponding vector of values associated with the output of \({L}\) at time \({t}\) is called its activation state. The technical term thought vector is also used to refer to the activation state of a layer, but we generally reserve the term for hidden layers and, in particular, hidden layers that encode abstract concepts, though admittedly the meaning of the word "abstract" in this setting is a bit murky.
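The layer update and the bottom-to-top forward pass can be sketched as follows. This is a minimal illustration that ignores recurrent connections and assumes a component-wise activation function; the weight values and the names `layer_update` and `forward_pass` are hypothetical, invented for this example.

```python
import math


def logistic(x):
    """A smooth component-wise activation (the logistic function)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_update(inputs, weights, activation):
    """One layer update: linear transformation, then activation.

    inputs  : list of n values (activation state of the layer below)
    weights : n rows by m columns; weights[j][i] links input j to unit i
    """
    n, m = len(weights), len(weights[0])
    if len(inputs) != n:
        raise ValueError("input length must match the number of weight rows")
    linear = [sum(inputs[j] * weights[j][i] for j in range(n)) for i in range(m)]
    return [activation(x) for x in linear]


def forward_pass(inputs, layers):
    """One tick of the clock: update every layer from bottom to top."""
    state = inputs
    for weights, activation in layers:
        state = layer_update(state, weights, activation)
    return state


# Hypothetical weights: 3 input units -> 2 hidden units -> 3 output units.
hidden_weights = [[0.5, -0.5], [0.25, 0.75], [-1.0, 1.0]]
output_weights = [[1.0, -1.0, 0.5], [0.0, 2.0, -0.5]]
output = forward_pass([1.0, 2.0, 3.0],
                      [(hidden_weights, logistic), (output_weights, logistic)])
```

The list returned by each call to `layer_update` is the activation state of that layer for the current time step.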

While we discourage equating patterns of spiking neurons in biological networks with the activation states of hidden layers, we will draw upon work in cognitive and systems neuroscience to make the case that both representations are examples of highly-parallel distributed codes capable of representing the sort of context sensitivity that one expects from connectionist systems [93]. Such representations are in stark contrast with the systematic, combinatorial nature of largely-serial symbolic systems that account for traditional computing paradigms such as those based on the computer architectures of Alan Turing [15] and John von Neumann [87]. There are also significant differences in that biological networks are continuous-time while most current artificial networks are discrete time.

Biological networks are inherently subject to the problems described by von Neumann in his treatise on the synthesis of reliable machines, including living organisms, from unreliable components [132], whereas artificial neural networks run on hardware that finesses the problems that von Neumann alludes to by enforcing the digital abstraction [37]18. If the spiking activity of biological neurons is primarily in service to achieving some degree of stability in the behavior of such neurons — whether by managing the cell's metabolic equilibrium to maintain internal state or by employing some method of coding19 to enable the reliable transfer of digital signals between neurons, then perhaps biological neurons enforce their own digital abstraction.

1.5 Perceptron Models

Layers sandwiched between the input and output layers are called hidden layers and play a key role in learning. Figure 1.A shows an historically important network called a perceptron or, more specifically, a single-layer perceptron20. A single-layer perceptron has no hidden layers and is restricted in what it can learn to the set of binary classifiers said to be linearly separable and perhaps best understood by an example21.

Figure 2: The binary AND function (representing the conjunction of two Boolean variables) is linearly separable and so it is easy to learn a classifier defining a straight line that divides the four possible Boolean assignments into two classes with a single-layer perceptron22. The XOR function (representing the exclusive disjunction of two Boolean variables) is not linearly separable since there is no single straight line (one-dimensional hyperplane) that divides the four assignments into two classes and hence XOR is not learnable with a single-layer perceptron. For each binary function, we show a simple two-dimensional space and plot each of the four possible truth assignments using a green circle to indicate assignments such that the respective binary function is TRUE and a red circle to indicate assignments for which it is FALSE. We show a linear separator for the AND function and it should be obvious by inspection that no such separator exists for the XOR function. A multilayer perceptron (MLP) with at least one hidden layer can learn any binary classifier including one that correctly classifies XOR.

The Boolean function for conjunction denoted AND is defined as follows: for any Boolean propositions \({A}\) and \({B}\), if \({A}\) and \({B}\) are both true, then the expression \({A}\) AND \({B}\) is true and otherwise, i.e., for any other assignment of truth values to the propositions, it is false. Perceptrons have no problem learning the AND function. The Boolean function for exclusive disjunction denoted XOR is defined as follows: if \({A}\) is true and \({B}\) is false or \({B}\) is true and \({A}\) is false, then \({A}\) XOR \({B}\) is true, and otherwise it is false. Figure 2 illustrates how the property of linear separability distinguishes these two functions.
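The difference between the two functions can be observed experimentally. The sketch below trains a single-layer perceptron with the classic perceptron learning rule, which is not described in these notes and is used here as an assumption; the bias term, the zero initialization and the epoch limit are likewise illustrative choices. Training stops as soon as an entire pass over the four truth assignments produces no mistakes.

```python
def threshold(x):
    """Binary threshold: 1 if x >= 0, otherwise 0."""
    return 1 if x >= 0 else 0


def train_perceptron(examples, max_epochs=100):
    """Perceptron learning rule on two Boolean inputs.

    Returns (weights, bias, converged). The bias term is an assumption
    added here so the separating line need not pass through the origin.
    """
    weights = [0, 0]
    bias = 0
    for _ in range(max_epochs):
        mistakes = 0
        for (a, b), target in examples:
            predicted = threshold(weights[0] * a + weights[1] * b + bias)
            error = target - predicted
            if error != 0:
                mistakes += 1
                weights[0] += error * a
                weights[1] += error * b
                bias += error
        if mistakes == 0:
            return weights, bias, True
    return weights, bias, False


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias, and_converged = train_perceptron(AND)
xor_weights, xor_bias, xor_converged = train_perceptron(XOR)
```

Under these assumptions training on AND settles on a separating line after a handful of passes, while training on XOR exhausts the epoch limit, since no setting of two weights and a bias classifies all four XOR cases correctly.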

1.6 Connection Matrices

While the values of the units in a layer are determined by the input and the function defined by the network and connection weights, the connection weights are initially assigned randomly and then learned from data to complete the definition of the network function. The connection weights for a given layer are represented by a mathematical object called a matrix23 that stores the weights in a tabular format so that the row indices refer to the units in the bottom layer and the column indices refer to the units in the top layer. A matrix with \({n}\) rows and \({m}\) columns is called an \({n \times{} m}\) or \({n}\)-by-\({m}\) matrix where \({n}\) and \({m}\) are referred to as the dimensions of the matrix.

By convention, a matrix is generally denoted by an upper case letter in bold font, e.g., \({\mathbf{W}}\), and the individual matrix components are displayed in italics as done with vector components, except that they have two indices, e.g., \({w_{i,j}}\), one index to reference the row (\({i}\)) and the other to reference the column (\({j}\)) in which the entry is stored as illustrated below: $${\mathbf{W} = \begin{pmatrix} w_{1,1} & w_{1,2} & \cdots& w_{1,m} \\ w_{2,1} & w_{2,2} & \cdots& w_{2,m} \\ \vdots& \vdots& \ddots& \vdots\\ w_{n,1} & w_{n,2} & \cdots& w_{n,m} \\ \end{pmatrix} }$$ Matrices and vectors are related in that a \({1 \times{} n}\) matrix is called a row vector and a \({n \times{} 1}\) matrix is called a column vector.

The function definition corresponding to the perceptron network shown in Figure 1 can be written concisely as the equation \({\mathbf{v} \mathbf{W} = \mathbf{u}}\) where \({\mathbf{v} \mathbf{W}}\) denotes the matrix multiplication of the input row vector \({\mathbf{v} = \langle{}v_1, v_2, \ldots{}, v_n\rangle{}}\) and \({\mathbf{W}}\) that determines the output vector \({\mathbf{u} = \langle{}u_1, u_2, \ldots{}, u_m\rangle{}}\) such that \({u_{i} = v_{1}w_{1,i} + \cdots{} + v_{n}w_{n,i} = \sum_{j=1}^{n} v_{j}w_{j,i}}\) for \({i = 1,\ldots{},m}\). Such compact notation considerably simplifies both describing neural networks and writing programs that implement neural networks since there exist programming languages that allow the same succinct representation, thereby freeing the programmer from having to read and write the low-level code that implements operations on vectors and matrices.
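To make the indices concrete, here is a small sketch of the product of a row vector and a matrix written out by hand, with each output component computed as the sum over \({j}\) of \({v_j w_{j,i}}\). The function name `vecmat` and the numeric values are hypothetical; in practice a numerical library would supply this operation.

```python
def vecmat(v, W):
    """Return u = vW, where v has n components and W is n-by-m.

    Rows of W index units in the bottom (input) layer and columns
    index units in the top (output) layer, as in the text.
    """
    n, m = len(W), len(W[0])
    if len(v) != n:
        raise ValueError("v must have one component per row of W")
    return [sum(v[j] * W[j][i] for j in range(n)) for i in range(m)]


v = [1.0, 2.0, 3.0]            # n = 3 input units
W = [[1.0, 0.0],               # 3-by-2 connection matrix
     [0.0, 1.0],
     [2.0, -1.0]]
u = vecmat(v, W)               # m = 2 output units
# u[0] = 1*1 + 2*0 + 3*2 = 7 and u[1] = 1*0 + 2*1 + 3*(-1) = -1
```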

1.7 Differentiable Models

As described so far, the function associated with a network is simply a linear transformation mapping from one vector space to another24. Moreover, since the composition of two linear transformations is itself a linear transformation, any neural network consisting of a stack of such layers defines a linear transformation. This means that, if you are trying to learn a nonlinear function — and much of what we want to learn is nonlinear, the best you can hope for is to find a linear function that closely approximates the nonlinear one. Of course, it could be that a good linear approximation does not exist. For this reason, we apply a nonlinear activation function to the result of performing the linear transformation, thereby enabling the network to learn nonlinear functions as well.
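To see why stacking purely linear layers adds no expressive power, consider two layers with connection matrices \({\mathbf{W}_1}\) of size \({n \times{} k}\) and \({\mathbf{W}_2}\) of size \({k \times{} m}\), symbols introduced here only for illustration. Because matrix multiplication is associative, $${(\mathbf{v}\mathbf{W}_1)\mathbf{W}_2 = \mathbf{v}(\mathbf{W}_1\mathbf{W}_2) = \mathbf{v}\mathbf{W}' {\rm{\;\;where\;\;}} \mathbf{W}' = \mathbf{W}_1\mathbf{W}_2}$$ so the two-layer stack computes exactly what a single layer with the \({n \times{} m}\) matrix \({\mathbf{W}'}\) would compute.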

Learning inevitably involves search. In the case of learning a model corresponding to an artificial neural network, it involves searching in the high-dimensional space of possible assignments to the connection weights. Each weight assignment corresponds to a fully instantiated network function, and the learning algorithm is restricted to find a model within the space of all such functions. This restriction is referred to as an inductive bias25 in learning theory and it plays an important role in guiding search to predict outputs, given inputs that it hasn't encountered in the training data. Bias in the context of machine learning is a good thing since without bias there can be no generalization. The selection of activation functions and network topologies also serves to define the set of possible network functions and further bias the search. Here we consider two of the most common activation functions.

It is often handy to have a binary switch or threshold function. Whenever you have to make a choice between two options, e.g., between two competing chess moves or whether a photo includes a cat, you are making a binary decision. For example, the threshold function, \({f : \mathbf{R} \mapsto{} \{0,1\}}\), defined: if \({x \geq{} 0}\) then \({f(x) = 1}\) otherwise \({f(x) = 0}\), maps a scalar value on the real number line to the Boolean values \({0}\) or \({1}\). We can also use this scalar function to implement a component-wise vector version, \({\mathbf{f} : \mathbf{R}^m \mapsto{} \{0,1\}^m}\), defined: \({\mathbf{f}(\mathbf{u}) = \langle{}f(u_1), f(u_2), \ldots{}, f(u_m)\rangle{}}\). Another useful function is the max function \({f : \mathbf{R}^m \mapsto{} \mathbf{R}}\), defined: \({f(\langle{}u_1, u_2, \ldots{}, u_m\rangle{}) = u_i}\) such that for all \({j\,\neq{}\, i, u_j \leq{}u_i}\).

The problem is that these functions are not differentiable everywhere26. The threshold function has a discontinuity at \({0}\), or its vector equivalent \({\mathbf{0}}\) in the case of the vector function, and the max function, although continuous, fails to be differentiable wherever two or more components tie for the maximum, though the multivariate case is a bit trickier to explain27.

To get around this problem, we substitute the continuous sigmoid and softmax functions for, respectively, the discontinuous threshold and max functions. These two continuous activation functions are among the most common such functions used in the design of artificial neural networks. A scalar sigmoid function, \({L : \mathbf{R} \mapsto{} \mathbf{R}}\), can be implemented in terms of the logistic function28 as: $${L(x) = \frac{1}{1+e^{-x}} = \frac{e^{x}}{e^{x}+1}}$$ where \({e}\) is the base of the natural logarithm, also known as Euler's number \({2.71828 \ldots{}\,}\), and the most common corresponding vector function is the obvious component-wise \({\mathbf{L} : \mathbf{R}^{K} \mapsto{} \mathbf{R}^{K}}\). The softmax function, \({\mathbf{S} : \mathbf{R}^{K} \mapsto{} \mathbf{R}^{K}}\), is defined as a generalization of the logistic function: $${\mathbf{S}(\langle{} u_{1},\,\ldots{},\,u_{K} \rangle{}) = \langle{} z_{1},\,\ldots{},\,z_{K} \rangle{} {\rm{\;such\;that\;}} z_j = \frac{e^{u_{j}}}{\sum_{k=1}^{K}e^{u_{k}}} {\rm{\;for\;all\;}} j = 1 \ldots{} K}$$ that squashes and normalizes a \({K}\)-dimensional vector \({\mathbf{u}}\) of arbitrary real values to a \({K}\)-dimensional vector \({\mathbf{z}}\) of real values such that each entry, \({z_i {\rm{\;for\;all\;}} i = 1 \ldots{} K}\), is in the interval \({(0, 1)}\) and all the entries sum to \({1.0}\). In order to understand why it is important that the objective function be differentiable, we need to consider how neural networks are trained.
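The threshold function and its two smooth substitutes can be sketched in plain Python as follows. Two implementation details are assumptions added here for numerical robustness and are not part of the definitions in the text: `logistic` switches between the two algebraically equal forms of the logistic function depending on the sign of its argument, and `softmax` subtracts the largest input before exponentiating, which leaves the result unchanged.

```python
import math


def threshold(x):
    """Discontinuous switch: 1 if x >= 0, otherwise 0."""
    return 1 if x >= 0 else 0


def logistic(x):
    """Smooth stand-in for the threshold function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))      # first form in the text
    e = math.exp(x)
    return e / (e + 1.0)                       # second, equivalent form


def softmax(u):
    """Smooth stand-in for max: entries lie in (0, 1) and sum to 1."""
    shift = max(u)                             # assumption: avoids overflow
    exps = [math.exp(x - shift) for x in u]
    total = sum(exps)
    return [e / total for e in exps]


z = softmax([1.0, 2.0, 3.0])
```

The largest input receives the largest share of the total, but unlike the max function every entry of the output changes smoothly as the inputs change.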

1.8 Learning the Weights

Once the architecture of the network is completely configured — including the number of units in each layer, the connections between layers and the activation function for each layer, the only step remaining to fully define the network function is to assign values to the connection weights. This is referred to as training a neural network and, as mentioned earlier, it involves searching in the high-dimensional space of possible assignments to the connection weights. One of the most powerful algorithms for training neural networks is called stochastic gradient descent29. While it is possible to train a large network by breaking it up into more tractable pieces and training each piece separately, this is generally undesirable since it often means optimizing the pieces in isolation resulting in a global solution that is suboptimal. Training the entire network simultaneously is called end-to-end training30.

Training relies on an objective function — also called a loss function — to evaluate the expected performance of the network for a given assignment of connection weights and guide search for an optimal assignment31. SGD attempts to minimize the network loss by following the gradient which is the multi-variate generalization32 of the derivative — the derivative for a real-valued function \({f(x) = y}\) measures the sensitivity to change of the output value \({y}\) with respect to a change in its input value \({x}\) and plays an important role in machine learning by way of the calculus of variations33.

Developing an artificial neural network that can be trained end-to-end with gradient descent involves the design of a differentiable objective function. This can be challenging given that many of the supporting functions routinely used in defining objective functions for related optimization problems are not differentiable. These basic support functions include the threshold and max functions mentioned earlier. Since the objective function incorporates the network function, we can't compute the gradient of the objective function and apply SGD unless the network function is differentiable, and if the network function uses the discontinuous threshold function mentioned earlier then the network function will not be differentiable. When used to minimize the loss function, the standard34 gradient descent method performs the following weight update on each iteration: $${\mathbf{w}_{t+1}\,\colon{=}\,\mathbf{w}_t -\eta{} \nabla{} L(\mathbf{w}_t)}$$ where \({\mathbf{w}_t}\) is the vector of all the weights in the network prior to the update, \({L}\) is the objective or "loss" function, \({\eta{}}\) is the learning rate that determines how much to change the weights on each iteration, \({\nabla{}}\) is the gradient operator and \({\nabla{} L(\mathbf{w}_{t})}\) is the gradient of the loss, and \({\mathbf{w}_{t+1}}\) is the updated vector of all the weights.
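The update rule can be tried out on a toy problem. The sketch below applies plain (non-stochastic) gradient descent to a hypothetical quadratic loss whose minimum is known in advance, so it illustrates only the update itself and not the training of a real network. The loss, the target vector, the learning rate and the number of steps are all assumptions made for this example.

```python
TARGET = [3.0, -1.0]   # hypothetical location of the minimum


def loss(w):
    """Toy differentiable loss: squared distance from TARGET."""
    return sum((wi - ti) ** 2 for wi, ti in zip(w, TARGET))


def gradient(w):
    """Gradient of the toy loss, derived by hand: 2 * (w - TARGET)."""
    return [2.0 * (wi - ti) for wi, ti in zip(w, TARGET)]


def gradient_descent(w, eta, steps):
    """Repeat the update w <- w - eta * gradient(w)."""
    for _ in range(steps):
        g = gradient(w)
        w = [wi - eta * gi for wi, gi in zip(w, g)]
    return w


w_final = gradient_descent([0.0, 0.0], eta=0.1, steps=100)
```

With this loss and learning rate each step shrinks the distance to the minimum by a constant factor of 0.8, so the weights approach `TARGET` quickly. Stochastic gradient descent follows the same update but estimates the gradient from a sample of the training data on each iteration.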

1.9 Convolutional Layers

Fully connected neural network layers don't scale well to large inputs such as high-resolution images. If we have an input vector corresponding to a million pixel image, e.g., \({1000 \times{} 1000}\), and an output vector of the same size, then the fully connected weight matrix for the resulting bipartite graph would have a trillion (\({10^{12}}\)) entries. The class of models called convolutional neural networks (CNNs) solve this problem by recoding large input layers as the composition of features that span relatively small contiguous regions of the input35. In the case of an image, these features might correspond to small patches that represent abrupt changes in contrast — often called edge detectors — and thereby emphasize the boundaries of objects.

In the terminology of CNNs, such a feature is called a filter — specifically a linear filter36, and the contiguous region to which it applies is its receptive field. A collection of filters is called a filter bank, and a typical filter bank could easily include hundreds of filters, e.g., a set of edge detectors that discriminate edge orientation in increments of \({5^{\circ}}\). A fully configured convolutional layer consists of a set of slices, one slice for each filter in the filter bank. Figure 1.B represents a single CNN slice and the connections highlighted in red depict a \({1 \times{} 3}\) filter consisting of just three weights. Let \({\mathbf{v} = \langle{}v_1, v_2, v_3, v_4, v_5, v_6, v_7, v_8\rangle{}}\) represent the vector corresponding to the input layer and \({\mathbf{u} = \langle{}u_1, u_2, u_3, u_4, u_5, u_6\rangle{}}\) represent the vector corresponding to the slice output layer.

The filter as shown has a receptive field corresponding to \({\langle{}v_4, v_5, v_6\rangle{}}\) and is poised to compute the response of the filter at \({u_4}\) in the output layer of the slice. The response value is intended to measure how well the filter accounts for the part of the image covered by its receptive field. If you think of the filter and the receptive field as vectors (if they happen to be some other shape, e.g., a 2-D array as in the case of images, then just flatten them to make a vector), then one of the most common methods is to use cosine similarity37 as a proxy for the angle between the two vectors. In the case of vectors in positive space, i.e., all of the vector components are positive, cosine similarity is bounded above by \({1.0}\) for an angle of \({0}\) radians, where the two vectors are parallel and hence similar, and below by \({0.0}\) for an angle of \({\pi/2}\) radians, where the two vectors are perpendicular and hence dissimilar.
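Cosine similarity is simple to compute directly from its definition as the dot product of two vectors divided by the product of their lengths. The following sketch uses only the standard library; the function name and the example vectors are illustrative.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of the same length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


parallel = cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])   # close to 1.0
perpendicular = cosine_similarity([1.0, 0.0], [0.0, 1.0])        # exactly 0.0
```

Because the lengths are divided out, scaling either vector leaves the score unchanged, which is what distinguishes this measure from the raw dot product.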

Often the inner — or "dot" — product of two vectors is used as a more easily computed but numerically less stable measure of similarity that must be treated with mathematical care. If the filter vector is \({\mathbf{w} = \langle{}w_1, w_2, w_3\rangle{}}\) and the receptive field vector is \({\langle{}v_4, v_5, v_6\rangle{}}\) then the response value at unit \({u_4}\) in the output layer of the slice is the dot product of the filter weight vector and the receptive field input vector, \({\mathbf{w} \cdot{} \langle{}v_4, v_5, v_6\rangle{} = (w_1 * v_4) + (w_2 * v_5) + (w_3 * v_6)}\). The filter response is computed for each unit in the output layer of the slice. This is done for all slices and the slices are stacked in column vectors such that the \({i}\)th element of this vector corresponds to the computed response of the \({i}\)th filter. If we started with a square — two-dimensional — matrix of pixel values we would now have a three-dimensional output layer. The activation function for this layer is typically max pooling38 in which the output of pooling collapses the extra dimension added in order to accommodate the filter responses by selecting the maximum value in each column vector.
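The slice computation can be sketched in a few lines of NumPy. This is an illustration under stated assumptions: the input values and the two-filter bank are invented for the example, and pooling is taken across filters as the paragraph describes.

```python
import numpy as np

def slice_response(v, w):
    """Slide filter w across input v; each output unit is the dot product
    of w with the receptive field that starts at the same index."""
    n_out = len(v) - len(w) + 1
    return np.array([np.dot(w, v[i:i + len(w)]) for i in range(n_out)])

# Eight-unit input layer, as in the text; the values are hypothetical.
v = np.array([0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0])

# A hypothetical filter bank with two 1 x 3 edge detectors.
filter_bank = np.array([[-1.0, 0.0, 1.0],    # responds to a rising edge
                        [1.0, 0.0, -1.0]])   # responds to a falling edge

# One slice per filter: shape (2 filters, 6 output units).
slices = np.stack([slice_response(v, w) for w in filter_bank])

# Max pooling across the filter dimension collapses the stack to one value per unit.
pooled = slices.max(axis=0)
```

With an eight-unit input and a three-weight filter, each slice has six output units, and the fourth unit (index 3) reads from input units four through six, matching the receptive field described above.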

Note that if we have a bank of 100 \({10 \times{} 10}\) filters, each slice has 100 weights and there is a total of ten thousand weights for the entire filter bank. That's quite a difference from the trillion weights required for the fully connected case. The downside of this saving is that we can only learn relatively small features. However, if we stack convolutional layers — as is typically done in practice — we can learn a hierarchy of features that account for increasingly larger patterns in the image at each successive stage in the hierarchy.
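The arithmetic behind this comparison is easy to check. The variable names below are illustrative, and bias terms are ignored as they are in the text.

```python
# Fully connected case: every one of a million inputs connects to every one of a million outputs.
pixels = 1000 * 1000
fully_connected_weights = pixels * pixels            # 10**12

# Convolutional case: a bank of 100 filters, each 10 x 10, shared across all positions.
num_filters, filter_height, filter_width = 100, 10, 10
weights_per_filter = filter_height * filter_width    # 100
filter_bank_weights = num_filters * weights_per_filter  # 10_000

savings_factor = fully_connected_weights // filter_bank_weights  # 10**8
```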

Convolutional networks are not biologically plausible in the particular way that they share variables and compute filter responses. They are, however, extraordinarily powerful neural network components and have shown off their advantages in diverse applications far from computer vision including natural language processing. Moreover the required computations can be efficiently carried out on parallel processing hardware such as that used in rendering graphics39.

1.10 Multilayer Networks

Figure 1.C depicts a multilayer perceptron — commonly called a deep neural network — with one hidden layer. The MLP network is intended to illustrate a connection matrix corresponding to a complete bipartite graph though only two units are shown to avoid an overly cluttered graphic obscuring the pattern of connections. The output layer of (C) has a recurrent connection highlighted in red, making the point that a recurrent network is just a non-recurrent network with recurrent connections. In the case shown here, we might update the output layer by taking the average of the current activity with that of the previous time step, e.g., \({\mathbf{v}_{t} = ( \mathbf{v'}_{t} + \mathbf{v}_{t-1} )\,/\,2}\) where \({\mathbf{v'}_{t}}\) is intended to represent the layer output prior to taking the recurrent connection into account.
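The averaging update can be written directly as a loop over time steps. In this sketch the feedforward outputs are supplied as a hypothetical list of vectors rather than computed by a network, and the initial state is assumed to be given.

```python
import numpy as np

def recurrent_average(feedforward_outputs, v_init):
    """Apply v_t = (v'_t + v_{t-1}) / 2 over a sequence of feedforward outputs v'_t."""
    v_prev = np.asarray(v_init, dtype=float)
    history = []
    for v_prime in feedforward_outputs:
        v_prev = (np.asarray(v_prime, dtype=float) + v_prev) / 2.0
        history.append(v_prev)
    return np.stack(history)

# Hypothetical two-unit output layer observed over three time steps.
states = recurrent_average([[1.0, 0.0], [1.0, 0.0], [0.0, 2.0]], v_init=[0.0, 0.0])
```

The state at each step is an exponentially weighted blend of everything seen so far, so the influence of an earlier output halves with every subsequent step.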

In the example shown here, the hidden layer of the MLP has fewer units than either the input or the output layers which are of the same size. This is not required in general and only serves in the present circumstances to demonstrate a special case of MLP called an autoencoder that is worth emphasizing here40. An autoencoder is an MLP that facilitates learning a compact encoding of the input that reconstructs the input and in so doing assists generalization — the ability to generalize from the training data to account for novel input. For this reason, the resulting encoding is often referred to as a generative model. The narrowing in the hidden layer is sometimes referred to as a bottleneck in that it prevents training from simply learning the identity function41.

Generative learning is contrasted with discriminative learning in that the latter emphasizes discriminating between similar examples and needn't construct an explicit model of the data, whereas the former emphasizes learning a general model that can be used for multiple purposes by downstream components in an MLP with many layers. An important feature of autoencoders is that they can be trained by unsupervised learning in which the objective function compares the original encoding represented by the input with the encoding produced by the learned model represented in the output layer and the objective function measures the fidelity of the reconstruction. More sophisticated objectives penalize reconstruction error while at the same time rewarding the facilitation of downstream components.
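A minimal sketch of the reconstruction objective follows. The layer sizes, the tanh hidden activation, the random untrained weights and the use of mean squared error are all assumptions made for illustration; the text does not specify them.

```python
import numpy as np

rng = np.random.default_rng(0)
n_input, n_hidden = 8, 3   # the hidden layer is the bottleneck

# Hypothetical, untrained weights for the encoder and decoder halves.
W_enc = rng.normal(scale=0.1, size=(n_hidden, n_input))
W_dec = rng.normal(scale=0.1, size=(n_input, n_hidden))

def encode(x):
    """Compress the input into the narrow hidden layer."""
    return np.tanh(W_enc @ x)

def decode(h):
    """Reconstruct an input-sized vector from the hidden code."""
    return W_dec @ h

def reconstruction_loss(x):
    """Mean squared error between the input and its reconstruction."""
    return float(np.mean((decode(encode(x)) - x) ** 2))

x = rng.normal(size=n_input)
loss = reconstruction_loss(x)
```

No labels appear anywhere in the loss: the input serves as its own target, which is what allows training without supervision.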

While we seldom learn models just for the sake of learning them, in some cases, a discriminative model is just what you need, as in the case where you want to sort manufactured parts into two categories: defective versus certified. Generative models are often useful in cases in which their ultimate purpose is not known or clearly understood, or when it is important to explain why the system made a decision or arrived at a particular conclusion. There is some evidence to suggest that humans employ some form of Bayesian statistical inference42 to construct generative models of their environment that explain how humans can make reliable generalizations from so little data — what are commonly referred to as inductive leaps in learning.

1.11 Energy Landscapes

The notion of an energy landscape43 arises in many disciplines where optimizing a function is seen as search in a combinatorial space of possible parameter settings of a complex system. In the case of computational chemistry, the parameter settings correspond to the possible conformations of a molecular entity and the goal is to find a conformation that minimizes the free energy of the system44 so that we can build a model of molecular structure to use in synthesizing new materials or drug discovery [133, 74]. In the case of training an artificial neural network, our goal is to search for a weight assignment (conformation) that minimizes the loss function (free energy).

Figure 4: The left (A) graphic shows the coordinate axes of a three dimensional vector space — also known as a Hilbert space45 — along with a single vector \({\langle{}2.72, 4.13, 3.14\rangle{}}\). Such vectors are used to represent the activation states of artificial neural circuits encoded in the numerical assignments to the units in an ANN layer. We associate a distance metric — typically the obvious generalization of the Euclidean metric — with the vector space that allows us to compute the distance between two vectors as a measure of their similarity. The graphic on the right (B) represents an energy landscape which is a map of all the possible conformations of a complex system and their corresponding energy levels. The conformations of a neural network correspond to the set of all possible weight assignments and the energy levels correspond to the value of the loss function for each such assignment. Here we show a very simple example with just two weights — \({w_1}\) and \({w_2}\) — and the loss plotted on the \({z}\) axis to create the continuous surface of the energy landscape. The goal of training a neural network is to find a global minimum of the loss function using stochastic gradient descent to search in weight space by crawling around on the energy landscape.

Figure 4.(B) illustrates the energy landscape for a network consisting of two weights, \({w_1}\) and \({w_2}\), assigned to what are conventionally called the \({x}\) and \({y}\) axes, and the value of the objective function on the \({z}\) axis computed at each point on the \({x\,y}\) plane. The resulting rugged surface exhibits multiple local maxima and minima. Ideally the surface would be convex but this is rarely the case in practice46. In training networks with millions of weights, the energy surface is likely to be pockmarked with local minima as well as saddle points47 that can mislead gradient descent. More sophisticated methods using second-order partial derivatives can test if a critical point is a local maximum, local minimum or saddle point, but they don't scale and can't be expected to point the way to the global minimum of the objective function. Remarkably, in many cases, having more parameters results in a rougher landscape, with more potholes to get stuck in, but also produces more local minima that are closer to the global minimum. Suffice it to say that a great deal of effort has been put into designing better solvers.
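To make the two-weight picture concrete, here is a sketch using a hypothetical loss surface, \({(w_1^2 - 1)^2 + w_2^2}\), chosen because it has two minima and one saddle point. It uses plain (not stochastic) gradient descent with an assumed step size, and classifies critical points by the signs of the Hessian eigenvalues.

```python
import numpy as np

def loss(w):
    """Hypothetical loss with minima at (+1, 0) and (-1, 0) and a saddle at (0, 0)."""
    return (w[0] ** 2 - 1.0) ** 2 + w[1] ** 2

def grad(w):
    """First-order partial derivatives of the loss."""
    return np.array([4.0 * w[0] * (w[0] ** 2 - 1.0), 2.0 * w[1]])

def hessian(w):
    """Second-order partial derivatives of the loss."""
    return np.array([[12.0 * w[0] ** 2 - 4.0, 0.0],
                     [0.0, 2.0]])

def gradient_descent(w0, lr=0.05, steps=500):
    """Repeatedly step downhill along the negative gradient."""
    w = np.array(w0, dtype=float)
    for _ in range(steps):
        w -= lr * grad(w)
    return w

def classify_critical_point(w):
    """Second-derivative test based on the signs of the Hessian eigenvalues."""
    eig = np.linalg.eigvalsh(hessian(w))
    if np.any(np.isclose(eig, 0.0)):
        return "inconclusive"
    if np.all(eig > 0):
        return "local minimum"
    if np.all(eig < 0):
        return "local maximum"
    return "saddle point"

reached_minimum = gradient_descent([0.5, 1.0])   # slides into the basin around (1, 0)
stuck_at_saddle = gradient_descent([0.0, 1.0])   # gradient along w1 is zero the whole way
```

The second run shows how a saddle point can mislead gradient descent: starting exactly on the ridge, the gradient never pushes the first weight off zero, so the search stops at a point that is not a minimum.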

1.12 Accelerating Returns

Researchers developing neural network solutions typically have to train and test hundreds if not thousands of experimental networks. It's one thing if running a test on a network takes no more time than it takes to grab coffee, and quite another if it takes days or even weeks. It helps if you're working in industry and have access to unlimited computing resources. Unfortunately, there is no such thing as unlimited computing and engineers are always competing with one another and with the needs of customers to get their work done. It is perhaps no surprise then that engineers have taken to applying neural networks to automatically and efficiently search in the space of network architectures for solutions that satisfy their needs.

This kind of technology is part of a trend in applying machine learning technology recursively to accelerating the development of machine learning technology. Throughout the last few decades, researchers working on neural networks often expressed frustration with progress in the development of better analytical tools, mathematical foundations, software and scalable infrastructure to run it on. At times, the state-of-the-art in developing neural-network technology seemed more like alchemy than science or engineering.

The wave of excitement around the beginning of the new millennium as neural networks were shown to outperform more traditional methods of machine learning was tempered by a realization that we were in uncharted territory. There was a time when, miraculously and contrary to received wisdom, adding more layers or layers with more units and more connections often seemed to help rather than hinder finding good if not optimal solutions. Researchers puzzled over why this should happen, and the mathematically adept resurrected old or developed new sophisticated regularization methods to understand such phenomena48.

Neural network technology is flourishing in large part because it has been so successful and there are so many smart individuals contributing to its development and savvy companies investing in a growing ecosystem of talent and supporting technologies. Artificial neural networks have received the lion's share of the attention but the dividends from its success are being spread across a much wider spectrum of technologies including robotics, computer vision, imaging, advanced biosensors and neural prostheses.

An entire generation is growing up with the possibility of living decades longer than their parents, conquering the major causes of mental and physical decline and developing technology to make us smarter and better able to address problems on a global scale. Machine learning, artificial intelligence, synthetic biology, genetic engineering and materials science are advancing at an incredible, some would say alarming pace, reinforcing and accelerating one another. It may sound trite to say so given all the hype surrounding these technologies, but we will have to evolve along with our technologies if we are to continue to play with our enhanced and artificially intelligent friends.

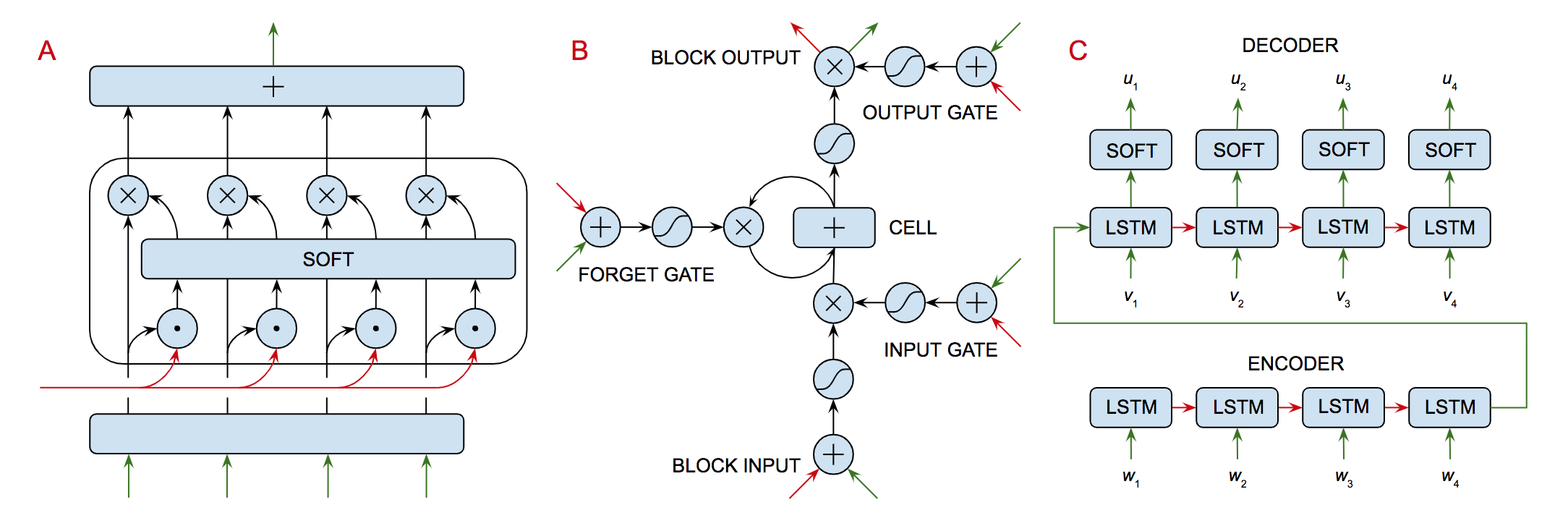

1.13 Recurrent Networks

In his book I Am a Strange Loop49 Douglas Hofstadter explores the idea of consciousness and the concept of "self" in terms of a recurrent process thereby dismissing the philosopher's confusion about homuncular regress50 and the viewer in the Cartesian theatre51. In a subsequent chapter, we consider how one might go about implementing a digital assistant that exhibits many if not all of the properties of human consciousness worth preserving in an artificial intelligence. The artificial neural network implementation makes use of recurrent connections to explain and provide a compelling use case for the emergent phenomena of inner speech52 and self-reflection. In the following, we explore more practical, less controversial applications of recurrent networks.

All three of the networks shown in Figure 5 are said to be recurrent. In contrast, the networks shown in Figures 1.A and 1.B include only feedforward connections. Recurrent connections run in the opposite direction from feedforward connections and can span multiple layers. A recurrent connection can also feed back into the same layer it originated from as illustrated in Figure 1.C or it can connect two units within a layer. Recurrent neural networks — networks that have recurrent connections — will play an important role in our understanding of human cognition and figure prominently in our discussion of neural network architectures53.

Even the simplest biological networks make use of feedback. Without feedback, networks couldn't keep state — they couldn't remember past events, they couldn't measure progress or adjust their responses to deal with errors in perception or motor control. Norbert Wiener55 introduced the term cybernetics56 to describe the study of systems that employ feedback to control their behavior. Biological systems employ feedback to regulate everything from hormone balance to consciousness. The three networks shown in Figure 5 illustrate the use of feedback to support three basic functions: attention, memory and recursion. In subsequent chapters we'll apply these basic functions to support a wide range of activity. Here we take a closer look at how they are implemented since the details provide insight into how they work and how they might be adapted for other purposes.

Figure 5: Three specialized neural network components that deal with: (A) selective attention, (B) short-term memory, and (C) working with structured data. To minimize clutter, the drawings don't show the individual units or their corresponding connections. Each directed edge represents a group of connections between the units in the corresponding adjacent layers and implements a linear transform as discussed earlier. We use the following conventions for representing activation functions:

Three specialized recurrent neural network components are illustrated in Figure 5: (A) is an attentional network that learns how to shape the context for performing inference as in the case of machine translation or continuous dialogue, (B) is a short-term memory module that learns how to anticipate information required for inference and set it aside until needed, and (C) is a network architecture consisting of a pair of recurrent networks used to transform one sequence into another as in the case of question answering.

Figure 5.A shows a schematic description of an attentional selection module that learns what part of our recent experience is most important for our current purposes and makes that information available to guide inference. The network shows four (feedforward) connections to the bottom layer each of which corresponds to a thought vector of the same size. The red (recurrent) connection corresponds to a single thought vector summarizing the current state of information, i.e., the context for current decision making. Each of the feedforward vectors is concatenated with the current context vector and fed into a softmax layer, the output of which is used to decide (gate) which of the four (feedforward) thought vectors is most relevant to the current context.
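One way to sketch this selection step is shown below. The scoring rule (a single learned weight vector applied to each concatenated pair) and all parameter values are assumptions; the text specifies only the concatenation and the softmax.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a vector of scores."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def attend(thoughts, context, scoring_weights):
    """Concatenate each thought vector with the context, score the pairs,
    and use softmax weights to gate the thought vectors."""
    pairs = np.hstack([thoughts, np.tile(context, (len(thoughts), 1))])
    scores = pairs @ scoring_weights      # one scalar score per thought vector
    gate = softmax(scores)                # relevance weights that sum to one
    return gate, gate @ thoughts          # weighted blend of the thought vectors

rng = np.random.default_rng(0)
d = 5                                      # hypothetical thought vector size
thoughts = rng.normal(size=(4, d))         # the four feedforward thought vectors
context = rng.normal(size=d)               # the recurrent context vector
scoring_weights = rng.normal(size=2 * d)   # stand-in for learned parameters

gate, selected = attend(thoughts, context, scoring_weights)
most_relevant = int(np.argmax(gate))
```

Because softmax is differentiable, the gate is a soft choice among the four inputs rather than a hard switch, which is what lets the scoring weights be trained by gradient descent.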

The attentional selection module is useful in machine translation (MT) to determine which parts of the original (input) sentence are relevant in deciding the next word in the translated (output) sentence. For example, in deciding the gender or number — singular or plural — of a pronoun to use in the translation it is often useful to refer back to any previous information in the input sentence that mentions the referent of the pronoun under consideration. The network in Figure 5.C is designed to support MT as well as other problems that involve transforming one sequence — of words in the case of MT — to another sequence.

Figure 5.B shows a schematic description of a gated memory module that can be used for any application in which it is useful to learn what information to keep in short-term memory in performing a task. This module makes use of three trainable gates that control the contents of a memory cell. The three gates determine (a) when the cell content should be updated with the current input, (b) when the current content of memory should be made available as output and (c) when the current content should be erased.

Each gate consists of a learnable network that takes as input both feedforward and feedback connections, computes the weighted sum of these inputs and then applies a sigmoid activation function to produce a (differentiable) approximation to a binary switch. The scalar output of the sigmoid function can be used to — depending on the gate — add the block input to the content of the memory cell, make the content of the memory cell available as the block output, or control whether the current content of the memory cell is fed back to the cell by the gated recurrent connection or not, i.e., it is forgotten.

As an example, if the output value of the forget-gate sigmoid is close to one and the output value of the input-gate sigmoid is also close to one, but the output-gate sigmoid is close to zero, then the cell memory will contain the sum of its previous content and current block input vector and the block output will be (approximately) a vector of all zeros the same dimensionality as the memory cell content — which is the same size as the input block vector. This affords the rest of the network using the gated cell block a great deal of learnable control over the information required to perform its assigned duties.
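The gate arithmetic in this example can be sketched as follows. This follows the simplified description in the text, with scalar gates and no squashing of the block input or cell content, so it is not a full LSTM. The parameter names and values are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gate(w, x, h_prev, bias):
    """Scalar gate: weighted sum of feedforward (x) and feedback (h_prev) inputs, then sigmoid."""
    return sigmoid(w @ np.concatenate([x, h_prev]) + bias)

def memory_step(x, h_prev, c_prev, params):
    """One update of the gated memory cell; returns (block output, new cell content)."""
    i = gate(params["w_in"], x, h_prev, params["b_in"])          # admit the input?
    f = gate(params["w_forget"], x, h_prev, params["b_forget"])  # keep the old content?
    o = gate(params["w_out"], x, h_prev, params["b_out"])        # expose the content?
    c = f * c_prev + i * x
    h = o * c
    return h, c

# Reproduce the case in the text: forget and input gates near one, output gate near zero.
n = 3
params = {
    "w_in": np.zeros(2 * n), "b_in": 20.0,
    "w_forget": np.zeros(2 * n), "b_forget": 20.0,
    "w_out": np.zeros(2 * n), "b_out": -20.0,
}
x = np.array([1.0, 2.0, 3.0])
c_prev = np.array([0.5, 0.5, 0.5])
h, c = memory_step(x, h_prev=np.zeros(n), c_prev=c_prev, params=params)
```

With these settings the cell accumulates the input on top of its previous content while the block output stays near zero, so the stored information is held back until the output gate opens.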

The autoencoder described in Figure 1.C works well for learning useful representations of fixed size data like still images or video from a given camera58. In principle, you could make it work with text data in the form of sentences for machine translation or documents for summarization, but processing text sequentially is convenient for many applications including, for example, continuous conversation and sentiment analysis59. Recurrent models are particularly useful for such applications and there is a variation on the autoencoder architecture we described earlier that has proved especially versatile for working with structured data including but not limited to text sequences.

The Long Short-Term Memory (LSTM) model was invented by Sepp Hochreiter and Jürgen Schmidhuber [57]. It was originally developed to deal with a problem in training recurrent models in which the gradient either grows without bound or shrinks to zero. The initial version of an LSTM block included memory cells plus input and output gates as described in our discussion of Figure 5.B. Forget gates were added later by Felix Gers [45]. A fully configured LSTM includes one or more memory cell blocks along with conventional multilayer network components that control the memory cell gates in order to anticipate the need for and remember state information for as long as is required.

Figure 5.C shows the LSTM model used as the recurrent module in an encoder-decoder architecture that can be trained for machine translation, dialogue management or code synthesis. Unlike the stacked encoder-decoder architecture of the autoencoder, here we have a separate encoder and decoder each of which is implemented as a recurrent neural network. The encoder ingests the input sentence, \({\langle{}w_{1},w_{2},w_{3},w_{4}\rangle{}}\), one word at a time starting with some initial thought vector encoding previously processed text and providing a context for understanding the next sentence in a document or conversation.

When the sentence is completely ingested, the encoder hands off the resulting thought vector to the decoder that generates the translation, \({\langle{}u_{1},u_{2},u_{3},u_{4}\rangle{}}\), one word or phrase at a time. The decoder enriches the thought vector it has been provided as its input in order to account for its extension of the ongoing translation. The decoder continues in this fashion until the translation is complete. In Figure 5.C there is just one LSTM network for the encoder and one for the decoder. The graphic shows the recurrent process as it unfolds over time one word at a time. The lengths of the two sentences needn't be the same.
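The hand-off from encoder to decoder can be sketched with a plain tanh recurrent update standing in for the LSTM. All weights are random and untrained, the word vectors are invented, and the decoder runs for a fixed number of steps, whereas a real decoder would decide for itself when the translation is complete.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 6   # hypothetical size of word vectors and thought vectors

# Hypothetical, untrained recurrent weights for the two networks.
W_enc_in = rng.normal(scale=0.3, size=(d, d))
W_enc_rec = rng.normal(scale=0.3, size=(d, d))
W_dec_rec = rng.normal(scale=0.3, size=(d, d))

def encode(word_vectors, thought):
    """Ingest the sentence one word at a time, updating the thought vector."""
    for w in word_vectors:
        thought = np.tanh(W_enc_in @ w + W_enc_rec @ thought)
    return thought

def decode(thought, n_steps):
    """Unroll the decoder from the encoder's thought vector, one state per output word."""
    states = []
    for _ in range(n_steps):
        thought = np.tanh(W_dec_rec @ thought)
        states.append(thought)
    return np.stack(states)

sentence = rng.normal(size=(4, d))             # four input words
thought = encode(sentence, thought=np.zeros(d))
decoder_states = decode(thought, n_steps=3)    # output length differs from input length
```

The only thing passed between the two networks is the fixed-size thought vector, which is why the input and output sequences are free to have different lengths.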

In production, the output of the decoder is sent to a softmax layer that generates a probability distribution over possible next words that is used to select the next word in the translation. Training data consists of source-language target-language sentence pairs, e.g., \({\langle{}\,\langle{}w_{1},w_{2},w_{3},w_{4}\rangle{},\,\langle{}v_{1},v_{2},v_{3},v_{4}\rangle{}\,\rangle{}}\). During training, the encoder-decoder is provided with such a pair and the objective function takes into account the difference between the supplied target, \({\langle{}v_{1},v_{2},v_{3},v_{4}\rangle{}}\), and the generated translation, \({\langle{}u_{1},u_{2},u_{3},u_{4}\rangle{}}\).
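A sketch of the output layer and training objective follows. The text does not say how the difference between target and generated translation is measured; cross-entropy against the target word indices is assumed here as one common choice, and the vocabulary size and weights are hypothetical.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a vector of scores."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def next_word_distribution(decoder_state, W_vocab):
    """Probability distribution over the vocabulary for the next word."""
    return softmax(W_vocab @ decoder_state)

def sequence_loss(decoder_states, target_ids, W_vocab):
    """Average negative log-probability assigned to each target word."""
    log_probs = [np.log(next_word_distribution(s, W_vocab)[t])
                 for s, t in zip(decoder_states, target_ids)]
    return -float(np.mean(log_probs))

rng = np.random.default_rng(0)
vocab_size, d = 7, 4
W_vocab = rng.normal(size=(vocab_size, d))    # hypothetical output projection
decoder_states = rng.normal(size=(3, d))      # one decoder state per output position
target_ids = [2, 0, 5]                        # indices of the target words

probs = next_word_distribution(decoder_states[0], W_vocab)
predicted_word = int(np.argmax(probs))        # greedy choice of the next word
loss = sequence_loss(decoder_states, target_ids, W_vocab)
```

The loss falls as the model puts more probability on the supplied target words, and it is differentiable with respect to every weight in the encoder and decoder.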

Knowing the exact technical details regarding how LSTM technology works is not our goal here. The LSTM technology is now nearly two decades old and several related competing technologies have been developed in the meantime. The important takeaway is that the LSTM technology provided us with new capabilities and did so by using existing components and relying on fully differentiable networks that can be trained with gradient descent. An LSTM can learn to anticipate information that it will need later, store that information and maintain it until it is required. The information is stored in the form of a high dimensional vector, what we have been calling the thought vector, that allows us to encode abstract concepts in a native format that is completely compatible with our existing neural network technology. We can further enhance these capabilities by incorporating the attentional module in Figure 5.A.

In subsequent chapters we will look at network architectures that allow us to make complex predictions about the future and use those predictions to construct and execute plans to solve all sorts of practical problems from driving a car to writing a computer program. These architectures will depend upon the same basic components and training methods in much the same way that designing new computer architectures depends upon the same logic gates and memory devices as the computers we use today. Many of the recent innovations in the development of artificial neural networks have come about by figuring out better ways of training such networks that don't rely upon labeled data and that can be trained in stages by exposure to increasingly complicated learning environments much as a child learns by building new skills on top of existing ones throughout the decades-long developmental stages that set humans apart from any other organism that we know about.

As we follow this thread throughout the subsequent chapters, we will also be looking for opportunities to accelerate skill acquisition by artificial means in both humans and machines. The path to Matrix style uploading of new skills is probably either technically infeasible or simply too risky to perform on humans anytime soon. But it may be possible to develop AI systems dedicated to the education of each individual child in a way that was never practical for either public school systems or working parents. The implications of this for society could herald new hope for a broadly educated, well informed self-governing society.

1.14 Signifying Meaning

Words are signs and signs signify meaning. According to Charles Sanders Peirce60, one of the founders of semiotics61, there are three types of sign: icons, indexes and symbols. Words are symbols. This turns out to be important in communicating ideas that have a precise technical meaning, but not so much for communicating about more fluid concepts.

What about images? Images are also signs and signify meaning. We know how to represent images in a computer. Images are composed of pixels arranged in rectangular grids and pixels are represented as numbers encoded as bit vectors. Unfortunately, this representation isn't particularly useful for working with visual information in artificial neural networks. Let's consider the meaning of images, and we'll get to words and the fact that words are symbols in due course.

The meaning of words and images is all about context62. If we're looking at a photo of a barnyard with cows and pigs and a farmer sitting on a tractor, and we notice something the size of a small dog wearing what looks like a red hat, we might reasonably jump to the conclusion that it's a rooster. However, if someone were to cut out and then present us with just the pixels corresponding to a pig or the farmer's upper torso, we probably wouldn't have a clue what we were looking at.

From a linguistic standpoint, individual words have little meaning without some context in which to interpret their meaning. In conversation, the context of a spoken word has many levels: the words that immediately precede and follow, the last few statements, our previous conversations with the person we are speaking with, etc. We rely on context to imbue words with meaning in developing neural networks to handle natural language. Here is a good example of how, by taking inspiration from human cognition in the design of neural network architectures, we achieve a high degree of competency. This observation is not surprising given that we invented natural language to serve our purposes.

We represent words, phrases and larger fragments of language by embedding63 them as points in high-dimensional vector spaces so that nearby points are semantically related and different dimensions reflect different comparative characteristics along which words differ such that relatively simple operations, e.g., subtracting one vector from another, expose these differences and reveal these relationships. In this approach, the boundaries between words are not sharply defined and meaning has a tendency to drift as usage evolves and exposure to language changes over time, e.g. you start reading more Tolstoy and Dostoyevsky and fewer superhero comics.
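The vector arithmetic can be illustrated with a toy example. The two-dimensional embeddings below are hand-built for the illustration (one axis for royalty, one for gender) and are not learned from data; real embeddings have hundreds of dimensions whose meanings are not labeled.

```python
import numpy as np

# Hypothetical hand-built embeddings: axis 0 is "royalty", axis 1 is "gender".
embeddings = {
    "king":  np.array([1.0, 1.0]),
    "queen": np.array([1.0, -1.0]),
    "man":   np.array([0.0, 1.0]),
    "woman": np.array([0.0, -1.0]),
}

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def nearest(vector):
    """Return the vocabulary word whose embedding points most nearly the same way."""
    return max(embeddings, key=lambda word: cosine(embeddings[word], vector))

# Subtracting one vector from another exposes the dimension along which the words differ.
royalty_offset = embeddings["king"] - embeddings["man"]

# Adding that offset to another word moves it along the same dimension.
analogy = royalty_offset + embeddings["woman"]
answer = nearest(analogy)
```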

We can create embeddings of all sorts of abstract entities including relational graphs, computer programs, taxonomies, family trees, organizational charts, social networks, dynamical system models, etc. We can use such models to answer questions, "Who was Sophie's grandmother on her father's side?", make predictions, "What if the pressure relief valve failed?", and keep track of and reason about personal interactions, "Does Alan know Sherry has the keys?". AI systems can't automatically decide when to learn these models but that is largely because the current generation of AI personal assistants aren't smart enough [...] humans do this sort of thing all the time. Creating models and making use of them is an integral part of how we manage our complex lives, go about our everyday lives and conduct business.

1.15 The History of Ideas

The limitation to linearly-separable classifiers was considered so restrictive that it effectively discouraged a generation of computer scientists from pursuing research in this area. Academic advisers would often speak disparagingly about neural networks, joking that the second best approach to solving any problem was either an artificial neural network or genetic algorithms — proponents of which were likened to alchemists in their single-minded pursuit of a method for turning dross into gold, thereby sending a clear signal to their students not to waste time pursuing such solutions.

A number of determined researchers persisted and were able to demonstrate that networks with one or more hidden layers were able to learn much more complicated functions. These networks, often referred to as multilayer perceptrons, would resurface decades later as so-called deep neural networks. The reason for the delay can be attributed to several factors. Multilayer networks required more connections and hence more parameters; with more parameters to fit it was assumed that it would be easy to overfit, and, as a consequence, generalization and therefore performance on unseen data would suffer. There were also numerical problems with the existing optimization algorithms and the surface of the energy landscape was riddled with potholes corresponding to local minima for gradient methods to fall into or saddle points where the slopes (derivatives) in orthogonal directions are all zero, but which are not local extrema of the function64.

Among those who kept the faith, a small group of researchers who referred to themselves as the PDP group were able to make considerable theoretical progress even without the benefit of more powerful computing resources. Some of the most prolific included David Rumelhart, Jay McClelland, Geoffrey Hinton, Terrence Sejnowski, David Touretzky and Peter Dayan. Hinton in particular developed a huge number of sophisticated models, useful algorithms and clever insights. Together they created a wealth of science and technology that would serve as a springboard when the tide turned in the late 20th and early 21st century and neural networks saw a reversal in fortune and a renaissance in the underlying theory and practical applications of neural networks.

It is perhaps worth pointing out that despite the contributions of the PDP group and those few stalwarts who followed in their footsteps, much of the opposition came from researchers in the United States who were convinced that symbolic methods and logic-based artificial intelligence were the best way to pursue AI. The 1980s saw the rise of expert systems and rule-based technologies promulgated by researchers at Stanford University including John McCarthy and Ed Feigenbaum. During the same time members of the PDP group and their colleagues in cognitive science were discovering how symbolic and connectionist approaches complemented one another, explaining how human beings solve complex problems.

During the 1980s and the decades following, interest in connectionist models remained relatively strong in Canada, Europe and Asia with Yoshua Bengio, Jürgen Schmidhuber and Kunihiko Fukushima among those making foundational contributions. During the same period, the PDP group produced a two-volume compilation of their research and that of their students and colleagues that consolidated their research and has provided a wonderful resource for the current generation of connectionists65.

The computational resources available to most researchers at the time were such that training was prohibitive or at the very least tedious — it is difficult to make progress when running one experiment takes days to complete with little or no intermediate indication that it will produce a good solution. In the intervening years the picture has changed dramatically. Personal computers and small servers were increasingly being networked, networking hardware was becoming faster, and even relatively small academic departments could afford to build clusters consisting of cheap commodity computers.

Practical parallel computing was still in its infancy but as innovations in semiconductor lithography increased the number and density of transistors you could fit on an integrated circuit, chip manufacturers started designing chips with multiple cores and dedicating silicon to SIMD66 hardware (implementing so-called single-instruction, multiple-data parallelism) and a few intrepid hackers were figuring out how to write parallel programs that ran on dedicated graphics processors [...] eventually better graphics, cell phone technology and the Internet would create an insatiable demand for computing resources [...] leading to the rapid deployment of cloud computing services and data warehouses sprinkled around the globe [...]

1.16 Key Ideas Summary

Here is a summary of the most important ideas covered in this chapter:

Networks with one or more hidden layers are more powerful than those with none;

Biological and artificial neural networks rely on different design principles;

ANNs exploit different opportunities for parallelism than biological networks;

Biological and artificial neural networks use similar computational strategies;

ANN vectors play a similar role to spiking neurons in biological networks;

Gradient descent relies on the network objective function being differentiable;

Convolutional neural networks work on large input layers by sharing weights;

A hierarchy of convolutional layers can learn features at multiple scales;

Autoencoders use unlabeled data to learn representations without supervision;

Recurrent networks extend feedforward networks using feedback to retain state67;

Attentional networks learn how to create an appropriate context for inference;

Encoder-decoder architectures make it possible to work with structured data.
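To make the weight-sharing item in the list above a little more concrete, here is a back-of-the-envelope sketch that counts trainable parameters. The image size (256 x 256, one channel), the 3 x 3 kernel and the helper names `dense_params` and `conv_params` are assumptions chosen for illustration; they do not come from the chapter.

```python
def dense_params(n_in, n_out):
    """Weights plus biases for a fully connected layer."""
    return n_in * n_out + n_out


def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    """Weights plus biases for a convolutional layer.

    The same kernel is reused at every image position, so the count
    does not depend on the size of the input layer.
    """
    return kernel_h * kernel_w * in_channels * out_channels + out_channels


# Assumed example: a 256 x 256 grayscale image mapped to a layer of the same size.
n_pixels = 256 * 256
dense = dense_params(n_pixels, n_pixels)   # every pixel connected to every unit
conv = conv_params(3, 3, 1, 1)             # one shared 3 x 3 kernel

print(f"fully connected: {dense:,} parameters")
print(f"convolutional:   {conv:,} parameters")
```

Under these assumptions the fully connected layer needs billions of parameters while the convolutional layer needs ten, which is the sense in which sharing weights lets a network work on large input layers.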

Nonlinear Functions

We make a rather big deal about linear systems in these pages. Linear functions are generally more tractable to manipulate mathematically. If you were to draw a Venn diagram, linear functions would look like a small circle surrounded by everything else, meaning all the nonlinear functions. Like a tiny kernel in the middle of a giant peach. Classifiers that have certain linear properties are easier to learn than those that don't. Linear systems are easier to control than nonlinear systems. Linear systems theory is pretty much a closed book: current textbooks look a lot like the textbooks that were printed 40 years ago.

Often complex systems include a combination of linear and nonlinear components, simplifying analysis somewhat, but there is always the concern that the nonlinear parts are the most important to understand68. I can't remember just now who first said it (it might have been Carver Mead), but I seem to recall I heard it from Bruno Olshausen: "It isn't surprising that neurons behave nonlinearly. What's surprising is that in combination they can manage to behave linearly." John von Neumann's ideas concerning the synthesis of reliable organisms from unreliable components were motivated by dealing with the unreliability of vacuum tube components for building computers, but apply equally well to biological systems.

One important consideration is that solving systems of linear equations can be done in polynomial time69. Linear systems theory70 is enormously useful in a wide range of applications from seismology to circuit design. Linear programming is often used to generate approximate answers to intractable problems. For example, many important optimization problems in operations research can be expressed as (mixed) integer linear programs71, which are notoriously hard to solve given that integer programming is NP-complete.

One of the easiest approximations is to reduce the problem to a linear program by relaxing the integrality requirement, solve the resulting linear program and then round the values of the original integer variables to satisfy the integrality requirement72. This approximation runs in polynomial time and often produces solutions that are competitive with those arrived at by (mixed) integer linear program solvers.
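The relax-and-round recipe can be sketched on a toy problem. The example below is my own illustration, not taken from the course materials: it uses the 0/1 knapsack problem, a simple integer linear program whose relaxation (each variable allowed anywhere between 0 and 1) happens to have a well-known greedy solution, so no LP solver is needed. The item values, weights and capacity are made up, and the function names are hypothetical.

```python
from itertools import product


def lp_relaxation_knapsack(values, weights, capacity):
    """Solve the LP relaxation of 0/1 knapsack (0 <= x_i <= 1).

    For this particular problem the LP optimum is obtained greedily:
    take items in decreasing value/weight order and split the first
    item that does not fit.
    """
    order = sorted(range(len(values)),
                   key=lambda i: values[i] / weights[i], reverse=True)
    x = [0.0] * len(values)
    remaining = capacity
    for i in order:
        if remaining <= 0:
            break
        take = min(1.0, remaining / weights[i])
        x[i] = take
        remaining -= take * weights[i]
    return x


def round_down(x):
    """Round fractional variables down, which keeps the solution feasible."""
    return [1 if xi >= 1.0 else 0 for xi in x]


def brute_force(values, weights, capacity):
    """Exact integer optimum by enumeration (exponential time)."""
    best = 0
    for choice in product([0, 1], repeat=len(values)):
        if sum(c * w for c, w in zip(choice, weights)) <= capacity:
            best = max(best, sum(c * v for c, v in zip(choice, values)))
    return best


# Made-up instance for illustration.
values = [60, 100, 120]
weights = [10, 20, 30]
capacity = 50

x_lp = lp_relaxation_knapsack(values, weights, capacity)
x_int = round_down(x_lp)
lp_bound = sum(v * xi for v, xi in zip(values, x_lp))
rounded_value = sum(v * xi for v, xi in zip(values, x_int))
optimal = brute_force(values, weights, capacity)

print("LP relaxation value (upper bound):", lp_bound)
print("rounded integer solution value:   ", rounded_value)
print("true integer optimum:             ", optimal)
```

On this instance the rounded solution is feasible but falls short of the true optimum, while the relaxation gives an upper bound on it. How close rounding gets depends heavily on the problem, so this should be read as a sketch of the idea rather than evidence about solution quality in general.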

No Magic Required

I've said that this chapter is an attempt to make sure that you understand exactly what an artificial neural network consists of. If you follow the presentation here, you should be able to look at an artificial neural network and break it down into simpler components until everything can be written down as an equation and that equation consists entirely of basic arithmetic operations, including additions, subtractions, and multiplications plus a few mathematical functions such as sines and cosines that are either familiar to you from high school math or easy to understand intuitively when plotted as a graph.
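As a small illustration of that claim, here is a sketch of a complete network written out as plain arithmetic. The architecture (two inputs, two hidden units, one output), the hand-picked weights and the choice of a rectified linear activation are all assumptions made for this example; nothing here is learned. The network computes exclusive-or on binary inputs, a function that a network with no hidden layer cannot represent.

```python
def relu(z):
    """Rectified linear unit: pass positive values through, clamp the rest to zero."""
    return max(0.0, z)


def forward(x1, x2):
    # Hidden layer: each unit is a weighted sum plus a bias, then the activation.
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: a weighted sum of the hidden units, with no activation.
    return 1.0 * h1 - 2.0 * h2


# Evaluate the network on all four binary input pairs.
outputs = {(a, b): forward(a, b) for a in (0, 1) for b in (0, 1)}
for inputs, y in outputs.items():
    print(inputs, "->", y)
```

Every step is an addition, a multiplication or a comparison with zero. Larger networks differ in the number of such steps and in how the weights are found, not in the kind of operation involved.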

I want you to contrast that level of understanding with our current understanding of biological neural networks composed of neurons. These are living cells that are constantly directing their genetic machinery to manufacture the molecules required not only to perform the complicated signaling that goes on when two neurons communicate with one another, but also to produce the proteins and lipids that are required to maintain their molecular integrity and generate the energy required for every operation carried out in the cell. And then there are the poorly understood glia, the dark matter of the brain. We do know a lot about neurons but I would hazard a guess that (a) there is much more that we don't know than we do, and (b) we are not even sure that what we think we know is true.

Anybody who tells you that artificial neural networks are nothing like biological neural networks is very likely to be right, but their confidence is unwarranted since at best they dimly perceive the true function of biological networks. Simply because no one does. Moreover, there may very well be a strong case to be made that at some level of description artificial and biological networks are more similar functionally than they are different, and that there is a great deal of value to be had by studying artificial neural networks if you want to understand biological ones. Later we will talk about different levels of abstraction and the overused and often abused concept of emergence. At that point, I hope we can make even more clear the benefits of studying artificial networks.

2 Accelerated Evolution of Artificial Intelligence

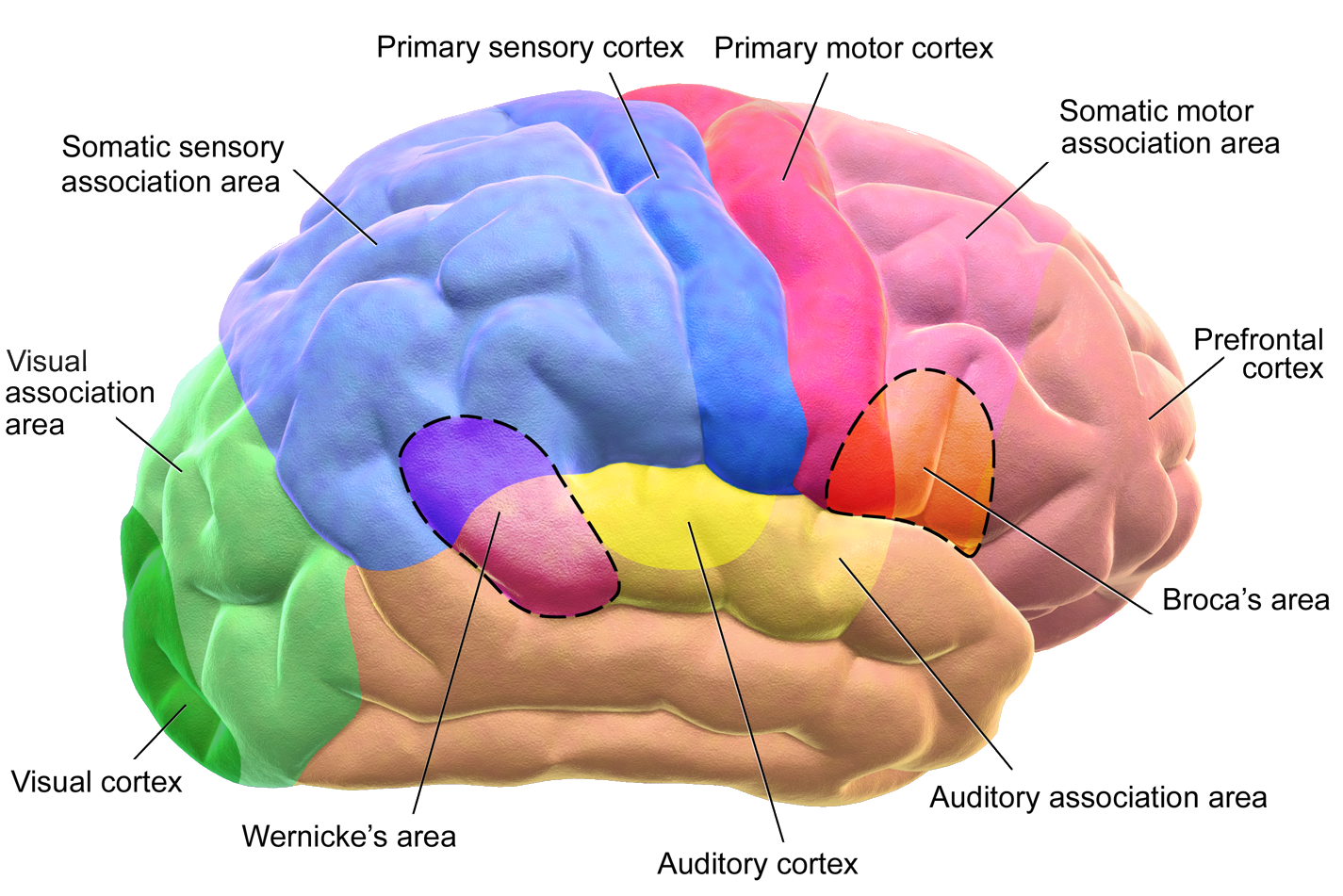

In this chapter, we investigate the prospects for building digital assistants that meet or exceed human performance in specialized technical areas and are capable of interacting with humans using natural language to collaborate and share knowledge. We draw upon research from cognitive and systems neuroscience for insight into the neural basis of human cognitive function. The sections on automated planning and natural language processing are a work in progress and will be fleshed out with help from our invited speakers and the students taking the class.

2.1 Technology Predictions

In the previous chapter we looked at some of the basic neural-network components from which more complicated network architectures are constructed. These components, referred to as Lego-block like networks, are the foundation for the current generation of artificial neural networks. The intention is to make it clear that there is no magic and demonstrate that the basic ideas are accessible to anyone with high-school math. In this chapter, we use those basic building blocks to predict the next generation of personal assistants. This exercise sets the stage for thinking about how the technology might help us deal with the problems facing us as a species.

To focus discussion, we consider a personal assistant that works with a software engineer in the role of an apprentice learning on the job, as was common in the guilds and trade associations of medieval cities and continues to this day in some crafts such as glassworking, albeit with better laws to protect the rights of employees. The programmer's apprentice we consider here is a novice programmer but has the intuitive skills of an idiot savant, given that the apprentice has a suite of powerful programming tools as an integral part of its brain. The assistant application allows us to explore the important role of language in solving problems and the technical challenges in exploiting cognitive enhancements.

While the next generation of AI systems will include personal assistants familiar to those of us who have sampled the current generation of consumer products, we can also expect systems that exhibit greater autonomy, improved communication skills and specialized expertise on a par with or exceeding that of humans lacking augmentation. We can also expect technology that will make it possible for us to seamlessly integrate advanced capabilities with our innate human cognitive apparatus utilizing increasingly powerful and invasive brain-computer interfaces. It is inevitable that consumers will crave and engineers will invent technologies that provide an edge, one that will quickly become the new norm.

This will be just the beginning of how intelligent machines become more deeply integrated into human society, both as cognitive enhancements and as autonomous agents that will no doubt become increasingly demanding of rights on a par with those accorded humans. In the near term we have an opportunity to solve a wide range of human problems even as we come to grips with the possibility of AI systems that deserve to be treated with the same respect we currently reserve for one another. The goal here is not to anticipate cross-species conflict or suggest how we might resolve such conflicts. The goal is to explore the options for our own evolution even as we invent the possibilities for our own creations to evolve.

Making predictions even a few years out is difficult. Making predictions a few decades out is best left to science fiction writers. Accurate prediction is made much harder as a consequence of technologies accelerating the pace of change to such an extent it is almost impossible for us to really comprehend. In any case, long-term prediction is a fool's errand and we are generally better off thinking about how to apply ourselves to solving today's problems given that they aren't likely to disappear without our intervention. In this and subsequent chapters, we focus on how to make the best of what is likely to transpire technologically in the next ten years or so.

2.2 Thinking About Thinking

This chapter provides the technical basis for making reasonable predictions about the development of AI technology over the next decade. I think it's important the reader understand the technology well enough to form their own opinion about how this technology might make a difference within our lifetimes. The story is complex in large part because it involves a merging of human and machine intelligence. The focus in this chapter is on how our understanding of one informs the design of the other.

But there is another advantage of the way this chapter and the book as a whole are organized. Artificial neural networks are simpler than biological neural networks. They were developed to understand biological networks as computing machines. In much the same way as the digital abstraction makes it possible to design digital computers with analog components, the artificial neural network abstraction (ANNA) allows us to ignore the details of how neurons function while retaining a level of description that provides insight into how humans think.

Cognitive neuroscientists routinely employ concepts from artificial intelligence in order to explain complex phenomena that would be difficult to explain in terms of genes, molecules and basic chemistry. ANNA serves to bridge the conceptual gap between neurons and behavior. The goal is to use ANNA to explain how human brains accomplish basic cognitive skills, to demonstrate how machines can emulate such skills, and to describe how we can engineer autonomous systems and powerful cognitive prostheses that enhance our innate cognitive abilities.

The reader is led to imagine a recurring cycle of activity that begins at the periphery of our bodies as we gather information through our senses and engage with the world through our limbs. Information from multiple sensory modalities is combined to create abstract representations that we consciously choose to attend to and by doing so conjure up memories of related experience that we then apply to engaging both the complex physical world that we inhabit and the equally complex intellectual world that defines our social and cultural milieu.

This cycle is explored further by considering how we can take what we have learned about human cognition to build artificial systems of the sort alluded to above. In this case, we consider the design of a highly capable digital apprentice that would work with and learn from a human software engineer in writing computer programs as an initial step in bootstrapping the next generation of autonomous agents and ultimately creating a world in which intelligent machines and augmented and baseline humans live and work together toward shared goals.

2.3 Programmer's Apprentice

The original programmer's apprentice was the name of a project initiated at MIT by Chuck Rich, Dick Waters and Howie Shrobe to build an intelligent assistant that would help a programmer to write, debug and evolve software. Our version of the programmer's apprentice is implemented as an instance of a hierarchical neural network architecture. It has a variety of conventional inputs that include speech and vision, as well as output modalities including speech and text. In these respects, it operates like most existing commercial personal assistants.

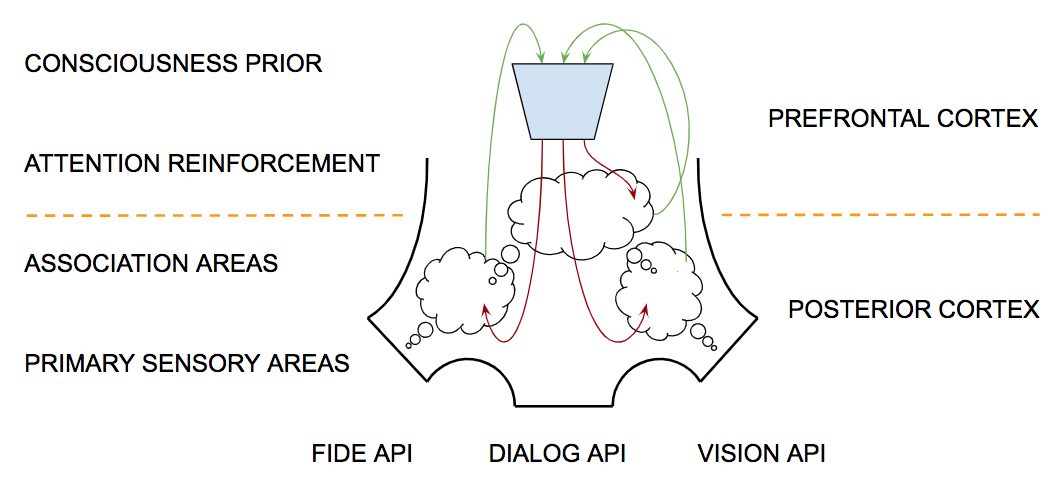

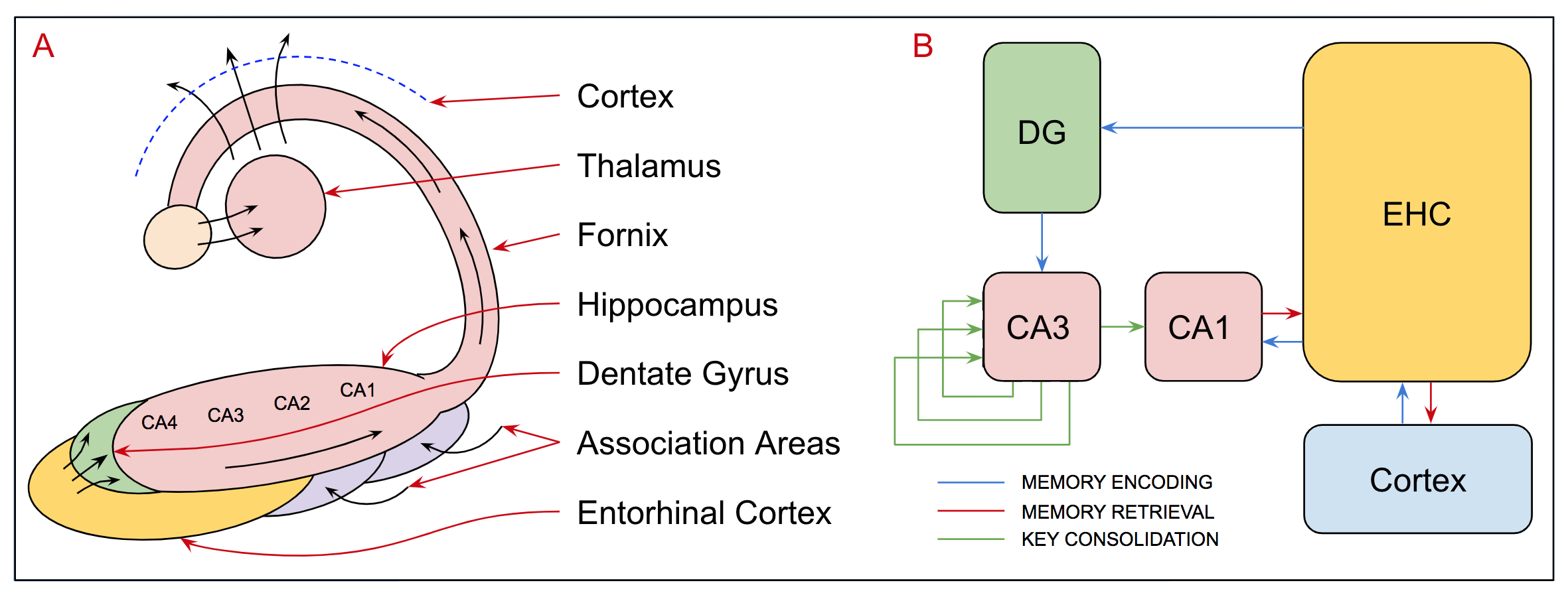

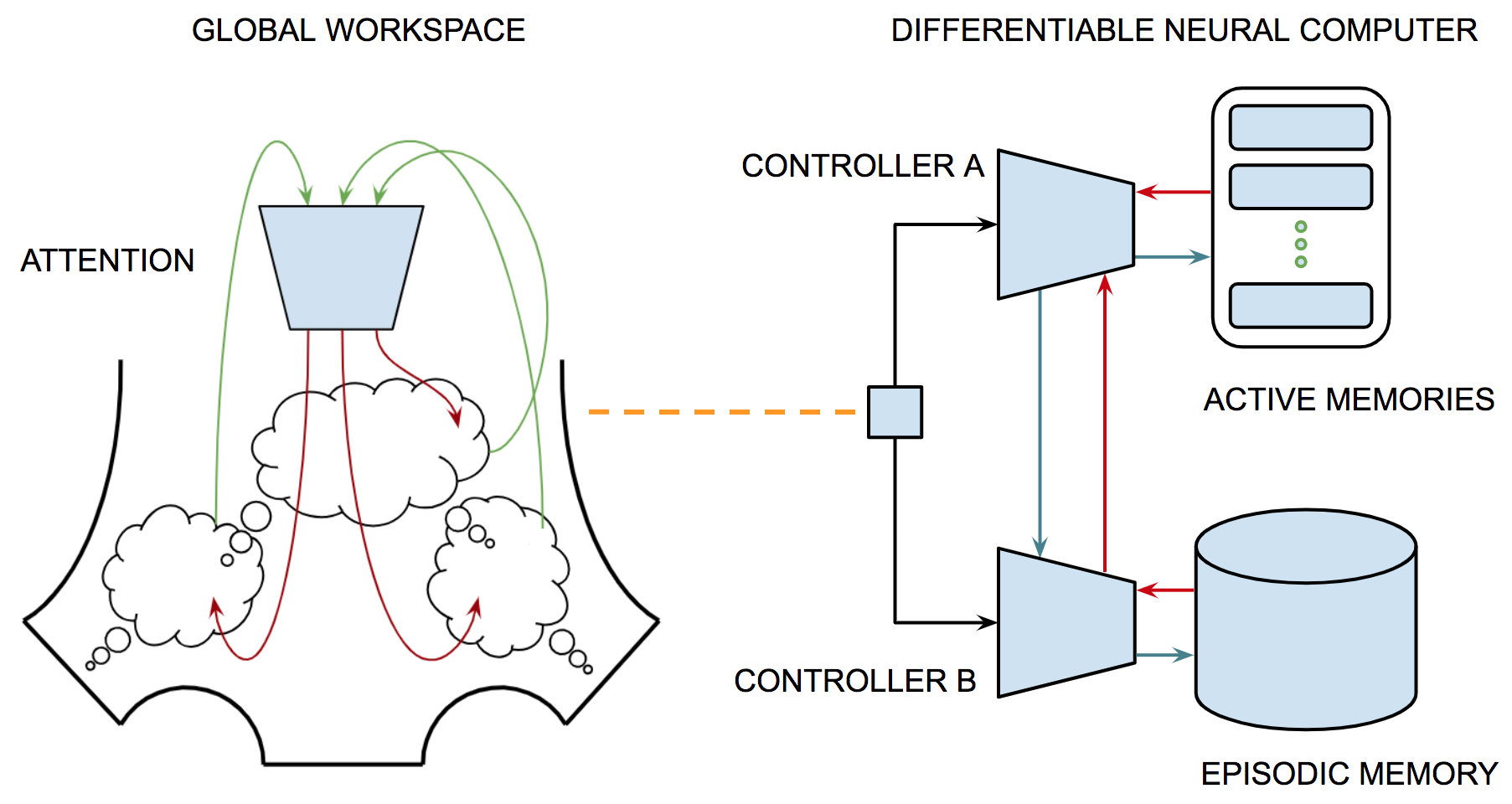

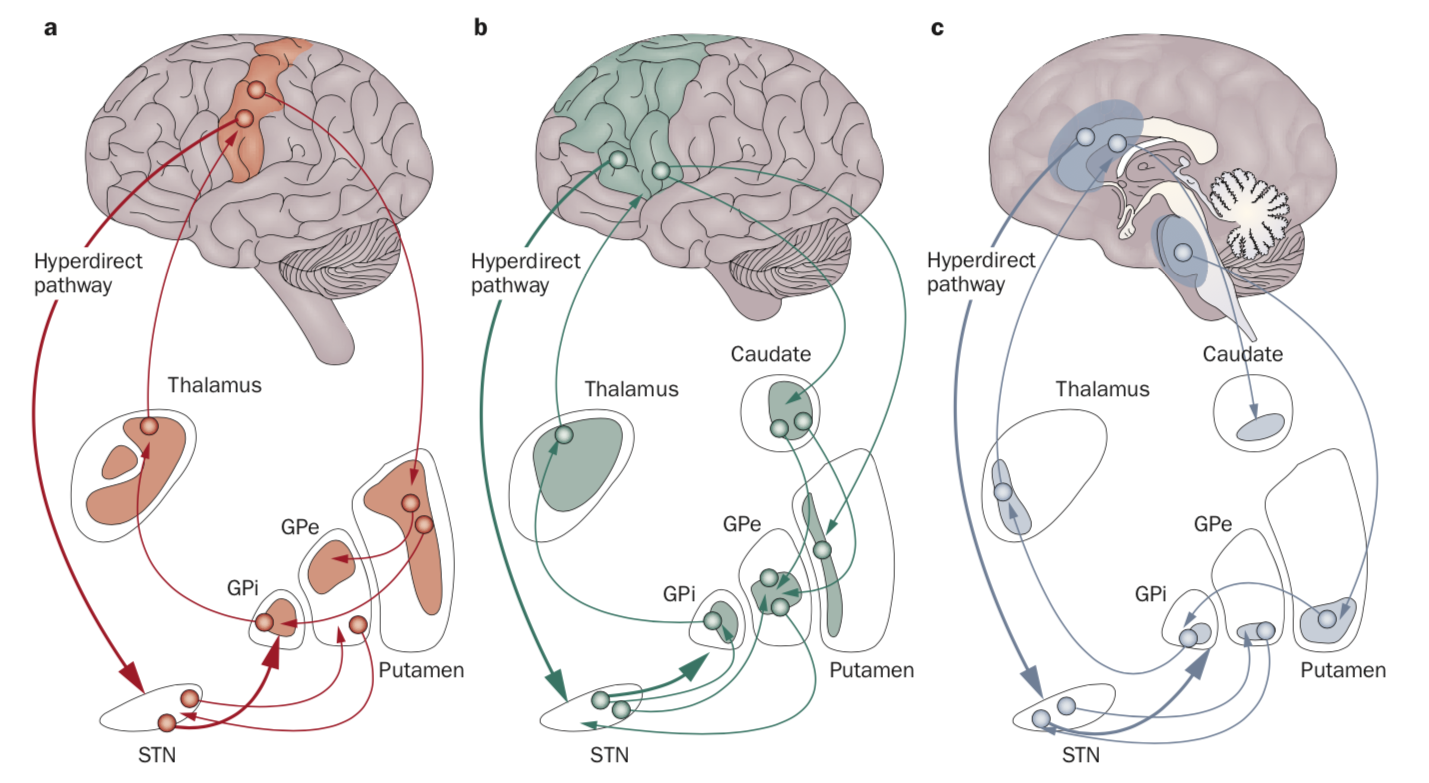

It differs substantially, however, in terms of the way in which the apprentice interacts with the programmer. It is useful to think of the programmer and apprentice as pair programming, with the caveat that the programmer is in charge, knows more than the apprentice does (at least initially), and is invested in training the apprentice to become a competent software engineer. One aspect of their joint attention is manifest in the fact that they share a browser window. The programmer interacts with the browser in a conventional manner while the apprentice interacts with it as though it is part of its body, directly reading and manipulating the HTML using the browser API. The browser serves both programmer and apprentice as an encyclopedic source of useful knowledge as well as another mode of interaction and teaching.