Research Discussions

The following log contains entries starting several months prior to the first day of class, involving colleagues at Brown, Google and Stanford, invited speakers, collaborators, and technical consultants. Each entry contains a mix of technical notes, references and short tutorials on background topics that students may find useful during the course. Entries after the start of class include notes on class discussions, technical supplements and additional references. The entries are listed in reverse chronological order with a bibliography and footnotes at the end.

May 11, 2017

Stephen Plaza in collaboration with Shinya Takemura and their collaborators at HHMI Janelia Farm Research Campus run the FlyEM Project. Stephen sent us an early draft of the Drosophila Mushroom Body Dataset available to students taking CS379C interested in structural connectomics projects or combined structural and functional alignment. Regarding the draft data, Stephen writes, "To clarify, the dataset is complete in terms of neuron morphology and connectivity detail. It is only missing the grayscale and near pixel perfect segmentation of neurons."

For those of you familiar with Drosophila Seven-Column Medulla dataset, Stephen notes that "You will [need → "meet" or "find"] interesting motifs (that are not in the optic lobe) with multiple KC [Kenyon Cell] neurons synapsing at the same location of an MBON [Mushroom Body Output Neuron] dendrite. We often call these sites convergent synapses. It appears that the KC is probably connecting to a neighboring KC and the MBON. It is possible that multiple KCs at the site need to be 'active' for the MBON to be active there."

Here are a few review papers that will introduce you to the mushroom body and related parts of the olfactory system: [9] PDF, [153] PDF, [21] PDF, [71] PDF, and [109] PDF and here is a snapshot of the Mushroom Body Dataset. The tar ball (TAR) includes json files containing Python dictionary data structures describing neurons, T-bar structures, and synapses as well as skeletons for all of the reconstructed neurons. For those of you familiar with the Seven-Column Medulla Dataset, the format is almost identical:

% du -h

41M ./mb6_skeletons_7abee

220M .

pystat -v ./mb6_skeletons_7abee/ → 36,549,715 bytes (43.1 MB on disk) for 2,400 skeletons

% cat annotations-body_7abee_201705_11T234538.json | grep -i "body id" | wc -l → 2,434 neurons

% cat mb6_synapses_10062016_7abee_all.json | grep -i "T-bar" | wc -l → 91,443 T-bar structures

% cat mb6_synapses_10062016_7abee_all.json | grep -i "body id" | wc -l → 317,998 T-bar + synapses

% cat mb6_synapses_10062016_7abee_kc_roi_alpha.json | grep -i "T-bar" | wc -l → 13,664 T-bar structures

% cat mb6_synapses_10062016_7abee_kc_roi_alpha.json | grep -i "body id" | wc -l → 48,210 T-bar + synapses

@article{AsoetallELIFE-14,

author = {Aso, Y. and Hattori, D. and Yu, Y. and Johnston, R. M. and Iyer, N. A. and Ngo, T. T. and Dionne, H. and Abbott, L. F. and Axel, R. and Tanimoto, H. nd Rubin, G. M.},

title = {The neuronal architecture of the mushroom body provides a logic for associative learning},

journal = {Elife},

volume = {3},

year = {2014},

pages = {e04577},

abstract = {We identified the neurons comprising the Drosophila mushroom body (MB), an associative center in invertebrate brains, and provide a comprehensive map describing their potential connections. Each of the 21 MB output neuron (MBON) types elaborates segregated dendritic arbors along the parallel axons of approximately 2000 Kenyon cells, forming 15 compartments that collectively tile the MB lobes. MBON axons project to five discrete neuropils outside of the MB and three MBON types form a feedforward network in the lobes. Each of the 20 dopaminergic neuron (DAN) types projects axons to one, or at most two, of the MBON compartments. Convergence of DAN axons on compartmentalized Kenyon cell-MBON synapses creates a highly ordered unit that can support learning to impose valence on sensory representations. The elucidation of the complement of neurons of the MB provides a comprehensive anatomical substrate from which one can infer a functional logic of associative olfactory learning and memory.}

}

@article{ShaoetalTBE-14,

author = {H. C. Shao and C. C. Wu and G. Y. Chen and H. M. Chang and A. S. Chiang and Y. C. Chen},

title = {Developing a Stereotypical Drosophila Brain Atlas},

journal = {{IEEE Transactions on Biomedical Engineering}},

volume = {61},

number = {12},

year = {2014},

pages = {2848-2858},

abstract = {Brain research requires a standardized brain atlas to describe both the variance and invariance in brain anatomy and neuron connectivity. In this study, we propose a system to construct a standardized 3D Drosophila brain atlas by integrating labeled images from different preparations. The 3D fly brain atlas consists of standardized anatomical global and local reference models, e.g., the inner and external brain surfaces and the mushroom body. The averaged global and local reference models are generated by the model averaging procedure, and then the standard Drosophila brain atlas can be compiled by transferring the averaged neuropil models into the averaged brain surface models. The main contribution and novelty of our study is to determine the average 3D brain shape based on the isosurface suggested by the zero-crossings of a 3D accumulative signed distance map. Consequently, in contrast with previous approaches that also aim to construct a stereotypical brain model based on the probability map and a user-specified probability threshold, our method is more robust and thus capable to yield more objective and accurate results. Moreover, the obtained 3D average shape is useful for defining brain coordinate systems and will be able to provide boundary conditions for volume registration methods in the future. This method is distinguishable from those focusing on 2D + Z image volumes because its pipeline is designed to process 3D mesh surface models of Drosophila brains.},

}

@article{CampbellandTurnerCURRENT-BIOLOGY-10,

author = {Robert A.A. Campbell and Glenn C. Turner},

title = {The mushroom body},

journal = {Current Biology},

volume = {20},

number = {1},

pages = {R11-R12},

year = {2010},

abstract = {The mushroom body is a prominent and striking structure in the brain of several invertebrates, mainly arthropods. It is found in insects, scorpions, spiders, and even segmented worms. With its long stalk crowned with a cap of cell bodies, a GFP-labeled mushroom body certainly lives up to its name (Figure 1). The mushroom body is composed of small neurons known as Kenyon cells, named after Frederick Kenyon, who first applied the Golgi staining technique to the insect brain. The honey bee brain, for instance, contains roughly 175,000 neurons per mushroom body while the brain of the smaller fruit fly Drosophila melanogaster only possesses about 2,500. Kenyon cells thus make up 20\% and 2\%, respectively, of the total number of neurons in each insect’s brain. Kenyon cell bodies sit atop the calyx, a tangled zone of synapses representing the site of sensory input. Projecting away from the calyx is the stalk comprised of Kenyon cell axons carrying information away to the output lobes. }

}

@article{HeisenbergNATURE-REVIEWS-NEUROSCIENCE-03,

author = {Heisenberg, M.},

title = {Mushroom body memoir: from maps to models},

journal = {Nature Reviews Neuroscience},

volume = {4},

number = {4},

year = {2003},

pages = {266-275},

abstract = {Genetic intervention in the fly Drosophila melanogaster has provided strong evidence that the mushroom bodies of the insect brain act as the seat of a memory trace for odours. This localization gives the mushroom bodies a place in a network model of olfactory memory that is based on the functional anatomy of the olfactory system. In the model, complex odour mixtures are assumed to be represented by activated sets of intrinsic mushroom body neurons. Conditioning renders an extrinsic mushroom-body output neuron specifically responsive to such a set. Mushroom bodies have a second, less understood function in the organization of the motor output. The development of a circuit model that also addresses this function might allow the mushroom bodies to throw light on the basic operating principles of the brain.},

}

@article{McGuireetalSCIENCE-01,

author = {McGuire, S. E. and Le, P. T. and Davis, R. L.},

title = {The role of {D}rosophila mushroom body signaling in olfactory memory},

journal = {Science},

volume = {293},

number = {5533},

year = {2001},

pages = {1330-1333},

abstract = {The mushroom bodies of the Drosophila brain are important for olfactory learning and memory. To investigate the requirement for mushroom body signaling during the different phases of memory processing, we transiently inactivated neurotransmission through this region of the brain by expressing a temperature-sensitive allele of the shibire dynamin guanosine triphosphatase, which is required for synaptic transmission. Inactivation of mushroom body signaling through alpha/beta neurons during different phases of memory processing revealed a requirement for mushroom body signaling during memory retrieval, but not during acquisition or consolidation.}

}

May 5, 2017

Data Alignment Problems

Despite the claim of its being a full reconstruction, most cell bodies in the seven-column data are not included. Technically, the claim is valid, since in most cases the cell bodies are not even in the medulla. Stephen Plaza said this is due to a limitation of earlier FIBSEM technology, and, while the problem does not arise in aligning the whole fly brain connectome, it undermines our original idea of aligning the known locations of cell bodies in the EM data with the 2PE emissions / putative cell-body locations in the calcium imaging data. However, while we don't know the locations of most cell bodies, we do know most of the structure of the axonal and dendritic arbors as well as location of most if not all the locations of synapses arranged on the T-bar structures typical of most fly neurons. Unfortunately, the synapses do not express the fluorescent calcium indicators, indeed it would seem we might expect very little in terms of fluorescent excitation within the medulla proper.

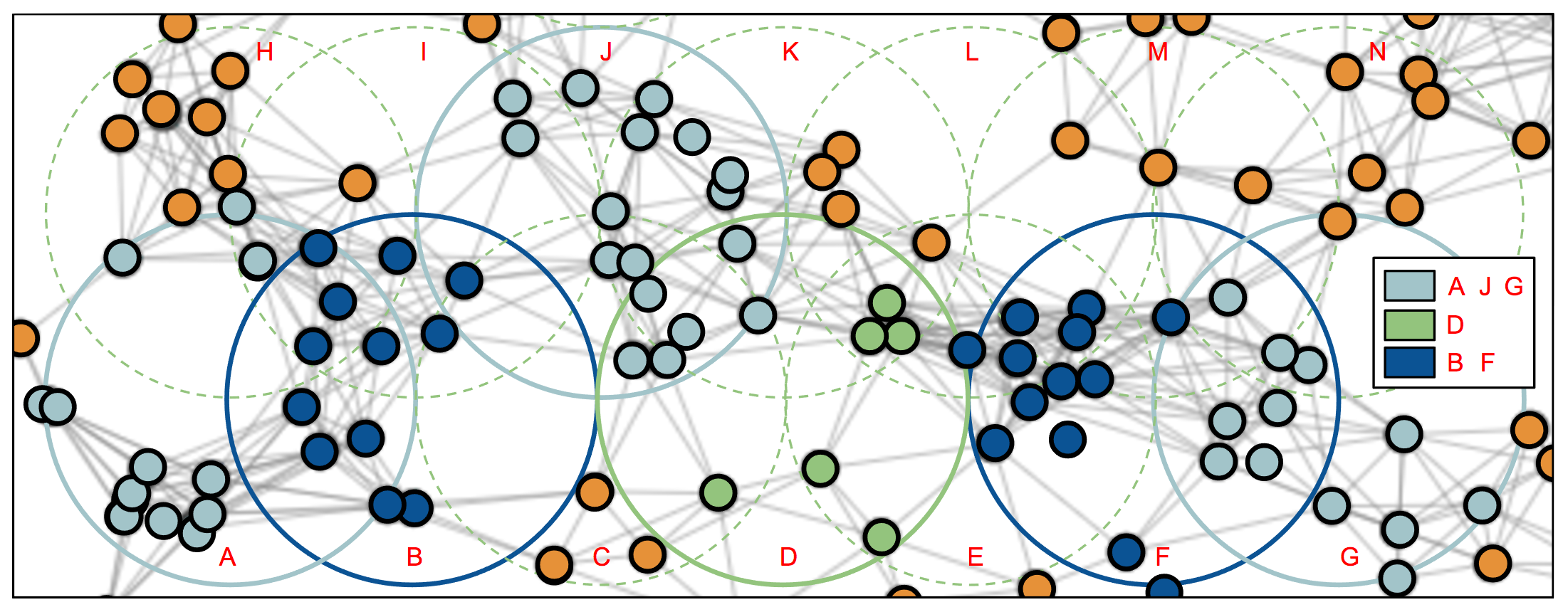

| |

| Figure 1: This slide shows a cloud of points that reveal the outlines of seven neurons called type-one medullary intrinsic neurons or Mi1 for short. The points of the central column Mi1 are blue, and those of the surrounding six Mi1 neurons are shaded green. I’ve fit a line to each neuron: red for the central-column Mi1 and yellow lines for the adjacent Mi1 neurons. The data is from the HHMI Janelia Research Campus FlyEM Project. | |

|

|

Stephen and Shinya Takemura suggested that, since neurons are identified by their cell types (Tm, Dm, Mi, etc) and their position and morphology is significantly stereotyped, we could use full reconstructions found in the literature to predict exactly where their cell bodies would lie just outside or within the tracts / bundles around the periphery of the medulla1. Alternatively, depending on the type of microscopy employed for calcium imaging, e.g., some variant of confocal microscopy could work, we might be able to construct a distinctive pattern using the full skeletons of those neurons, e.g., Mi1 and Tm1, whose processes are entirely contained within the columns of the medullar neuropil—see Figure 1.

By using the skeletons to register the seven column data, we would not immediately have the aligned cell bodies, but we would be in a much better position to estimate their position, making additional changes to the alignment in order to find the best alignment with the functional data. Just to be clear matching a pattern constructed from the full skeletons of column bound neurons would have to be done relying upon confocal imagery alone; I am presuming the imagery data could be acquired prior to running the experimental protocol and recording the florescent proxy for the calcium flux.

| |

| Figure 2: A 3D mesh—rendered here using MeshLab—reconstruction of the Drosophila half brain employed by the Insect Brain Name Working Group in developing a hierarchical nomenclature system for the major brain regions and fiber bundles found in insect brains, using the brain of Drosophila melanogaster as the reference framework. This nomenclature is intended to serve as a standard of reference for the study of the brains of Drosophila and other insects [81]. The region shaded pink in the above graphic corresponds to the medulla. | |

|

|

Fly Datasets and Tools

We've been working with two lower-resolution datasets to facilitate registration: the Adult Drosophila Brain (2010) from the HHMI Janelia Farm Research Campus (JFRC) and the Adult Drosophila Half Brain (2014) from the Insect Brain Name Working Group (IBNWG) —see Figure 2. Both datasets include image stacks, 3D mesh templates, and image masks for 50 neuropil domains including medulla.

For analysis and visualization, we're using a number of tools including: Vaa3D from JFRC and the Allen Institute for Brain Science (AIBS), ImageJ from NIH, open-source tools for working with points clouds CloudCompare and 3D mesh models MeshLab, and a Python library NeuroBot for working with the JFRC Seven-Column Medulla dataset [CODE].

Alignment Algorithms

Here is a first pass at an algorithm for aligning the functional and structural datasets, taking into account the issues raised above: The first step is to construct a morphologically-unique 3D pattern using structural features from the JFRC dataset, for example, by using the skeletons of columnar-bound intrinsic neurons fully contained within the medulla (Figure 1), in order to identify the location and orientation of the corresponding seven columns in Sophie's data. The pattern is basically a 3D binary image that we match against 3D whole or half-brain image—an image of just the medullar neuropil is sufficient—generated using a light source with a broader spectrum than the fluorescent excitation light and perhaps a different microscope than the epifluorescence microscope used to acquire the calcium imagery2.

Once we know the location of the seven columns in Sophie's fly data, we need to identify the cell bodies of the neurons whose processes are represented / largely contained within the seven-column volume. Given that most if not all of the these cell bodies will be located outside the seven-column volume, we need to extend this volume to include the extra-medullar regions likely to contain these cell bodies. Having identified a minimal volume V in which to search for the cell bodies of medullar neurons, we need to construct a dataset corresponding to a 3D V-shaped slice out of the calcium indicator time-series recordings.

| |

| Figure 3: The upper green rectangle with the inset reddish-hued 2PE image slice—rendered here using Fiji—is superimposed on a cross-section of the IBNWG half-brain—rendered using Vaa3D, and represents the approximate location of the functional data that Sophie Aimon sent us in April. For the purpose of aligning the JFRC seven-column data with the medulla, we need functional data from the region roughly corresponding to lower green rectangle. | |

|

|

Figure 3 shows the approximate location of the region of adult fly brain that was imaged in the data that Sophie sent us in April—see the inset reddish-hued 2PE image slice in the upper green rectangle shown in Figure 3. For our present purposes, we need functional data from the region roughly corresponding to lower green rectangle in Figure 3. We also need 3D light imagery of the region for the purpose of alignment.

Finally, we should think about how we can verify that we have correctly aligned the seven columns with the functional recordings. For one thing, we know from Shinya Takemura [171, 172] that the tissue corresponding to the seven-column data was approximately from the center of the visual field. We will be able to check on this in silico using the reference frame provided by the Ito et al [81] data. In addition, Art Pope and I discussed various experimental stimuli3 and fiducial labeling strategies4 that might help us to determine whether we have correctly identify the seven-column sub volume.

Functional Analyses

There are a number of ways in which we could succeed in this endeavor and related ways in which we could capitalize on our success—all of them listed here assume some success in matching the seven-column-pattern to some location and orientation in the medulla that satisfies the centrality constraint and bears up under visual scrutiny:

We (a) obtain one stand-out match and (b) succeed in lining up the corresponding extra-medullar cell bodies.

We obtain multiple matches with approximately the same score, and achieve (b) for each one of these matches.

We (a) obtain one stand-out match but (b) we fail in lining up the corresponding extra-medullar cell bodies.

Case #1 is the ideal case and it provides the opportunity to generate time series of transmission-response matrices [37] and perform analyses using tools from graph-theory, e.g., spectral methods [113], and algebraic topology, e.g., persistent homology [26, 99]. In Case #2, we can treat the seven-column pattern as a motif finder [85, 179, 74, 96, 97] that can be used to statistically analyze the corresponding collocated functional and structural domains, even though we can't map fluorescent emissions directly to nodes (neurons) in the connectome graph.

Case #3 offers the opportunity to apply unsupervised machine learning methods to automatically sort out the connections by using the connectome graph, the functional data and a set of proposals for mapping fluorescent emissions to nodes in the connectome graph. Basically, we assign each cell body in the seven-column structural data a set of candidate locations in the functional data and then train a network with a loss function that measures how well different assignments predict the next neural state vector. I realize these descriptions are too brief to be of much use to anyone who can't read my mind, but perhaps they'll motivate you to start thinking about what sort of science we can enable in this project.

April 27, 2017

A few observations and suggestions concerning the problem of aligning functional and structural data. First, our basic task is to fit a point cloud corresponding to the coordinates of the non-zero voxels in some thresholded version of Sophie's data to the mesh, i.e., find the orientation and scaling parameters that "optimally" enclose this point cloud. Once we have that we can use the medulla model to identify all putative neurons in the medulla and construct a point cloud for these neurons that we can use to find correspondences with the seven-column cell bodies. Here are some notes relating to implementation5:

I was able to use a 2008 MacPro server with 8GB and 8 cores to run ImageJ and allocated 7GB to the application. It eventually ran out of memory on even the smallest of Sophie's datasets, but basically did fine for viewing for long enough to be useful before it froze;

The raw video shows the outlines of the (outer surface of the) brain tissue, well enough that we can probably get some accurate initialization alignment points to make it easier to fit the data;

I found that the preprocessed video is best viewed by applying the 16 Color LUT — click the >> icon on the far right hand side of the tools menu, and select LUT which will put the LUT options sub menu on the main tools menu;

In this mode, you should see a lot of activity as you move through the center of the stack. In my case, most of the screen was light blue, the majority of cell bodies yellow but with a few other colors.

I am using MeshLab for viewing mesh models and CloudCompare for point clouds. Thanks to Art for pointing out these tools as they are — for the most part — professionally engineered tools that scale to accommodate large datasets;

There are a bunch YouTube videos showing how to use these tools to register / align point clouds and mesh models. One problem I anticipate involves registering a point cloud in which the points (a) reside in the interior as well as on the surface, and (b) are sparse and don't represent a random sample ... indeed, they very sparse in some peripheral areas of the brain. It may be possible to segment out the coronal cross sections of the brain well enough to define the exterior surface and then densely sample points on each such cross section to create a point cloud of the whole brain consisting of only points on the brain tissue surface;

Given a point cloud of the sort described in item 6 it should be relatively easy register the cloud with the JFC [83] (Janelia Farm Campus) mesh model using an affine transformation. There are also tools that can compute non-affine transformations to handle non-uniform deformations. This may be overkill if we use physics-based deformable (spring) models to estimate different possible alignments. Fortunately, Art knows a lot about this sort of problem and the existing algorithms and tools for solving them;

I didn't look very hard but so far I haven't found a 3D mesh of the medulla, though Virtual Fly Brain — VFB_BRAIN and VFB_HALF_Brain — claims to have (or soon have) them. However, I did find a stack of annotated coronal-cross-sectional images that clearly show the boundaries of the medulla and these masks directly map to the whole-brain mesh model I sent around earlier;

Once we register the CI image point cloud with the JFC mesh model we should be able to use the same transformation on the stack of medulla-image masks to determine a point cloud consisting of just neurons residing within the medulla;

The final step is to align the point cloud corresponding to the coordinates of the cell bodies in the seven-column dataset with the point cloud consisting of the CI identified medullar cell bodies;

April 25, 2017

You can get the general idea of what I expect for the final project proposals from the archived class web pages, but it's important to note that the content of the proposals depends very much on the topic for each year.

In 2013, the focus was on identifying technologies that would mature in 2, 5 and 10 years and that could fundamentally change how we do neuroscience:

Neuroscientists have by and large failed to take advantage of the exponential trend in computational power known as Moore’s Law. In this class, we investigate new approaches to scalable neuroscience that might enable systems neuroscience to exploit the accelerating returns from recent advances in sensing and computation.

Projects (see here) were in the form of a term paper:

Grading is based on a midterm white paper [the proposal] and a final technical report [the project] evaluating an appropriate technology selected in collaboration with the instructor. Examples include nanoscale networks, photo-acoustic microscopy, high-intensity focused ultrasound and computer-vision-based analysis of micrographs.

The results were combined into a jointly-authored arXiv paper describing our findings entitled On the Technology Prospects and Investment Opportunities for Scalable Neuroscience, available in HTML and PDF formats, that was published on arXiv, widely circulated in the community, and quite influential.

In 2012, the focus was on modeling neural systems and analyzing data:

This year students in CS379C will have the opportunity to interact with some of the most innovative scientists and engineers working in systems neuroscience today. We will study their methods and hear directly from them about the challenges they face, some of which the students can actually help out with now.

Projects (see here) were in the form of writing code and testing systems:

Projects will include replicating and evaluating existing computational models and implementing novel models that extend or combine the features of existing ones.

This year the focus is on learning models from large datasets:

This year's class focuses on inferring computational models from neural-recording data. Lectures, invited speakers and projects all emphasize functional rather than structural inference. [...] We will arrange access to large functional datasets along with tools for working with such datasets and suggestions for modeling methods and machine learning technologies for performing inference.

The class discussion includes a number of project ideas and I've mentioned others in class. I'm not a stickler for the exact format and I've taken pains not to stifle your creativity, but I expect you to make a best effort to align your interests with the focus spelled out in the course description and reiterated above. Here are some ideas that you should be able to expand into a 300-word pre-proposal:

Use the new C. elegans BIORXIV 2017 data from Andrew Leifer's lab and apply the techniques that Saul Kato described to build and compare a dynamical systems model.

Analyze the Kato CELL-2015 and Leifer PNAS-2016 data using a more powerful dimensionality reduction technique and compare to the original Kato model.

Compare the mouse striate cortex resting state (no stimulus) activity in the Pachitariu data with the drosophila (no stimulus) activity in the Aimon data.

Compare the mouse striate cortex resting state (no stimulus) activity in the Pachitariu data with activity in related cortical areas from the Allen Institute data.

Replicate [a specified subset] of the results reported in the Ahrens NATURE-2012 or ELIFE-2016 papers ... and so on ...

... and so forth. These are just suggestions. Before you can expand any one of them into a 300 word pre-proposal, you'll have to read (or at least scan) the relevant papers (listed in the calendar entries of the above-mentioned authors ... Andy Leifer participated in class last year and so look at his entry in the 2016 calendar that you'll find in the course archives) and check out the relevant supplementary materials (which you'll find on along with the paper on the journal website).

The final version of your proposal will be due one week (Tuesday, May 9) after the pre-proposal is due.

April 23, 2017

There's a new paper from Andrew Leifer's lab on his most recent work on recording from C. elegans in freely moving nematode worms. See Nguyen et al PLoS Computational Biology (in press) 2017 [118] (PDF) and accompanying data and software tools Nguyen et al IEEE Dataport [119] (HTML). If you're interested in C. elegans, this paper and its associated supplements are worth a look.

Sophie Aimon has provided two datasets using the recording technology described in her 2015 bioRxiv paper [4]. Keep in mind that this data is lower resolution than the best recordings we have for zebrafish. Nonetheless it probably represents the current state of the art in whole-fly functional imaging. My colleagues, Peter Li and Art Pope at Google, are looking at the data to determine if alignment with the seven-column-medulla structural data from FlyEM is possible.

April 21, 2017

Subject: "Frustration is a matter of expectation", Luis von Ahn on the the value of "I don't understand":

My phD advisor at Carnegie Mellon was Manuel Blum, who many people consider the father of cryptography. He's amazing and he's very funny. I learned a lot from him. When I met him, which was like 15 years ago, I guess he was in his 60's, but he always acted way older than he actually was. He just acted as if he forgot everything.I had to explain to him what I was working on, which at the time was CAPTCHA, these distorted characters that you have to type all over the Internet. It's very annoying. That was the thing I was working on and I had to explain it to him.

It was very funny, because usually I would start explaining something, and in the first sentence he would say, 'I don't understand what you're saying', and then I would try to find another way of saying it, and a whole hour would pass and I could not get past the first sentence. He would say, 'Well, the hour's over. Let's meet nest week.' This must have happened for months, and at some point I started thinking, 'I don't know why people think this guy's so smart.'

Later, I understood what he was doing. This is basically just an act. Essentially, I was being unclear about what I was saying, and I did not fully understand what I was trying to explain to him. He was just drilling deeper and deeper and deeper until I realized, every time, that there was actually something I didn't have clear in my mind. He really taught me to think deeply about things, and I think that's something I have not forgotten.

April 19, 2017

Five Suggested Topics for Final Class Projects in CS379C:

Topic #1: Dynamical System Modeling Nematode Caenorhabditis elegans:

Functional → Zimmer and Leifer Labs

Contacts: Saul Kato, Andrew Leifer

(see here [Kato, 2016], here [Leifer 2016] and here [Leifer 2017])

Structural → Worm Atlas Hermaphrodite Connectome

Contacts: Saul Kato, UCSF, Semon Rezchikov, MIT

Topic #2: Structural and Functional Alignment Drosophila melanogaster:

Functional → Greenspan Lab

Contacts: Sophie Aimon, UCSD

Structural → HHMI Janelia FlyEM Project

Contacts: Stephen Plaza, HHMI, Shinya Takemura, HHMI

The medulla forms hexagonal columnar arrays: one center column with six neighbors. One can think of these columns as parallel units in a receptive field within a retinotopic map if flies had a retina.

|

Biologically, adjacent columns when correlated together should uncover the motion detection circuit. Fly EM explored this circuitry in a previous reconstruction that is described in Takemura [171]. Our seven column dense reconstruction is larger and more comprehensive than the one in this paper and should offer new insights to this critically important circuit.

Furthermore, this circuit is the focus of much research including several theoretical models and general physiological studies. With this rich background, we may be able to produce reasonable hypotheses on the circuit dynamics from structural connectivity, which can then guide further focused experimentation.

Presumably, these adjacent columns will be very stereotyped and similar in number of neurons, synapses, etc. It provides an opportunity to study stereotypy, understand biological variability, and examine common motifs over similar columns of neuropil. This may also allow one to distinguish biological sources of variability from the variability due to the reconstruction process.

Shinya Takemura writes: "Below shows a medulla neuron Mi9 that stretches the entire medulla depth and has a cell body in the medulla distal surface. Our EM image stack spans these medulla cell body layers through the depth slightly deeper than the proximal edge of M10. I can search another cell type if it is useful to align it with the functional data. I can also provide multiple cells because we have reconstructed seven medulla columns. The cell body locations and the proximal edge of the medulla neuropil would be useful landmarks. The seven columns were imaged in the middle of retinotopic field, i.e. almost the middle of the medulla in both dorso-ventral and antero-posterior axes":

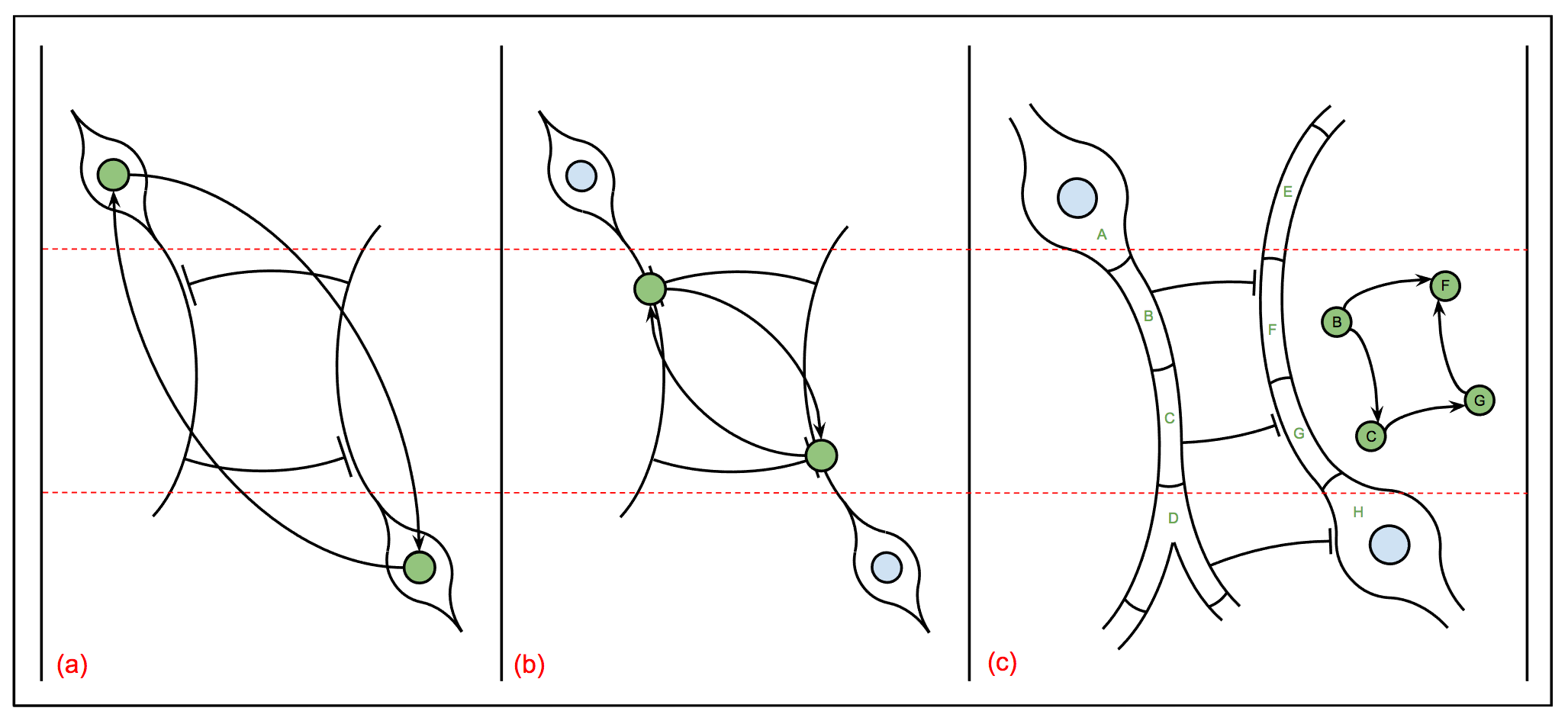

|

Functional imaging the brain using light field microscopy [4]. a) Experimental set up: The fly is head fixed and its tarsi (legs) are touching a ball. The light from the brain goes through the objective, the microscope tube lens, a microlens array, and relay lenses, onto the sensor of a high-speed sCMOS camera. The behavior is recorded with another camera in front of the fly. b) Example of a light field deconvolution. Top: 2D light field image acquired in 5 ms exposure with a 20 x objective. Bottom: Anterior and posterior views (slightly tilted sideways) of the computationally reconstructed volume. 3D bar is 90 x 30 x 30 µm. (SOURCE)

|

Topic: #3: Functional Modeling of Whole Zebrafish Brain Danio rerio:

Functional → Ahrens Lab

Contacts: Misha Ahrens, HHMI

Topic: #4: Functional Modeling of Mouse Visual Cortex Mus musculus:

Functional → Harris and Carandini Lab

Contact: Marius Pachitariu, UCL

Functional → Allen Institute Project MindScope

Contact: Michael Buice, AIBS

Topic: #5: Atari Game Console and 6502 Processor Computatus motorola:

Functional → 6502 Emulation

Structural → Virtual 6502

Contacts: Eric Jonas, UCB (see here)

April 17, 2017

There seems to be some confusion regarding the different types of microscopy discussed in the readings and used in collecting the datasets. Here's a quick comparison of the different technologies we'll be reading about that are employed for collecting functional data and you can find a concise compilation that covers a larger collection of technologies here:

Bright-Field Microscopy is the simplest of all the optical microscopy illumination techniques. Sample illumination is transmitted (i.e., illuminated from below and observed from above) white light, and contrast in the sample is caused by attenuation of the transmitted light in dense areas of the sample. SOURCE

Light-Field Microscopy captures information about the intensity of light in a scene, and also the direction that the light rays are traveling in space, in contrast with conventional (bright-field) microscopes, that record only light intensity. [135, 134]. SOURCE

Light-Sheet Microscopy employs a cylindrical lens to focus light—used to excite fluorescent proteins—on a sheet of light that illuminates only the focal plane of the detection optics that are responsible for recording the reflected light emanating from the illuminated planar region of the target tissue6. SOURCE

Resonant-Scanning Microscopy relies on laser positioning in the x-axis provided by a special resonant scan mirror that oscillates at a fixed frequency of 5-10kHz, thus enabling very fast scanning of high resolution full field frames (512 x 512 pixel) with frame rates of up to 30 fps. The microscope itself can employ a standard optical or confocal lens.

So, for example, Ahrens et al [3] use light-sheet microscopy whereas Aimon et al [4] use light-field microscopy. Pachitariu et al [126] use an off-the-shelf resonant-scanning microscope but employ a novel pipeline for post-processing the raw image data7. Saul Kato and other researchers in Manuel Zimmer's lab use light-field microscopy and related imaging technology developed in Alipasha Vaziri's lab [135, 136, 134, 149].

The microlens arrays used in commercial light-field cameras such as those manufactured by Lytro mimic the structure of the insect compound eye such as the multi-faceted arthropod eye of the fly. However, light-field cameras also capture the direction of the light rays and hence collect more information than insect eyes. See Song et al [162] for the description of an engineered lens system that uses an array of 180 artificial ommatidia to achieve a 160-degree field of view.

April 15, 2017

Here is the first installment of sample projects that make use of the datasets mentioned in the April 11 entry in this log:

Aligning Functional and Structural Connectomic Data: (a) Identify the location of the seven columns in the Drosophila medulla accounted for in the dataset from Janelia [171]. (b) Align the whole-brain functional data [4] from Ralph Greenspan's lab with the columns of the Janelia data. (c) Use the aligned data to generate a times series of transmission-response graphs. (c) Apply graph-theoretical and topological algorithms to analyze the resulting time series.

Commentary: This is probably the closest we can come to creating an aligned functional and structural dataset at this time. When Janelia and Neuromancer complete the whole-fly connectome, the capability addressed in this project will realize its full potential. The project is challenging enough that it will require a small team of students to take on the alignment part. Fortunately, we will have help from two labs. Sophie has provided an initial sample of data and promises to collect more if we come up with some interesting results. Stephen Plaza and Shinya Takemura are helping to identify the location, shape and orientation of the sample tissue they used for the seven-column data.

As an incentive, Olaf Sporns, the editor of Network Neuroscience, has asked me to submit a manuscript describing the work I presented at the Keystone Symposium on Molecular and Cellular Biology in March, and, if successful in aligning the functional and structral data, I will be happy to add the members of the successful team to the list of co-authors on this paper.

Challenges: (i) The two datasets were collected from different phenotypes. The good news is that the fly optic lobe exhibits a good deal of stereotypy across phenotypes and is not known to exhibit plasticity, unlike the olfactory bulb and related mushroom bodies. The medulla is highly regular and so we hope to construct a pattern of points corresponding to the locations of the cell bodies in the seven-column connectome graph embedding, and then search for correspondences within the functional point cloud. (ii) The EM (structural) image data has voxels of size on the order of 10nm on a side, while the 2PE (functional) data has approximately 2μm resolution. The good news is we are trying to match fluorescent emissions from cell-body nuclei with known locations of specific neuron types in the seven-column data.

Applications of Algebraic Topology in Neuroscience: This is really a constellation of possible projects centered around the use of tools from algebraic topology to analyze structural and functional datasets. Here are a few representative papers organized roughly by topic: (i) introductory [33, 128], (ii) structural [37, 158, 56, 131], (iii) functional [28, 34, 32], and (iv) morphological [99], and (v) circuit motifs [74, 96]. Think about starting with a literature search if you pursue any of these alternatives.

Challenges: The math can be somewhat daunting if you don't have the necessary background in algebraic topology. However, the tools are simple to use and the results relatively easy to interpret. Look at Pawel Dlotko's calendar entry from last year for an introduction to simplicial complexes and persistent homology. You might also look at the Python package called NeuroBot that I wrote using his simplicial-complex library—called NeuroTop—to analyze the seven-column dataset.

April 13, 2017

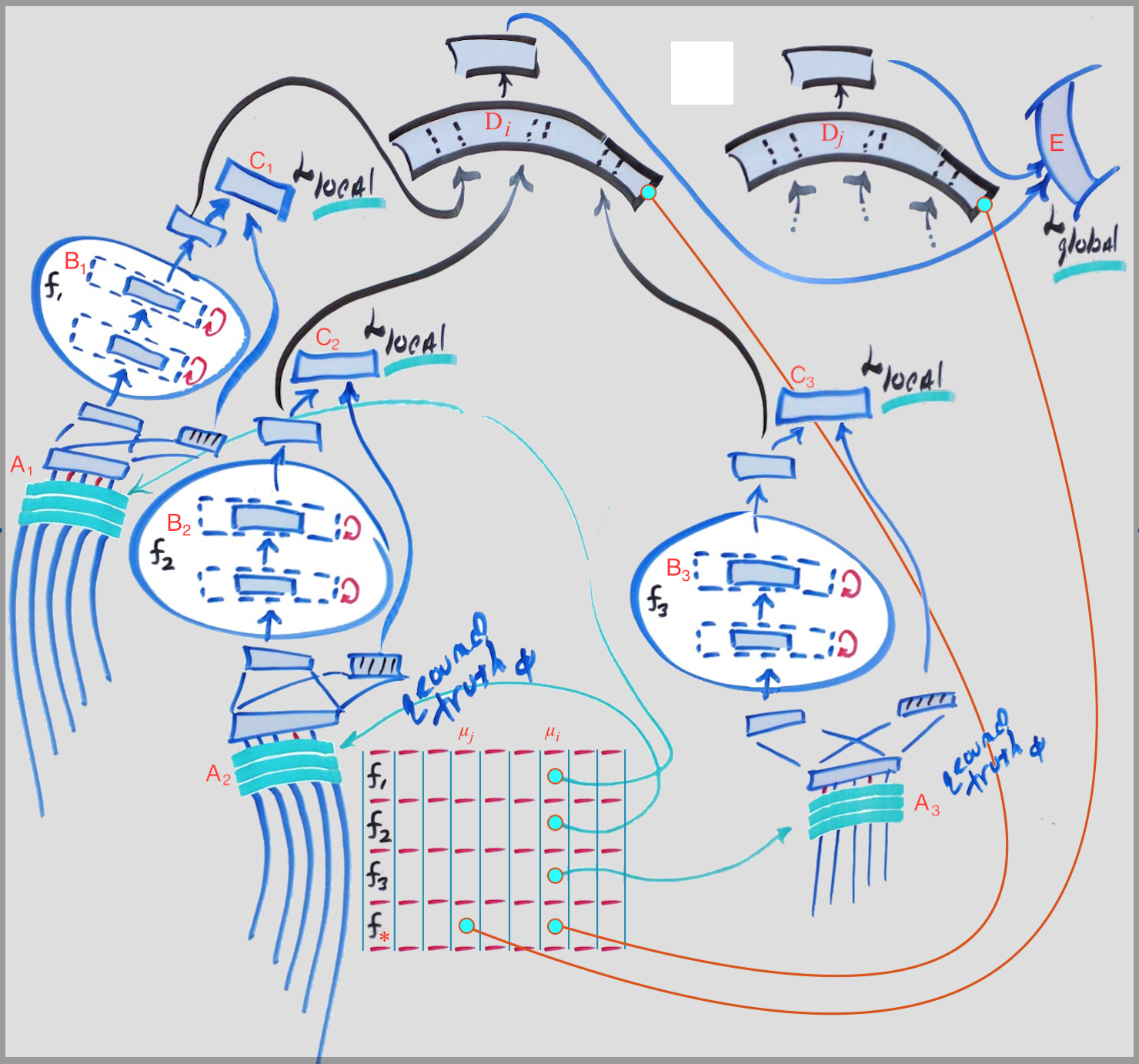

Notes from Adam Marblestone's presentation on April 13 [...] motivated by Greg Wayne's observations concerning our [105] paper in Science [...] the role of loss functions in shaping artificial and natural neural-network representations [...] interesting to think about how sparse coding plus natural image reconstruction autoencoders fit into the overall picture [...] L1 —least absolute deviations (LAB) and L2 —least squared error (LAE)—norms used as loss functions versus regularization term [...] both representation and coding as inherently distributed and time varying (think of indefinitely prolonged development) [...]

[...] learning by twiddling coefficients using differences to guide search, i.e., node perturbation [...] the Francis Crick quote damming back propagation as a biologically implausible learning mechanism [...] weight transport [...] solved by having completely bidirectional weight matrices [...] result using random matrices (HTML) [...] Blake Richards [...] combined with random feedback weights solves the problem of weight transport and explains the morphology of pyramidal neurons [...] Walter Senn [...] Geoff Hinton and Yoshua Bengio [...] context encoders aren't supervised [...] what can't you do with back-prop and reconstruction loss [...]

[...] Issa, Cadieu and DiCarlo [80] (HTML) provide evidence that the ventral stream computes errors [...] computes a perceptual signal, takes feedback to compute a local loss reflecting its "change of mind" [...] Elias Issa et al synthesis / synthetic gradient propagation [...] Shimon Ullman's internally-generated bootstrap cost functions [...] faces are often looking at hands [...] embedding spaces, skipgram models and Adam's learning-from-context example in image understanding [...] prediction as a general cost function [...] acetylcholine in cortex (glial pathway) versus dopamine in the basal ganglia [...] hippocampus as a three layer cortex but optimized for very specialized computations [...]

[...] attractors in thalamocortical recurrent loops [...] difference between short (quasi-stable encodings, depending on sustained reentrant patterns of activation) and longer term (consolidated encodings) in terms of the mechanisms involved in initiating memory formation, maintaining the necessary state information in a quasi-stable form and then consolidating the nascent engram into a more-or-less stable (at least long-term and perhaps more energy efficient) representation that is superficially reminiscent of the difference between dynamic (needing periodic refresh) and static (not needing static refresh) RAM.

P.S. In email to me, Konrad Kording and Greg Wayne, Adam wondered if this paper [55] might explain where cost functions for the different cortical areas live:

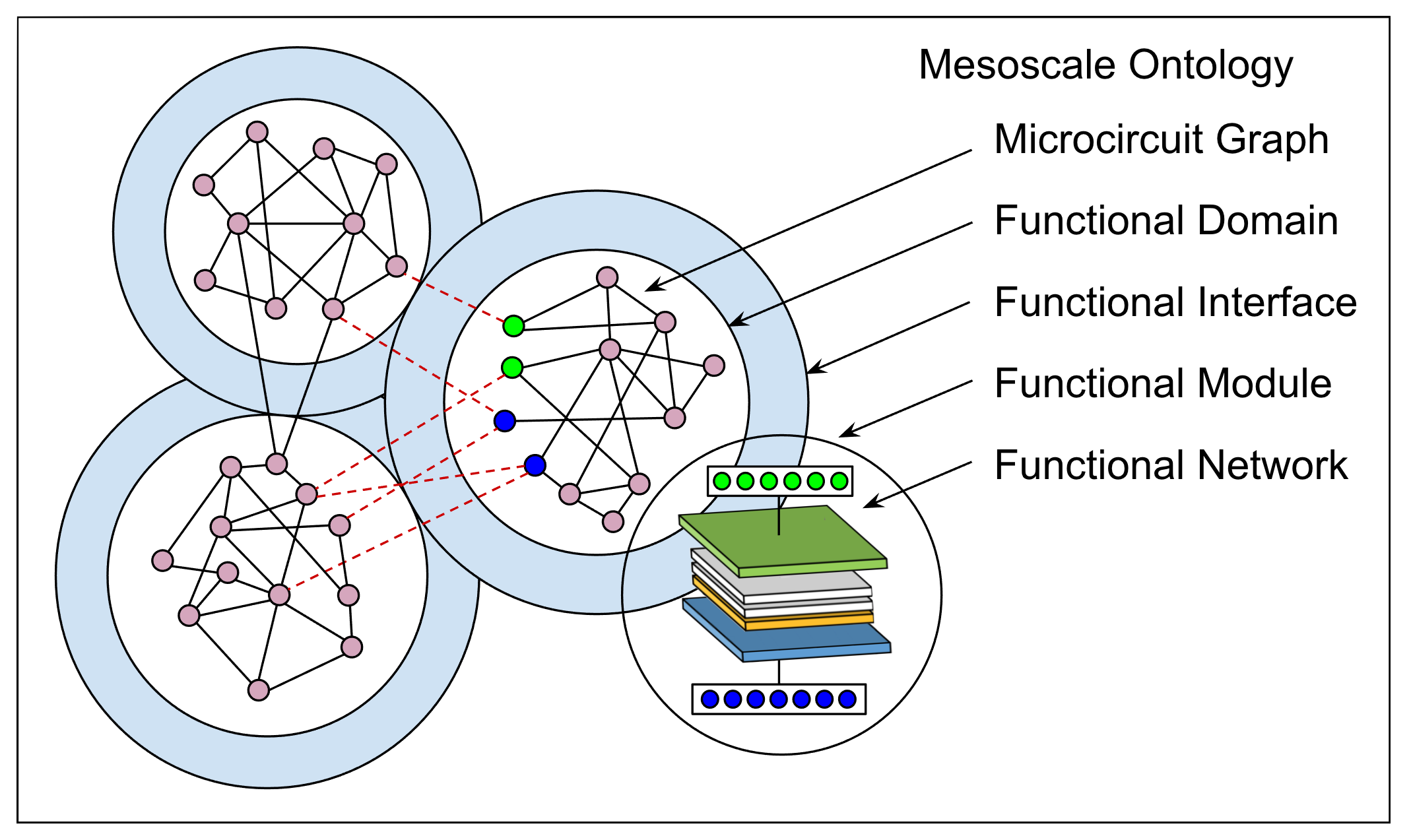

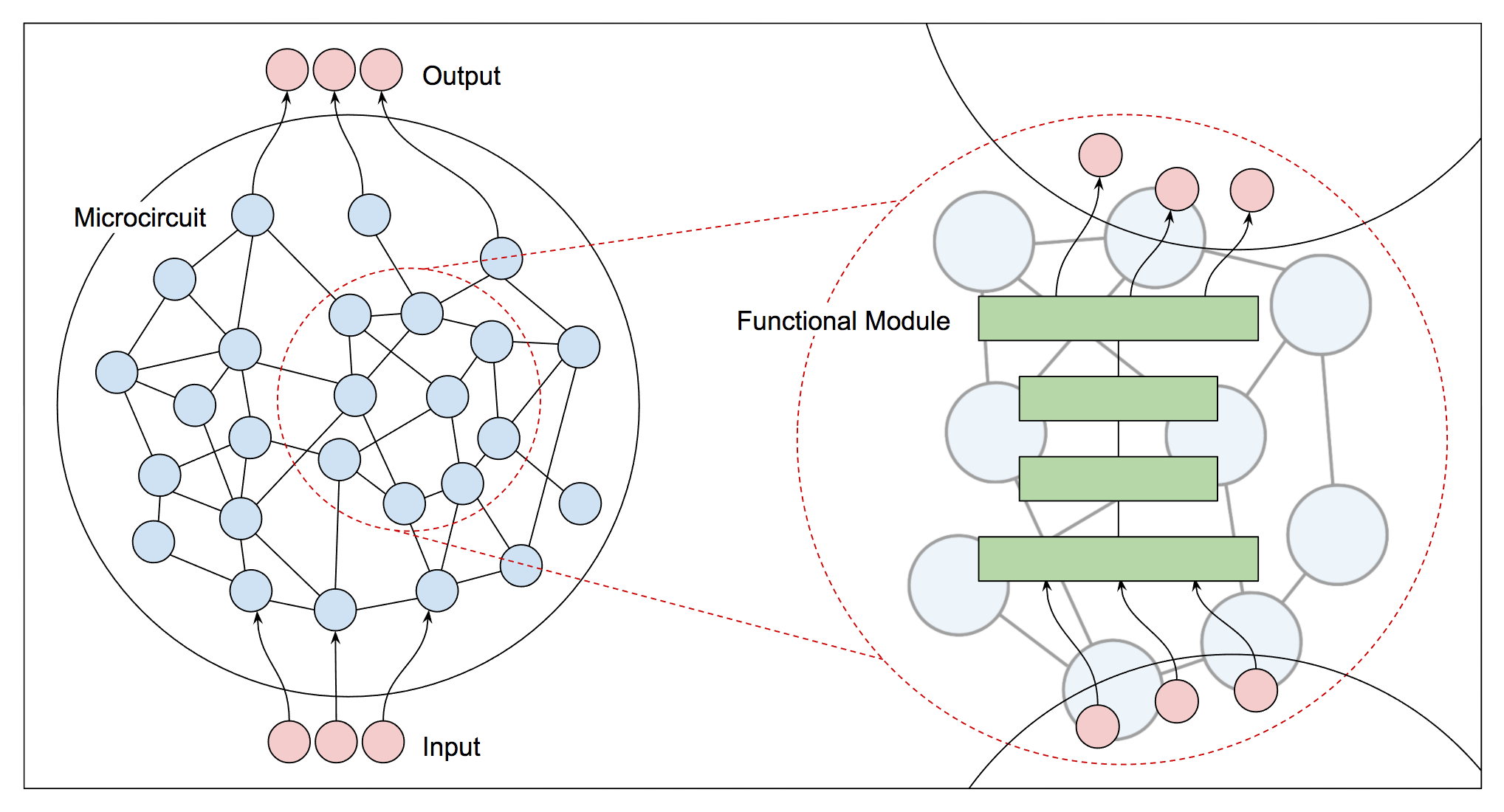

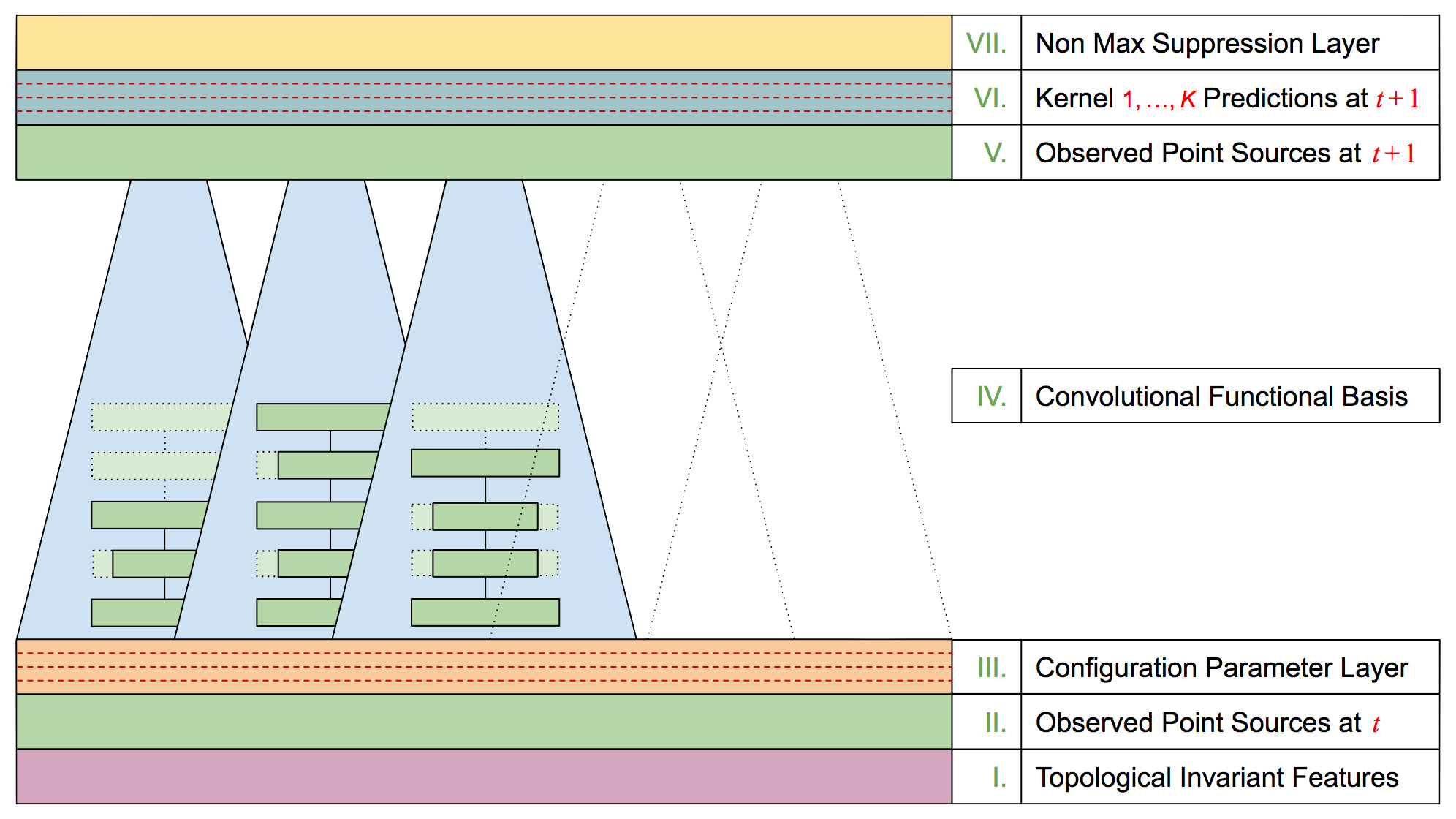

|

Basal forebrain cholinergic neurons influence cortical state, plasticity, learning, and attention. They collectively innervate the entire cerebral cortex, differentially controlling acetylcholine efflux across different cortical areas and timescales. Such control might be achieved by differential inputs driving separable cholinergic outputs, although no input-output relationship on a brain-wide level has ever been demonstrated. Here, we identify input neurons to cholinergic cells projecting to specific cortical regions by infecting cholinergic axon terminals with a monosynaptically restricted viral tracer. This approach revealed several circuit motifs, such as central amygdala neurons synapsing onto basolateral amygdala-projecting cholinergic neurons or strong somatosensory cortical input to motor cortex-projecting cholinergic neurons. The presence of input cells in the parasympathetic midbrain nuclei contacting frontally projecting cholinergic neurons suggest that the network regulating the inner eye muscles are additionally regulating cortical state via acetylcholine efflux. This dataset enables future circuit-level experiments to identify drivers of known cortical cholinergic functions. [SOURCE]

April 11, 2017

This class is all about scaling computational neuroscience to work with large datasets. Here are some of the datasets that you may use for course projects. As with all of the data made available for projects relating to this class, the data was shared with the understanding that it is to used for your sole use in this class, it cannot be shared with anyone outside the class, and, if you have aspirations to publish a paper or present a poster referring to any use of this data you must first obtain the consent of the owner, who is generally the director of the lab that produced the data in the first place. Here is an introduction to the datasets:

Fruit Fly (Drosophila melanogaster): Stephen Plaza [HHMI Janelia] FlyEM Project — partial connectome spanning seven columns in the fly medulla (structural) → See here for the FlyEM Wiki page plus access to the connectomic dataset, relevant papers and useful tools. Projects combining this dataset and the next are encouraged.

Fruit Fly (Drosophila melanogaster): Stephen Plaza [HHMI Janelia] FlyEM Project — partial connectome of the Drosophla mushroom body (structural) → This connectome is likely to exhibit circuit motifs different from those found in the seven-column dataset and therefore jointly classifying motifs in both data sets could yield interesting results.

Fruit Fly (Drosophila melanogaster): Sophie Aimon [University of California San Diego] Greenspan Lab — two-photon light-sheet whole-organism 2 μm resolution (functional) → See Sophie's entry in the class calendar for references.

Zebrafish (Danio rerio): Misha Ahrens [HHMI Janelia Campus] Ahrens Lab — two-photon whole organism (functional) → See Misha's entry in the class calendar for references.

House Mouse (Mus musculus): Michael Buice [Allen Institute for Brain Science] (functional) Project MindScope — two-photon selected regions of the mouse visual cortex (functional) → Start here with the AIBS Brain Observatory Data Portal.

House Mouse (Mus musculus): Marius Pachitariu [University College London] Carandini and Harris Lab — two-photon selected regions of the mouse visual cortex (functional) → See Marius Pachitariu's calendar entry for data, relevant papers and provenance. The format of the data is specified in the footnote at the end of this sentence8.

Nematode (Caenorhabditis elegans): Andrew Leifer [Princeton University] Leifer Lab — two-photon whole organism (functional) → See Andy Leifer's calendar entry from last year that, in addition to his talk and slides, provides a link to the C. elegans data from his Cell paper provided by the head of his lab and co-author Manuel Zimmer.

Nematode (Caenorhabditis elegans): Manuel Zimmer [Research Institute for Molecular Pathology] Zimmer Lab — two-photon whole organism (functional) → See Saul Kato's calendar entry from last year that, in addition to his talk and slides, provides a link to the C. elegans data from his Cell paper provided by the head of his lab and co-author Manuel Zimmer.

Nematode (Caenorhabditis elegans): Worm Atlas [Albert Einstein College of Medicine] Multiple Labs — whole-organism connectome (structural) → The Worm Atlas provides a comprehensve source of information relating to the C. elegans connectome—including hermaphrodite, male and dauer larval stage nematodes— along with a wealth of behavioral, morphological and anatomical metadata.

Atari Game Console and Motorola 6502 Processor (Computatus motorola): In Jonas and Kording [86], Eric Jonas and Konrad Kording post the question "Could a neuroscientist understand a microprocessor?" In their analysis, they use EM imagery of chips as structural data for computing connectomes, and emulators as synthetic organisms to perform experiments and record functional data—see Virtual 65029. See also Eric's calendar entry from last year.

April 9, 2017

Here are examples of computational advances resulting from the study of relatively simple organisms, including the common fruit fly (Drosophila melanogaster, house mouse (Mus musculus) and larval zebrafish (Danio rerio) that have or likely will have an impact in developing algorithms that further the state of the art in artificial intelligence:

relating to sensation and sensory processing, including the mouse vibrissal touch in the barrel cortex [132], the visual and olfactory systems of the fly and zebrafish including the extraordinary multifaceted eyes of the fly and its associated highly conserved and stereotyped visual system [171, 166], the original motivation for and subsequent development of hierarchical predictive coding algorithms for object recognition in natural images [164, 70, 43, 44, 138];

relating to highly parallel algorithms, including systems performing the algorithmic analog of locality sensitive hashing that have yielded improved algorithms for performing nearest-neighbor search, models of Drosophila glomeruli and Kenyon cells that implement artificial olfaction [142, 45, 146], the ring attractor circuit in the fly central complex implementing proprioceptive sensing crucial in orientation [177], and associative memory in the mushroom bodies that perform robust multi-sensory learning that serve in guiding avoidance, aggression and mating behaviors [5];

Unlike more general insights that have their origins in the cognitive and behavioral neurosciences, e.g., reinforcement learning, Hebbian learning, associative memory, the examples above provide specific algorithmic insights of a sort we have barely begun to mine from our study of functional connectomics. I expect the microscale architecture of the brains of diverse organisms and their functional analysis will yield a windfall of engineering insights that will be translated into hardware and software for solving very different problems than those originally solved by natural selection.

Moreover the sort of architectures we are proposing for inferring such knowledge from data are themselves relevant to artificial intelligence insofar as these architectures enable us to infer models of complex dynamical systems, including new classes of artificial neural networks that extend the possibilities of current DNN's and DQN's that either optimize or synthesize new neural network architectures [188, 94]. By the way, Geoff Hinton had a huge influence on the development of predictive coding and hierarchical and Bayesian extensions.

I emphasize Drosophila in large part due to our collaboration with Janelia and the fact that Neuromancer working with HHMI is very likely to have the complete connectome by the end of this year if not sooner. The Zebrafish is attractive for different but similarly compelling reasons. It is a vertebrate, with a close homolog of the basal ganglia which is central to our understanding of reinforcement-based learning. DeepMind and Google Brain are substantially invested in temporal difference reinforcement learning, how intrinsic reinforcement signals are incorporated into the framework, how to make it more efficient, and so forth. DeepMind explicitly mentions the striatal inspiration in their work—see the above-linked review in Current Biology and the paper that it refers to [165] for background on the relationship between the striatum and basal ganglia.

The Zebrafish is the simplest, most accessible system in which we can comprehensively measure and model reinforcement learning in a close analog of the mammalian striatum and basal ganglia. It can learn complex sensorimotor tasks by reinforcement, using systems analogous to mammals, and yet is a preparation in which this entire process can be comprehensively studied. The overall expediency of the system is key: The functional imaging is simply better than in anything else, even C. elegans, and it is of a sufficiently small size where we can readily imagine getting a complete connectome for a functionally imaged organism—indeed I'm already talking with two teams, Seung's lab at Princeton and Lichtman's at Harvard to do just that. If we want to understand how reinforcement learning actually works in biology, this system is perfect.

Caveats: As far as I can tell, we don't truly have good models either of the dopamine signals themselves or of how they shape the basal ganglia's action selection policy. Or mechanistically of how action selection works. As far as I know, there are a few BG action selection models like the direct / indirect pathway model [64, 65] and work by Gurney, Prescott and Redgrave [173, 66], but little is really known about how exactly this works in reality. Recent papers showed spatial clustering of striatal medium spiny neuron responses, for example—what is the significance of this? How does the BG manage the huge convergence of inputs from all around the brain and reduce it into a small set of output dis-inhibitory actions. Then, exactly what influence does this output have on the thalamus [114]—also see here and here. A similar state of affairs probably holds for basal forebrain cholinergic systems, which at least in mammals have a reinforcement signaling role. [Thanks to Adam Marblestone for his contributions to the above. The example pertaining to the basal ganglia is largely due to Adam.]

April 7, 2017

Excerpts from Daniel Dennett's Bacteria to Bach and Back: The Evolution of Minds [35] prompted by a discussion with Robert Burton:

Words are the lifeblood of cultural evolution. (Or should we say that language is the backbone of cultural evolution or that words are the DNA of cultural evolution? These biological metaphors, and others, are hard to resist, and as long as we recognize that they must be carefully stripped of spurious implications, they have valuable roles to play.) Words certainly play a central and inelliminable role in our explosive cultural evolution, and inquiring into the evolution of words will serve as a feasible entry into daunting questions about cultural evolution and its role in shaping our minds. […]In both cases — it is possible that life, and language, arose several times or even many times, but if so, those extra beginnings have been extinguished without leaving any traces we can detect. […] Dawkins (2004, pp. 39-55) points out that, in many languages, tree diagrams showing the lineages of individual genes are more reliably and formatively traced than the traditional tree showing the dissent of species, because of "horizontal gene transfer"—instances in which genes jump from one species or lineage to another. […]

Sometimes, failure to find the word we are groping for hangs us up, prevents us from thinking through to the solution of a problem, but other times we can wordlessly make do with unclothed meanings, ideas that don't have to be in English or French or any other language. [Jackendoff 2002, especially chapter 7, "implications for processing," is a valuable introduction.] Might wordless thought be like barefoot waterskiing, dependent on gear you can kick off once you get going? […] An interesting question: could we do this even if we didn't have the neural systems, the "mental discipline," trained up in the course of learning our mother tongue? […]

The idea that languages evolve, that words today are the descendants in some fashion of words in the past, is actually older than Darwin's theory of evolution of species. Text of Homer's Iliad and the Odyssey for example were known to descend by copying from text descended from text descended from text going back to their oral ancestors in the Homeric times.

Commentary:

The evolution of language and especially the language of scientific disciplines, is subject to more rigorous, less forgiving interpretation / selection pressure, for example, by "correcting" contextually inappropriate inferences drawn from analogies that have otherwise proved to be useful in understanding complex phenomena. Induced mutation in the form of variable substitution and the inclination to "fill in" a missing term in an analogy is an exercise that can yield new insights and extensions to a theory, but can also demonstrate the limitations of a given concept or analogy, by inviting increased scrutiny that ultimately undermines the value of an analogical framing of a concept or theory altogether. It remains to see whether the DNA analogy illuminates or distorts our understanding of language and linguistic variation.For some reason, the concept that came immediately to mind when I read this excerpt had to do with a meme introduced into computer science and its relevance to document search, namely "the long tail" of a distribution where lie the obscure queries and their preferred less commonly known content of all stripes that distinguishes a search engine like Google from those with less coverage that fail on queries in the long tail. As an undergraduate math major, I was fascinated with long tails in a careless way, not really distinguishing between density plots where the area under the curve is equal to 1, and plots of arbitrary positive-valued functions where the area under the curve might not even be bounded.

Sequences that converge to zero but that the residues — the sum of terms to the right of any given term — are always infinite, e.g., the harmonic series: 1 + 1/2 + 1/3 + 1/4 + … were especially interesting. Learning about them provided my introduction to the "plug and replay" approach to doing science10. Given the concept of a "series whose terms converge to zero but whose residues sum to finite numbers" leads inexorably to the question of what happens when you substitute "sum" with "do not sum". So much of mathematics and physics arise out of making such substitutions and working through the consequences. Of course, you’d need the notion of infinite sums and, eventually, the notion of transfinite numbers, but, as Cantor discovered, that sort of thinking can lead to madness.

April 5, 2017

Here is the somewhat long-winded note I sent to selected contacts in several of our collaborating academic and privately-funded research institutes asking them for examples of research on functional connectomics potentially leading to results of interest to Google. The length can be explained in part due to my remarks reassuring them that such a project would be open in terms of sharing the data and tools we generate in our collaborations with external labs:

If Google builds a team to conduct research on functional connectomics, it will do so for two reasons, (a) the particular focus of the research demands the scale of computational resources that only Google and a handful of other corporations can possibly muster, and (b) that Google believes the anticipated products of this research will be beneficial to the scientific enterprise and potentially to human health and welfare.

If it does build such a team, then it is likely that a good portion of the effort would be directed at building tools useful to the scientific community and that these tools would be made available without cost. Moreover Google would very likely partner with a number of academic labs and privately funded research institutes, such as the Allen Institute for Brain Science, the Howard Hughes Medical Institute, and the Max Planck Institute, to name a few with which we have had fruitful collaborations in the last few years.

If past is prologue, then Google will advocate that the fruits of these collaborations, in terms of research products such as high-volume recordings of neural activity and their analyses, will also be made available to the scientific community, and we will negotiate the precise conditions under which such data will become available, working with our partners to accommodate their self interests in terms of academic priority in publishing.

The above three paragraphs are intended to make clear both the conditions under which Google would proceed in developing such an effort, and its expectations in terms of collaborating with diverse teams expert in the underlying neurobiology. It would not likely replicate the expertise within the community, and the existence of such expertise and willingness of those possessing such expertise is a precondition for our embarking on an effort to accelerate this area of science.

For efforts that require a substantial outlay of capital to provide the necessary computing resources and pay the engineers that would develop the underlying technology, Google would very likely add an additional condition that the knowledge gained would provide some benefit to Google in terms of new technologies that might ultimately find their way into products. This has been the case for project Neuromancer and will likely be the case for any reasonably well staffed project that requires more than a few quarters to complete.

Having set the stage, I'll now outline what I need from you to help me make the case for starting a project in functional connectomics, complementing our existing project focusing on structural connectomics:

A list of noteworthy fundamental scientific results that such a project might reasonably generate in collaboration with its partners. It would be more compelling if that list also includes a description of the enabling technologies required to achieve said results and an estimate of the time required to develop said technology.

In the case of studying the brain, it is natural for Google to be interested in the prospects for the knowledge gleaned from such studies to further the development of technologies that are important in improving our products and services, and in particular those that pertain to image understanding, robotics, natural language understanding, artificial intelligence and large-scale computing and networking.

If the anticipated fruits of the scientific effort enabled and accelerated by Google’s involvement are unlikely to lead to the development of such useful technologies, that outcome would likely undermine Google's interest in making such an investment. This does not reflect Google's disinterest in the scientific enterprise, but rather the line between Google's responsibilities to its investors and the economic well-being of the company, and its aspirations vis-à-vis corporate philanthropy and on-going efforts to contribute to the health and welfare of its employees, customers and society in general.

April 3, 2017

What is a scientific theory and what use is it?

Consider, Ptolemy's "geocentric theory" with the earth as the center of the universe and his "epicycles" that were required to make the theory fit the data, Aristotle's "geocentric celestial spheres" sustained the geocentric conceit until the 17th century, Copernicus and his much maligned "heliocentric theory" with the sun as the center of universe, and Galileo who was tried and found guilty of heresy for his belief that the "sun" was the center, thereby disagreeing with the church's self-serving interpretation of the bible which already had multiple layers of interpretation. Galileo was an instrument builder, data driven experimentalist and, for his time, mathematically sophisticated theorist; he substantially improved the best telescopes of the time by grinding his own lenses and carefully tracked the position of the planets in the night sky to support his theory. Newton changed the way astronomers did science. He invented the first practical reflecting telescope, replacing the refractory telescope with the reflector telescope for all but the smallest instruments, he was incredibly careful in making his observations, and was, for all intents and purposes, the first [human] computer to solve differential equations in order to fit the data, concluding that the planets followed elliptical orbits around the sun. It is difficult for us to comprehend the degree to which he accelerated the advance of science and influenced the way we conduct science today.

Not all self-proclaimed scientists pursue their scientific interest as methodically as Newton. In some disciplines, data is hard to come by, in others it is difficult to conceive of how to build mathematical models of the sort Newton championed, and, in still others, what is accepted as a theory is more like a parable or fictionalized account of the observed phenomena. Not all phenomena yield to the methodology of science as Maxwell, Rutherford, Einstein, Crick and Watson, Hodgkin and Huxley etc would recognize it. What is a "good" model or theory? To begin with it should be "usefully" explanatory and predictive, not a "just so" story: "The Leopard used to live on the sandy-colored High Veldt. He too was sandy-colored, and so was hard for prey animals like Giraffe and Zebra to see when he lay in wait for them. The Ethiopian lived there too and was similarly colored. He, with his bow and arrows, used to hunt with the Leopard. [...] Then the prey animals left the High Veldt to live in a forest and grew blotches, stripes and other forms of camouflage." [...] "So the Ethiopian changed his skin to black, and marked the Leopard’s coat with his bunched black fingertips. Then they too could hide. They lived happily ever after, and will never change their coloring again." — How the Leopard got His Spots by Rudyard Kipling. Scientists working in evolutionary biology are often accused of generating "just so" stories, but many theories start out that way.

How do the best theories stand up to scrutiny?

A. Newtonian celestial mechanics: [POSITIVES] accurate prediction of planetary motion, mathematically elegant — Dirac's "the unreasonable effectiveness of mathematics", broad application — no need for a separate theory of terrestrial motion, or a separate method for estimating the orbits of asteroids, comets or any other macroscale objects; no prime mover — apparently this didn't upset Newton's religious views as he simply pushed the problem back another step and had God [of the old testament variety] create gravity; [NEGATIVES] invokes spooky action at a distance, doesn't accord with the general theory of relativity (Einstein), doesn't predict space-time curvature (Minkowski) and how massive bodies can deflect even light, and doesn't account for quantum effects — but then neither does Einstein's theory.

Ptolemy and Aristotle gave us the "geocentric theory", "celestial spheres" and "the unchanging celestial realm". The Catholic church took their word as God's; Why? Copernicus was derided for his "heliocentric theory", but luckily he as ignored by the Vatican. Tycho Brahe discovered "super novae", demonstrated that stars come and go and discredited the "unchanging celestial realm" theory. Kepler offered his "three laws of planetary motion". Galileo improved the refractor telescope, showed us how to collect good data and perform experiments to test hypotheses and then ran afoul of the Inquisition, barely escaping with a life in exile. Newton built one of first reflector telescopes in an instance of parallel invention and then vastly improved its design winning him "early admission" into Royal Society, then, as an afterthought, invented the calculus, predicted the elliptical orbits of planets, and spent a few years breathing toxic fumes while playing at alchemy.

Some of these theories seem ludicrous to us today but all of them are false, some more than others, some egregiously so. In fact according so a study "Most published research findings are false" Annual Review Statistics and its Applications. 2017, according to a 2015 paper appearing in Science "Fewer than half of 100 studies published in 2008 in three top psychology journals could be replicated successfully, and then in 2015 we read "Biomedical Science Studies Are Shockingly Hard to Reproduce". Who here popped vitamin C like candy in the 60's, stopped consuming fat in the 80's or eliminated carbohydrates entirely from their diet and ate only red meat in the oughts.

"The best [summary description of natural selection], for simplicity, generality, and clarity is probably [that of] philosopher of biology Peter Godfrey-Smith: Evolution by natural selection is change in a population due to (i) variation in the characteristics of members of the population, (ii) which causes different rates of reproduction, and (iii) which is heritable. Whenever all three factors are present, evolution by natural selection is the inevitable result, whether the population is organisms, viruses, computer programs, words, or some other variety of things that generate copies of themselves one way or another. We can put it anachronistically by saying that Darwin discovered the fundamental algorithm of evolution by natural selection, an abstract structure that can be implemented or "realized" in different materials or media." — From Bacteria to Bach and Back: The Evolution of Minds by Daniel Dennett.

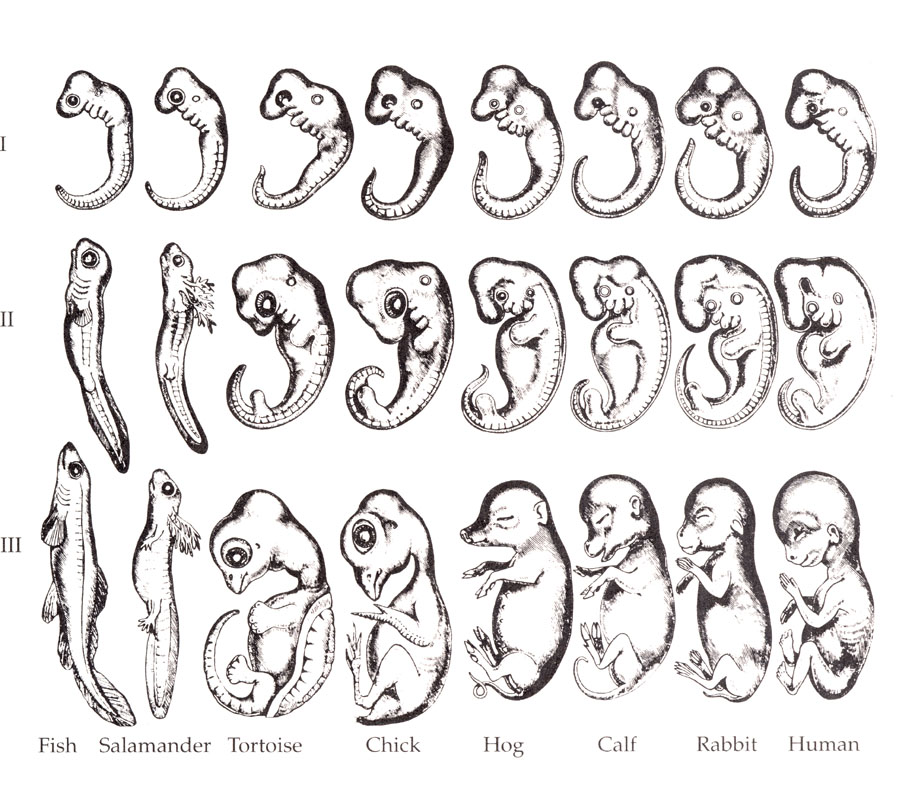

B. Darwinian natural selection: in a nutshell, if there is a source of variation in the traits of organisms, and these traits differentially impact reproduction, and there is a mechanism whereby organisms can pass on traits to their offspring, then natural selection will prefer species that have more offspring: no explanation of a mechanism for how traits are passed on from one generation to the next — this will have to wait for the rediscovery of Mendel's work by Bateson and others and the work of Crick and Watson and their colleagues in determining the molecular structure of DNA and the role of genes in building bodies; no explanation of how variation arises naturally and how it can alter reproductive success — this will have to wait for the discovery of mutations and early demonstrations that even point mutations can have a devastating impact on the ability of an organism to pass on traits and introduce new traits that confer a selection advantage or disadvantage; to his credit Darwin did understand that the process of natural selection could take a long time to to produce new species.

As an example of a theory run amok, "Ontogeny recapitulates phylogeny" is a catchy phrase coined by Ernst Haeckel, a 19th century German biologist and philosopher to mean that the development of an organism (ontogeny) expresses all the intermediate forms of its ancestors throughout evolution (phylogeny). Haeckel's theory was large discredited but surfaces from time to time just like the face of Jesus turns up regularly on tortillas, cloud formations and slices of burnt toast.

|

We won't be fooled again! — The Who

If you believe astrophysicists who write books or produce documentaries like Sean Carroll and Neal deGrasse Tyson, then you probably believe that [physicists] know all the fundamental particles and associated forces that can interact with the human body or influence human destiny in any way ... that's ANY way and not just any DISCERNIBLE way. There are particles and forces that we don't understand and possibly some that we don't know even about, e.g., beyond gravitons and Higgs bosons, but they don't interact with us, nor do they operate on spatial or temporal scales that could make a difference in our lives or those of our offspring. — Carroll says that if paranormal powers were possible scientists would have detected them, and suggests quite reasonably that if God [of the old testament variety] exists we would have detected him and since God doesn't register on any of our sensitive instruments then he can't have any impact on our lives. I think Carroll is right — Cartesian dualism is dead, but he is quick to point out that his claims are merely hypotheses albeit hypotheses that are almost overwhelmingly supported by the data. We could be wrong. We could be fooled again. It's just not very likely.

Just prior to the beginning of the 20th century, "There is nothing new to be discovered in physics now. All that remains is more and more precise measurement." — this quote which is often misattributed to William Thomson, Lord Kelvin, is more likely a paraphrase of Albert A. Michelson — of Michelson-Morley fame — who in 1894 stated: "[...] it seems probable that most of the grand underlying principles have been firmly established [...] An eminent physicist remarked that the future truths of physical science are to be looked for in the sixth place of decimals." An interesting combination of hubris and pandering to authority. It used to be that it wasn't true unless Socrates said so, and, conveniently, we don't know what Socrates said because he never wrote anything down. He left the scribbling to his protege Plato who apparently took it upon himself to write down everything that Socrates did say or might have said. A sure recipe for some creative writing.

My friend Mario Galarreta [50, 49, 48, 46, 47] is fond of saying [or showing with a Venn Diagram] that if all that WE KNOW is in a small box A, then the box B containing A and all the things WE KNOW THAT WE DON'T KNOW is substantially larger, and the box containing A, B and all the things WE DON'T KNOW THAT WE DON'T KNOW is much larger than either A or B. Perhaps some of you remember Donald Rumsfeld. "Reports that say that something hasn't happened are always interesting to me, because as we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns — the ones we don't know we don't know. And if one looks throughout the history of our country and other free countries, it is the latter category that tend to be the difficult ones." — Secretary of Defense, Donald Rumsfeld answering a question during a Department of Defense news briefing in 2002. Rumsfeld attributed the key phrase, "unknown unknowns" to William Graham, the Director of the White House Office of Science and Technology Policy during Ronald Reagan's administration.

|

In August of 2015, the Smithsonian ran an article reporting: "According to work presented today in Science, fewer than half of 100 studies published in 2008 in three top psychology journals could be replicated successfully. The international effort included 270 scientists who re-ran other people's studies as part of The Reproducibility Project: Psychology, led by Brian Nosek of the University of Virginia." A similar study published the same year ran with the title "Biomedical Science Studies Are Shockingly Hard to Reproduce", and one idiot — more charitably, "one well-meaning, technically-correct, but politically-naive and dangerously-incendiary loose cannon" — wrote, referring to the article in Science, "This project is not evidence that anything is broken. Rather, it's an example of science doing what science does. [...] It's impossible to be wrong in a final sense in science. You have to be temporarily wrong, perhaps many times, before you are ever right." Donald Trump would so agree. Why do scientists publish work that can't be reproduced, manipulate data to their advantage and fudge the statistics to make their case? Perhaps they are not maliciously trying to fool their colleagues. Perhaps we are constitutionally challenged when it comes to reporting our findings.

"The observer-expectancy effect (also called the experimenter-expectancy effect, expectancy bias, observer effect, or experimenter effect) is a form of reactivity in which a researcher's cognitive bias causes them to subconsciously influence the participants of an experiment. Confirmation bias can lead to the experimenter interpreting results incorrectly because of the tendency to look for information that conforms to their hypothesis, and overlook information that argues against it. It is a significant threat to a study's internal validity, and is therefore typically controlled using a double-blind experimental design." — https://en.wikipedia.org/wiki/Observer-expectancy_effect This is just one of many cognitive biases that cloud human decision making. Much of the original research such as the behavioral economics of Daniel Kahneman and Amos Taversky initially met with a great deal of skepticism, but now there a veritable cottage industry of psychologists and behavioral scientists coming up with new biases and aberrant behavior — possibly itself flawed by the very biases they seek to uncover:

|

Will the real theory please stand up?

Our understanding of gasses is perhaps best articulated in a series of theories — quantum mechanics (QED) → the kinetic theory of gasses → fluid dynamics — that operate at different spatial and temporal scales and invoke different assumptions, physical laws, language and mathematics. The notion of "emergence" is often maligned by scientists as a politically-correct alternative to admitting ignorance. In some disciplines and some theoretical accounts, however, emergence is a natural consequence of simplifying our understanding of complex phenomena to make them more tractable mathematically and computationally in order to facilitate analysis or prediction.

"Seeing how relatively easy it is to derive fluid mechanics from molecules, one can get the idea that deriving one theory from another is what emergence is all about. It’s not — emergence is about different theories speaking different languages, but offering compatible descriptions of the same underlying phenomena in their respective domains of applicability. If a macroscopic theory has a domain of applicability that is a subset of the domain of applicability of some microscopic theory, and both theories are consistent, then the microscopic theory can be said to entail the macroscopic one; but that’s often something we take for granted, not something that can explicitly be demonstrated. The ability to actually go through the steps to derive one theory from another is great when it happens, but not at all crucial to the idea." — from The Big Picture: On the Origins of Life, Meaning, and the Universe Itself by Sean Carroll.

There are many more neurons conveying information "down" the visual stream from higher association areas back toward the primary (striate) cortex than there are neurons conveying information "up" the visual pathways initiating in the retina, traveling along the optic tract, crossing over to the opposite hemisphere, moving through the mysterious pathways—it probably isn't "just" a relay station"—of the lateral geniculate, being processed (to some degree) in the striate cortex prior to splitting out into multiple (sub) streams and feeding into a dozen or more (additional) retinotopic maps before "combining" in the inferotemporal cortex and upstream association areas. Why? From Hubel and Wiesel [77, 76, 75] onward part of the answer has been "hierarchy" — if you haven't seen it, check out the "One Word: Plastics" scene in "The Graduate" starring Dustin Hoffman. But now we're more sophisticated, now the word is a phrase "Bayesian hierarchical predictive coding" and neuroscientists are scrambling to determine if it's "right" or "wrong" [70, 156, 44] (these papers are a very small sample of what is now a veritable cottage industry if academics churning our papers on predictive coding ... not all at once, fads come and go and then come again.

On the white board, draw simple control-theory view of systems neuroscience: → controller → physical plant → feedback. Now play around with labeling the components: the Atari game console, the physics engine, the game controller, the CRT or LCD screen, a person playing the game, etc. Now imagine ... a fly with a tiny bundle of wires protruding from its brain and leading to a two-photon (fluorescent) excitation (2PE) microscope ... or implanted with one of the miniature fluorescent microscopes developed in Mark Schnitzer's lab [84, 57, 13, 54]. Now go wild and imagine a fruit fly walking on a tiny ball tethered to a microscale fiber optic capable of limited flight ... Read the controversial, thought-provoking paper by Eric Jonas and Konrad Kording [86] entitled Could a neuroscientist understand a microprocessor?.

March 31, 2017

Email exchange with Grace Hunyh in Ed Boyden's Lab regarding my presentation at MIT last week: